An Open-source Benchmark of Deep Learning Models for Audio-visual Apparent and Self-reported Personality Recognition

Introduction

This is the official code repository for the paper An Open-source Benchmark of Deep Learning Models for Audio-visual Apparent and Self-reported Personality Recognition (https://arxiv.org/abs/2210.09138).

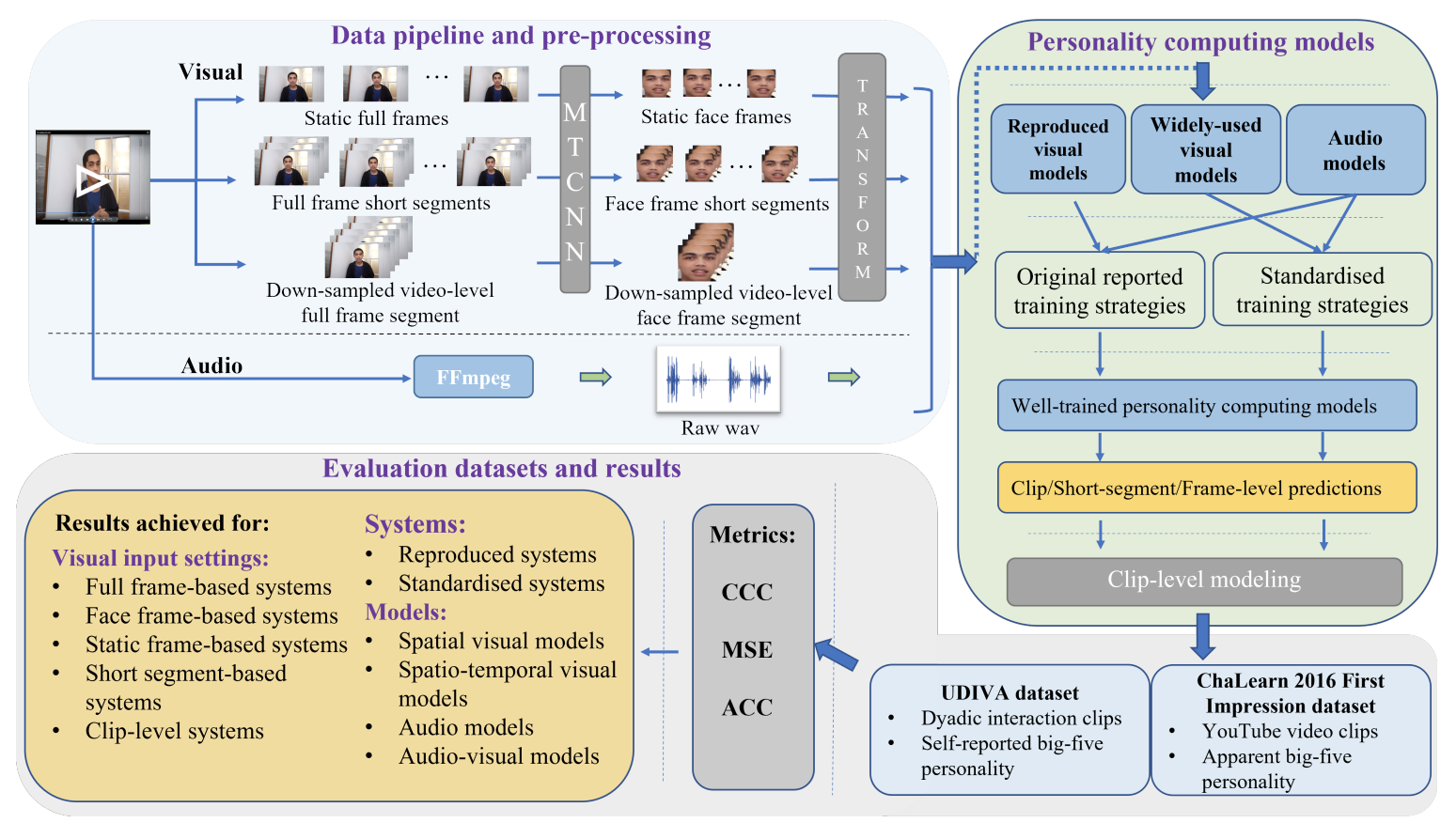

In this project, seven visual models, six audio models and five audio-visual models have been reproduced and evaluated. In addition, seven widely used visual deep learning models that had not previously been applied to video-based personality computing are included in the benchmark. A detailed description can be found in our paper.

All benchmarked models are evaluated on two datasets: the ChaLearn First Impression dataset and the ChaLearn UDIVA self-reported personality dataset.

This project is under active development. Documentation, examples, and tutorials will be added progressively.

Installation

Set up the project: you can use Conda, virtualenv, or pipenv to create a virtual environment in which to run this program.

# create and activate a virtual environment

virtualenv -p python38 venv

source venv/bin/activate

Installing from PyPI

pip install deep_personality

Installing from GitHub

# clone current repo

git clone DeepPersonality

cd DeepPersonality

# install required packages and dependencies

pip install -r requirements.txt

Datasets

The benchmark uses two datasets: ChaLearn First Impression and UDIVA.

- The former contains 10,000 video clips from 2,764 YouTube users for apparent personality (impression) recognition; each clip lasts about 15 seconds at 30 fps.

- The latter, for self-reported personality recognition, contains 188 dyadic interaction video clips recorded between 147 voluntary participants, totalling 90.5 hours of recordings. Every clip comprises two audiovisual files, each recording a single participant's behaviour.

- Each video in both datasets is labelled with the Big-Five personality traits.
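As a rough illustration of the scale described above, the sketch below computes the approximate number of frames per First Impression clip from the stated duration and frame rate, and shows one possible way to hold a Big-Five label. The `BigFiveLabel` class and its field layout are assumptions made for illustration, not the repository's data format.

```python
from dataclasses import astuple, dataclass
from typing import List

CLIP_SECONDS = 15    # approximate clip length stated above
FPS = 30             # frame rate stated above
NUM_CLIPS = 10_000   # clips in the First Impression dataset


@dataclass(frozen=True)
class BigFiveLabel:
    """Hypothetical container for one video's Big-Five trait scores."""

    openness: float
    conscientiousness: float
    extraversion: float
    agreeableness: float
    neuroticism: float

    def as_vector(self) -> List[float]:
        # Fixed trait order, convenient as a regression target.
        return list(astuple(self))


# Approximate frame counts implied by the numbers above.
frames_per_clip = CLIP_SECONDS * FPS
total_frames = frames_per_clip * NUM_CLIPS
```

Because clips last "about" 15 seconds, the real per-clip frame count will vary slightly around this estimate.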

To meet the requirements of different models and experiments, we extract the raw audio file and all frames from each video, and then extract face images from each full frame; we refer to these as face frames.
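The first two extraction steps can be sketched with ffmpeg. This is a minimal sketch that assumes ffmpeg is the tool used, which the text above does not state; the function names and output layout are hypothetical, and the repository's own scripts may work differently. The functions only build the commands, so they can be inspected without ffmpeg installed.

```python
from pathlib import Path
from typing import List


def audio_extract_cmd(video: Path, out_dir: Path) -> List[str]:
    """Build an ffmpeg command that writes the audio track as a WAV file."""
    wav_path = out_dir / f"{video.stem}.wav"
    # -y overwrites an existing output file; -vn drops the video stream.
    return ["ffmpeg", "-y", "-i", str(video), "-vn", str(wav_path)]


def frame_extract_cmd(video: Path, out_dir: Path) -> List[str]:
    """Build an ffmpeg command that writes the frames as numbered JPEGs."""
    # One sub-directory per video (hypothetical layout); it must exist
    # before the command is run, since ffmpeg does not create it.
    pattern = out_dir / video.stem / "frame_%05d.jpg"
    return ["ffmpeg", "-y", "-i", str(video), str(pattern)]


video = Path("videos") / "clip.mp4"
audio_cmd = audio_extract_cmd(video, Path("audio"))
frame_cmd = frame_extract_cmd(video, Path("frames"))
```

Passing either list to `subprocess.run(cmd, check=True)` would execute it, provided ffmpeg is installed and the output directories exist. Face extraction is left out of the sketch because it depends on the choice of face detector.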

For a quick start and demonstration, we provide a tiny ChaLearn 2016 dataset containing 100 videos: 60 for training, 20 for validation and 20 for testing. The processing methods are described in dataset preparation.

For your convenience, we also provide the processed face-frame dataset for ChaLearn 2016. Because that dataset is publicly available, we can release our processed data to the community.
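A 60/20/20 partition like the one above can be produced with a seeded shuffle. How the tiny dataset was actually split is not documented here, so the function below, including its name, the seed and the video identifiers, is an illustrative assumption only.

```python
import random
from typing import Dict, List, Sequence


def split_videos(
    video_ids: Sequence[str],
    n_train: int = 60,
    n_valid: int = 20,
    n_test: int = 20,
    seed: int = 0,
) -> Dict[str, List[str]]:
    """Shuffle video ids reproducibly and cut them into three subsets."""
    if n_train + n_valid + n_test != len(video_ids):
        raise ValueError("split sizes must add up to the number of videos")
    ids = sorted(video_ids)            # fixed order before shuffling
    random.Random(seed).shuffle(ids)   # local RNG, global state untouched
    return {
        "train": ids[:n_train],
        "valid": ids[n_train:n_train + n_valid],
        "test": ids[n_train + n_valid:],
    }


# Hypothetical ids standing in for the 100 videos of the tiny dataset.
splits = split_videos([f"video_{i:03d}" for i in range(100)])
```

Sorting before shuffling makes the result independent of the order in which the ids were listed, so the same seed always yields the same split.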

Experiments

Reproducing reported experiments

Experiments are built from configuration files. After setting up the environment and preparing the required data, you can get started quickly with the following command:

# cd DeepPersonality # top directory

script/run_exp.py --config path/to/exp_config.yaml

For a quick start with the tiny ChaLearn 2016 dataset, if you prepared the data following the instructions in the section above, the following command launches an experiment for the bimodal-resnet18 model.

# cd DeepPersonality # top directory

script/run_exp.py --config config/demo/bimodal_resnet18.yaml

Detailed descriptions of the arguments are given in the command line interface file.
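To illustrate the general build-from-config idea, the sketch below parses a `--config` argument and overlays an experiment's settings on a set of defaults. Only the `--config` flag comes from the commands above; the function names, the config keys and the default values are hypothetical, and the actual `run_exp.py` may be organized quite differently. The experiment settings are written as a plain dictionary, standing in for what a YAML loader would return.

```python
import argparse
from typing import Any, Dict, List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the single --config option used in the commands above."""
    parser = argparse.ArgumentParser(description="Run one experiment")
    parser.add_argument("--config", required=True,
                        help="path to the experiment YAML file")
    return parser.parse_args(argv)


def merge_config(defaults: Dict[str, Any],
                 overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively overlay experiment settings on top of default settings."""
    merged = dict(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = value
    return merged


args = parse_args(["--config", "config/demo/bimodal_resnet18.yaml"])

# Hypothetical defaults and experiment settings (not the repo's real keys).
defaults = {"model": {"name": "resnet"}, "train": {"epochs": 10, "lr": 0.001}}
experiment = {"model": {"name": "bimodal_resnet18"}, "train": {"epochs": 2}}
config = merge_config(defaults, experiment)
```

With this pattern an experiment file only needs to list the settings that differ from the defaults, which keeps individual config files short.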

For a quick-start demonstration, see the Colab Notebook: QuickStart

For experiments that start from raw video processing, see the Colab Notebook: StartFromDataProcessing

Developing new personality computing models

We use config-pipeline files and a registration mechanism to organize our experiments. If you want to add your own models or algorithms to this program, please refer to the Colab Notebook TrainYourModel
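A registration mechanism of this kind is commonly implemented as a name-to-class lookup filled in by a decorator. The sketch below shows that general pattern under stated assumptions: `Registry`, `MODELS`, `MeanTraitBaseline` and the `name` config key are all hypothetical and are not the repository's actual API, which is described in the TrainYourModel notebook.

```python
from typing import Any, Callable, Dict, List, Optional


class Registry:
    """Minimal name-to-constructor registry (illustrative only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._entries: Dict[str, Callable[..., Any]] = {}

    def register(self, name: Optional[str] = None) -> Callable:
        def decorator(obj: Callable[..., Any]) -> Callable[..., Any]:
            key = name or obj.__name__
            if key in self._entries:
                raise KeyError(f"{key!r} already registered in {self.name}")
            self._entries[key] = obj
            return obj
        return decorator

    def build(self, cfg: Dict[str, Any]) -> Any:
        """Instantiate the entry named by cfg['name'] with the other keys."""
        kwargs = dict(cfg)
        key = kwargs.pop("name")
        if key not in self._entries:
            raise KeyError(f"{key!r} is not registered in {self.name}")
        return self._entries[key](**kwargs)


MODELS = Registry("models")


@MODELS.register()
class MeanTraitBaseline:
    """Toy model that predicts a constant score for every trait."""

    def __init__(self, num_traits: int = 5) -> None:
        self.num_traits = num_traits

    def predict(self, batch_size: int) -> List[List[float]]:
        return [[0.5] * self.num_traits for _ in range(batch_size)]


model = MODELS.build({"name": "MeanTraitBaseline", "num_traits": 5})
```

The appeal of this design is that a new model becomes selectable from a config file just by decorating its class, without editing the experiment runner.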

Models

On ChaLearn 2016 dataset

| Model | Modal | ChaLearn2016 cfgs | ChaLearn2016 weights |
|---|---|---|---|
| DAN | visual | cfg | weight |
| CAM-DAN+ | visual | cfg | weight |
| ResNet | visual | cfg | weight |
| HRNet | visual | cfg-frame/cfg-face | weight-frame/weight-face |
| SENet | visual | cfg-frame/cfg-face | weight |
| 3D-ResNet | visual | cfg-frame/cfg-face | weight |
| Slow-Fast | visual | cfg-frame/cfg-face | weight |
| TPN | visual | cfg-frame/cfg-face | weight |
| Swin-Transformer | visual | cfg-frame/cfg-face | weight |
| VAT | visual | cfg-frame/cfg-face | weight |
| Interpret Audio CNN | audio | cfg | weight |
| Bi-modal CNN-LSTM | audiovisual | cfg | weight |
| Bi-modal ResNet | audiovisual | cfg | weight |
| PersEmoN | audiovisual | cfg | weight |
| CRNet | audiovisual | cfg | weight |
| Amb-Fac | audiovisual | cfg-frame/cfg-face | weight |

On ChaLearn 2021 dataset

Papers

From which the models are reproduced

- Deep bimodal regression of apparent personality traits from short video sequences

- Bi-modal first impressions recognition using temporally ordered deep audio and stochastic visual features

- Deep impression: Audiovisual deep residual networks for multimodal apparent personality trait recognition

- Cr-net: A deep classification-regression network for multimodal apparent personality analysis

- Interpreting cnn models for apparent personality trait regression

- On the use of interpretable cnn for personality trait recognition from audio

- Persemon: a deep network for joint analysis of apparent personality, emotion and their relationship

- A multi-modal personality prediction system

- Squeeze-and-excitation networks

- Deep high-resolution representation learning for visual recognition

- Swin transformer: Hierarchical vision transformer using shifted windows

- Can spatiotemporal 3d cnns retrace the history of 2d cnns and imagenet

- Slowfast networks for video recognition

- Temporal pyramid network for action recognition

- Video action transformer network

Citation

If you use our code for a publication, please kindly cite it as:

@article{liao2022open,
  title={An Open-source Benchmark of Deep Learning Models for Audio-visual Apparent and Self-reported Personality Recognition},
  author={Liao, Rongfan and Song, Siyang and Gunes, Hatice},
  journal={arXiv preprint arXiv:2210.09138},
  year={2022}
}

History

- 2022/10/17 - Paper submitted and project made publicly available.

To Be Updated

- Test Pip install

- Description of adding new models

- Model zoo

- Notebook tutorials