PyTorch implementation of the Temporal Fusion Transformer (TFT) for time series forecasting. This implementation is adapted from the pytorch-forecasting repository and simplified for developers outside the computer science field.

We recommend installing Miniconda to manage the Python environment, but this repo also works well with alternatives such as venv.

- Install miniconda by following these instructions

- Create a conda environment

conda create --name your_env_name python=3.10

- Activate conda environment

conda activate your_env_name

- Install the required packages

pip install -r requirements.txt

Either run the following command to download the data or directly visit the provided URL.

NOTE: The data must be saved in a folder named data/.

wget https://archive.ics.uci.edu/ml/machine-learning-databases/00321/LD2011_2014.txt.zip

unzip LD2011_2014.txt.zip

To start training, run:

python runner.py
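Before training, it can help to check that the unzipped file parses as expected. The snippet below is a minimal sketch, not the logic in data_preprocessor.py: it assumes the file is semicolon-separated, uses a comma as the decimal mark, and stores timestamps in the first column. The function name `load_electricity` and the in-memory sample are hypothetical and only illustrate the layout.

```python
import io

import pandas as pd


def load_electricity(source):
    """Read a file (or file-like object) laid out like LD2011_2014.txt.

    Assumed layout: ';' as field separator, ',' as decimal mark,
    timestamps in the first column.
    """
    return pd.read_csv(
        source,
        sep=";",           # fields are separated by semicolons
        decimal=",",       # "2,5" is parsed as 2.5
        index_col=0,       # first column holds the timestamps
        parse_dates=True,  # try to parse the index as datetimes
    )


# Tiny made-up sample in the assumed layout (not real data).
sample = io.StringIO(
    '"";"MT_001";"MT_002"\n'
    '"2011-01-01 00:15:00";0;2,5\n'
    '"2011-01-01 00:30:00";1,25;3\n'
)
df = load_electricity(sample)
print(df)
```

For the real file, pass its path instead of the sample, e.g. `load_electricity("data/LD2011_2014.txt")`, assuming it was unzipped into data/.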

├── runner.py # Run the training step

├── config # User-specified data variables and hyperparameters for TFT

├── data_preprocessor.py # Preprocess data for TFT format

├── metric.py # Loss function for training e.g., Quantile loss

├── model.py # All model architectures required for TFT

├── tft.py # TFT model

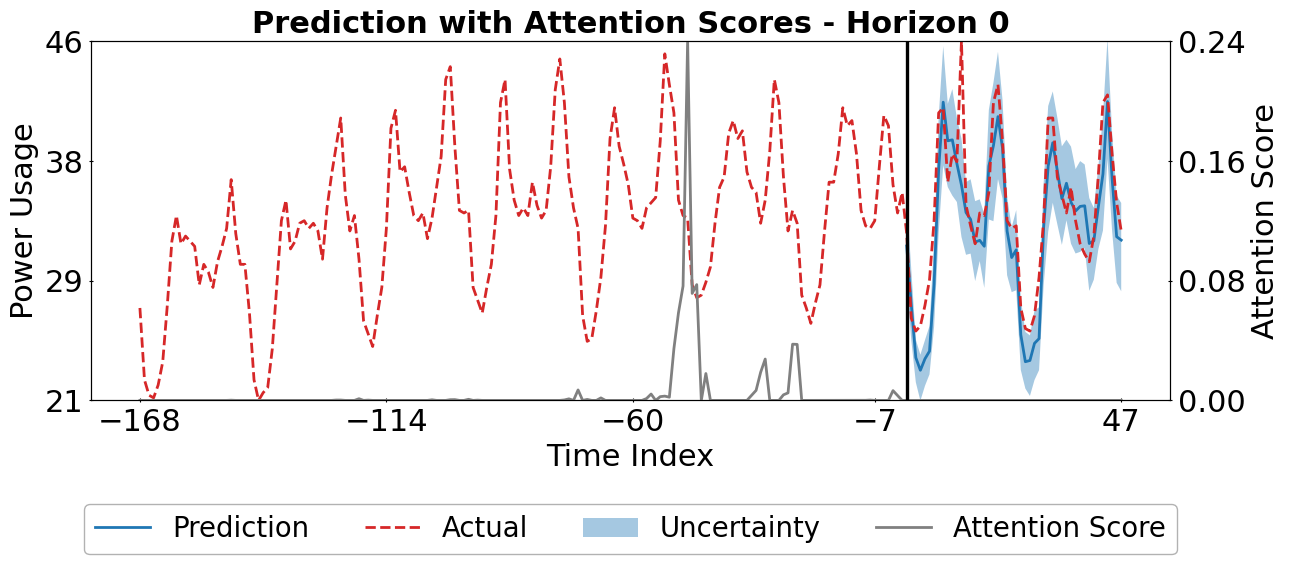

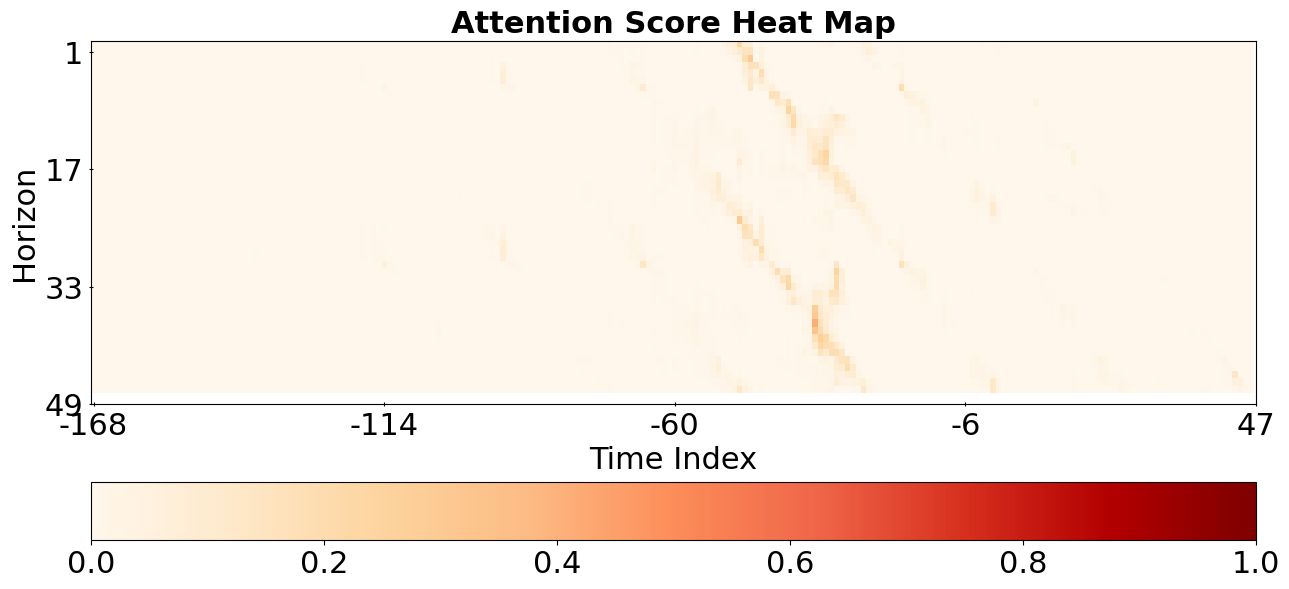

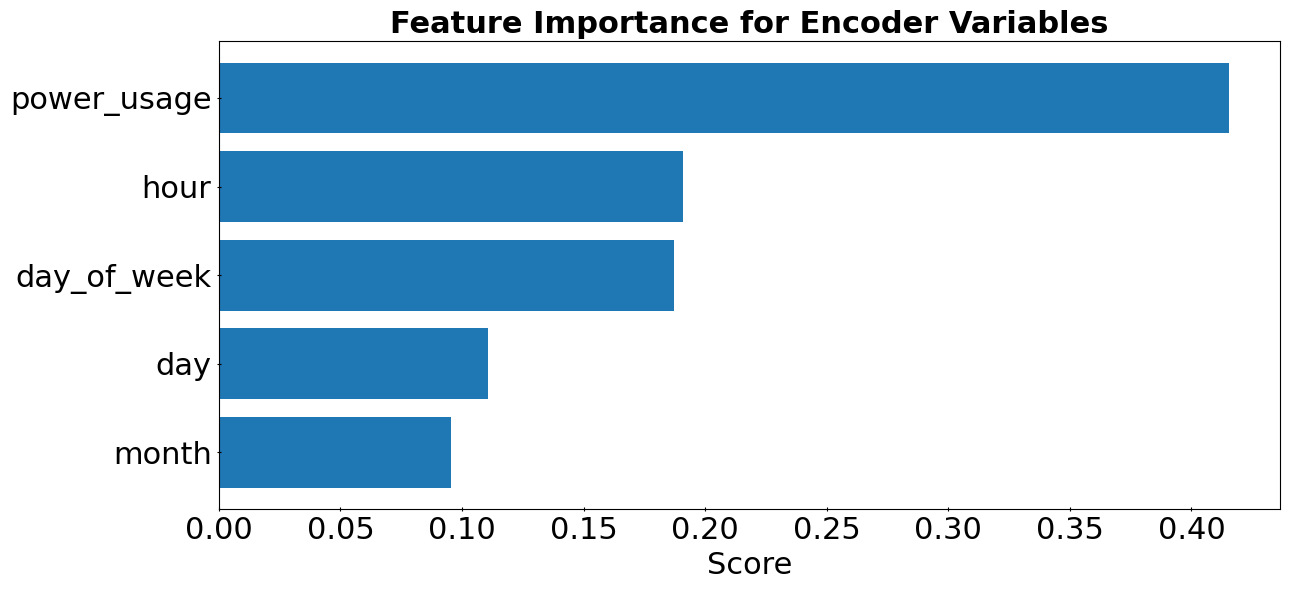

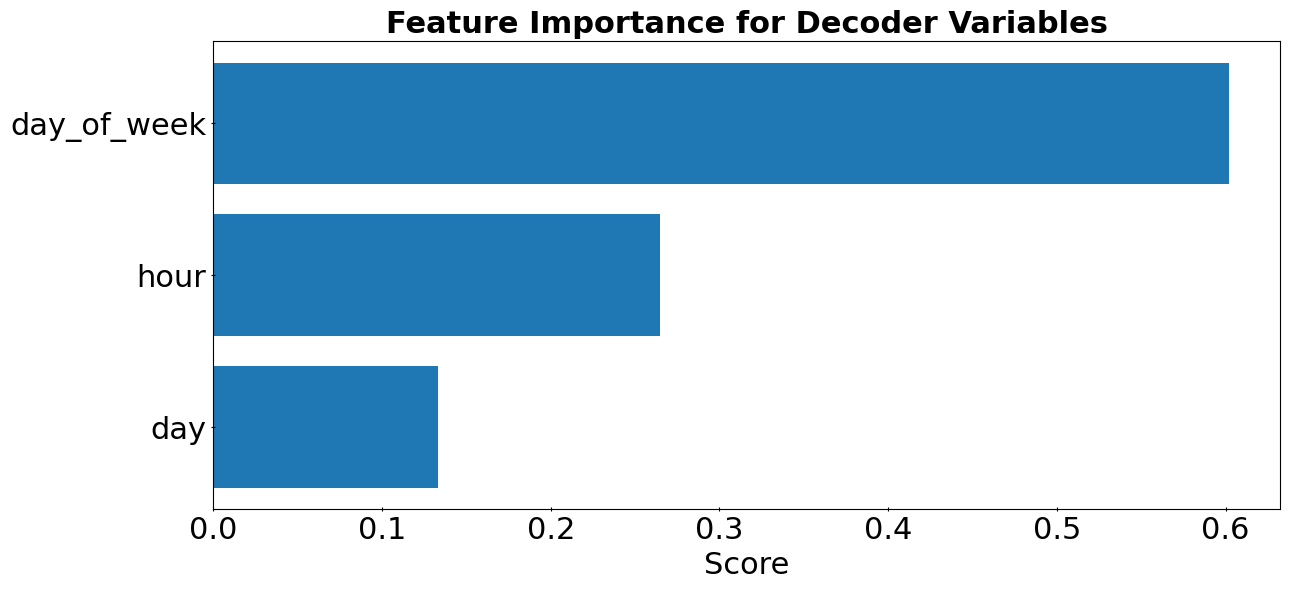

├── tft_interp.py # Output interpretation and visual tools
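The quantile loss mentioned for metric.py can be illustrated with a short example. This is a minimal sketch of the standard quantile (pinball) loss and may differ from the actual code in metric.py, for instance in how the loss is reduced or scaled. The function name, tensor shapes, and quantile values are assumptions chosen for illustration.

```python
import torch


def quantile_loss(y_pred, y_true, quantiles):
    """Pinball loss averaged over batch, horizon, and quantiles.

    Assumed shapes (hypothetical, for illustration):
      y_pred: (batch, horizon, n_quantiles)
      y_true: (batch, horizon)
    """
    q = torch.tensor(quantiles, dtype=y_pred.dtype, device=y_pred.device)
    # Positive error means the prediction was too low.
    errors = y_true.unsqueeze(-1) - y_pred
    # Under-prediction is weighted by q, over-prediction by (1 - q).
    loss = torch.maximum(q * errors, (q - 1) * errors)
    return loss.mean()


# One sample, one time step, three quantile predictions.
y_true = torch.tensor([[1.0]])
y_pred = torch.tensor([[[0.0, 1.0, 2.0]]])
loss = quantile_loss(y_pred, y_true, [0.1, 0.5, 0.9])
print(loss.item())
```

With a low quantile such as 0.1, predicting too high is penalised more than predicting too low, which pushes that output toward the lower end of the distribution; the reverse holds for 0.9.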

This implementation is released under the MIT license.

THIS IS AN OPEN SOURCE SOFTWARE FOR RESEARCH PURPOSES ONLY. THIS IS NOT A PRODUCT. NO WARRANTY EXPRESSED OR IMPLIED.

This code is adapted from the repository pytorch-forecasting. In addition, we would like to acknowledge and give credit to UC Irvine Machine Learning Repository for providing the sample dataset that we utilized for this project.