Spamify

Machine Learning Project

Analysis of Spam Detection Machine Learning Algorithms

Leo Wanxiang Cai, Leon Long

Introduction

Email is one of the most common means of communication in the digital era: 269 billion emails were sent and received per day in 2017 (Statista, 2018). However, spam has become a major concern because of its ubiquity and its potential negative impact on individuals and society. According to Statista, 59% of worldwide email traffic in September 2017 was spam. With enormous amounts of email data available for training and abundant computational power, machine learning has become a popular and effective way to identify spam. This report discusses the application of three machine learning algorithms (Naive Bayes, Decision Tree, and k-Nearest Neighbors) to spam classification. It compares the performance of the three algorithms and evaluates the strengths and weaknesses of each.

Data

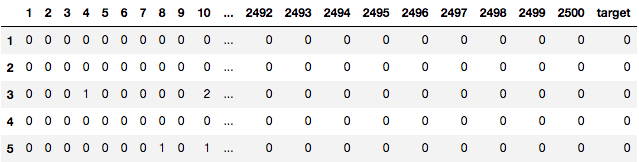

For this project, we used a dataset from Stanford OpenClassroom (the Ling-Spam dataset). The dataset contains 960 real email messages, and we applied a 3:7 train-test split. Common words such as “and”, “the”, and “of” (stop words), which might bias our models, were removed. Each remaining unique word was assigned a word ID and treated as a separate feature, giving 2,500 features in total, plus a target column that indicates whether an email is spam (1) or not spam (0).
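The preprocessing described above (stop-word removal, one ID per word, word counts as features) can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the stop-word list, the toy emails, and the helper names `tokenize`, `build_vocabulary`, and `to_feature_vector` are hypothetical, and capping the vocabulary at the most frequent words is an assumption about how the 2,500 features were chosen.

```python
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"and", "the", "of", "a", "to"}

def tokenize(text):
    """Lowercase, strip surrounding punctuation, and drop stop words."""
    words = (w.strip(".,!?;:\"'()").lower() for w in text.split())
    return [w for w in words if w and w not in STOP_WORDS]

def build_vocabulary(docs, max_words=2500):
    """Assign an ID (column index) to each of the most frequent words.

    Assumption: the vocabulary is capped at the `max_words` most frequent words.
    """
    counts = Counter(w for doc in docs for w in tokenize(doc))
    return {w: i for i, (w, _) in enumerate(counts.most_common(max_words))}

def to_feature_vector(doc, vocab):
    """Map a document to a row of word counts, one column per word ID."""
    row = [0] * len(vocab)
    for w in tokenize(doc):
        if w in vocab:
            row[vocab[w]] += 1
    return row

# Hypothetical toy emails; 1 = spam, 0 = non-spam.
emails = ["Win money now, win big!", "Meeting notes and the agenda"]
labels = [1, 0]
vocab = build_vocabulary(emails)
X = [to_feature_vector(e, vocab) for e in emails]
```

Each row of `X` plays the role of one email in the dataframe, and `labels` is the target column.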

Algorithms

K-Nearest Neighbors

K-Nearest Neighbors is a lazy learning algorithm: it builds no explicit model during training and defers all computation to prediction time. Its key principle is to find the training data points nearest to a new data point and assign the new point the majority label among those neighbors. The algorithm is also simple to implement and flexible with respect to feature choices. It is a potential solution to email spam detection because it classifies an email based on a similarity measure, such as a distance metric, and many spam emails may share similar features. The algorithm therefore decides whether an email is spam from the distances between the email's features and those of the training data points. The default number of neighbors in the classifier is 5, but after testing different values on our data we found that a single neighbor (k = 1) gave the best performance. A large k value may cause underfitting and high bias in our model, which leads to a low accuracy score. Overall, the algorithm performs fairly well.
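The classification rule and the search for a good k described above can be sketched from scratch. This is a minimal sketch, assuming Euclidean distance and a hypothetical four-email toy dataset; the helper names `knn_predict` and `accuracy_for_k` are illustrative and do not come from the report.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=1):
    """Label `query` by majority vote among its k nearest training points."""
    # Lazy learning: nothing is fitted in advance; every prediction scans the training set.
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    # Ties are broken in favor of the label counted first, i.e. the closer neighbor's.
    return votes.most_common(1)[0][0]

def accuracy_for_k(train_X, train_y, val_X, val_y, k):
    """Fraction of validation emails classified correctly with k neighbors."""
    preds = [knn_predict(train_X, train_y, q, k) for q in val_X]
    return sum(p == y for p, y in zip(preds, val_y)) / len(val_y)

# Hypothetical toy word-count vectors (3 words); 1 = spam, 0 = non-spam.
train_X = [[3, 0, 1], [2, 1, 0], [0, 2, 3], [0, 3, 2]]
train_y = [1, 1, 0, 0]
val_X = [[2, 0, 1], [0, 2, 2]]
val_y = [1, 0]

# Pick k by validation accuracy, mirroring the "test several values of k" step.
best_k = max((1, 3), key=lambda k: accuracy_for_k(train_X, train_y, val_X, val_y, k))
```

On this toy data k = 1 and k = 3 tie, and `max` keeps the first candidate. On real data one would try a wider range of k and score each on held-out data or with cross-validation.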

Naive Bayes

Naive Bayes is a family of algorithms based on Bayes' theorem, with strong independence assumptions between the features. It first breaks a document down into tokens and then calculates the probability that the document belongs to each class based on the frequency of each token. The algorithm uses a posterior probability model: it calculates the conditional probability of an event after the relevant evidence or background is taken into account. This makes it well suited to supervised machine learning tasks such as document classification, since well-labeled document datasets for training are relatively easy to obtain. In fact, Naive Bayes has a long history in text classification and is widely regarded as one of the best algorithms for spam detection. Its effectiveness rests on a core assumption of the algorithm: given the class, the value of a particular feature is independent of the value of any other feature, which is a prerequisite for the statistical model behind it. In spam detection, each word can be treated as a feature that is independent of the other words; this is a simplification, since words in natural language are correlated, but it works well in practice. Because the parameters are estimated by maximum likelihood, which amounts to counting word frequencies, training runs in linear time, making the algorithm highly scalable to very large email datasets.
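The counting-based training and the independence assumption described above can be made concrete with a small multinomial Naive Bayes. The classifier predicts the class c that maximizes log P(c) + Σ_w x_w · log P(w | c), where x_w is the count of word w in the email. This is a minimal sketch, not the authors' implementation: Laplace (add-alpha) smoothing, the toy data, and the names `train_multinomial_nb` and `predict_nb` are assumptions added for illustration.

```python
import math

def train_multinomial_nb(X, y, alpha=1.0):
    """Estimate log priors and log word likelihoods by (smoothed) counting.

    One pass over the word counts, so training time is linear in the data size.
    Assumption: add-alpha (Laplace) smoothing, so unseen words never get probability 0.
    """
    n_features = len(X[0])
    log_prior, log_lik = {}, {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        log_prior[c] = math.log(len(rows) / len(X))
        # Total count of each word across all emails of class c.
        word_counts = [sum(col) for col in zip(*rows)]
        total = sum(word_counts)
        log_lik[c] = [math.log((wc + alpha) / (total + alpha * n_features))
                      for wc in word_counts]
    return log_prior, log_lik

def predict_nb(model, x):
    """Return the class with the highest posterior score for word-count vector x."""
    log_prior, log_lik = model
    # Independence assumption: per-word log-likelihoods simply add up.
    scores = {c: log_prior[c] + sum(n * lp for n, lp in zip(x, log_lik[c]))
              for c in log_prior}
    return max(scores, key=scores.get)

# Hypothetical toy word-count vectors (3 words); 1 = spam, 0 = non-spam.
X = [[2, 1, 0], [3, 0, 0], [0, 0, 2], [0, 1, 3]]
y = [1, 1, 0, 0]
model = train_multinomial_nb(X, y)
```

Working in log space avoids numerical underflow when multiplying thousands of small word probabilities, which matters with a 2,500-word vocabulary.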

Decision Tree

Decision Tree is another algorithm that can be used for classification. A tree is constructed recursively by choosing, at each node, the split with the largest information gain. The branches are labeled with the values of the feature in the parent node. This algorithm could work well for spam detection because the feature values, which are word frequencies, are discrete numbers. Moreover, unlike Naive Bayes, Decision Tree implicitly performs feature selection by choosing the split with the largest information gain at each node: the features tested at the top few nodes are the most informative variables in the dataset. In other words, the tree identifies the words that best separate spam from non-spam. This can reduce running time, considering that we have 2,500 features in total. However, without smoothing, a depth limit, or a larger amount of data (2,500 words are not enough), the model might overfit.
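The split criterion described above can be sketched as entropy, information gain for one split, and a search over all features for the best split. This is a minimal sketch under stated assumptions: it uses binary `count <= threshold` splits (one common way to handle numeric features; the report does not say which splitting scheme was used), and the four-email dataset and function names are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def information_gain(column, labels, threshold):
    """Gain from splitting on `column <= threshold` for one word-count feature."""
    left = [l for v, l in zip(column, labels) if v <= threshold]
    right = [l for v, l in zip(column, labels) if v > threshold]
    if not left or not right:
        return 0.0
    n = len(labels)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(labels) - weighted

def best_split(X, y):
    """Return (gain, feature index, threshold) with the largest information gain."""
    best = (0.0, None, None)
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        # Candidate thresholds: every observed value except the largest.
        for t in sorted(set(column))[:-1]:
            gain = information_gain(column, y, t)
            if gain > best[0]:
                best = (gain, j, t)
    return best

# Hypothetical toy data: word 0 separates the classes perfectly, word 1 does not.
X = [[0, 1], [0, 2], [3, 1], [2, 2]]
y = [0, 0, 1, 1]
root = best_split(X, y)
```

Here the root split uses word 0, the "top classifier word"; a full tree would apply `best_split` recursively to each side until a stopping rule such as a depth limit is reached.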

Performance Summary

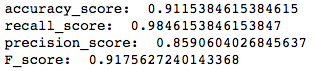

K-Nearest Neighbors

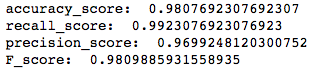

Naive Bayes

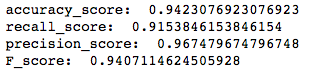

Decision Tree

Comparison

Accuracy, Recall, and Precision

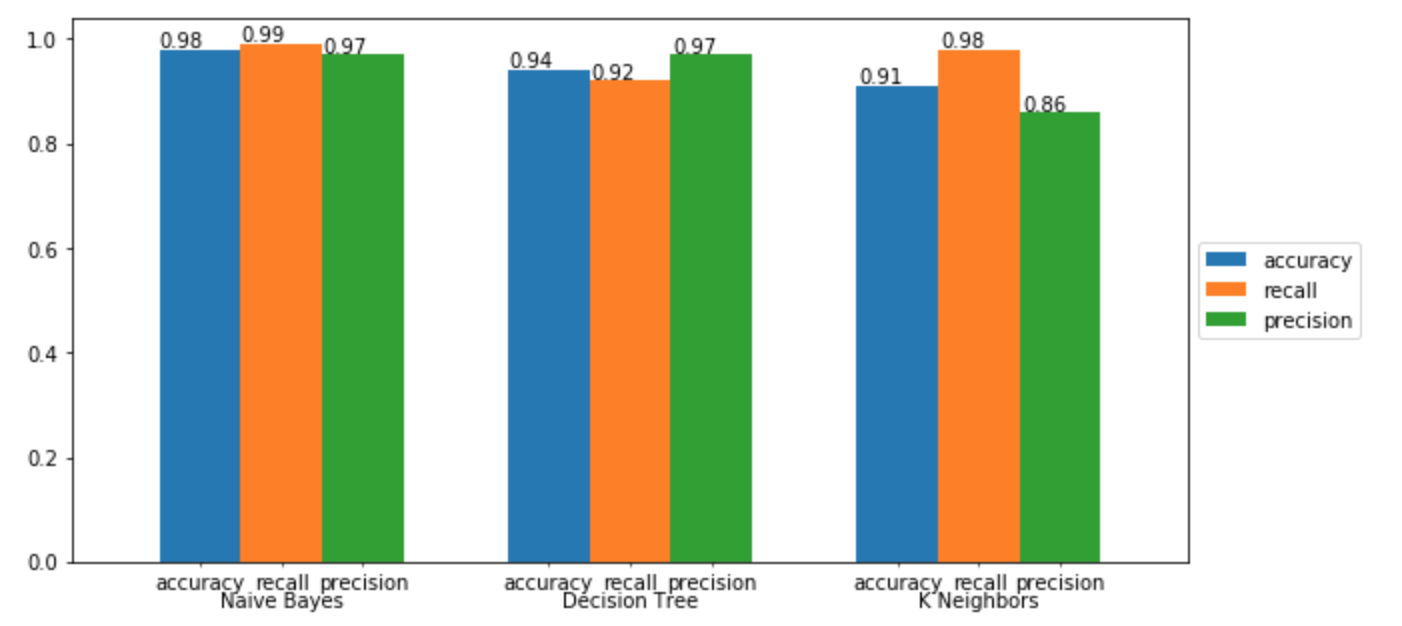

Accuracy is the most intuitive performance measurement in data science. Naive Bayes has an accuracy score of 0.98, the highest of the three algorithms. The accuracy score of Decision Tree is only 0.94, and for K Neighbors it drops to 0.91, making K Neighbors the least accurate algorithm for spam detection.

Accuracy alone is not enough to evaluate the three algorithms, so we also took precision and recall into account to assess their performance more comprehensively. Precision is the proportion of predicted positive observations (emails flagged as spam) that are actually positive. Naive Bayes and Decision Tree have the same precision score, 0.97, while K Neighbors has the lowest, 0.86. Recall is the proportion of actual positives (spam emails) that the model correctly identifies, that is, the ratio of true positives to all actual positives. Naive Bayes again has the highest recall score, 0.99. Unexpectedly, K Neighbors also has a high recall score, 0.98.

We believe that Naive Bayes performs best of the three algorithms and would be the best solution for spam detection. Naive Bayes works well with high-dimensional data, and spam detection approximately satisfies its independence assumption: each word in the vocabulary is treated as an independent feature of the model. K Neighbors performs worse at spam detection because it does not work well in high dimensions and is highly sensitive to local structure in the data.
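The three metrics discussed above can be computed directly from confusion-matrix counts. A minimal sketch with made-up labels (these are not the report's results); the function names are hypothetical, and returning 0.0 when a denominator is zero is an arbitrary convention chosen here.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN, treating `positive` (spam) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn

def accuracy(tp, fp, fn, tn):
    """Fraction of all emails classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)

def precision(tp, fp):
    """Of the emails flagged as spam, the fraction that really are spam."""
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    """Of the emails that really are spam, the fraction that were flagged."""
    return tp / (tp + fn) if tp + fn else 0.0

# Hypothetical labels for eight emails; 1 = spam, 0 = non-spam.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 1]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
```

With these labels, accuracy is 5/8, precision is 3/5, and recall is 3/4. This shows how a model that flags spam aggressively, as K Neighbors appears to, can combine high recall with lower precision.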

Running Time

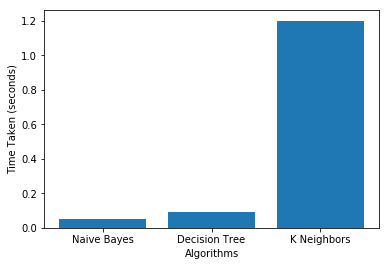

Computational efficiency is also a crucial metric for assessing machine learning algorithms, since good models are usually trained on very large datasets. We measured the running time of the three algorithms to evaluate their computational cost. Although the dataset is relatively small, the results clearly show differences in running time among the algorithms. In total, we used 960 emails, containing 2,500 unique words, for training and testing. Naive Bayes took about 0.05 seconds and Decision Tree about 0.09 seconds, whereas K-Nearest Neighbors took about 1.2 seconds. We concluded that K-Nearest Neighbors is the least computationally efficient of the three at spam detection. The mechanism of the algorithm explains this result: to classify each email, KNN computes its distance to every training email, so classifying m test emails against n training emails requires m × n distance calculations, each over all 2,500 features. When both sets grow with the dataset, this cost grows quadratically (O(n²)), so the gap will become increasingly noticeable as the dataset grows.
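The quadratic growth described above can be checked by counting distance computations in a brute-force 1-nearest-neighbor classifier. A minimal sketch, assuming randomly generated count vectors as a hypothetical stand-in for the email features and a fixed 30% test share; it counts operations rather than timing them, so it does not reproduce the running times reported above.

```python
import math
import random

def one_nn_predict(train_X, train_y, test_X):
    """Brute-force 1-NN that also counts how many distances it computes."""
    calls = 0
    preds = []
    for q in test_X:
        best_dist, best_label = math.inf, None
        for x, label in zip(train_X, train_y):
            d = math.dist(x, q)  # one distance over all features
            calls += 1
            if d < best_dist:
                best_dist, best_label = d, label
        preds.append(best_label)
    return preds, calls

def random_counts(n_rows, n_features, rng):
    """Hypothetical stand-in for word-count vectors (values 0 to 3)."""
    return [[rng.randint(0, 3) for _ in range(n_features)] for _ in range(n_rows)]

rng = random.Random(0)
distance_calls = []
for total in (100, 200, 400):
    n_test = total * 3 // 10  # hold the train/test proportion fixed
    train_X = random_counts(total - n_test, 20, rng)
    train_y = [rng.randint(0, 1) for _ in train_X]
    test_X = random_counts(n_test, 20, rng)
    _, calls = one_nn_predict(train_X, train_y, test_X)
    distance_calls.append(calls)

print(distance_calls)  # → [2100, 8400, 33600]
```

Each doubling of the dataset multiplies the number of distance computations by four. Naive Bayes, by contrast, touches each word count a constant number of times.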

Conclusion

After analyzing the working mechanisms and comparing different performance metrics of the three algorithms, we found that Naive Bayes was the best-performing algorithm for this spam detection problem, with an accuracy score of 0.98, a recall score of 0.99, and a precision score of 0.97. This result can be explained by the high dimensionality of the data and the approximately independent nature of the features: every word in the documents can be treated as independent. On top of that, Naive Bayes had the shortest running time, 0.05 seconds. Because its parameters are estimated by maximum likelihood, it can estimate the parameters needed for classification from a relatively small amount of training data. On the other hand, K-Nearest Neighbors showed its computational limitations through its relatively long running time. Also, 960 emails spread over 2,500 features might be too sparse for K-NN to be used most effectively. Finally, Decision Tree also performed very well in this analysis, benefiting from the discrete feature values and its implicit feature selection.

By evaluating and analyzing the performance metrics of the three algorithms in the context of spam detection, we have not only selected the best algorithm for this particular problem but, more importantly, learned that each algorithm performs differently across domains depending on its strengths and limitations. We have also practiced the processes and techniques for assessing algorithms and learned to make decisions accordingly. In the fast-moving world of AI and ML, technologies such as these algorithms change rapidly. It is the ability to truly understand a problem and choose the right technology to tackle it that makes these technologies as powerful as they are.

References

Statista (2018). Number of sent and received e-mails per day worldwide from 2017 to 2022 (in billions). Retrieved from: https://www.statista.com/statistics/456500/daily-number-of-e-mails-worldwide/

Stanford OpenClassroom. Ling-Spam Dataset, provided by Ion Androutsopoulos. Retrieved from: http://openclassroom.stanford.edu/MainFolder/DocumentPage.php?course=MachineLearning&doc=exercises/ex6/ex6.html