The goal of this application is to implement the PPO algorithm [paper] on the OpenAI BipedalWalker environment.

PPO: Episode 8 vs Episode 125 vs Episode 240

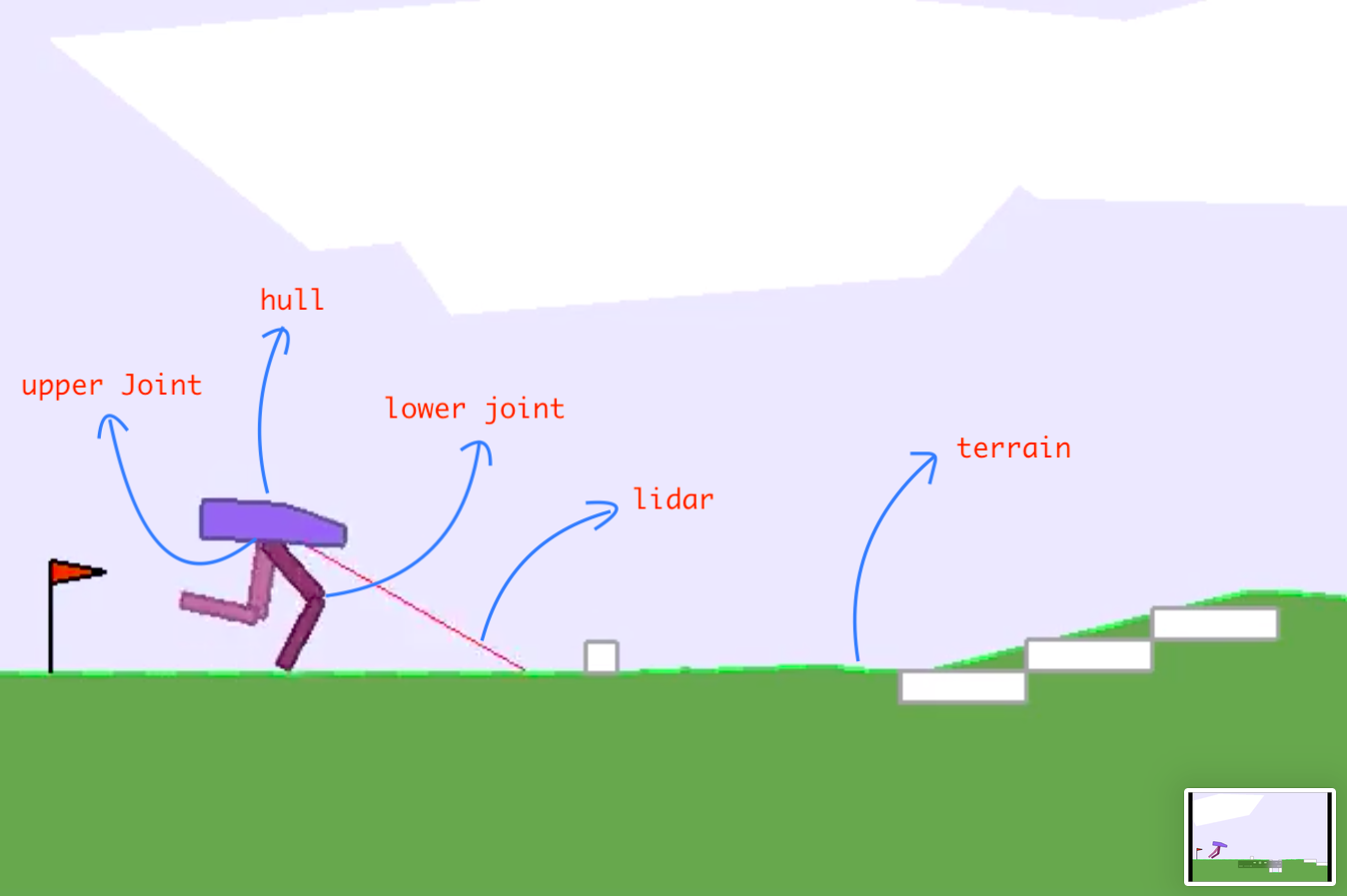

BipedalWalker is an OpenAI Box2D environment that models a simple 4-joint walker robot. The robot consists of a head (hull) and 4 joints that represent 2 legs. The normal version of BipedalWalker has slightly uneven terrain that is randomly generated. The robot moves by applying force to its 4 joints.

BipedalWalker Environment (image source)

State consists of hull angle speed, angular velocity, horizontal speed, vertical speed, position of joints and joints angular speed, legs contact with ground, and 10 lidar rangefinder measurements to help to deal with the hardcore version. There are no coordinates in the state vector. Lidar is less useful in the normal version, but it works.

Reward is given for moving forward, totaling 300+ points up to the far end. If the robot falls, it gets -100. Applying motor torque costs a small amount of points, so a more efficient agent will get a better score.

There are four continuous actions, each in the range from -1 to 1, which represent the force applied to the corresponding joint.

The episode ends when the robot reaches the end of the terrain or the episode length exceeds 500. The goal is reached when the algorithm achieves a mean score of 300 or higher over the last 100 episodes (games).

Proximal Policy Optimization is an advanced policy gradient method. It optimizes a parametrized policy with respect to the expected return (long-term cumulative reward) by gradient ascent, which in practice is implemented as gradient descent on the negated objective. The policy is parametrized by a neural network whose input is a 24x1 vector representing the current state and whose output is a 4x1 vector with the mean of each action. In this case, two separate neural networks were used: the Policy network described above, and a Critic network that represents the value function, whose estimates are used to judge how good the action chosen by the Policy NN was.

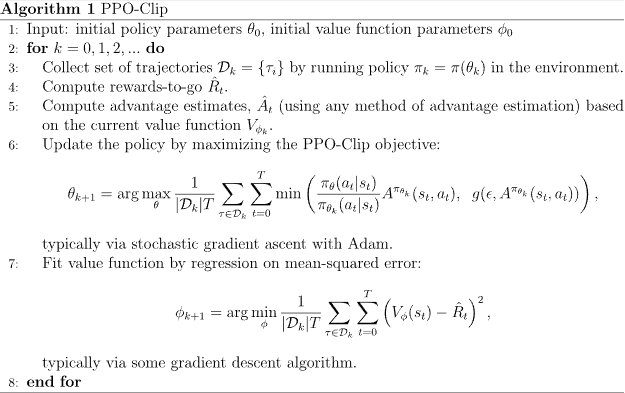

The PPO algorithm is an upgrade to basic Policy Gradient methods like REINFORCE, Actor-Critic and A2C. The first problem with basic PG algorithms is collapse in performance due to an incorrect step size. If the step size is too big, the policy changes too much, and if the new policy is bad we won't be able to recover. The second problem is sample inefficiency: basic PG methods take a single gradient step per batch of environment samples, although more than one step could be taken with the same data. PPO addresses both with a trust-region-style constraint that does not allow a step to become too big. The step size is determined by the difference between the new (current) policy and the old policy we used to collect samples. PPO suggests two ways to enforce this: a KL divergence penalty and a Clipped Objective. In this project we used the Clipped Objective, where we calculated the ratio between the new and old policy and clipped it to (1-ε, 1+ε). To be as pessimistic as possible, the minimum was taken between ratio * advantage and clipped ratio * advantage.
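The clipped objective described above can be sketched in a few lines. This is a minimal illustration in plain Python rather than the project's PyTorch code; the function name `clipped_surrogate_loss` and the default `eps=0.2` are assumptions made for the example, not values taken from this repository.

```python
import math

def clipped_surrogate_loss(new_log_probs, old_log_probs, advantages, eps=0.2):
    """Negated PPO clipped objective, averaged over samples.

    eps=0.2 is an assumed default, not a value from this project.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed from log-probs.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - eps, 1 + eps].
        clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
        # Pessimistic choice: the smaller of the two surrogate terms.
        total += min(ratio * adv, clipped * adv)
    # Negate so that minimizing the loss maximizes the objective.
    return -total / len(advantages)
```

With a positive advantage, a ratio above 1+ε gains nothing beyond the clipped value, so repeated gradient steps on the same batch stop pushing the policy further in that direction.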

Since BipedalWalker is a Continuous Control environment with multiple continuous actions, we can't use the NN to give us a probability for each action. Instead, the NN outputs four action means, and we add an additional PyTorch Parameter without input, which represents the logarithm of the standard deviation. Using the mean and std we can build a Normal distribution from which we sample the actions we want to take.
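As a rough sketch of this sampling step, the snippet below draws one action per joint from a Normal distribution defined by a mean and a log standard deviation, and computes the joint log-probability needed later for the policy ratio. It uses only the Python standard library so it runs without dependencies; the names `gaussian_log_prob` and `sample_action` are hypothetical and are not the project's actual functions.

```python
import math
import random

def gaussian_log_prob(x, mean, log_std):
    """Log-density of N(mean, exp(log_std)^2) evaluated at x."""
    var = math.exp(2.0 * log_std)
    return -0.5 * ((x - mean) ** 2 / var + 2.0 * log_std + math.log(2.0 * math.pi))

def sample_action(means, log_std, rng=random):
    """Sample independent actions and return (actions, summed log-probability)."""
    actions = [rng.gauss(m, math.exp(ls)) for m, ls in zip(means, log_std)]
    # Independent dimensions: the joint log-prob is the sum over joints.
    log_prob = sum(
        gaussian_log_prob(a, m, ls) for a, m, ls in zip(actions, means, log_std)
    )
    return actions, log_prob

# Usage: four joint means (stand-ins for the Policy NN output) and a shared log std.
actions, log_prob = sample_action([0.1, -0.2, 0.0, 0.3], [-0.5] * 4, random.Random(0))
```

In the project, the means would come from the Policy NN and the log std would be the learnable PyTorch Parameter; here both are plain lists. Sampled values can fall outside [-1, 1], so an implementation would likely clamp them before passing them to the environment.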

Since basic PPO didn't give satisfactory results, several implementation-level improvements were added, which resulted in better performance. Implemented improvements suggested by "Implementation Matters in Deep Policy Gradients: A Case Study on PPO and TRPO" (Engstrom et al.):

- Value Function Loss Clipping

- Adam Learning Rate Annealing

- Global Gradient Clipping

- Normalization of Observation and Observation Clipping

- Scaling and Clipping Reward

- Hyperbolic Tangent Activations
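To illustrate the first item in the list above, here is a minimal sketch of value function loss clipping in the form commonly used in PPO implementations: the new value prediction is kept within `eps` of the old one, and the larger of the clipped and unclipped squared errors is used. The function name and the default `eps=0.2` are assumptions for the example, and the project's own version may differ in details such as scaling.

```python
def clipped_value_loss(values, old_values, returns, eps=0.2):
    """Mean of max(unclipped, clipped) squared error (eps is an assumed default)."""
    total = 0.0
    for v, v_old, ret in zip(values, old_values, returns):
        # Limit how far the new prediction may move from the old one.
        v_clipped = v_old + max(-eps, min(v - v_old, eps))
        # Take the worse (larger) of the two errors, mirroring the policy clipping.
        total += max((v - ret) ** 2, (v_clipped - ret) ** 2)
    return total / len(returns)
```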

Implemented improvements suggested by OpenAI's implementation of the PPO algorithm:

- Not Sharing Hidden Layers for Policy and Value Functions

- Generalized Advantage Estimation (GAE)

- Normalization of Advantages

- The Epsilon Parameter of Adam Optimizer

- Overall Loss Includes Entropy Loss
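Two of the items above, Generalized Advantage Estimation and normalization of advantages, can be sketched as follows. This is a plain Python illustration under assumed defaults (`gamma=0.99`, `lam=0.95`, and the function names are not taken from this project); `last_value` stands for the critic's estimate of the state that follows the final collected step.

```python
import math

def compute_gae(rewards, values, last_value, dones, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation over one rollout (assumed defaults)."""
    advantages = [0.0] * len(rewards)
    gae = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        # Do not bootstrap across an episode boundary.
        not_done = 0.0 if dones[t] else 1.0
        # One-step TD error.
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        # Exponentially weighted sum of future TD errors.
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
        next_value = values[t]
    return advantages

def normalize(xs, eps=1e-8):
    """Shift to zero mean and scale to unit standard deviation."""
    mean = sum(xs) / len(xs)
    std = math.sqrt(sum((x - mean) ** 2 for x in xs) / len(xs))
    return [(x - mean) / (std + eps) for x in xs]
```

The value targets for the critic can then be obtained as advantage plus value for each step, computed before the advantages are normalized.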

To get accurate results, the algorithm has an additional class (test process) whose job is to occasionally run 100 test episodes and calculate their mean reward. By the rules, if the test process gets a mean score of 300 or higher over the last 100 games, the goal is reached and we should terminate. If the goal isn't reached, the training process continues. Testing is done every 50 * 2048 steps or when the mean of the last 40 returns is 300 or more.
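The testing schedule above could be expressed roughly as below. The constants come from the text, but the function names are hypothetical, `step` is assumed to count algorithm steps of 2048 environment steps each, and requiring a full window of 40 returns before the early trigger fires is an assumption of this sketch.

```python
from statistics import mean

TEST_EVERY = 50       # algorithm steps between scheduled tests (50 * 2048 env steps)
RECENT_WINDOW = 40    # number of recent returns checked for an early test
TEST_EPISODES = 100   # episodes averaged by the test process
TARGET = 300          # mean score that counts as solving the environment

def should_test(step, recent_returns):
    """True on every 50th algorithm step or when recent returns look good enough."""
    scheduled = step > 0 and step % TEST_EVERY == 0
    window = recent_returns[-RECENT_WINDOW:]
    # Assumption: wait for a full window before triggering an early test.
    promising = len(window) == RECENT_WINDOW and mean(window) >= TARGET
    return scheduled or promising

def goal_reached(test_rewards):
    """True when the mean over the last 100 test episodes is at least 300."""
    if len(test_rewards) < TEST_EPISODES:
        return False
    return mean(test_rewards[-TEST_EPISODES:]) >= TARGET
```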

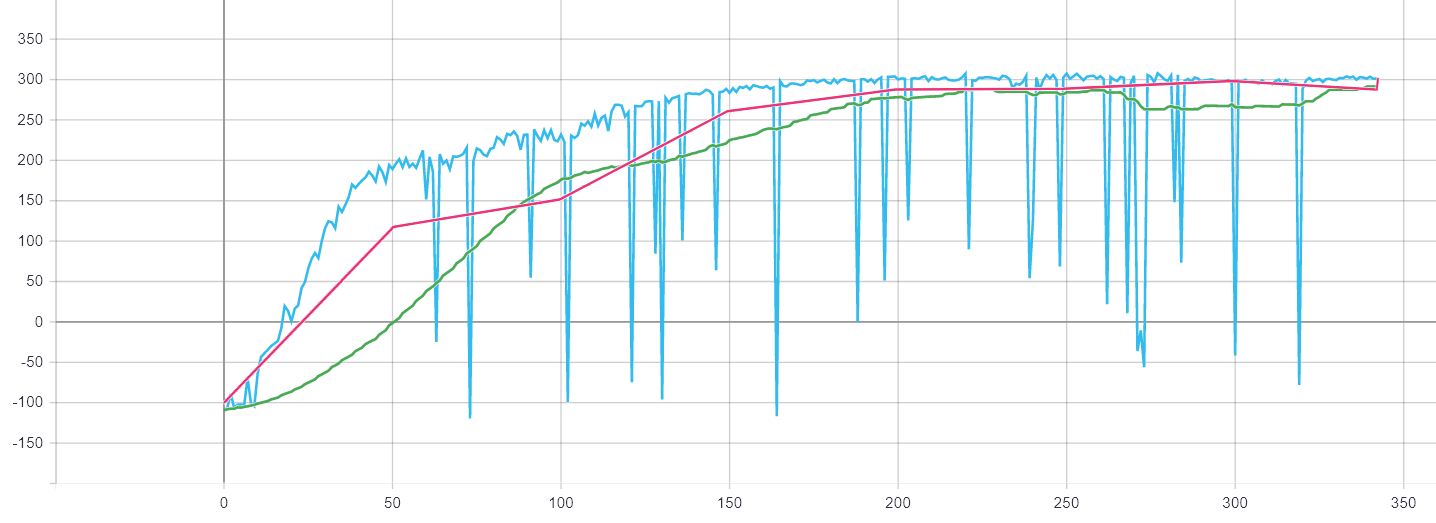

One of the results can be seen on the graph below, where the X axis represents the number of steps in the algorithm and the Y axis represents episode reward, mean training return and mean test return (return = mean episode reward over the last 100 episodes). Keep in mind that for the goal to be reached, the mean test return has to reach 300. Also, one step in the algorithm is 2048 steps in the environment.

- Over multiple runs, the mean test return exceeded 300, therefore we can conclude that the goal is reached!

Additional statistics

- Fastest run reached the goal after 700,416 environment steps.

- Highest reward in a single episode achieved is 320.5.

If you wish to use the trained models, there are saved NN models in /models. Keep in mind that in that folder you need both the data.json and model.p files (with the same date in the name) for the load.py script to work. You will have to modify the PATH parameters in load.py and run the script to see the results of training.

If you don't want to bother with running the script, you can head over to YouTube or see the best recordings in /recordings.

The rest of the training data can be found at /content/runs. If you wish to see it and compare it with the rest, I recommend using TensorBoard. After installation, set LOG_DIR to the directory where the data is stored and run the following command:

LOG_DIR = "full\path\to\data"

tensorboard --logdir=LOG_DIR --host=127.0.0.1

and open http://localhost:6006 in your browser. For information about installation and further questions, visit the TensorBoard GitHub.