rhte2019-maa-hcm-cost-aws

Welcome to the 2019 HCM Cost Management with OpenShift and AWS Lab

Red Hat is developing SaaS offerings for our customer base, all available at https://cloud.redhat.com and https://cloud.openshift.com. One of the early offerings will be Hybrid Cloud Cost Management (HCCM). It is an Open Source project called "Koku", hosted at https://github.com/project-koku/ with documentation at https://koku.readthedocs.io/en/latest/

Cost Management reveals the true costs of Business Initiatives. It does so by matching resources and their costs across varied platforms. Resources are matched through common tagging and labeling of the resources used by an initiative or offering. Once the matches are made, Cost Management reports on them. Most cloud platforms offer hourly reports on the costs of resources consumed, and when resources are properly labeled and tagged, analysts can report on them. Further features such as forecasting, price markups, and discounts (like AWS Reserved Instances) are being developed now.

To understand and sell this product, Red Hatters will need to learn the technical details of deploying and managing it. They will also need to gain facility with basic financial terminology and the problem domains that this product seeks to solve, including:

- Budgeting
- Forecasting
- Workload Optimization

A blog post series is being planned, with explanations of the theory and examples of the product in use.

Red Hat Global Partner and Technical Enablement (GPTE) will be producing on-line training, and itself will be a business use case for the product. Learn more in upcoming blog posts.

In this lab you will:

- Walk through the setup of AWS Cost and Usage Reports delivered into an S3 bucket
- Walk through the setup of the Metering Operator on your own cluster
- Add your cluster as a "source" for cost-related information to the Account
- Use labels and tags to associate OpenShift resources with AWS resource tags according to Business Initiatives
- Analyze the existing Account’s current costs per Business Initiative
- Forecast the cost of the Business’s proposed Initiatives

[WARNING] Hybrid Cloud Cost Management is in the BETA state. Not all features are expected to work. Your suggestions for improvements and features are welcome.

[NOTE] OCP Usage Data Upload: Upon General Availability, HCCM will upload usage data via the separate project OpenShift Telemetry. Until the products are integrated and GA, a sub-project called "korekuta" is supplied by the Cost Management team to upload data from OpenShift to the HCCM SaaS.

- Your OCP4 Cluster - one small OCP4 cluster
  - 1 Master, 3 workers, 1 bastion
  - Metering Operator already installed. Learn more at https://github.com/operator-framework/operator-metering
- Your Bastion Host
  - Bastion SSH: user: `lab-user` password: `rhte2019`
  - OCP 4 Installer - already complete
  - OCP `oc` client - `system:admin` keys already set up
  - Cost Collector (sub-project "korekuta") already installed and configured
- Your AWS Sandbox Account
  - CLI access ONLY (sorry)
  - Details are custom to each student, via email
    - aws access key id
    - aws secret access key
- The Cost Management SaaS accounts
  - Account 1 - Number: 6289400 (rhte19mbu1org)
    - Live data where you will add your clusters as a data source
  - Account 2 - Number: 6289401 (rhte19mbu2org)
    - Static Sample data where you can see an example of Tags and Labels working together

- Get a GUID from the GUIDGrabber. This is the GUID of your personal cluster.
  - Cluster 1 has a bastion VM that you will use to execute all tasks.
  - GUIDGrabber will show the command to connect to the bastion VM.
  - Your cluster runs in a Sandbox. Make a note of the Sandbox Number.
  - For example, if GUIDGrabber shows the following command:

        ssh lab-user@bastion.b1fa.sandbox23.opentlc.com

    this means that your GUID is b1fa and your Sandbox Number is 23.
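If you would rather not pick the two values out by eye, the hostname can be split with plain shell parameter expansion. This is only a sketch: the function name `parse_bastion_host` is made up for illustration, and it assumes the hostname always follows the `bastion.<GUID>.sandbox<NN>.opentlc.com` pattern shown in the example.

```shell
# Split a bastion hostname of the assumed form
#   bastion.<GUID>.sandbox<NN>.opentlc.com
# into the GUID and the Sandbox Number.
# parse_bastion_host is a hypothetical helper, not part of the lab tooling.
parse_bastion_host() {
  local host=$1 rest guid sandbox
  rest=${host#bastion.}        # strip the leading "bastion."
  guid=${rest%%.*}             # first remaining dot-separated field
  rest=${rest#*.}              # drop the GUID field
  sandbox=${rest%%.*}          # e.g. "sandbox23"
  sandbox=${sandbox#sandbox}   # keep only the number
  echo "GUID=$guid SANDBOX=$sandbox"
}

parse_bastion_host bastion.b1fa.sandbox23.opentlc.com   # prints: GUID=b1fa SANDBOX=23
```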

[CAUTION] You are not actually doing this part of the lab. This is for your reference so you are familiar with the AWS interface.

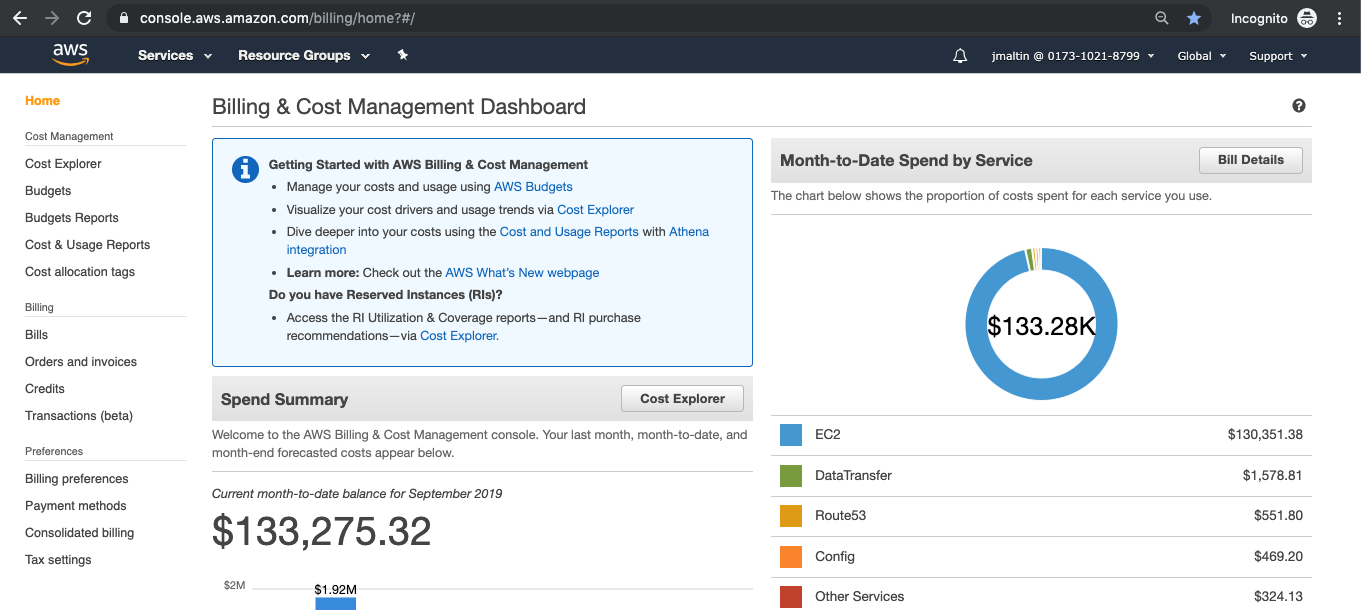

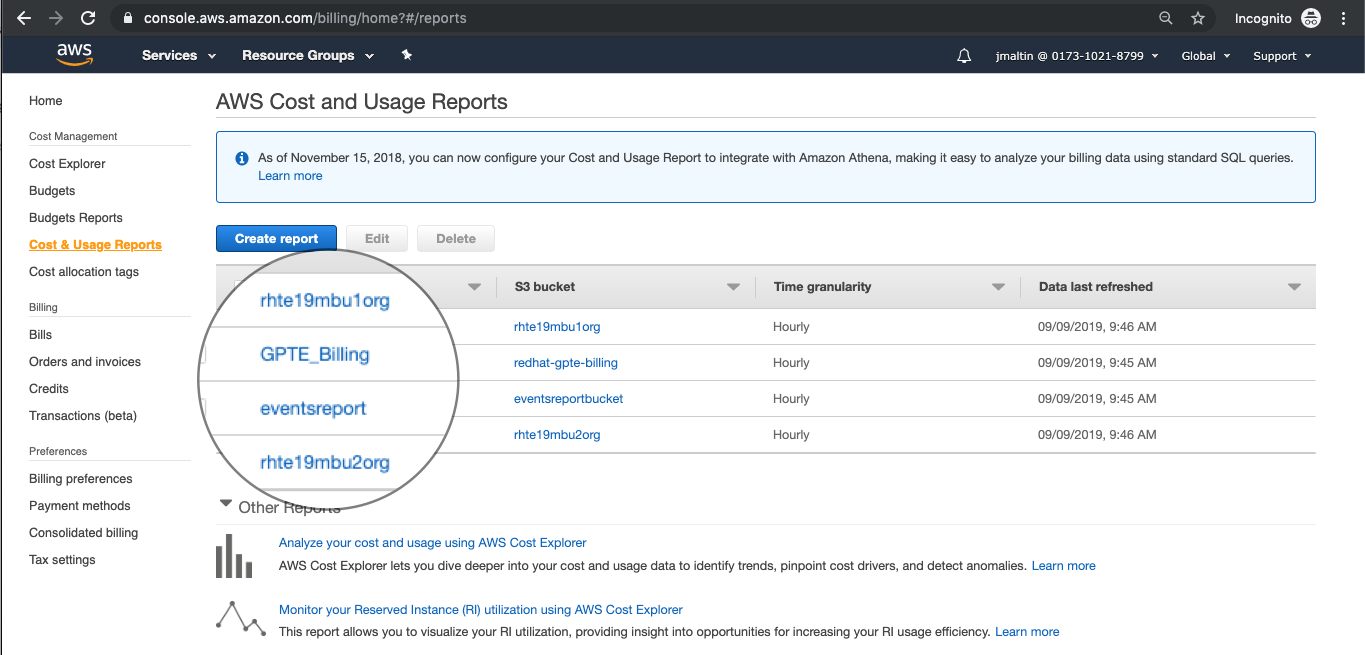

Your cloud provider is the first stop in gathering cost information. Cost Management will support both AWS and Azure, with more platforms coming. We’ll focus on AWS.

|

Note

|

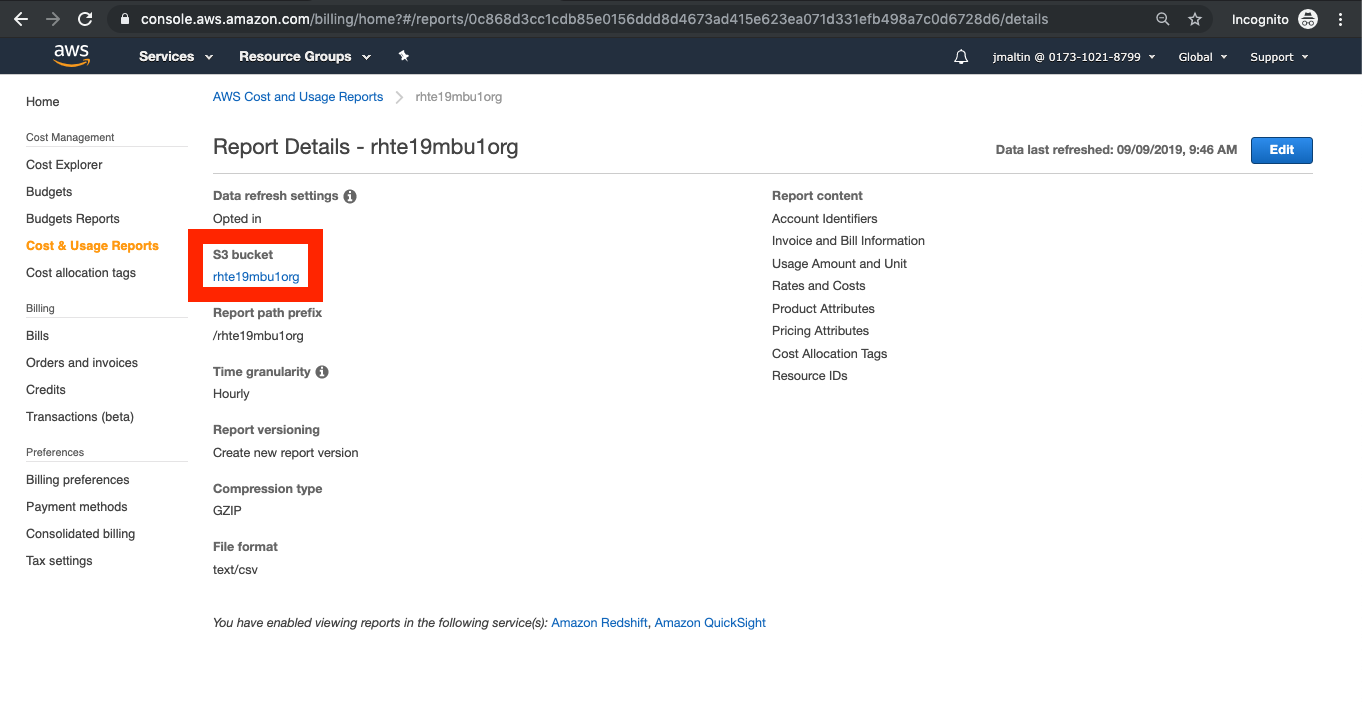

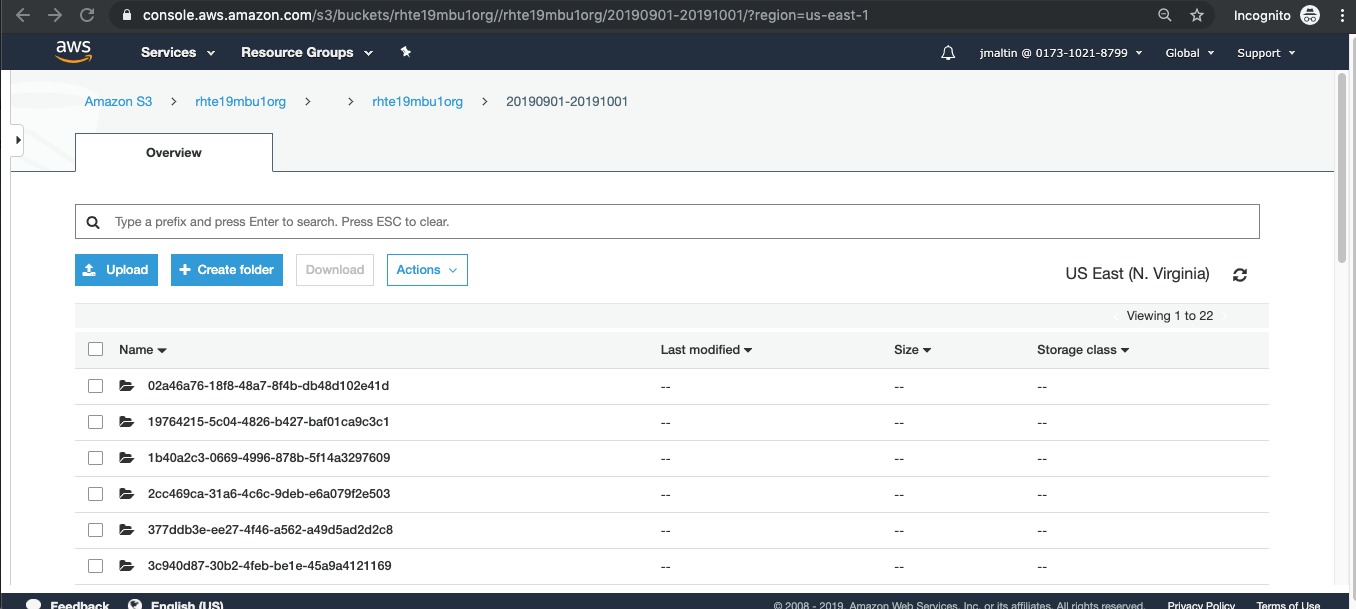

Since your sandbox linked account does not have access to the AWS web console, we’ve made screenshots to walk you through the process. |

- Turn on AWS Cost and Usage Reports
  - A Cost and Usage Report is already set up for the rhte19mbu1org account
  - AWS is generating the reports and putting them in a bucket named rhte19mbu1org
  - AWS has already filled the bucket with some information for September 2019

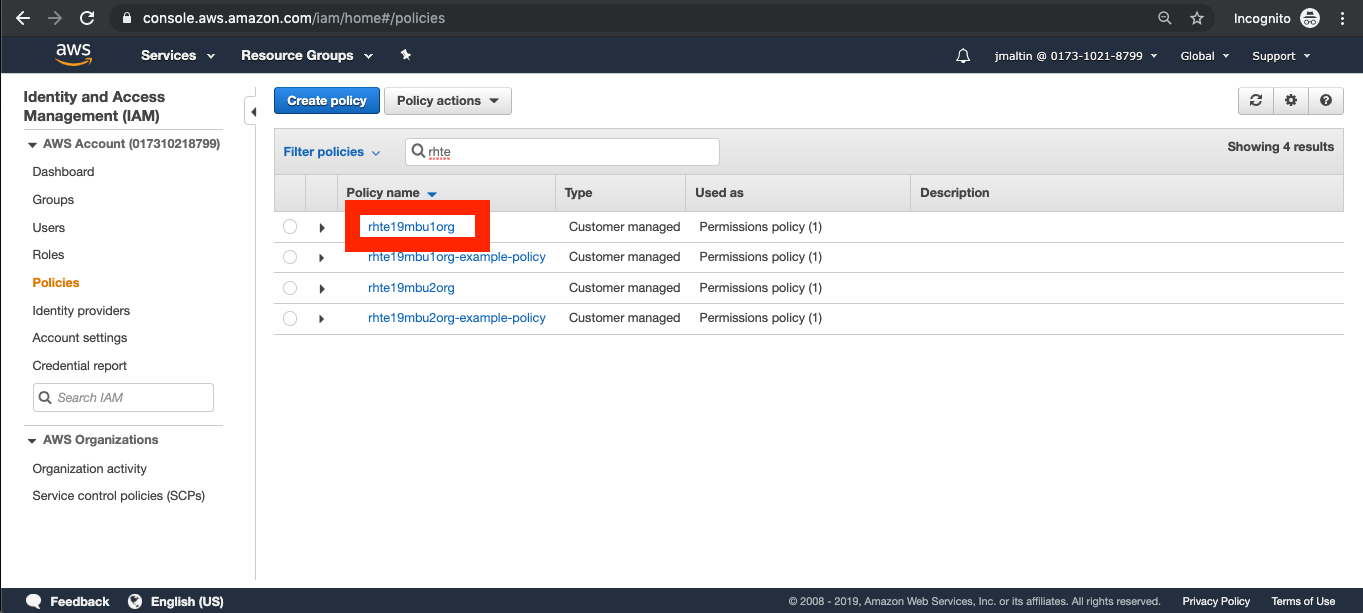

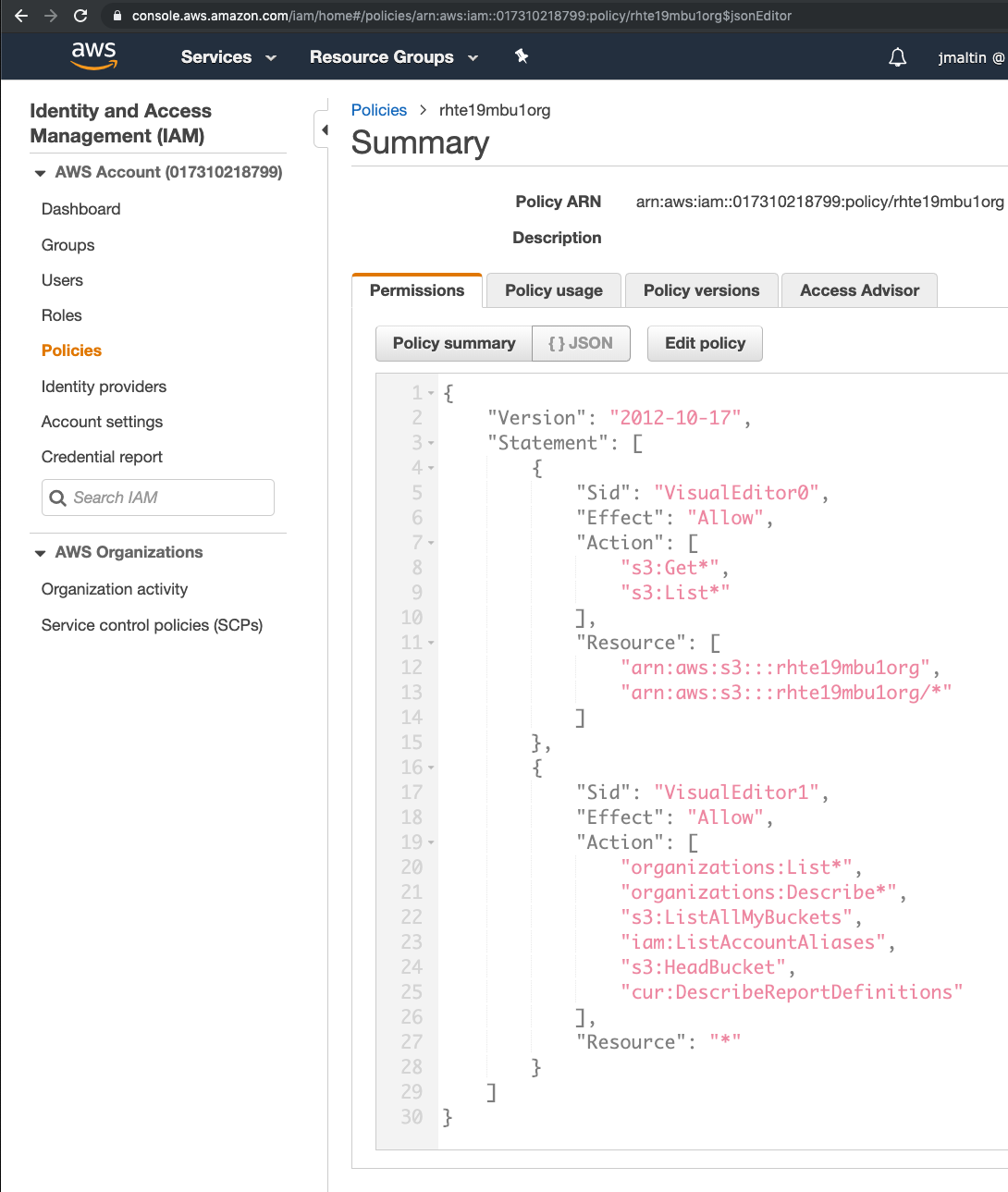

To protect the bucket and allow only the HCCM SaaS access to it, a policy is created.

- Red Hat HCCM accesses the bucket based on a strict access policy

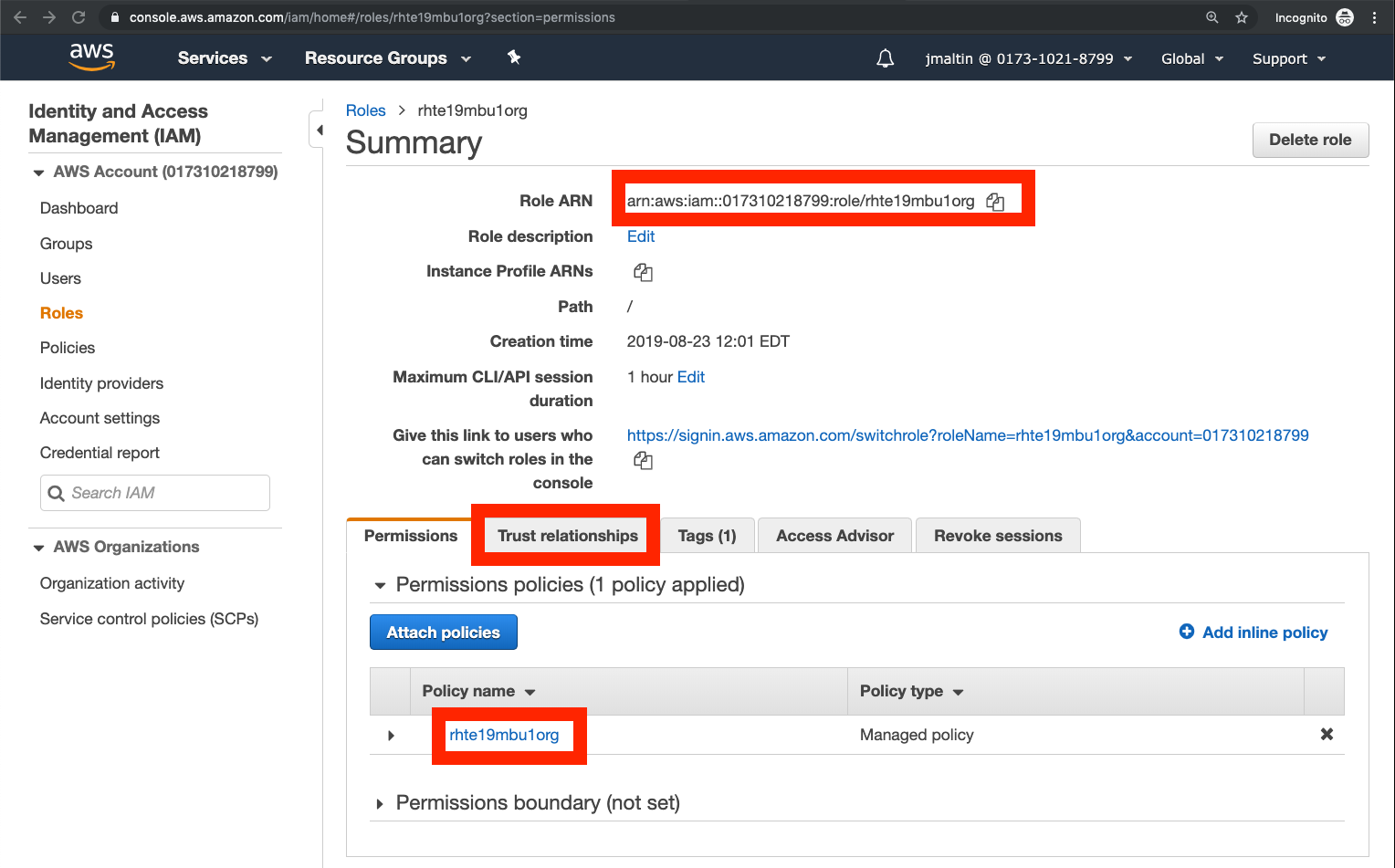
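For readers without console access, the shape of such a policy can be sketched as a small shell function that prints a read-only IAM policy document for one bucket. This is an assumption-laden illustration, not the lab's actual policy: the action list (`s3:Get*`, `s3:List*`) is a guess at a minimal read-only grant, the function name `bucket_read_policy` is hypothetical, and the real policy is the one in the screenshot above.

```shell
# Print a read-only IAM policy document for a single S3 bucket.
# ASSUMPTION: the actions below are illustrative only; the policy actually
# used in the lab is the one shown in the screenshot.
# bucket_read_policy is a hypothetical helper name.
bucket_read_policy() {
  local bucket=$1
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:Get*", "s3:List*"],
      "Resource": [
        "arn:aws:s3:::${bucket}",
        "arn:aws:s3:::${bucket}/*"
      ]
    }
  ]
}
EOF
}

# Render the policy for the report bucket named in this lab.
bucket_read_policy rhte19mbu1org
```

The policy names both the bucket ARN and the `/*` object ARN because listing applies to the bucket itself while reading applies to the objects inside it.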

An AWS Role is created to join the policy governing bucket access with the HCCM root account as a Trust Relationship.


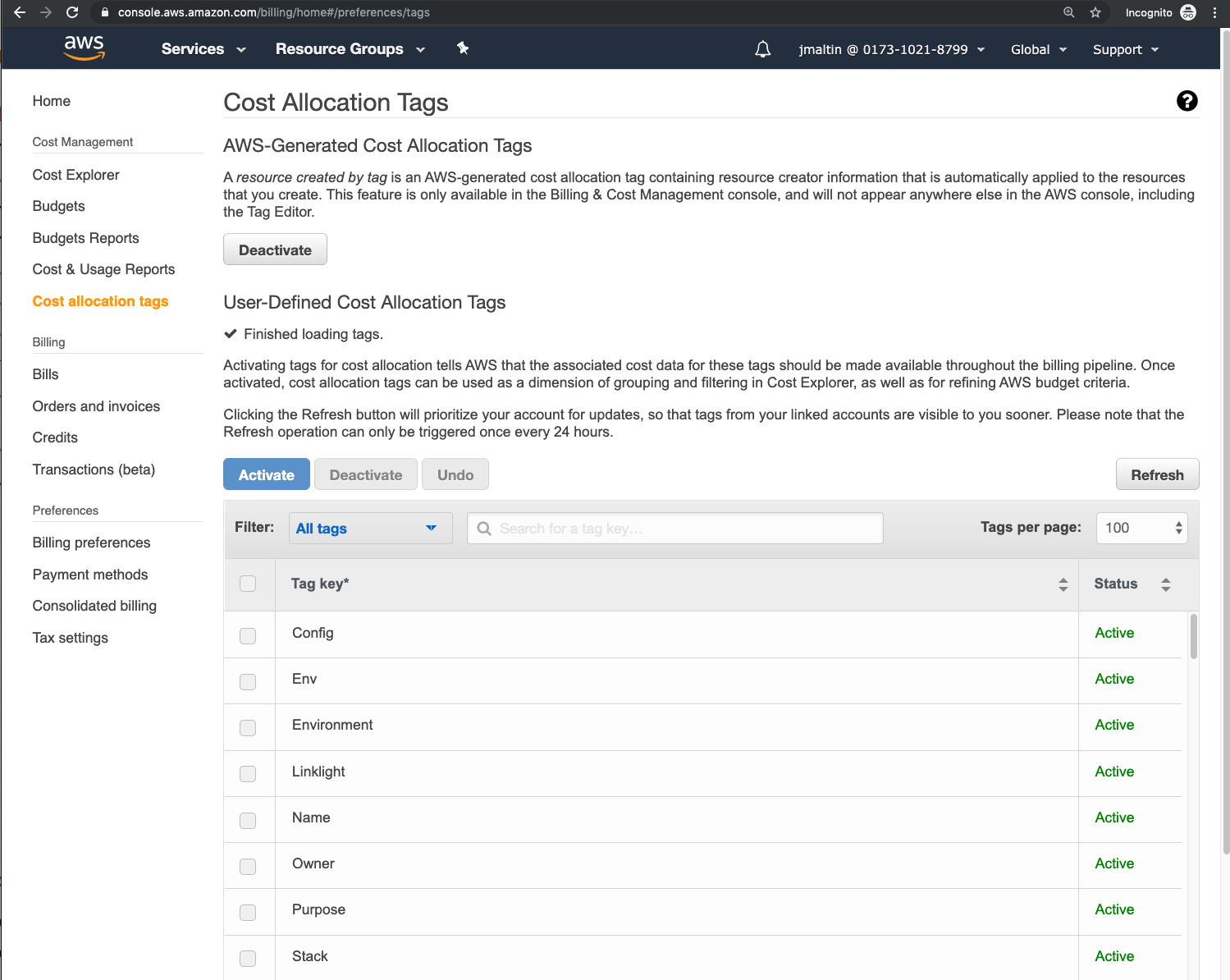

Finally, select AWS tags that will be used by AWS to report resource utilization.


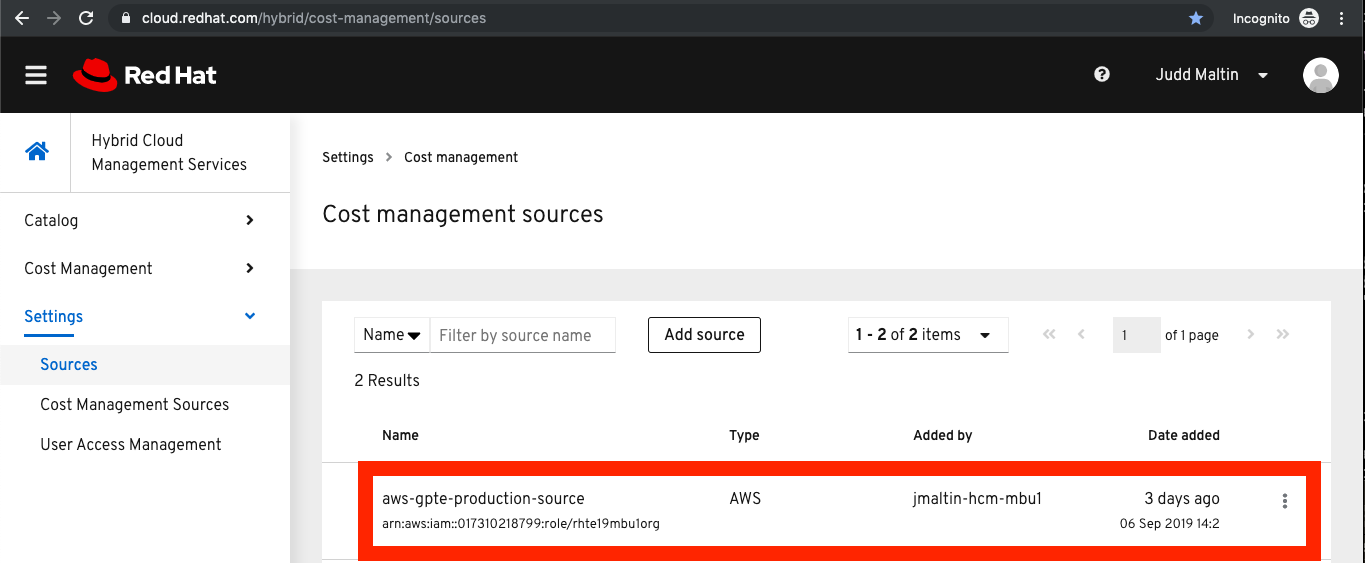

Now, if you were to switch to the HCCM "Cost Management Sources" GUI, you would add an AWS source by indicating the bucket name and role created above. Don’t do it.

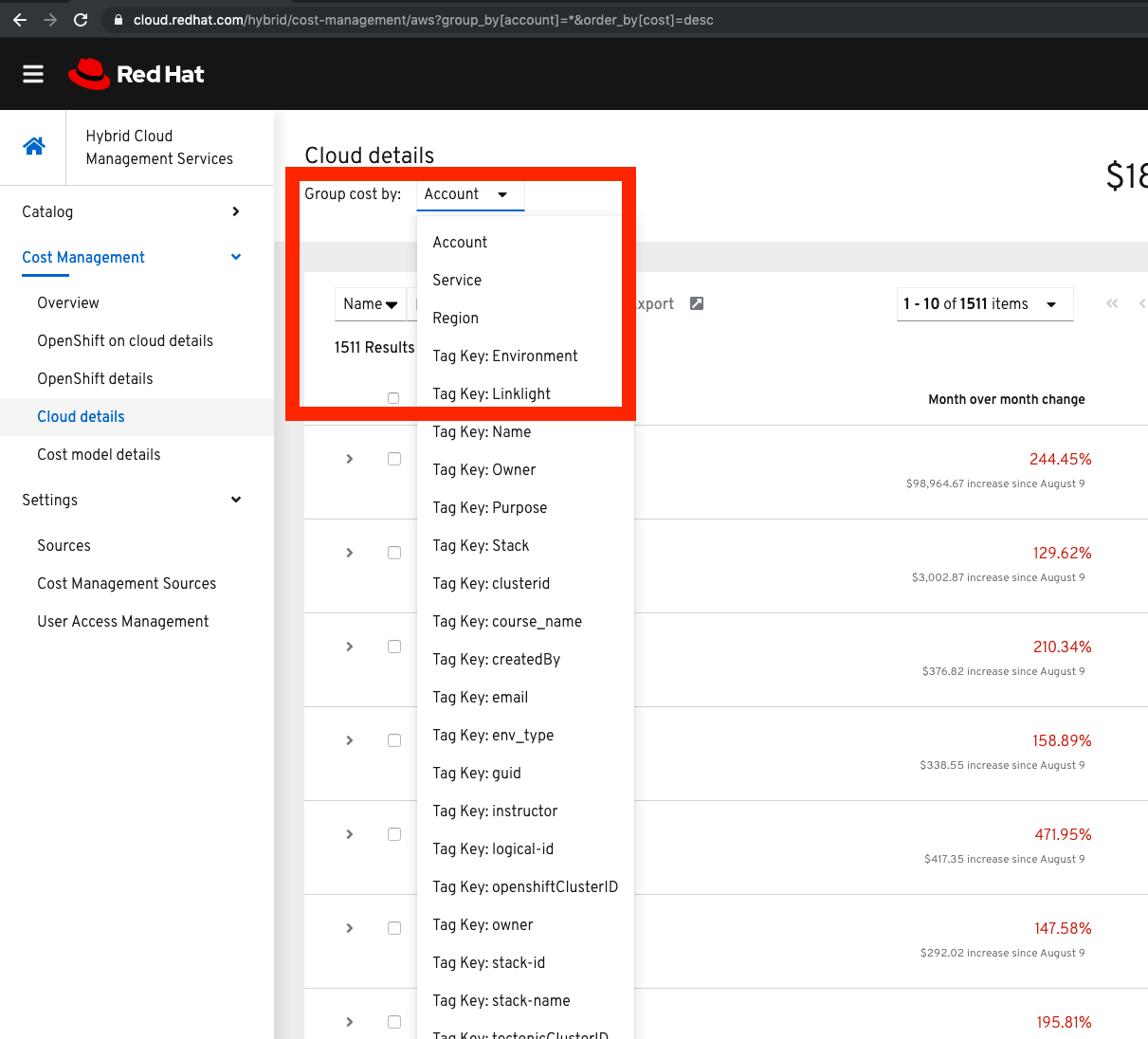

Within a few hours, the cloud tags would appear in the HCCM "Cloud Details" GUI. You can then group your costs by these tags and begin getting insights into the cost of your business initiatives.

[CAUTION] Yes, actually do this part of the lab.

On the bastion host, use the `oc` tool to talk to the API and learn about the Metering Operator.

[NOTE] If you have trouble logging in, ask one of the lab assistants.

- SSH from your laptop to the Bastion. The password is `rhte2019`.

      $ ssh lab-user@<bastion>

- Become `ec2-user`, who set up the OCP4 cluster.

      $ sudo -i
      $ su - ec2-user
      $ oc whoami

  Sample Output:

      system:admin

- A dedicated namespace was created for OpenShift Metering.

      $ oc project openshift-metering

  Sample Output:

      Now using project "openshift-metering" on server "https://api.shared.na.openshift.opentlc.com:6443".

- The Metering Operator was made available to the cluster via the Metering Catalog Source.

      $ oc get catalogsource -A

  Sample Output:

      NAMESPACE                              NAME                       NAME                  TYPE       PUBLISHER   AGE
      openshift-logging                      cluster-logging-operator   Custom                grpc       Custom      6d3h
      openshift-marketplace                  certified-operators        Certified Operators   grpc       Red Hat     6d4h
      openshift-marketplace                  community-operators        Community Operators   grpc       Red Hat     6d4h
      openshift-marketplace                  redhat-operators           Red Hat Operators     grpc       Red Hat     6d4h
      openshift-metering                     metering-operators         Custom                grpc       Custom      6d3h
      openshift-operator-lifecycle-manager   olm-operators              OLM Operators         internal   Red Hat     6d4h
      openshift-operators                    elasticsearch-operator     Custom                grpc       Custom      6d3h

- It needs an OLM OperatorGroup to define relationships between operators. (More OLM info here.)

      $ oc get operatorgroup metering-operators -n openshift-metering -oyaml

  Sample Output:

      apiVersion: operators.coreos.com/v1
      kind: OperatorGroup
      metadata:
        annotations:
          olm.providedAPIs: HiveTable.v1alpha1.metering.openshift.io,Metering.v1alpha1.metering.openshift.io,PrestoTable.v1alpha1.metering.openshift.io,Report.v1alpha1.metering.openshift.io,ReportDataSource.v1alpha1.metering.openshift.io,ReportQuery.v1alpha1.metering.openshift.io,StorageLocation.v1alpha1.metering.openshift.io
        creationTimestamp: 2019-09-03T21:42:54Z
        generation: 2
        name: metering-operators
        namespace: openshift-metering
        resourceVersion: "71746600"
        selfLink: /apis/operators.coreos.com/v1/namespaces/openshift-metering/operatorgroups/metering-operators
        uid: c998fe67-ce93-11e9-b5d9-0a16ab677b4c
      spec:
        serviceAccount:
          metadata:
            creationTimestamp: null
        targetNamespaces:
        - openshift-metering
      status:
        lastUpdated: 2019-09-03T21:42:54Z
        namespaces:
        - openshift-metering

- The Metering Subscription is also part of the OLM and defines which version and channel of the operator to install.

      $ oc get subscriptions.operators.coreos.com metering -n openshift-metering -oyaml

  Sample Output:

      apiVersion: operators.coreos.com/v1alpha1
      kind: Subscription
      metadata:
        creationTimestamp: "2019-09-10T15:45:14Z"
        generation: 1
        labels:
          csc-owner-name: installed-community-openshift-metering
          csc-owner-namespace: openshift-marketplace
        name: metering
        namespace: openshift-metering
        resourceVersion: "20929"
        selfLink: /apis/operators.coreos.com/v1alpha1/namespaces/openshift-metering/subscriptions/metering
        uid: fb4f6b21-d3e1-11e9-9c86-06ae53090800
      spec:
        channel: preview
        name: metering
        source: metering-operators
        sourceNamespace: openshift-metering
      status:
        currentCSV: metering-operator.v4.1.0
        installPlanRef:
          apiVersion: operators.coreos.com/v1alpha1
          kind: InstallPlan
          name: install-ln82l
          namespace: openshift-metering
          resourceVersion: "20867"
          uid: 341e2c12-d3e2-11e9-8f8b-06ae53090800
        installedCSV: metering-operator.v4.1.0
        installplan:
          apiVersion: operators.coreos.com/v1alpha1
          kind: InstallPlan
          name: install-ln82l
          uuid: 341e2c12-d3e2-11e9-8f8b-06ae53090800
        lastUpdated: "2019-09-10T15:46:51Z"
        state: AtLatestKnown

- Finally, we actually kicked off the Metering install by creating the Metering Custom Resource.

      $ oc describe meterings.metering.openshift.io operator-metering

  Sample Output:

      Name:         operator-metering
      Namespace:    openshift-metering
      Labels:       <none>
      Annotations:  <none>
      API Version:  metering.openshift.io/v1alpha1
      Kind:         Metering
      Metadata:
        Creation Timestamp:  2019-09-03T17:32:17Z
        Generation:          6
        Resource Version:    1824854
        Self Link:           /apis/metering.openshift.io/v1alpha1/namespaces/openshift-metering/meterings/operator-metering
        UID:                 c6d01c80-ce70-11e9-ae9b-021aec9d41ee
      Spec:
        Hdfs:
          Spec:
            Datanode:
              Resources:  [omitted]
            Namenode:
              Resources:  [omitted]
        Presto:
          Spec:
            Hive:
              Metastore:
                Resources:  [omitted]
                Storage:
                  Size:  10Gi
              Server:
                Resources:  [omitted]
            Presto:
              Coordinator:
                Resources:  [omitted]
              Worker:
                Replicas:   1
                Resources:  [omitted]
        Reporting - Operator:
          Spec:
            Auth Proxy:
              Cookie Seed:                    7091da5a0a374e4a92a9356c963e1690
              Delegate UR Ls Enabled:         true
              Enabled:                        true
              Subject Access Review Enabled:  true
            Resources:  [omitted]
            Route:
              Enabled:  true
      Status:
        Observed Version:  680107
      Events:  <none>

- After a while, pods appear in the Metering namespace. As the pod names show, the Metering Operator deploys HDFS (i.e. Hadoop), Hive, and Presto alongside its own operators. Big Data.

      $ oc get pods -n openshift-metering

  Sample Output:

      NAME                                  READY   STATUS    RESTARTS   AGE
      hdfs-datanode-0                       1/1     Running   1          6d3h
      hdfs-namenode-0                       1/1     Running   1          6d3h
      hive-metastore-0                      1/1     Running   1          6d3h
      hive-server-0                         1/1     Running   1          6d3h
      metering-operator-698f55bb84-fx5zl    2/2     Running   2          4d16h
      presto-coordinator-7c57b6dfb5-cndbx   1/1     Running   1          4d16h
      presto-worker-69f6f8c587-697g4        1/1     Running   1          6d3h
      reporting-operator-6b5fdc8b5c-29qnx   2/2     Running   3          6d3h

- The OCP Usage uploader created some reports in the reporting operator that was installed. Their names are prefixed with `hccm-`.

      $ oc get reports

  Sample Output:

      NAME                                            QUERY                                           SCHEDULE   RUNNING                  FAILED   LAST REPORT TIME       AGE
      hccm-openshift-persistentvolumeclaim            hccm-openshift-persistentvolumeclaim            hourly     ReportingPeriodWaiting            2019-09-09T21:00:00Z   6d3h
      hccm-openshift-persistentvolumeclaim-lookback   hccm-openshift-persistentvolumeclaim-lookback   hourly     ReportingPeriodWaiting            2019-09-09T21:00:00Z   6d3h
      hccm-openshift-usage                            hccm-openshift-usage                            hourly     ReportingPeriodWaiting            2019-09-09T21:00:00Z   6d3h
      hccm-openshift-usage-lookback                   hccm-openshift-usage-lookback                   hourly     ReportingPeriodWaiting            2019-09-09T21:00:00Z   6d3h

- The metering operator has a lot of moving parts. There are more things to try, if you like:

  Hadoop Queries

      $ oc get reportqueries.metering.openshift.io

  Hadoop DataSources

      $ oc get reportdatasources.metering.openshift.io
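When checking the pod listing from the earlier step, it can be quicker to print only the pods that are not yet `Running`. The sketch below filters `oc get pods` style output with `awk`; the function name `pods_not_running` is hypothetical, and a shortened copy of the sample output is used so the snippet runs without a cluster.

```shell
# Print the names of pods whose STATUS column is not "Running".
# Reads `oc get pods` style output on stdin; the first line is the header.
# pods_not_running is a hypothetical helper name.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# On the bastion this would be fed from the live cluster:
#   oc get pods -n openshift-metering | pods_not_running
# Here a shortened copy of the sample output is used instead, so the
# call prints nothing because every pod is Running.
pods_not_running <<'EOF'
NAME                                  READY   STATUS    RESTARTS   AGE
hdfs-datanode-0                       1/1     Running   1          6d3h
hive-server-0                         1/1     Running   1          6d3h
presto-worker-69f6f8c587-697g4        1/1     Running   1          6d3h
EOF
```

Empty output means everything in the namespace is up; any name printed is a pod worth a closer look with `oc describe pod`.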

[NOTE] This is the fun part!

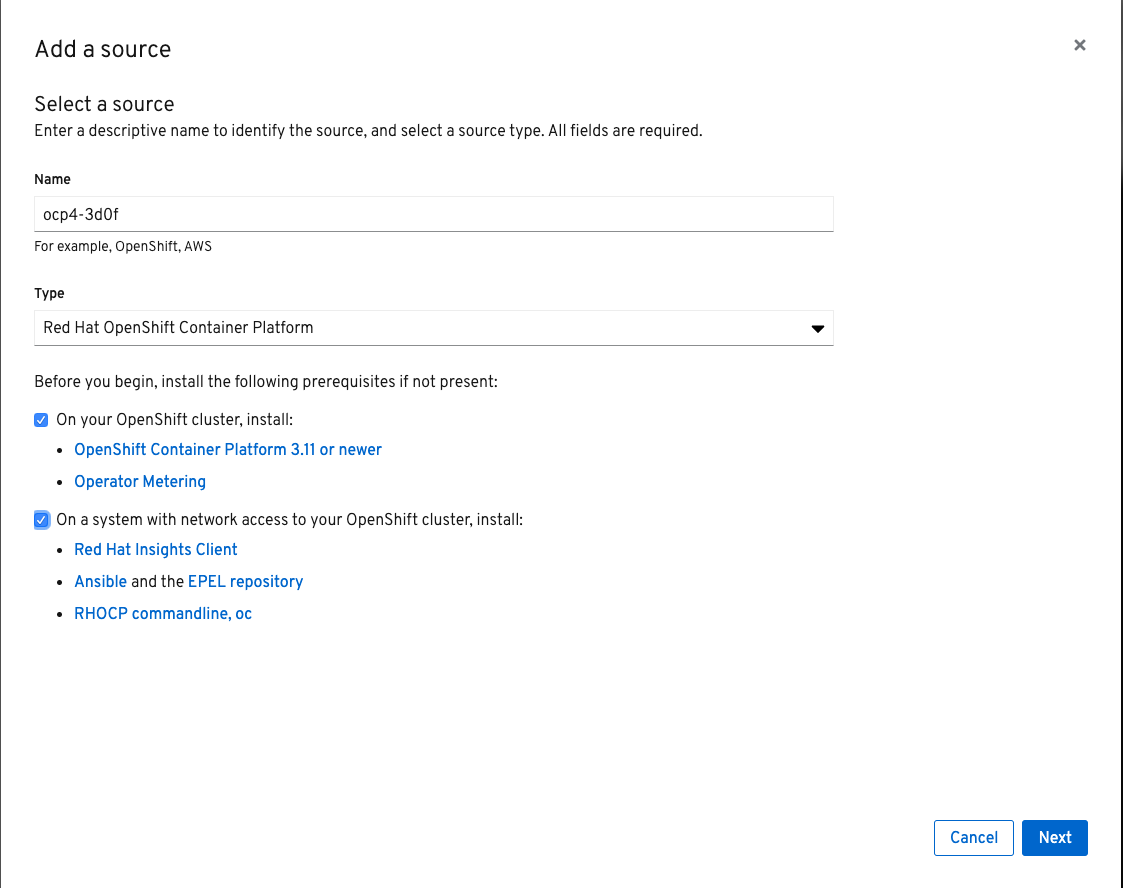

To use the Cost Management website to add a source, you’ll need to gather details of your OCP4 cluster and the uploader.

To upload data that the metering operator has collected into the Cost Management SaaS, we’ll be using the Koku sub-project korekuta. Korekuta has several moving parts:

Korekuta components:

- Custom reports added to the reporting-operator part of the metering-operator
- Shell scripts to run the collector
- Ansible playbooks to keep the shell scripts a sane length
- Cronjobs to collect data periodically
- A configuration of the `insights-client`

It first sets up reports, then periodically reads the reports from the metering operator and uploads them to cloud.redhat.com with the `insights-client`.

We’ve already set all this up for you on your environment. (You’re welcome.) Let’s have a look at its configuration.

- The `ocp_usage.sh` script keeps its configuration data in the filesystem of the bastion host. The directory names under `$HOME/.config/ocp_usage/` are the cluster identifiers.

  Examine the Configs:

      $ cat $HOME/.config/ocp_usage/*/config.json

  Sample Output:

      {
        "ocp_api": "https://api.cluster-7371.7371.sandbox448.opentlc.com:6443", (1)
        "ocp_token_file": "/home/ec2-user/7371.token", (2)
        "ocp_cluster_id": "a1d4986f-eb03-57a9-bd1d-2ed6a9af4da0", (3)
        "ocp_metering_namespace": "openshift-metering", (4)
        "ocp_cli": "/usr/bin/oc", (5)
        "ocp_validate_cert": "False", (6)
        "metering_api": "https://metering-openshift-metering.apps.cluster-7371.7371.sandbox448.opentlc.com" (7)
      }

  - (1) The `ocp_usage.sh` collector will access the OpenShift cluster through the API endpoint. Get it with `oc whoami --show-server`
  - (2) The token that belongs to the service account that was created to display reports. Get it with `oc serviceaccounts get-token reporting-operator -n openshift-metering`
  - (3) The cluster identifier used between the `ocp_usage.sh` scripts and the HCCM SaaS. Also the name of the parent directory.
  - (4) This is the Metering Operator namespace.
  - (5) This is the `oc` command line tool appropriate for accessing this cluster. You might need an `oc` client version 3 for older clusters.
  - (6) Certificate validation is optional, though encouraged.
  - (7) The Route to the reporting system, from which reports are gathered for upload via `insights-client`. Get it with `oc get route -n openshift-metering metering -o=jsonpath='{.status.ingress[0].host}'`
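You will need the cluster identifier again when adding the source, so here is one way to print it from the config files. This is a sketch that assumes each `config.json` keeps `"ocp_cluster_id"` on a single line, as in the sample output; the function name `cluster_id_from_config` is hypothetical. It uses `sed` so that no JSON tooling has to be installed on the bastion.

```shell
# Print the "ocp_cluster_id" value from a korekuta config.json.
# ASSUMPTION: the key and its value sit on one line, as in the sample output.
# cluster_id_from_config is a hypothetical helper name.
cluster_id_from_config() {
  sed -n 's/.*"ocp_cluster_id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# List the identifier for every configured cluster.
for cfg in "$HOME"/.config/ocp_usage/*/config.json; do
  [ -f "$cfg" ] || continue   # the glob matched nothing
  cluster_id_from_config "$cfg"
done
```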

The korekuta source code is in

/home/ec2-user/korekuta-master/ -

There’s a cronjob in the ec2-user’s account:

$ crontab -l

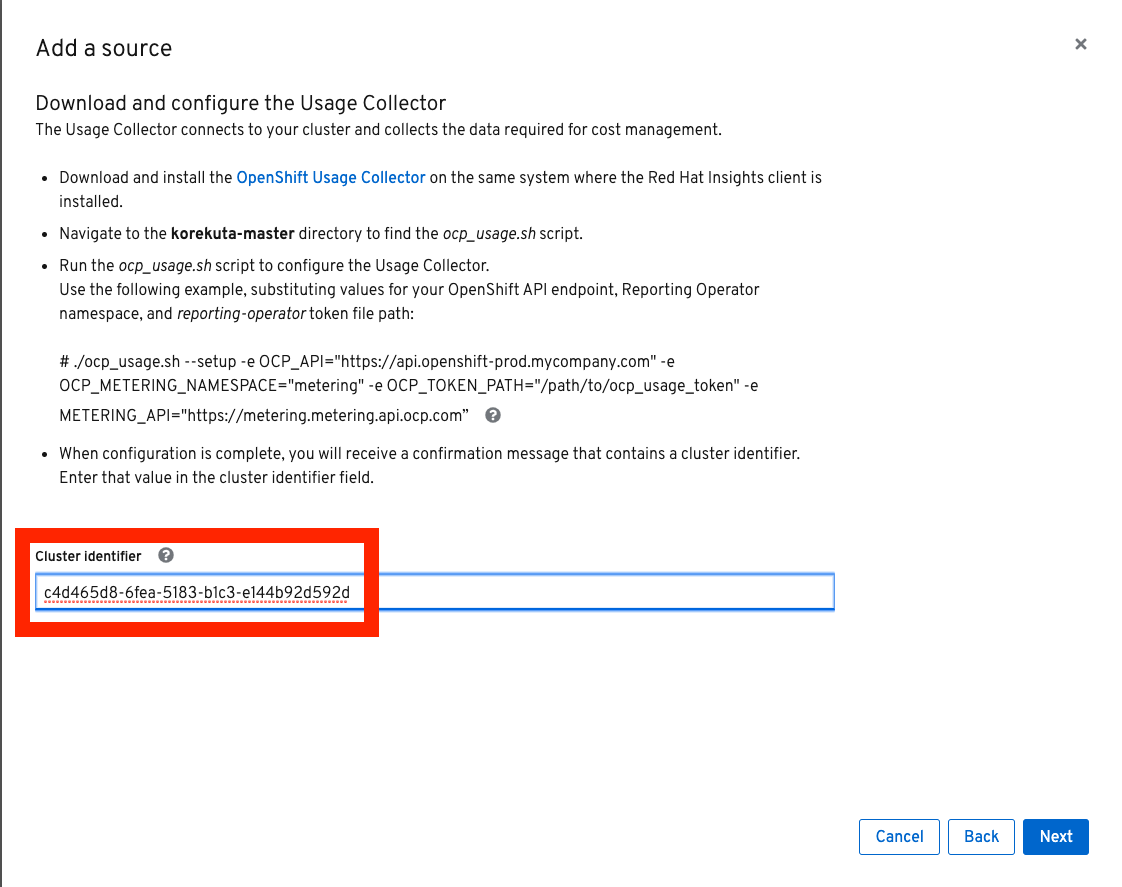

Sample Output:#Ansible: korekuta */45 * * * * /home/ec2-user/korekuta-master/ocp_usage.sh --collect --e OCP_CLUSTER_ID=c4d465d8-6fea-5183-b1c3-e144b92d592d
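A note on reading that schedule: in standard cron syntax, a step such as `*/45` in the minute field is applied within the range 0-59 of each hour, so the job fires at minute 0 and minute 45 rather than every 45 minutes of wall-clock time. The sketch below expands a `*/N` minute field to make that visible; `cron_step_minutes` is a hypothetical helper written for this illustration.

```shell
# Expand a cron minute field of the form "*/N" into the minutes it matches.
# cron_step_minutes is a hypothetical helper, not part of korekuta.
cron_step_minutes() {
  local field=$1 step minute out=""
  step=${field#\*/}                       # "*/45" -> "45"
  case $step in
    ''|*[!0-9]*|0) return 1 ;;            # reject anything but a positive integer
  esac
  for ((minute = 0; minute < 60; minute += step)); do
    out+="${out:+ }$minute"
  done
  echo "$out"
}

cron_step_minutes '*/45'   # prints: 0 45
```

So the collector runs twice an hour, with gaps of 45 and then 15 minutes.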

-

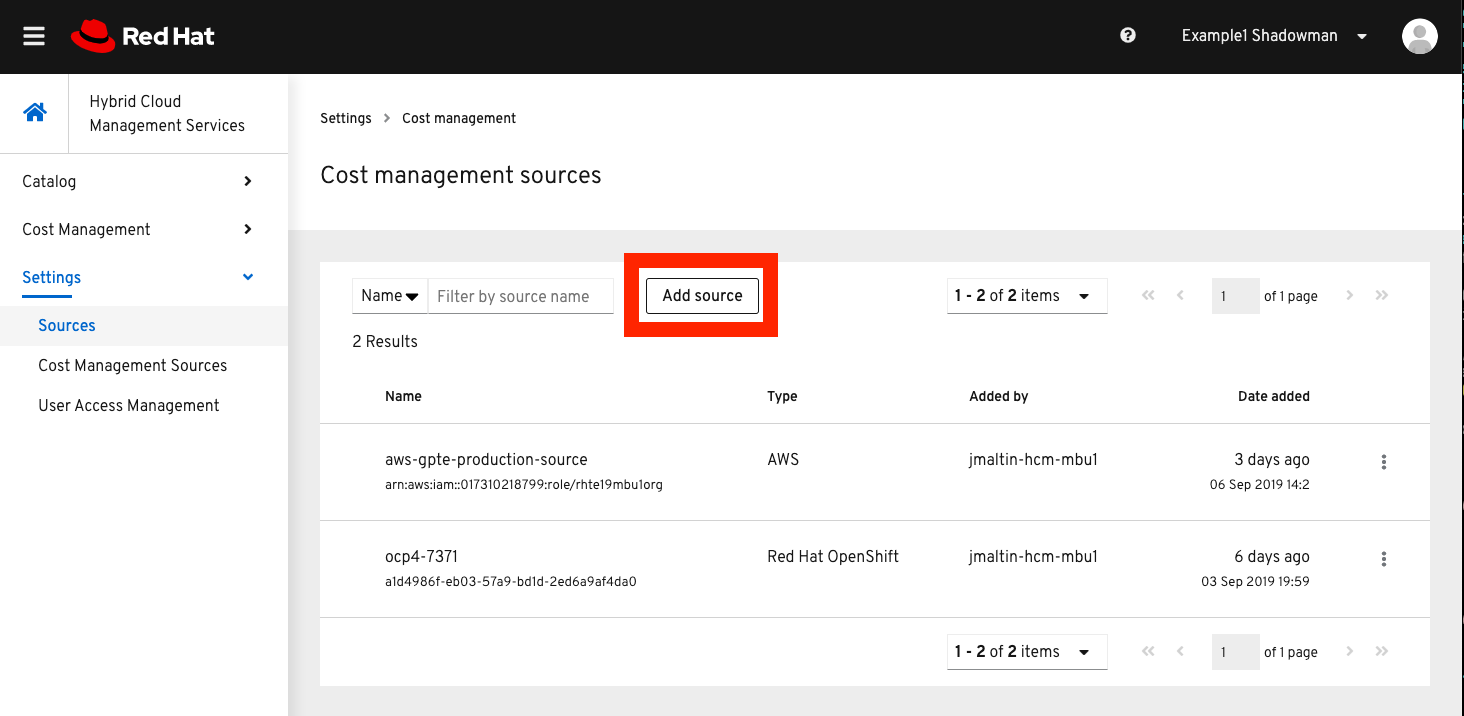

Navigate to https://cloud.redhat.com/hybrid/cost-management/sources

-

Sign in as username

rhte-example-1passwordr3dh4t1!

|

Note

|

You may have to again go to https://cloud.redhat.com/hybrid/cost-management/sources |

- Click Add Source
- Fill out the Add a source form:
  - You already have the token. Click Next
  - Paste the Cluster Identifier you got in the last step. It’s also in the output of the crontab.
  - The crontab is already set up for you. Click Next
  - Confirm the status details and click Add Source
- You will eventually see your cluster in the list of Top Clusters, or you can click on All Clusters to find yours.

[NOTE] If data for your cluster hasn’t already populated, wait a few hours for Korekuta and AWS to deliver reports and Koku to process them. Reports are generated hourly. Initial reports can take up to four hours to sync properly.

GPTE is in the business of delivering training. GPTE delivers both online training (ELT) and in-person training (ILT).

Let’s create a system to track the cost of each student’s resource usage in the cloud as they take classes.

When students take classes, they use online lab environments to do the exercises taught in their classes. The lab environments are hosted in the cloud. The lab environment for each student can be provided by "Dedicated Environments" or "Shared Clusters," and sometimes both.

"Shared Clusters" are made up of resources shared with other students, on which they do their lab work. For example, students in a Shared Cluster are creating and deleting projects and associated OpenShift resources as part of their training. Or perhaps, they might be sharing resources by pulling images from a common Quay registry.

"Dedicated Environments" are created for the student, and only the individual student has access to the resources. Oftentimes, these students are confined to a linked or "sandbox" account where they can create new cloud resources in a controlled fashion.

Classes can use Shared and/or Dedicated Resources to provide online environments to the students running labs as the lab creator sees fit. ELTs and ILTs can be taught by giving students access to a "Shared Cluster," or allowing the student to create new "Dedicated Environments". Some use both "Shared Clusters" and "Dedicated Environments."

By default, the Red Hat Cost Management service can detect which AWS EC2 instance IDs are being used by an OpenShift cluster. This gives the user coarse grained information regarding the Cloud Resource consumption of the cluster. This would be appropriate for the OCP-related costs of a student with a Dedicated Environment. However, this does not give us precise knowledge of the students' activities in a Shared Cluster.

To give us precise information about the students' activities, GPTE needs a tagging system to ensure that the class lab environment used by the student is properly accounted for in the Cost Management system. As many of the resources as possible need to be tagged or labeled, according to the features of the infrastructure providing them.

Let’s say that a student with ID student1-redhat.com is taking the OpenShift 4 Foundations ELT. We need to label and tag all the resources they will be using for the course of the class. We should choose a meaningful identifier for the student taking the class. Let’s say class_session: student1-redhat.com_ocp4-foundations

Each system has its own limitations in their tagging and labeling mechanisms. The total number of tags or labels in a system may be limited. The number of tags on a particular resource may be limited. The character count and allowed characters may differ. Care must be taken to create tags and labels that suit all the systems involved.
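As one concrete case of these limits, a Kubernetes (and therefore OpenShift) label value must be at most 63 characters, and if non-empty it must start and end with an alphanumeric character, with only alphanumerics, `-`, `_` and `.` in between. AWS tag values are more permissive (up to 256 characters), so a value that passes the Kubernetes rule is usually the safer common denominator. The sketch below checks a candidate value against the Kubernetes rule; `is_valid_k8s_label_value` is a hypothetical helper name.

```shell
# Return success if the argument is a valid Kubernetes label value:
# at most 63 characters; empty, or starting and ending with an alphanumeric,
# with only alphanumerics, '-', '_' and '.' in between.
# is_valid_k8s_label_value is a hypothetical helper name.
is_valid_k8s_label_value() {
  printf '%s\n' "$1" |
    grep -Eq '^([A-Za-z0-9]([A-Za-z0-9_.-]{0,61}[A-Za-z0-9])?)?$'
}

value='student1-redhat.com_ocp4-foundations'
if is_valid_k8s_label_value "$value"; then
  echo "ok: $value"
else
  echo "not a valid label value: $value"
fi

# Illustration only; <project> and <instance-id> are placeholders:
#   oc label namespace <project> class_session="$value"
#   aws ec2 create-tags --resources <instance-id> --tags Key=class_session,Value="$value"
```

Note that an email-style identifier such as `student1@redhat.com` would fail this check because of the `@`, which is one reason the example identifier uses `student1-redhat.com`.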

Our sample account, which you have access to, can show you how OpenShift projects/namespaces are associated with AWS resources.

Using OpenShift labels and AWS tags you are expressing the relationship between student ILT clusters, the applications they’re hosting, and the AWS resources that power them.

We have created sample data for eight ILTs, each of which has two students who have each deployed their own OCP cluster.

Each cluster has three masters, three worker nodes, and associated storage with tags called storageclass, which represent EBS volumes and S3 storage.

"Workload" projects were deployed on the clusters as the basis of a sample "cost management" system. The apps are install-test, cost-management, catalog, and analytics. The OCP control plane is represented by the projects openshift and kube-system.

[NOTE] You won’t be looking at Cost Management data as instances of AWS resources - rather, you’ll be presented with the cost derived from the tag and label associations that connect the OCP resources to underlying AWS resources.

- Log into the Sample Data Account
  - Navigate to https://cloud.redhat.com/hybrid/cost-management/sources/
  - Log OUT of the previous user.
  - Sign in as example user 2: username `rhte-example-2`, password `r3dh4t1!`
- All of the cluster data has already been loaded

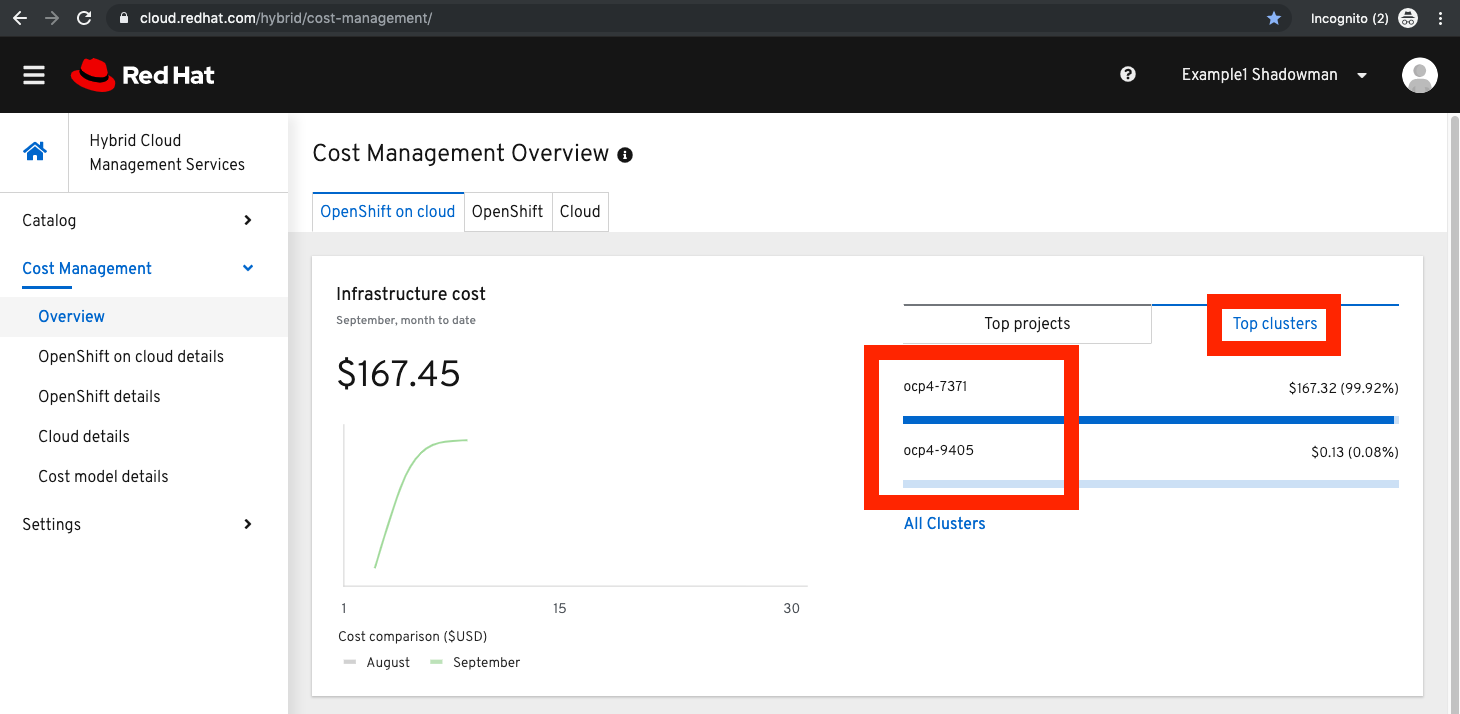

- Click on Cost Management on the left to open up the five options, then click on Overview. The Cost Management Overview page opens.
- Look at the "Infrastructure cost" graph, and note that it only covers this month and the last. HCM Cost Management is currently designed for quick analysis of recent changes in spending.
- Using your mouse, determine during which week-long periods ILTs were run. Note that sometimes the graph lines are unbroken.
- Note which particular projects have cost the most money this month.
- Click on the Top Clusters tab and see the top spenders for the month.
- Scroll down and observe the AWS resource utilization and the actual resource usage of all OCP projects compared to the resources requested by all OCP projects.
- On the left, click on OpenShift on cloud details and, in the list of projects that opens, click the first project name (usually "analytics") to open it. Note how it identifies a single cluster and the spending per region of the projects.
- Continue working with the Cost Management GUI to try to answer the following questions:

[NOTE] Some of this data is matched incorrectly for today’s (Tuesday’s) breakout and can produce strange results.

Senior Management wants to know:

- How much have we spent in the last month with AWS?
- What is the infrastructure cost per student to run one OpenShift 4 ILT?
- How many students have done the OpenShift 4 "Foundations" ELT in the past two months?
- At the current rate of usage increase, how much will we be spending on OpenShift 4 ELTs?

This lab has shown you the basics of the Cost Management system. It covered the infrastructure setup and basic use of the product.

Don’t forget to register your attendance in the app!!

[NOTE] Remember, you can learn a lot more about Cost Management in the docs and blog posts indicated at the top of this document.

Have a Great Tech Exchange! -The HCM Cost Management Team and GPTE