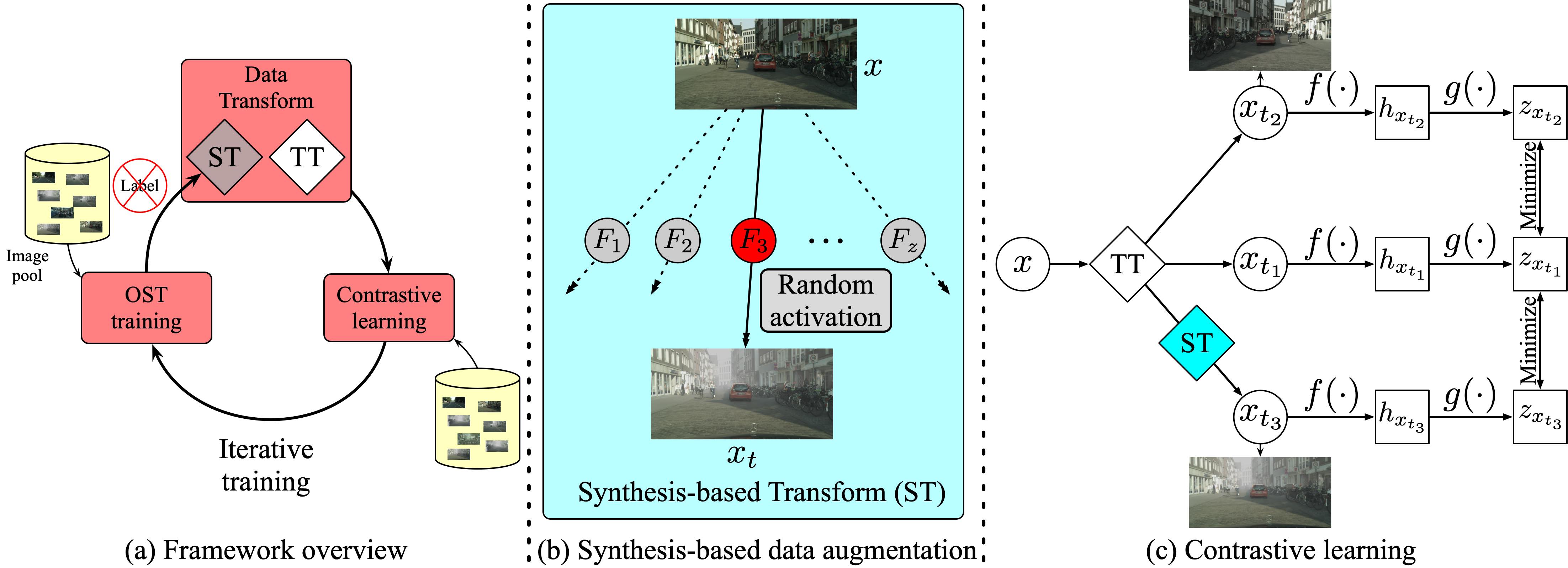

A PyTorch implementation of OSSCo based on the TCSVT 2023 paper *Fully Unsupervised Domain-Agnostic Image Retrieval*.

Install the dependencies:

```
conda install pytorch=1.7.1 torchvision cudatoolkit=11.0 -c pytorch
pip install pytorch-metric-learning
pip install thop
```

The Cityscapes FoggyDBF and CUFSF datasets are used in this repo. You can download them from their official websites or from MEGA. The data must be rearranged; please refer to the paper for the details of the train/val split. The data directory structure is as follows:

```
cityscapes
 ├── train
 │   ├── clear (clear images)
 │   │   ├── aachen_000000_000019_leftImg8bit.png
 │   │   ├── ...
 │   │   ├── bochum_000000_000313_leftImg8bit.png
 │   │   └── ...
 │   └── fog (fog images)
 │       same structure as clear
 │       ...
 └── val
     same structure as train
     ...
cufsf
 same structure as cityscapes
```
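The layout above can be indexed with a few lines of standard-library Python. The sketch below assumes the datasets sit under the `--data_root` folder (default `data`) and that each split folder holds one sub-folder per domain (e.g. `clear` and `fog`) containing the image files directly, as in the tree. The `collect_images` function and the `IMAGE_EXTS` set are hypothetical; the repo's own dataset code may organize things differently.

```python
from pathlib import Path

# Assumed image extensions; adjust for your copy of the data.
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def collect_images(data_root, data_name, split):
    """Return {domain_name: sorted list of image paths} for one split.

    Hypothetical helper. Assumes the layout
    <data_root>/<data_name>/<split>/<domain>/<image>, e.g.
    data/cityscapes/train/clear/aachen_000000_000019_leftImg8bit.png.
    """
    split_dir = Path(data_root) / data_name / split
    images = {}
    # Every sub-folder of the split is treated as one domain (clear, fog, ...).
    for domain_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        # Keep only files with an image extension; other files are ignored.
        files = sorted(f for f in domain_dir.iterdir()
                       if f.is_file() and f.suffix.lower() in IMAGE_EXTS)
        images[domain_dir.name] = files
    return images
```

Treating every sub-folder as a domain keeps the helper usable for both `cityscapes` (clear/fog) and `cufsf` without hard-coding domain names.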

Run either `main.py` or `comp.py` with the desired options, e.g.:

```
python main.py --data_name cufsf
python comp.py --data_name cufsf
```

```
optional arguments:
# common args
--data_root                   Datasets root path [default value is 'data']
--data_name                   Dataset name [default value is 'cityscapes'](choices=['cityscapes', 'cufsf'])
--method_name                 Compared method name [default value is 'ossco'](choices=['ossco', 'simclr', 'npid', 'proxyanchor', 'softtriple', 'pretrained'])
--proj_dim                    Projected feature dim for computing loss [default value is 128]
--temperature                 Temperature used in softmax [default value is 0.1]
--batch_size                  Number of images in each mini-batch [default value is 16]
--total_iter                  Number of backpropagation iterations to train [default value is 10000]
--ranks                       Recall@K ranks to evaluate [default value is [1, 2, 4, 8]]
--save_root                   Result saved root path [default value is 'result']
# args for ossco
--style_num                   Number of used styles [default value is 8]
--gan_iter                    Number of backpropagation iterations to train the GAN model [default value is 4000]
--rounds                      Number of rounds to train the whole model [default value is 5]
```
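If you want to reuse these options in your own scripts, the following is a minimal sketch of how the documented arguments could be declared with Python's `argparse`. It only mirrors the names, defaults, and choices listed above; the repo's actual `main.py`/`comp.py` parsers may differ, and the `build_parser` name is hypothetical.

```python
import argparse

METHODS = ["ossco", "simclr", "npid", "proxyanchor", "softtriple", "pretrained"]


def build_parser():
    """Hypothetical parser mirroring the documented options (not the repo's code)."""
    parser = argparse.ArgumentParser(description="OSSCo training options (sketch)")
    # common args
    parser.add_argument("--data_root", default="data", type=str, help="Datasets root path")
    parser.add_argument("--data_name", default="cityscapes", type=str,
                        choices=["cityscapes", "cufsf"], help="Dataset name")
    parser.add_argument("--method_name", default="ossco", type=str,
                        choices=METHODS, help="Compared method name")
    parser.add_argument("--proj_dim", default=128, type=int, help="Projected feature dim for computing loss")
    parser.add_argument("--temperature", default=0.1, type=float, help="Temperature used in softmax")
    parser.add_argument("--batch_size", default=16, type=int, help="Number of images in each mini-batch")
    parser.add_argument("--total_iter", default=10000, type=int, help="Number of backpropagation iterations")
    # nargs="+" lets callers write `--ranks 1 5`, as in the usage examples
    parser.add_argument("--ranks", default=[1, 2, 4, 8], type=int, nargs="+", help="Recall@K ranks to evaluate")
    parser.add_argument("--save_root", default="result", type=str, help="Result saved root path")
    # args for ossco
    parser.add_argument("--style_num", default=8, type=int, help="Number of used styles")
    parser.add_argument("--gan_iter", default=4000, type=int, help="Iterations to train the GAN model")
    parser.add_argument("--rounds", default=5, type=int, help="Rounds to train the whole model")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--method_name", "npid", "--data_name", "cufsf",
                                      "--batch_size", "64", "--ranks", "1", "5"])
    print(args.method_name, args.data_name, args.batch_size, args.ranks)  # npid cufsf 64 [1, 5]
```

Declaring `choices` makes `argparse` reject a misspelled dataset or method name before any training starts.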

For example, to train NPID on the CUFSF dataset and report R@1 and R@5:

```
python comp.py --method_name npid --data_name cufsf --batch_size 64 --ranks 1 5
```

To train OSSCo on the Cityscapes dataset with 16 randomly selected styles:

```
python main.py --method_name ossco --data_name cityscapes --style_num 16
```

The models are trained on one NVIDIA GTX TITAN (12 GB) GPU. Adam is used as the optimizer, with a learning rate of 1e-3 and a weight decay of 1e-6. The batch size is 16 for OSSCo, 32 for SimCLR, and 64 for NPID. For the GAN, the learning rate is 2e-4 and betas are (0.5, 0.999); all other hyper-parameters use their default values.
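To make the reported settings easy to reuse, the sketch below collects them in plain Python and builds the matching command line. The dictionary and function names are hypothetical. `ADAM_KWARGS` is written in the keyword form that `torch.optim.Adam(params, lr=..., betas=..., weight_decay=...)` accepts, but how the repo actually constructs its optimizers is not shown here. The sketch also assumes OSSCo is launched through `main.py` and the compared methods through `comp.py`, following the usage examples.

```python
# Values copied from the training settings in this README; the names
# BATCH_SIZE, ADAM_KWARGS and build_command are illustrative only.
BATCH_SIZE = {"ossco": 16, "simclr": 32, "npid": 64}

# Keyword arguments in the form accepted by torch.optim.Adam(params, **kwargs).
ADAM_KWARGS = {
    "model": {"lr": 1e-3, "weight_decay": 1e-6},
    "gan": {"lr": 2e-4, "betas": (0.5, 0.999)},
}


def build_command(method_name, data_name="cityscapes"):
    """Return the argv for a run with the reported batch size.

    Assumption: OSSCo runs through main.py, the compared methods through comp.py.
    """
    script = "main.py" if method_name == "ossco" else "comp.py"
    argv = ["python", script, "--method_name", method_name, "--data_name", data_name]
    # Methods without a reported batch size fall back to the CLI default (16).
    if method_name in BATCH_SIZE:
        argv += ["--batch_size", str(BATCH_SIZE[method_name])]
    return argv


if __name__ == "__main__":
    print(" ".join(build_command("simclr", "cufsf")))
    # python comp.py --method_name simclr --data_name cufsf --batch_size 32
```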

| Method | Clear --> Foggy | Foggy --> Clear | Clear <--> Foggy | Download | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| R@1 | R@2 | R@4 | R@8 | R@1 | R@2 | R@4 | R@8 | R@1 | R@2 | R@4 | R@8 | ||

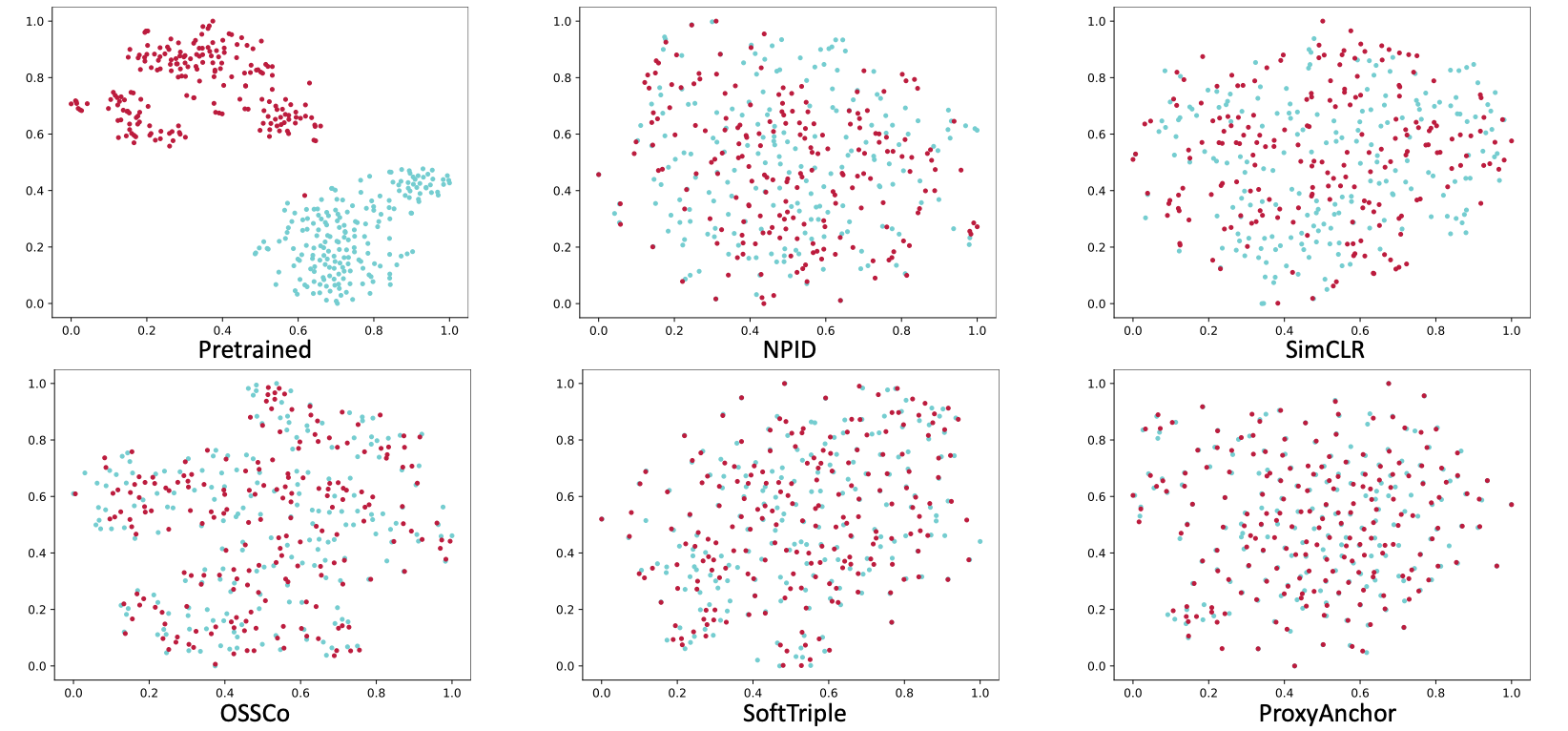

| Pretrained | 77.0 | 82.0 | 86.6 | 89.2 | 93.4 | 95.6 | 97.0 | 98.4 | 45.7 | 53.3 | 59.3 | 65.4 | ea3u |

| NPID | 22.8 | 29.4 | 37.2 | 46.4 | 21.6 | 28.4 | 35.6 | 43.6 | 5.9 | 8.3 | 11.2 | 14.1 | hu2k |

| SimCLR | 92.2 | 94.6 | 96.6 | 97.8 | 89.6 | 93.0 | 95.4 | 98.2 | 80.1 | 85.4 | 88.8 | 92.3 | 4jvm |

| SoftTriple | 99.6 | 99.8 | 100 | 100 | 99.8 | 99.8 | 99.8 | 100 | 98.4 | 99.7 | 99.8 | 99.9 | 6we5 |

| ProxyAnchor | 99.6 | 100 | 100 | 100 | 99.6 | 99.8 | 99.8 | 100 | 98.8 | 99.6 | 99.6 | 99.8 | 99k3 |

| OSSCo | 99.2 | 99.6 | 99.8 | 99.8 | 99.2 | 99.4 | 99.4 | 99.6 | 96.9 | 98.9 | 99.4 | 99.5 | cb2b |

| Method | Sketch → Image | | | | Image → Sketch | | | | Sketch ↔ Image | | | | Download |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | R@1 | R@2 | R@4 | R@8 | R@1 | R@2 | R@4 | R@8 | R@1 | R@2 | R@4 | R@8 | |
| Pretrained | 9.0 | 13.1 | 18.1 | 25.6 | 16.6 | 24.1 | 30.7 | 38.2 | 0.3 | 0.3 | 1.3 | 3.0 | imi4 |
| NPID | 37.2 | 48.7 | 63.8 | 73.4 | 40.7 | 52.8 | 67.8 | 73.9 | 27.1 | 34.4 | 46.0 | 60.1 | xvci |
| SimCLR | 24.1 | 39.2 | 56.3 | 72.4 | 32.7 | 45.2 | 56.3 | 68.8 | 15.1 | 21.9 | 33.7 | 49.0 | xtux |
| SoftTriple | 86.4 | 92.5 | 95.5 | 99.0 | 89.4 | 93.5 | 97.5 | 99.5 | 79.6 | 85.9 | 92.7 | 96.2 | 5qb9 |
| ProxyAnchor | 95.5 | 98.0 | 100 | 100 | 95.5 | 98.5 | 100 | 100 | 91.7 | 95.7 | 98.2 | 99.7 | inai |
| OSSCo | 82.4 | 93.5 | 97.5 | 99.5 | 88.4 | 98.0 | 99.5 | 99.5 | 55.0 | 70.4 | 87.2 | 94.5 | q6ji |
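The tables report Recall@K (R@K). Assuming the standard metric-learning definition, R@K is the percentage of queries for which at least one of the K most similar gallery items shares the query's label. The sketch below computes it with cosine similarity in plain Python. It is a generic illustration, not the repo's evaluation code: the function names are hypothetical, and how queries and galleries are formed for each column (e.g. Clear → Foggy versus Clear ↔ Foggy) follows the paper rather than this snippet.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall_at_k(query_feats, query_labels, gallery_feats, gallery_labels, ranks=(1, 2, 4, 8)):
    """Return {k: percentage of queries with a same-label item among the top-k gallery items}."""
    if not query_feats:
        raise ValueError("need at least one query")
    hits = {k: 0 for k in ranks}
    for feat, label in zip(query_feats, query_labels):
        # Rank gallery items by similarity to this query, most similar first.
        order = sorted(range(len(gallery_feats)),
                       key=lambda i: cosine(feat, gallery_feats[i]), reverse=True)
        for k in ranks:
            if any(gallery_labels[i] == label for i in order[:k]):
                hits[k] += 1
    return {k: 100.0 * hits[k] / len(query_feats) for k in ranks}


if __name__ == "__main__":
    # Toy example: two queries from one domain, three gallery items from another.
    queries = [[1.0, 0.0], [0.0, 1.0]]
    gallery = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]
    print(recall_at_k(queries, [0, 1], gallery, [0, 1, 0], ranks=(1, 2)))
    # {1: 50.0, 2: 100.0}
```

In the toy example the second query's nearest gallery item has the wrong label, so it only counts as a hit once K reaches 2.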

If you find OSSCo helpful, please consider citing:

```
@article{zheng2023fully,
  title={Fully Unsupervised Domain-Agnostic Image Retrieval},
  author={Zheng, Ziqiang and Ren, Hao and Wu, Yang and Zhang, Weichuan and Lu, Hong and Yang, Yang and Shen, Heng Tao},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  year={2023},
  publisher={IEEE}
}
```