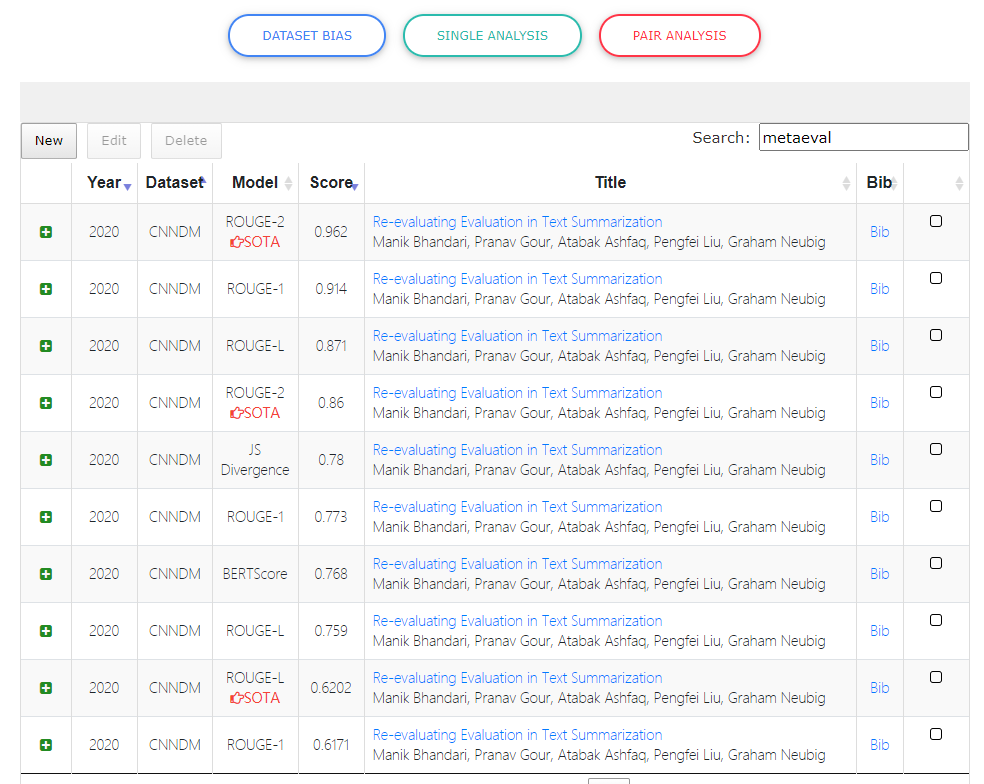

Authors: Manik Bhandari, Pranav Gour, Atabak Ashfaq, Pengfei Liu, Graham Neubig

Evaluating summarization is hard. Most papers still use ROUGE, but recently a host of metrics (e.g. BERTScore, MoverScore) report better correlation with human evaluation. However, these metrics were tested on older systems (the classic TAC meta-evaluation datasets are now 6-12 years old). How do they fare with SOTA models? Will conclusions found there hold with modern systems and summarization tasks?

Including all the system variants, there are a total of 25 system outputs: 11 extractive and 14 abstractive.

- All outputs

- All outputs used for human evaluation

- Semantic Content Units (SCUs) and manual annotations of outputs

- All outputs with human scores

Please read our reproducibility instructions in addition to our paper in order to reproduce this work for another dataset.

- Calculate the metric scores for each summary and create a scores dict in the format below. See the section below to calculate scores with a new metric. Make sure to include `litepyramid_recall` in the scores dict, which is the metric used by human evaluators.

- Run the analysis notebook on the scores dict to get all the graphs and tables used in the paper.
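
Before running the analysis, it may help to check that every system entry actually carries the human-evaluation score. The following is a minimal sketch, assuming the scores dict follows the nested layout described in this README (`doc_id` -> `system_summaries` -> system name -> `scores`). The helper name `find_missing_metric` and the toy data are hypothetical and not part of the repository.

```python
from typing import Any, Dict, List, Tuple


def find_missing_metric(
    scores_dict: Dict[Any, Dict[str, Any]],
    metric: str = "litepyramid_recall",
) -> List[Tuple[Any, str]]:
    """Return (doc_id, system_name) pairs whose 'scores' entry lacks `metric`."""
    missing = []
    for doc_id, doc in scores_dict.items():
        for system_name, entry in doc.get("system_summaries", {}).items():
            if metric not in entry.get("scores", {}):
                missing.append((doc_id, system_name))
    return missing


# Toy data for illustration only; all values are made up.
toy = {
    "d1": {
        "doc_id": "d1",
        "ref_summ": "a reference summary",
        "system_summaries": {
            "sys_a": {
                "system_summary": "summary from system a",
                "scores": {"rouge_1_f_score": 0.4, "litepyramid_recall": 0.5},
            },
            "sys_b": {
                "system_summary": "summary from system b",
                "scores": {"rouge_1_f_score": 0.3},  # human score missing
            },
        },
    }
}

missing = find_missing_metric(toy)
print(missing)
```

An empty list would mean every system summary has the human score attached. For a real run, the dict would be loaded from the pickle file first with `pickle.load`.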

- Update `scorer.py` such that (1) any setup required by your metric is done in the `__init__` function of the scorer, since the scorer will be used to score all systems, and (2) your metric is added in the `score` function as

      elif self.metric == "name_of_my_new_metric":
          scores = call_to_my_function_which_gives_scores(passing_appropriate_arguments)

  where `scores` is a list of scores corresponding to each summary in a file. It should be a list of dictionaries, e.g. `[{'precision': 0.0, 'recall': 1.0} ...]`

- Calculate the scores and the scores dict using `python get_scores.py --data_path ../selected_docs_for_human_eval/<abs or ext> --output_path ../score_dicts/abs_new_metric.pkl --log_path ../logs/scores.log -n_jobs 1 --metric <name of metric>`

- Your scores dict is generated at the output path.

- Merge it with the scores dict with human scores provided in `scores_dicts/` using `python score_dict_update.py --in_path <score dicts folder with the dicts to merge> --out_path <output path to place the merged dict pickle> -action merge`

- Your dict will be merged with the one with human scores and the output will be placed in `out_path`. You can now run the analysis notebook on the scores dict to get all the graphs and tables used in the paper.
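
The `scorer.py` changes described in the first step can be pictured with a toy example. This is only a sketch: the class `ToyScorer`, the metric name `unigram_overlap`, and the `score(refs, hyps)` signature are assumptions made for illustration and do not reflect the actual contents of `scorer.py`. It shows one-time setup in `__init__`, a metric branch in `score`, and a return value that is a list of dictionaries, one per summary.

```python
from collections import Counter
from typing import Dict, List


class ToyScorer:
    """Hypothetical stand-in for a scorer; not the repository's class."""

    def __init__(self, metric: str):
        self.metric = metric
        if self.metric == "unigram_overlap":
            # One-time setup lives here because the same scorer object
            # is reused to score every system.
            self.tokenize = lambda text: text.lower().split()

    def score(self, refs: List[str], hyps: List[str]) -> List[Dict[str, float]]:
        if self.metric == "unigram_overlap":
            scores = [self._overlap(r, h) for r, h in zip(refs, hyps)]
        else:
            raise ValueError(f"unknown metric: {self.metric}")
        return scores

    def _overlap(self, ref: str, hyp: str) -> Dict[str, float]:
        ref_counts = Counter(self.tokenize(ref))
        hyp_counts = Counter(self.tokenize(hyp))
        # Counter intersection keeps the minimum count of each shared token.
        matched = sum((ref_counts & hyp_counts).values())
        precision = matched / max(sum(hyp_counts.values()), 1)
        recall = matched / max(sum(ref_counts.values()), 1)
        return {"precision": precision, "recall": recall}


scorer = ToyScorer("unigram_overlap")
result = scorer.score(["the cat sat on the mat"], ["the cat sat"])
print(result)
```

The toy metric is deliberately trivial. A real metric would replace `_overlap` and keep the same output shape.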

    {
        doc_id: {
            'doc_id': value of doc id,
            'ref_summ': reference summary of this doc,
            'system_summaries': {
                system_name: {
                    'system_summary': the generated summary,
                    'scores': {
                        'js-2': the actual score,
                        'rouge_l_f_score': the actual score,
                        'rouge_1_f_score': the actual score,
                        'rouge_2_f_score': the actual score,
                        'bert_f_score': the actual score
                    }
                }
            }
        }
    }
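
To make the layout concrete, here is a minimal sketch that walks a scores dict of this shape, averages each metric per system, and computes a system-level Pearson correlation against `litepyramid_recall`. The function names and the toy values are hypothetical, and this is not the analysis notebook's implementation; the paper's analysis may use other correlation measures and settings.

```python
from collections import defaultdict
from math import sqrt


def system_means(scores_dict, metric):
    """Average `metric` over all documents, separately for each system."""
    per_system = defaultdict(list)
    for doc in scores_dict.values():
        for system_name, entry in doc["system_summaries"].items():
            per_system[system_name].append(entry["scores"][metric])
    return {name: sum(vals) / len(vals) for name, vals in per_system.items()}


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def system_level_correlation(scores_dict, metric, human="litepyramid_recall"):
    metric_means = system_means(scores_dict, metric)
    human_means = system_means(scores_dict, human)
    systems = sorted(metric_means)
    return pearson([metric_means[s] for s in systems],
                   [human_means[s] for s in systems])


def toy_doc(doc_id, rows):
    """Build one document entry; rows maps system -> (metric, human) scores."""
    return {
        "doc_id": doc_id,
        "ref_summ": "placeholder reference",
        "system_summaries": {
            name: {
                "system_summary": "placeholder summary",
                "scores": {"rouge_2_f_score": m, "litepyramid_recall": h},
            }
            for name, (m, h) in rows.items()
        },
    }


# Made-up values, chosen so the metric is exactly half the human score.
toy = {
    "d1": toy_doc("d1", {"sys_a": (0.10, 0.2), "sys_b": (0.25, 0.5), "sys_c": (0.40, 0.8)}),
    "d2": toy_doc("d2", {"sys_a": (0.20, 0.4), "sys_b": (0.35, 0.7), "sys_c": (0.50, 1.0)}),
}

r = system_level_correlation(toy, "rouge_2_f_score")
print(round(r, 6))
```

Because the toy metric is a linear function of the toy human score, the correlation here is at its maximum. Real score dicts will give lower and more informative values.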

    @inproceedings{Bhandari-2020-reevaluating,
        title = "Re-evaluating Evaluation in Text Summarization",
        author = "Bhandari, Manik and Narayan Gour, Pranav and Ashfaq, Atabak and Liu, Pengfei and Neubig, Graham",
        booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP)",
        year = "2020"
    }