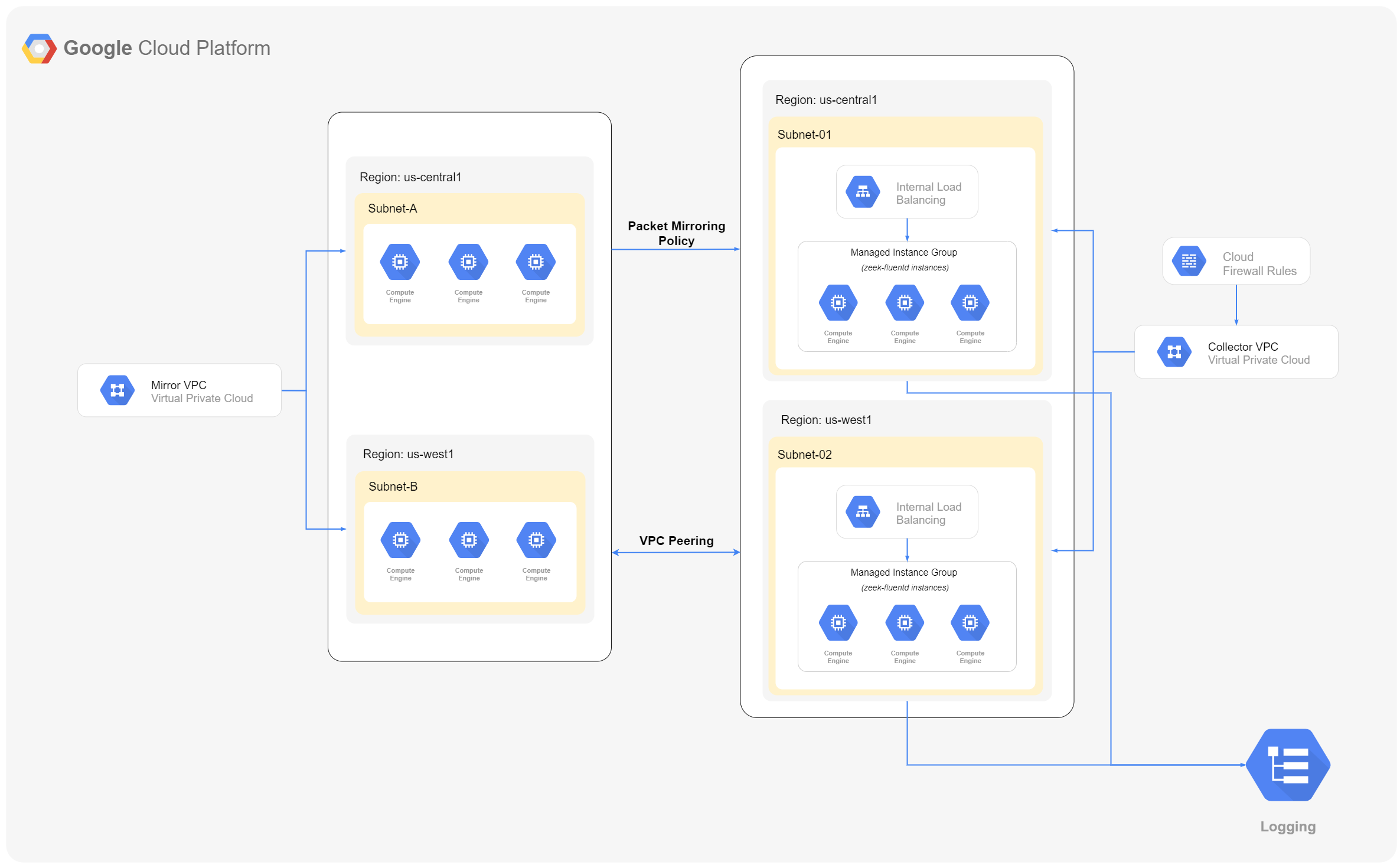

This module simplifies the deployment of Zeek so GCP customers can feed raw packets from VPC Packet Mirroring and produce rich security telemetry for threat detection and investigation in our Chronicle Security Platform.

This module is meant for use with Terraform v0.13.5 or above.

Examples of how to use these modules can be found in the examples folder.

- Basic Configurations: Demonstrates how to use google zeek automation module with basic configurations.

- Mirror Resource Filtering: Demonstrates how to specify mirror vpc network sources, for packet mirroring policy.

- Packet Mirroring Traffic Filtering: Demonstrates how to use traffic filtering parameters for packet-mirroring policy.

- Creates regional managed instance groups, using network peering between the mirror and collector VPCs, to collect logs from mirror VPC sources.

- Enables regional packet mirroring policies for mirror VPC sources such as:

  - mirror-vpc subnets

  - mirror-vpc tags

  - mirror-vpc instances

  with optional parameters such as `ip_protocols`, `direction`, and `cidr_ranges`.

- Packages logs so they can be sent to the Chronicle platform.

- Packer Image should exist before running terraform script.

- Terraform is installed on the machine where Terraform is executed.

- The Service Account you execute the module with has the right permissions.

- The Compute Engine APIs are active on the project you will launch the infrastructure on.

- User must create a GCS Bucket.

- If a Mirror VPC is in a different project, the user must manually set up network peering from the Mirror VPC to the Collector VPC before traffic from that project's VPC can be mirrored.

- If a Mirror VPC is in a different project, the user must also add an egress firewall rule in the Mirror VPC so that traffic can be redirected to the Collector VPC.
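
The two cross-project steps above can also be written as Terraform instead of being done by hand. The following is a minimal sketch, not part of this module: the resource names, project IDs, network paths, and CIDR range are placeholders borrowed from the usage example in this README, and it assumes the credentials in use are allowed to modify the mirror project.

```hcl
# Sketch only: every name, project ID, and CIDR below is a placeholder.

# Peering from the mirror VPC (in the mirror project) to the collector VPC.
resource "google_compute_network_peering" "mirror_to_collector" {
  name         = "mirror-to-collector"
  network      = "projects/mirror-project-123/global/networks/test-mirror"
  peer_network = "projects/collector-project-123/global/networks/collector-vpc"
}

# Egress rule in the mirror VPC that allows traffic toward the collector subnet.
resource "google_compute_firewall" "mirror_egress_to_collector" {
  project   = "mirror-project-123"
  name      = "allow-egress-to-collector"
  network   = "projects/mirror-project-123/global/networks/test-mirror"
  direction = "EGRESS"

  # Assumed to match collector_vpc_subnet_cidr in the module call.
  destination_ranges = ["10.11.0.0/24"]

  allow {
    protocol = "all"
  }
}
```

A peering only becomes active once both networks have a peering resource pointing at each other, so check whether the collector side already exists before applying this.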

There are two ways to use the Packer image:

- Use our pre-configured Packer image, which is published on GCP and publicly available.

  - The Terraform script is already configured to use it through the `golden_image` variable (i.e. `projects/zeekautomation/global/images/zeek-fluentd-golden-image-v1`).

- Build your own custom image by following this documentation.

  - Once the custom image is created, set the `golden_image` variable to your custom image name to use it in the Terraform script.
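
As an illustration of the second option, a custom image could be wired in roughly like this. The image path is a hypothetical placeholder, and the path format is assumed to mirror the default value of `golden_image`.

```hcl
module "google_zeek_automation" {
  source = "<link>/google_zeek_automation"

  # Hypothetical custom image; replace with the image you built with Packer.
  golden_image = "projects/my-project/global/images/my-zeek-fluentd-image"

  # other variables follow ...
}
```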

Service account or user credentials with the following roles must be used to provision the resources of this module:

- Service Account User - `roles/iam.serviceAccountUser`

- Service Account Token Creator - `roles/iam.serviceAccountTokenCreator`

- Compute Admin - `roles/compute.admin`

- Compute Network Admin - `roles/compute.networkAdmin`

- Compute Packet Mirroring User - `roles/compute.packetMirroringUser`

- Compute Packet Mirroring Admin - `roles/compute.packetMirroringAdmin`

- Logs Writer - `roles/logging.logWriter`

- Monitoring Metric Writer - `roles/monitoring.metricWriter`

- Storage Admin - `roles/storage.admin`

In addition to above roles, for Mirror VPCs residing in different projects than Collector VPC, the Service account email used for provisioning Collector VPC resources must be added as IAM Member to respective Mirror VPC project with the following role:

- Compute Packet Mirroring Admin - `roles/compute.packetMirroringAdmin`
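
If you prefer to grant these roles with Terraform rather than through the console, a sketch along the following lines may help. It is not part of this module: the project IDs and service account email are placeholders taken from the usage example, and it has to be applied with an identity that is allowed to change IAM policy on both projects.

```hcl
locals {
  # Placeholder service account; replace with the one used for provisioning.
  provisioner_member = "serviceAccount:service-account@collector-project-123.iam.gserviceaccount.com"

  # Roles listed above for the collector project.
  provisioner_roles = [
    "roles/iam.serviceAccountUser",
    "roles/iam.serviceAccountTokenCreator",
    "roles/compute.admin",
    "roles/compute.networkAdmin",
    "roles/compute.packetMirroringUser",
    "roles/compute.packetMirroringAdmin",
    "roles/logging.logWriter",
    "roles/monitoring.metricWriter",
    "roles/storage.admin",
  ]
}

# One IAM binding per role in the collector project.
resource "google_project_iam_member" "provisioner" {
  for_each = toset(local.provisioner_roles)

  project = "collector-project-123"
  role    = each.value
  member  = local.provisioner_member
}

# Extra role needed in each mirror project that differs from the collector project.
resource "google_project_iam_member" "mirror_project_packet_mirroring" {
  project = "mirror-project-123"
  role    = "roles/compute.packetMirroringAdmin"
  member  = local.provisioner_member
}
```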

In order to operate with the Service Account you must activate the following APIs on the project where the Service Account was created:

- Compute Engine API - `compute.googleapis.com`

- Service Usage API - `serviceusage.googleapis.com`

- Identity and Access Management (IAM) API - `iam.googleapis.com`

- Cloud Resource Manager API - `cloudresourcemanager.googleapis.com`

- Cloud Logging API - `logging.googleapis.com`

- Cloud Monitoring API - `monitoring.googleapis.com`

- Cloud Storage API - `storage.googleapis.com`
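
The same list can be enabled from Terraform. This is a minimal sketch rather than something the module does for you; the project ID is a placeholder, and the Service Usage API generally needs to be enabled already before Terraform can turn on the others.

```hcl
resource "google_project_service" "required" {
  for_each = toset([
    "compute.googleapis.com",
    "serviceusage.googleapis.com",
    "iam.googleapis.com",
    "cloudresourcemanager.googleapis.com",
    "logging.googleapis.com",
    "monitoring.googleapis.com",
    "storage.googleapis.com",
  ])

  # Placeholder project ID: the project where the service account was created.
  project = "collector-project-123"
  service = each.value

  # Leave the APIs enabled when this configuration is destroyed.
  disable_on_destroy = false
}
```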

```hcl
module "google_zeek_automation" {
  source                = "<link>/google_zeek_automation"
  gcp_project           = "collector-project-123"
  service_account_email = "service-account@collector-project-123.iam.gserviceaccount.com"
  collector_vpc_name    = "collector-vpc"

  # Collector subnets to create, one per mirror VPC network and region.
  subnets = [
    {
      mirror_vpc_network          = "projects/mirror-project-123/global/networks/test-mirror"
      collector_vpc_subnet_cidr   = "10.11.0.0/24"
      collector_vpc_subnet_region = "us-west1"
    },
  ]

  # Mirror sources: subnets whose traffic will be mirrored.
  mirror_vpc_subnets = {
    "mirror-project-123--mirror_vpc_name--us-west1" = ["projects/mirror-project-123/regions/us-west1/subnetworks/subnet-01"]
  }
}
```

Note: A packet mirroring policy requires a mirror source to be specified before the script is run. At least one of the three variables `mirror_vpc_instances`, `mirror_vpc_tags`, or `mirror_vpc_subnets` must be set when running the Terraform script.

Then run the following commands in the root folder:

- `terraform init` to get the plugins

- `terraform plan` to see the infrastructure plan

- `terraform apply` to apply the infrastructure build

- `terraform destroy` to destroy the built infrastructure

- Terraform v0.13.5

- Terraform Provider for GCP v3.55
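
One way to pin these versions in the root configuration is shown below. This is a sketch: the exact constraints are assumptions derived from the versions listed above, so adjust them to whatever the module has actually been tested with.

```hcl
terraform {
  # The module is meant for Terraform v0.13.5 or above.
  required_version = ">= 0.13.5"

  required_providers {
    google = {
      source = "hashicorp/google"
      # Assumed constraint: 3.55 or any later 3.x release.
      version = "~> 3.55"
    }
  }
}
```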

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| gcp_project | GCP Project ID where the collector VPC will be provisioned. | `string` | n/a | yes |
| golden_image | Name of the zeek-fluentd Packer image. | `string` | `"projects/zeekautomation/global/images/zeek-fluentd-golden-image-v1"` | no |
| collector_vpc_name | Name of the collector VPC. | `string` | n/a | yes |
| mirror_vpc_instances | Mirror VPC Instances list to be mirrored. (Note: Mirror VPC should reside in the same project as collector VPC because cross project referencing of instances is not allowed by GCP) | `map(list(string))` | `{}` | no |
| mirror_vpc_subnets | Mirror VPC Subnets list to be mirrored. | `map(list(string))` | `{}` | no |
| mirror_vpc_tags | Mirror VPC Tags list to be mirrored. | `map(list(string))` | `{}` | no |
| service_account_email | User's Service Account Email. | `string` | n/a | yes |
| subnets | The list of subnets being created | `list(object({` | n/a | yes |

| Name | Description |
|---|---|
| autoscaler_ids | Autoscaler identifier for the resource with format projects/{{project}}/regions/{{region}}/autoscalers/{{name}} |
| collector_vpc_network_id | The identifier of the VPC network with format projects/{{project}}/global/networks/{{name}}. |
| collector_vpc_subnets_ids | Sub Network identifier for the resource with format projects/{{project}}/regions/{{region}}/subnetworks/{{name}} |
| forwarding_rule_ids | Forwarding Rule identifier for the resource with format projects/{{project}}/regions/{{region}}/forwardingRules/{{name}} |
| health_check_id | Health Check identifier for the resource with format projects/{{project}}/global/healthChecks/{{name}} |
| intance_group_ids | Managed Instance Group identifier for the resource with format {{disk.name}} |
| intance_groups | The full URL of the instance group created by the manager. |
| intance_template_ids | Instance Templates identifier for the resource with format projects/{{project}}/global/instanceTemplates/{{name}} |
| loadbalancer_ids | Internal Load Balancer identifier for the resource with format projects/{{project}}/regions/{{region}}/backendServices/{{name}} |
| packet_mirroring_policy_ids | Packet Mirroring Policy identifier for the resource with format projects/{{project}}/regions/{{region}}/packetMirrorings/{{name}} |
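
These outputs can be re-exported from the root configuration or passed to other resources. A short sketch, assuming the module is instantiated as `google_zeek_automation` as in the usage example; the output names on the left are arbitrary.

```hcl
# Expose the collector network so other configurations can reference it.
output "zeek_collector_network_id" {
  value = module.google_zeek_automation.collector_vpc_network_id
}

# Expose the packet mirroring policies created by the module.
output "zeek_packet_mirroring_policy_ids" {
  value = module.google_zeek_automation.packet_mirroring_policy_ids
}
```

After `terraform apply`, running `terraform output` prints these values.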

The Google Zeek Automation uses external scripts to perform a few tasks that are not implemented by Terraform providers. Because of this the Google Zeek Automation needs a copy of service account credentials to pass to these scripts. Credentials can be provided via two mechanisms:

- Explicitly passed to the Google Zeek Automation with the `credentials` variable. This approach typically uses the same credentials for the `google` provider and the Google Zeek Automation:

  ```hcl
  provider "google" {
    credentials = file(var.credentials)
  }

  module "google_zeek_automation" {
    source = "<link>/google_zeek_automation"

    # other variables follow ...
  }
  ```

- Implicitly provided by the Application Default Credentials flow, which typically uses the `GOOGLE_APPLICATION_CREDENTIALS` environment variable:

  ```hcl
  # `GOOGLE_APPLICATION_CREDENTIALS` must be set in the environment before Terraform is run.
  provider "google" {
    # Terraform will check the `GOOGLE_APPLICATION_CREDENTIALS` variable, so no `credentials`
    # value is needed here.
  }

  module "google_zeek_automation" {
    source = "<link>/google_zeek_automation"

    # Google Zeek Automation will also check the `GOOGLE_APPLICATION_CREDENTIALS` environment variable.
    # other variables follow ...
  }
  ```

- VPC Network

- Network Subnets

- Network Firewall

- VPC Network Peering

- Instance Template

- Managed Instance Group

- Internal Load Balancer

- Packet Mirroring

This repo has the following folder structure:

- examples: Contains examples of how to use the module.

- files: Contains the startup script file.

- packer: Contains what you need to generate your own Packer image.

- test: Automated tests for the module.

Contributions to this repo are very welcome and appreciated! If you find a bug or want to add a new feature or even contribute an entirely new module, we are very happy to accept pull requests, provide feedback, and run your changes through our automated test suite.

Please see contributing guidelines for information on contributing to this module.

- If you get the error:

  ```
  Error: Error waiting for Adding Network Peering: An IP range in the peer network (X.X.X.X/X) overlaps with an IP range in the local network (X.X.X.X/X) allocated by resource (projects/<project-id>/regions/<region>/subnetworks/<subnet-id>).
  ```

  Reason: A subnet CIDR range in one peered VPC network cannot overlap with a static route in another peered network. This rule covers both subnet routes and static routes.

  Refer: https://cloud.google.com/vpc/docs/vpc-peering#restrictions

  Solution: Create a separate configuration for the mirror VPCs whose CIDR ranges clash with those of the existing infrastructure. Applying it launches a new collector VPC and mirrors the new set of mirror VPCs, which resolves the overlapping CIDR problem.