Weakly-Supervised Spatio-Temporally Grounding Natural Sentence in Video

This repo contains the main baselines for the VID-sentence dataset introduced in WSSTG. Please refer to our paper and the repo for information about the VID-sentence dataset.

Task

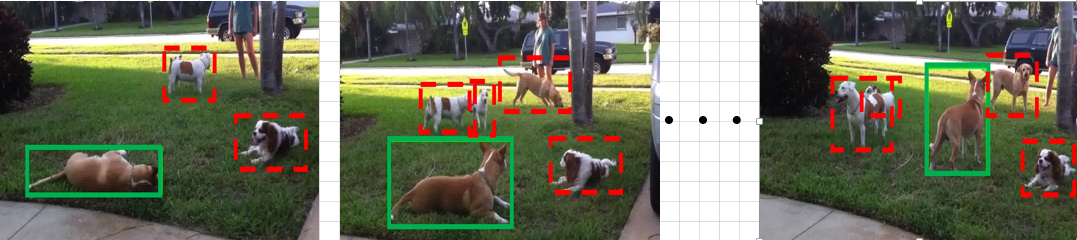

Description: "A brown and white dog is lying on the grass and then it stands up."

The proposed WSSTG task aims to localize a spatio-temporal tube (i.e., the sequence of green bounding boxes) in the video which semantically corresponds to the given sentence, with no reliance on any spatio-temporal annotations during training.
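To make the notion of a spatio-temporal tube concrete, here is a minimal sketch that stores a tube as a mapping from frame index to a bounding box and measures how well two tubes overlap. The function names and the overlap definition (per-frame IoU summed over shared frames, divided by the number of frames covered by either tube) are assumptions for illustration, not necessarily the exact metric used in this repo.

```python
from __future__ import division


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def tube_iou(tube_a, tube_b):
    """Assumed tube overlap: per-frame IoU summed over the frames both
    tubes share, divided by the number of frames in either tube."""
    all_frames = set(tube_a) | set(tube_b)
    if not all_frames:
        return 0.0
    shared = set(tube_a) & set(tube_b)
    total = sum(box_iou(tube_a[f], tube_b[f]) for f in shared)
    return total / len(all_frames)


# Toy tubes: frame index -> (x1, y1, x2, y2). Values are made up.
predicted = {0: (0, 0, 10, 10), 1: (0, 0, 10, 10)}
ground_truth = {0: (0, 0, 10, 10), 1: (5, 0, 15, 10)}
overlap = tube_iou(predicted, ground_truth)
```

In this toy case the boxes match exactly in frame 0 and overlap by one third in frame 1, so the tube overlap is the mean of the two.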

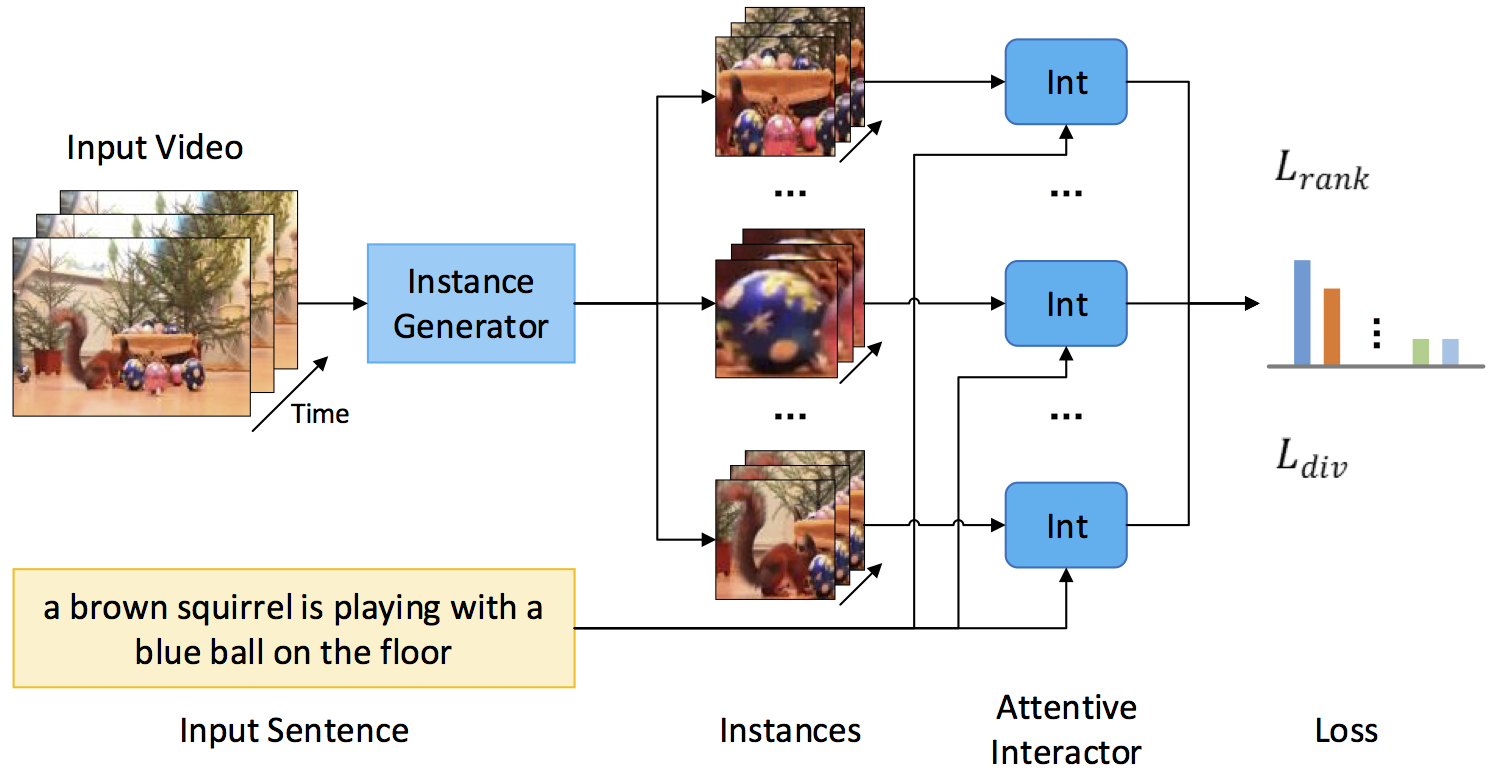

Architecture

The architecture of the proposed method.

Requirements: software

- PyTorch (version 0.4.0)

- Python 2.7

- numpy

- scipy

- magic

- easydict

- dill

- matplotlib

- tensorboardX
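Before running the scripts, it can help to check that the packages listed above are importable. The sketch below is one way to do that. The import names are assumptions taken from the list (for example, `torch` for PyTorch and `magic` for the magic package), and `find_missing` is a hypothetical helper, not part of this repo.

```python
import importlib

# Import names assumed from the requirements list above.
REQUIRED_MODULES = [
    "torch", "numpy", "scipy", "magic",
    "easydict", "dill", "matplotlib", "tensorboardX",
]


def find_missing(modules):
    """Return the subset of module names that cannot be imported."""
    missing = []
    for name in modules:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing
```

Calling `find_missing(REQUIRED_MODULES)` returns an empty list when everything is installed, and otherwise the names that still need to be installed.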

Installation

- Clone the WSSTG repository and the VID-sentence repository

git clone https://github.com/JeffCHEN2017/WSSTG.git

git clone https://github.com/JeffCHEN2017/VID-Sentence.git

ln -s VID-sentence_ROOT/data/ILSVRC WSSTG_ROOT/data

- Download tube proposals, RGB features and I3D features from Google Drive.

- Extract the *.tar files and make symlinks between the downloaded data and the desired data folder

tar xvf tubePrp.tar

ln -s tubePrp $WSSTG_ROOT/data/tubePrp

tar xvf vid_i3d.tar vid_i3d

ln -s vid_i3d $WSSTG_ROOT/data/vid_i3d

ln -s $WSSTG_ROOT/data/vid_i3d/val test

tar xvf vid_rgb.tar vid_rgb

ln -s vid_rgb $WSSTG_ROOT/data/vid_rgb

ln -s $WSSTG_ROOT/data/vid_rgb/vidTubeCacheFtr/val test

Note: We extract the tube proposals using the method proposed by Gkioxari and Malik. A Python implementation is provided by Yamaguchi et al. We extract single-frame proposals and RGB features for each frame using a Faster R-CNN model pretrained on the COCO dataset, which is provided by Jianwei Yang. We extract I3D-RGB and I3D-flow features using the model provided by Carreira and Zisserman.
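After the symlink steps above, a quick check that the data folder has the expected entries may save a failed training run. The sketch below assumes the links end up as `data/ILSVRC`, `data/tubePrp`, `data/vid_i3d` and `data/vid_rgb` under the WSSTG root, as the commands suggest. `missing_data_dirs` is a hypothetical helper, not part of this repo.

```python
import os

# Directory names assumed from the symlink commands above.
EXPECTED_DIRS = ["ILSVRC", "tubePrp", "vid_i3d", "vid_rgb"]


def missing_data_dirs(wsstg_root, expected=EXPECTED_DIRS):
    """Return expected entries under <wsstg_root>/data that are not
    directories. os.path.isdir follows symlinks, so a broken link
    is reported as missing."""
    data_dir = os.path.join(wsstg_root, "data")
    return [name for name in expected
            if not os.path.isdir(os.path.join(data_dir, name))]
```

For example, `missing_data_dirs(os.environ["WSSTG_ROOT"])` returns an empty list once all four links resolve.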

Training

cd $WSSTG_ROOT

sh scripts/train_video_emb_att.sh

Notice: Because of changes in the batch size and the random seed, the performance may differ slightly from our submission. We provide a checkpoint that achieves similar performance (38.1 vs. 38.2 on accuracy@0.5) to the model we reported in the paper.
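For readers unfamiliar with the accuracy@0.5 figure quoted above, the sketch below shows one common reading of it: the fraction of test samples whose predicted tube overlaps the ground-truth tube with an IoU of at least 0.5. This definition, the `>=` comparison and the function name are assumptions for illustration. The evaluation code in this repo is the authoritative source.

```python
def accuracy_at(overlaps, threshold=0.5):
    """Fraction of samples whose tube overlap reaches the threshold.

    `overlaps` holds one IoU value per test sample (assumed input).
    """
    if not overlaps:
        return 0.0
    hits = sum(1 for o in overlaps if o >= threshold)
    return hits / float(len(overlaps))


# Made-up overlap values, for illustration only.
example_overlaps = [0.7, 0.4, 0.5, 0.1]
example_accuracy = accuracy_at(example_overlaps)
```

With these made-up values, two of the four samples reach the threshold.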

Testing

Download the checkpoint from Google Drive, put it in WSSTG_ROOT/data/models and run

cd $WSSTG_ROOT

sh scripts/test_video_emb_att.sh

License

WSSTG is released under the CC-BY-NC 4.0 LICENSE (refer to the LICENSE file for details).

Citing WSSTG

If you find this repo useful in your research, please consider citing:

@inproceedings{chen2019weakly,

Title={Weakly-Supervised Spatio-Temporally Grounding Natural Sentence in Video},

Author={Chen, Zhenfang and Ma, Lin and Luo, Wenhan and Wong, Kwan-Yee K},

Booktitle={ACL},

year={2019}

}

Contact

You can contact Zhenfang Chen by sending email to chenzhenfang2013@gmail.com