- Create and activate a Conda environment:

conda create -y -n chatbot python=3.11

conda activate chatbot

- Install required packages:

pip install -r requirements.txt

- Set up environment variables:

  - Write your `OPENAI_API_KEY` in the `.env` file. A template can be found in `.env.example`.

source .env
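As a quick sanity check for the step above, the sketch below parses a `.env`-style file and reports whether `OPENAI_API_KEY` is set. It is a minimal illustration using only the standard library; the helper names (`parse_env`, `load_env_file`, `missing_keys`) are hypothetical and not part of this repository, and the parser only handles simple `KEY=VALUE` lines.

```python
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and '#' comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, _, value = line.partition("=")
        # Drop surrounding quotes, if any.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Return the parsed file contents, or an empty dict if the file is absent."""
    file = Path(path)
    return parse_env(file.read_text()) if file.exists() else {}


def missing_keys(env: dict[str, str], required=("OPENAI_API_KEY",)) -> list[str]:
    """List required keys that are absent or empty."""
    return [key for key in required if not env.get(key)]
```

Calling `missing_keys(load_env_file())` from the project root returns an empty list when the key is present in `.env`.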

To start the application, use the following command:

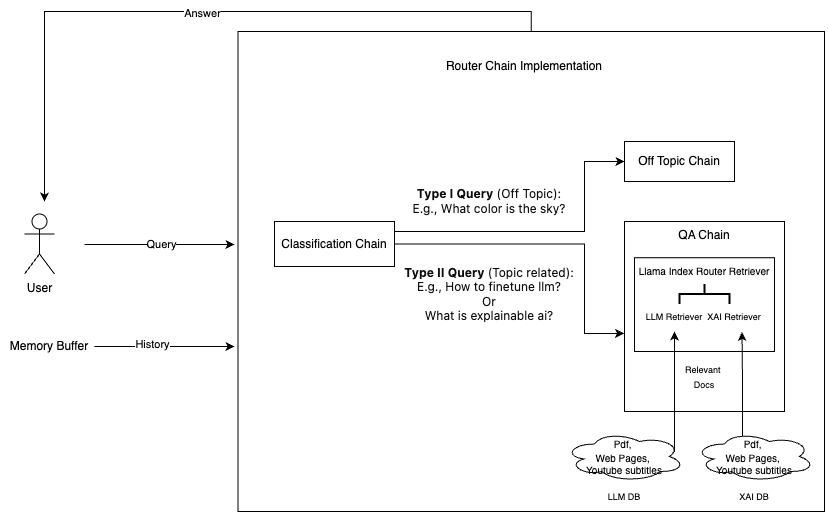

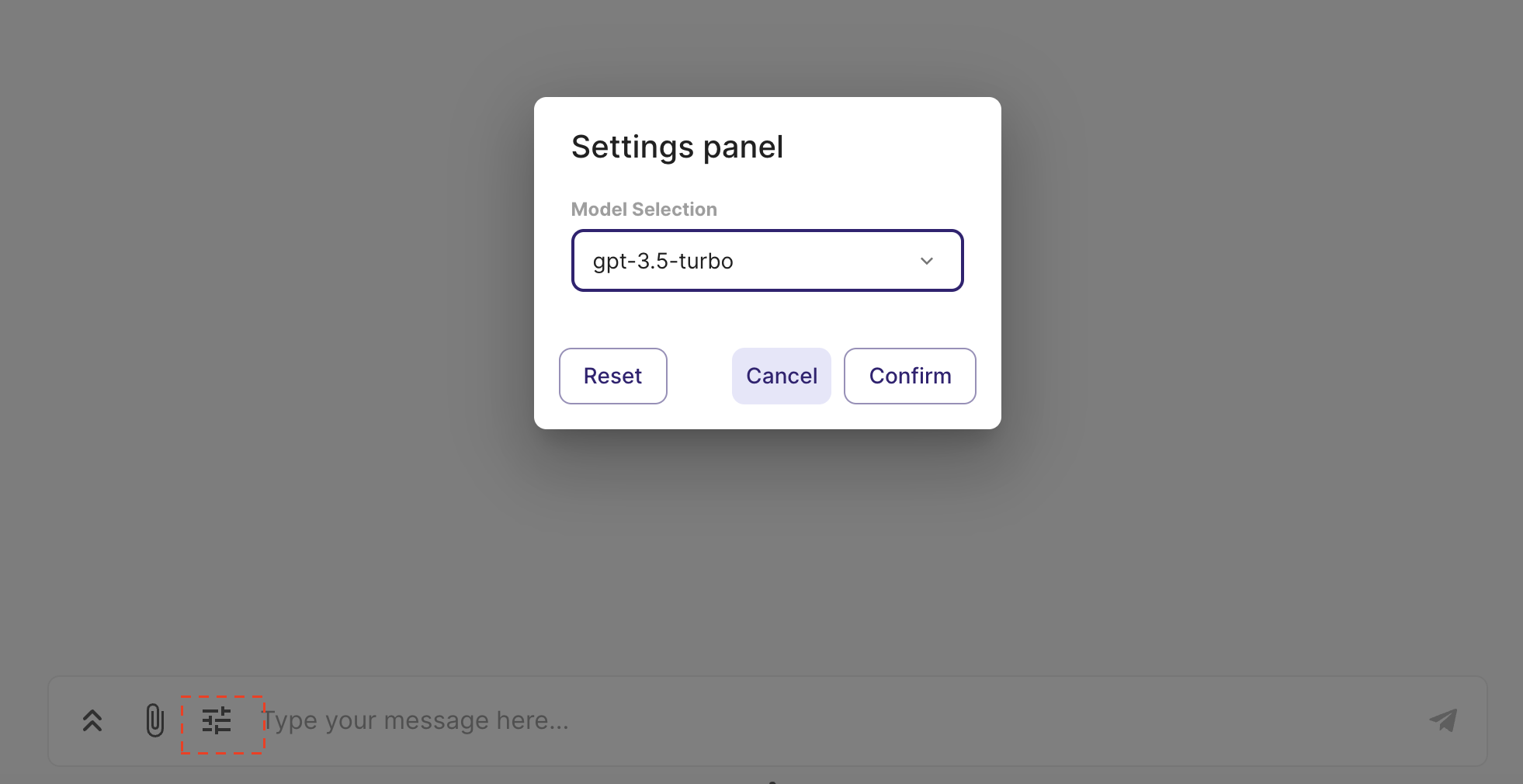

chainlit run app.pyUsers have the option to select the specific LLM (language learning model) they prefer for generating responses. The switch between different LLMs can be accomplished within a single conversation session.

- Various Information Sources: The chatbot can retrieve information from web pages, YouTube videos, and PDFs.

- Source Display: You can view the source of the information at the end of each answer.

- Model Identification: Each response indicates which LLM generated it.

- Router Retriever: Easy to adapt to different domains, as each domain can be equipped with its own retriever.

- Memory Management: The chatbot is equipped with a conversation memory feature. If the memory exceeds 500 tokens, it is automatically summarized.
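The memory behaviour described in the last item can be sketched as follows. This is an illustrative, dependency-free approximation, not the code used in `app.py`: token counting is a crude whitespace split rather than a real tokenizer, `naive_summarize` is a placeholder for an LLM summarization call, and the class name `SummarizingMemory` is hypothetical. The actual application may also keep the most recent messages verbatim instead of folding everything into the summary.

```python
from dataclasses import dataclass, field
from typing import Callable


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words stand in for model tokens.
    return len(text.split())


def naive_summarize(messages: list[str]) -> str:
    # Placeholder for an LLM call: keep the first three words of each message.
    return " | ".join(" ".join(m.split()[:3]) for m in messages)


@dataclass
class SummarizingMemory:
    max_tokens: int = 500
    summarize: Callable[[list[str]], str] = naive_summarize
    summary: str = ""
    messages: list[str] = field(default_factory=list)

    def total_tokens(self) -> int:
        return count_tokens(self.summary) + sum(count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        if self.total_tokens() > self.max_tokens:
            # Over budget: fold the old summary and all messages into a new summary.
            parts = ([self.summary] if self.summary else []) + self.messages
            self.summary = self.summarize(parts)
            self.messages = []

    def context(self) -> str:
        # Text that would be prepended to the next prompt.
        return "\n".join(([self.summary] if self.summary else []) + self.messages)
```

With the default `max_tokens=500`, messages accumulate unchanged until the budget is exceeded, at which point they are replaced by a single summary string.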

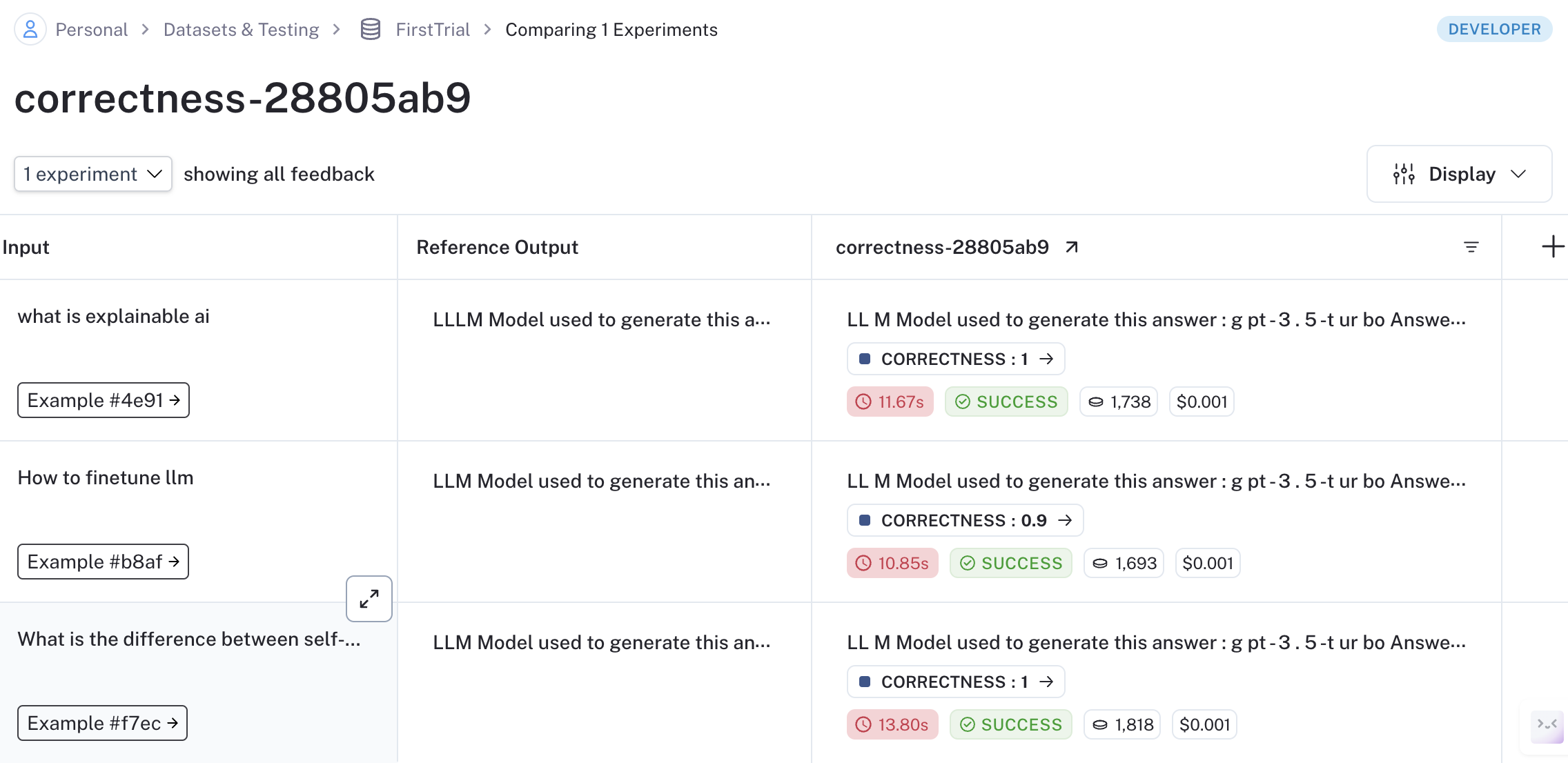
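To make the router retriever idea from the feature list concrete, here is a toy sketch in which each domain has its own retriever and a router picks one per query. Everything in it is an assumption for illustration: routing is done by keyword overlap (a real router would more likely ask an LLM or compare embeddings), and `RouterRetriever` and `make_keyword_retriever` are hypothetical names, not the classes used in this project.

```python
from typing import Callable

Retriever = Callable[[str], list[str]]


def make_keyword_retriever(docs: list[str]) -> Retriever:
    """Build a toy retriever returning documents that share a word with the query."""
    def retrieve(query: str) -> list[str]:
        words = set(query.lower().split())
        return [d for d in docs if words & set(d.lower().split())]
    return retrieve


class RouterRetriever:
    def __init__(self, routes: dict[str, tuple[set[str], Retriever]], default: str):
        # routes maps a domain name to (routing keywords, retriever for that domain).
        self.routes = routes
        self.default = default

    def pick_domain(self, query: str) -> str:
        words = set(query.lower().split())
        best, best_hits = self.default, 0
        for name, (keywords, _) in self.routes.items():
            hits = len(words & keywords)
            if hits > best_hits:
                best, best_hits = name, hits
        return best

    def retrieve(self, query: str) -> tuple[str, list[str]]:
        domain = self.pick_domain(query)
        return domain, self.routes[domain][1](query)
```

Supporting a new domain then only requires adding one entry to `routes`; the rest of the pipeline stays unchanged.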

To evaluate model generations against human references, or to log outputs for specific test queries, use LangSmith.

- Register an account at LangSmith.

- Add your `LANGCHAIN_API_KEY` to the `.env` file.

- Execute the script with your dataset name:

python langsmith_tract.py --dataset_name <YOUR DATASET NAME>

- Modify the data path in `langsmith_evaluation/config.toml` if necessary (e.g., path to a CSV file with question and answer pairs).
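For reference, a CSV of question and answer pairs like the one mentioned above could be read as sketched below. The column names `question` and `answer` and the helper `load_qa_pairs` are assumptions for illustration; check the evaluation script for the format it actually expects.

```python
import csv
import io


def load_qa_pairs(handle, question_col="question", answer_col="answer"):
    """Read question/answer rows from an open CSV file, skipping incomplete rows."""
    pairs = []
    for row in csv.DictReader(handle):
        question = (row.get(question_col) or "").strip()
        answer = (row.get(answer_col) or "").strip()
        if question and answer:
            pairs.append({"question": question, "answer": answer})
    return pairs


# Example with an in-memory file; a real call would pass open(path, newline="").
sample = io.StringIO("question,answer\nWhat is 2+2?,4\n,missing question\n")
pairs = load_qa_pairs(sample)
```

Rows with an empty question or answer are dropped so that every pair can serve as a reference during evaluation.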

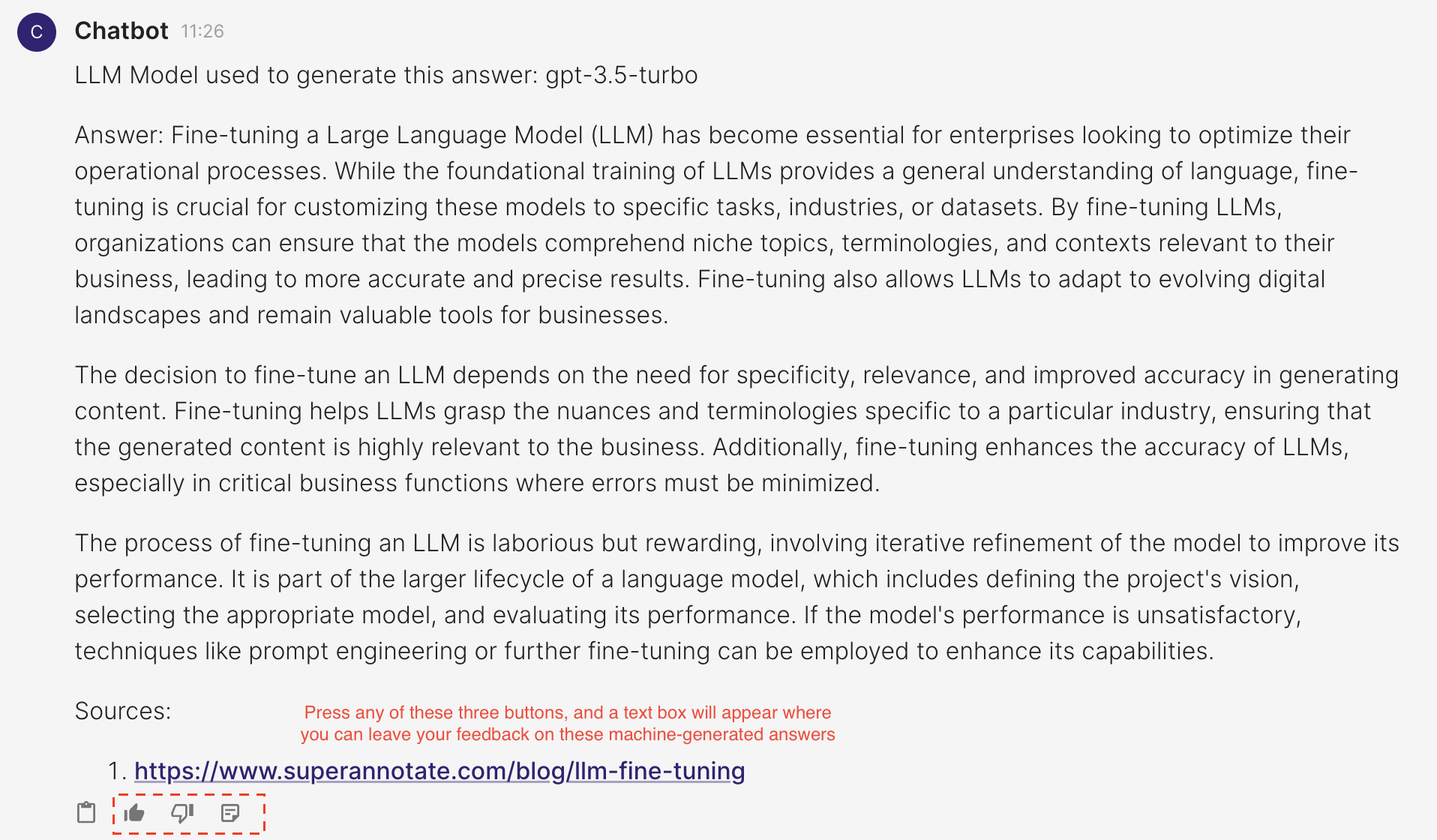

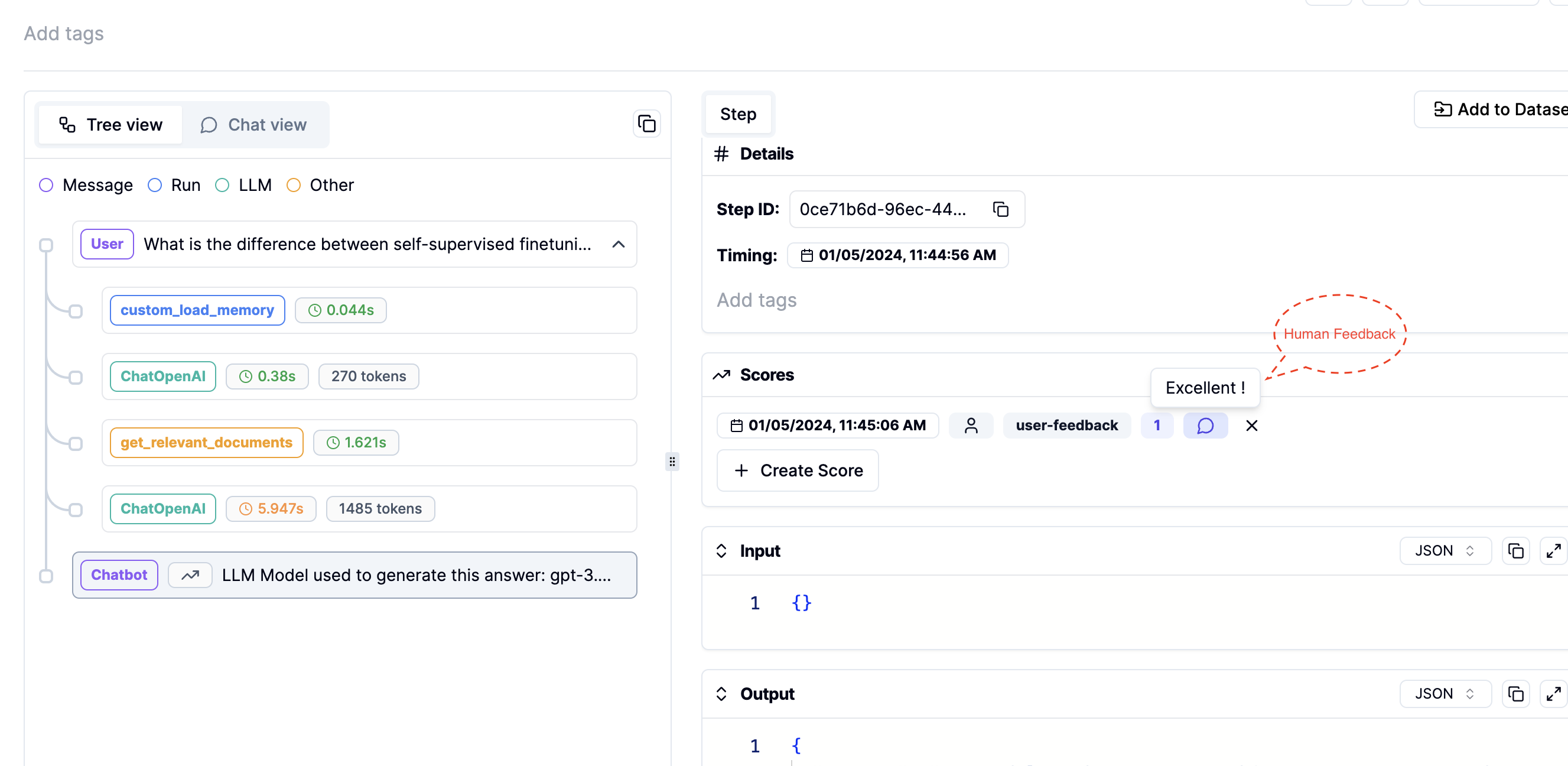

Use Literal AI to record human feedback for each generated answer. Follow these steps:

- Register an account at Literal AI.

- Add your `LITERAL_API_KEY` to the `.env` file.

- Once the `LITERAL_API_KEY` is added to your environment, run the command `chainlit run app.py`. You will see three new icons as shown in the image below, where you can leave feedback on the generated answers.

- Track this human feedback in your Literal AI account. You can also view the prompts or intermediate steps used to generate these answers.

This guide details the steps for setting up user authentication in your application. Each authenticated user will have the ability to view their own past interactions with the chatbot.

- Add your APP_LOGIN_USERNAME and APP_LOGIN_PASSWORD to the `.env` file.

- Run the following command to create a secret, which is essential for securing user sessions:

chainlit create-secret

Copy the generated CHAINLIT_AUTH_SECRET and add it to your .env file.

- Once you launch your application, you will see a login page.

- Log in with your APP_LOGIN_USERNAME and APP_LOGIN_PASSWORD.

- Upon successful login, each user will be directed to a page displaying their personal chat history with the chatbot.
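The credential check behind the login steps above might look roughly like this. It is a sketch under stated assumptions, not the project's actual code: `check_login` is a hypothetical helper, and in a Chainlit app a check like this would typically sit inside the password authentication callback, which is not shown here.

```python
import hmac


def check_login(username: str, password: str, env: dict[str, str]) -> bool:
    """Compare submitted credentials with APP_LOGIN_USERNAME / APP_LOGIN_PASSWORD."""
    expected_user = env.get("APP_LOGIN_USERNAME", "")
    expected_pass = env.get("APP_LOGIN_PASSWORD", "")
    if not expected_user or not expected_pass:
        # Refuse all logins when credentials are not configured.
        return False
    # compare_digest avoids leaking information through comparison timing.
    user_ok = hmac.compare_digest(username.encode(), expected_user.encode())
    pass_ok = hmac.compare_digest(password.encode(), expected_pass.encode())
    return user_ok and pass_ok
```

In practice `env` would be `os.environ` after the `.env` file has been loaded.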

Below is a preview of the web interface for the chatbot:

To customize the chatbot according to your needs, define your configurations in the config.toml file.