We create and provide open-source code for a model and training scheme called BrainFounder. BrainFounder is part of the movement towards large-scale models capable of few-shot or zero-shot learning in the neuroimaging AI space (known as Foundation Models). Our results show that BrainFounder provides a promising foundation for future Foundation Models by leveraging massive unlabeled data through self-supervised learning. We validated these results on the Brain Tumor Segmentation (BraTS) Challenge and the ATLAS v2.0 challenge.

This repository provides the official implementation of BrainFounder Stage 1 and 2 Pretraining and Fine-tuning methods presented in the paper:

BrainFounder: Towards Brain Foundation Models for Neuroimage Analysis by Joseph Cox1, Peng Liu1, Skylar E. Stolte1, Yunchao Yang2, Kang Liu3, Kyle B. See1, Huiwen Ju4, and Ruogu Fang1,5,6,7

1 J. Crayton Pruitt Family Department of Biomedical Engineering, Herbert Wertheim College of Engineering, University of Florida, Gainesville, USA

2 University of Florida Research Computing, University of Florida, Gainesville, USA

3 Department of Physics, University of Florida, Gainesville, FL, 32611, USA

4 NVIDIA Corporation, Santa Clara, CA, USA

5 Center for Cognitive Aging and Memory, McKnight Brain Institute, University of Florida, Gainesville, USA

6 Department of Electrical and Computer Engineering, Herbert Wertheim College of Engineering, University of Florida, Gainesville, USA

7 Department of Computer and Information Science and Engineering, Herbert Wertheim College of Engineering, University of Florida, Gainesville, USA


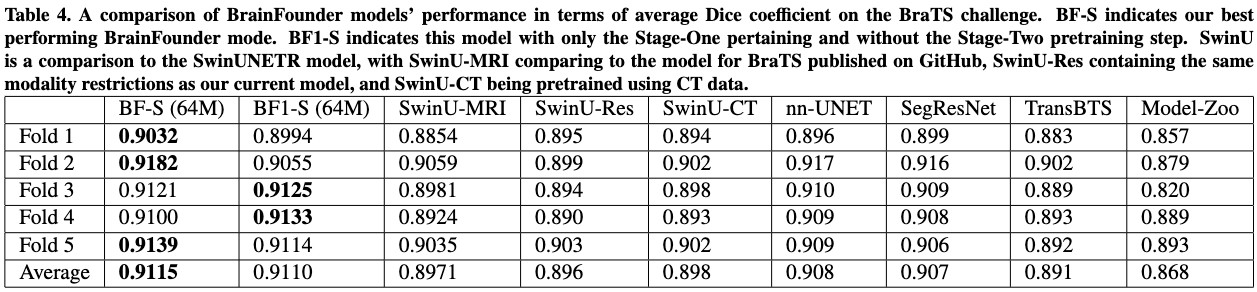

Our pretrained model outperformed current state-of-the-art models on BraTS across 5 folds.


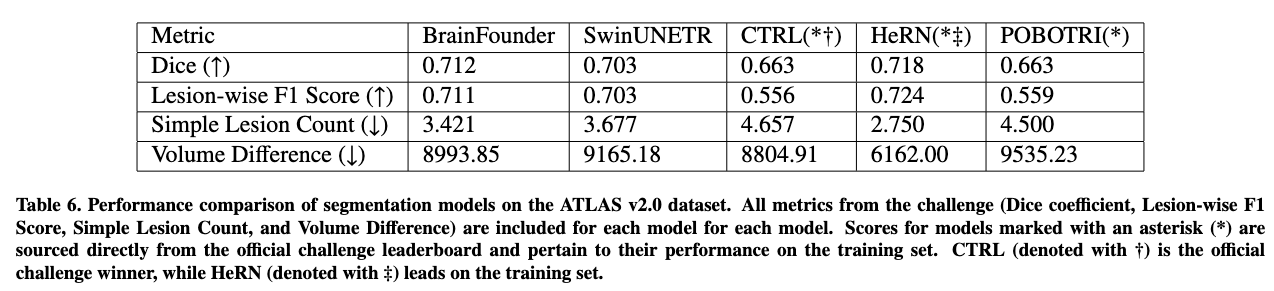

Our model performed competitively with winning models on the ATLAS challenge despite not using ensemble learning.

This repository is organized into two main directories:

- `pretrain`: This directory contains scripts and pretrained models for Stage 1 pretraining. Our models are pretrained on the UK Biobank dataset using Self-Supervised Learning (SSL) heads and a 3-way loss function. This stage builds the feature extraction that downstream tasks rely on.

- `downstream`: This directory includes scripts and pretrained models for Stage 2 pretraining and fine-tuning. The models are further trained and fine-tuned on downstream tasks using datasets such as BraTS and ATLAS, as detailed in our accompanying paper. This stage adapts our pretrained models to specific tasks.

Detailed instructions for pretraining/fine-tuning your own models using our scheme can be found in each directory. To get started, follow these steps:

- Clone the repository:

      git clone https://www.github.com/lab-smile/BrainSegFounder.git
      cd BrainSegFounder

- Install dependencies. This project was trained using Project MONAI's singularity containers, which we highly recommend using. The dependencies for this project are Project MONAI's dev requirements and the NPNL Lab's BIDSIO library. You can install both with pip using the following commands:

      pip install -r https://raw.githubusercontent.com/Project-MONAI/MONAI/dev/requirements-dev.txt
      pip install -U git+https://github.com/npnl/bidsio

- Navigate to the desired directory to begin working with the code:

      cd pretrain
      # OR
      cd downstream

- Refer to the `README.md` files in each directory for instructions on running the scripts in those directories.
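Before running any scripts, it can help to confirm that the installation step worked. The following is a minimal sketch, not part of the repository: the helper `check_dependencies` is hypothetical, and the import names are assumptions (`torch` for PyTorch, `monai` for Project MONAI, and `bidsio` for the BIDSIO library).

```python
from importlib.util import find_spec


def check_dependencies(modules):
    """Return a mapping of top-level module name -> True if it is importable."""
    return {name: find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    # Assumed import names; adjust them if your environment differs.
    status = check_dependencies(["torch", "monai", "bidsio"])
    for name, available in status.items():
        print(f"{name}: {'found' if available else 'MISSING'}")
```

Any module reported as missing suggests that the corresponding `pip install` command did not complete in the active environment or container.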

JSON files containing the folds used for our data and PyTorch pretrained models can be downloaded from this Google Drive link.
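As an illustration of how a fold file might be consumed, the sketch below splits a datalist into training and validation entries for one fold. The schema is an assumption, not something this README specifies: it presumes a MONAI-style datalist with a top-level `"training"` list whose entries each carry an integer `"fold"` field. The helpers `split_by_fold` and `load_fold` are hypothetical, so inspect the downloaded JSON files and adjust the key names if they differ.

```python
import json
from pathlib import Path


def split_by_fold(datalist, val_fold, list_key="training", fold_key="fold"):
    """Split datalist entries into (train, val) lists by their fold index.

    Assumes `datalist[list_key]` is a list of dicts and that each dict
    stores its fold index under `fold_key` (an assumed schema).
    """
    entries = datalist[list_key]
    train = [e for e in entries if e.get(fold_key) != val_fold]
    val = [e for e in entries if e.get(fold_key) == val_fold]
    return train, val


def load_fold(json_path, val_fold):
    """Read a fold JSON file from disk and split it for the given fold."""
    with Path(json_path).open() as f:
        return split_by_fold(json.load(f), val_fold)
```

Calling `load_fold(path, val_fold=0)` would hold out fold 0 for validation and keep the remaining folds for training, which matches the usual cross-validation setup when results are reported across 5 folds.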

If you encounter any problems while using the repository, please submit an issue on GitHub. Include as much detail as possible - screenshots, error messages, and steps to reproduce - to help us understand and fix the problem efficiently.

We welcome contributions to this repository. If you have suggestions or improvements, please fork this repository and submit a pull request.

This project is licensed under the GNU GPL v3.0 license. See the LICENSE file for details.

If you use this code, please cite our paper.

We use SwinUNETR as our base model, from: https://github.com/Project-MONAI/research-contributions/tree/main/SwinUNETR