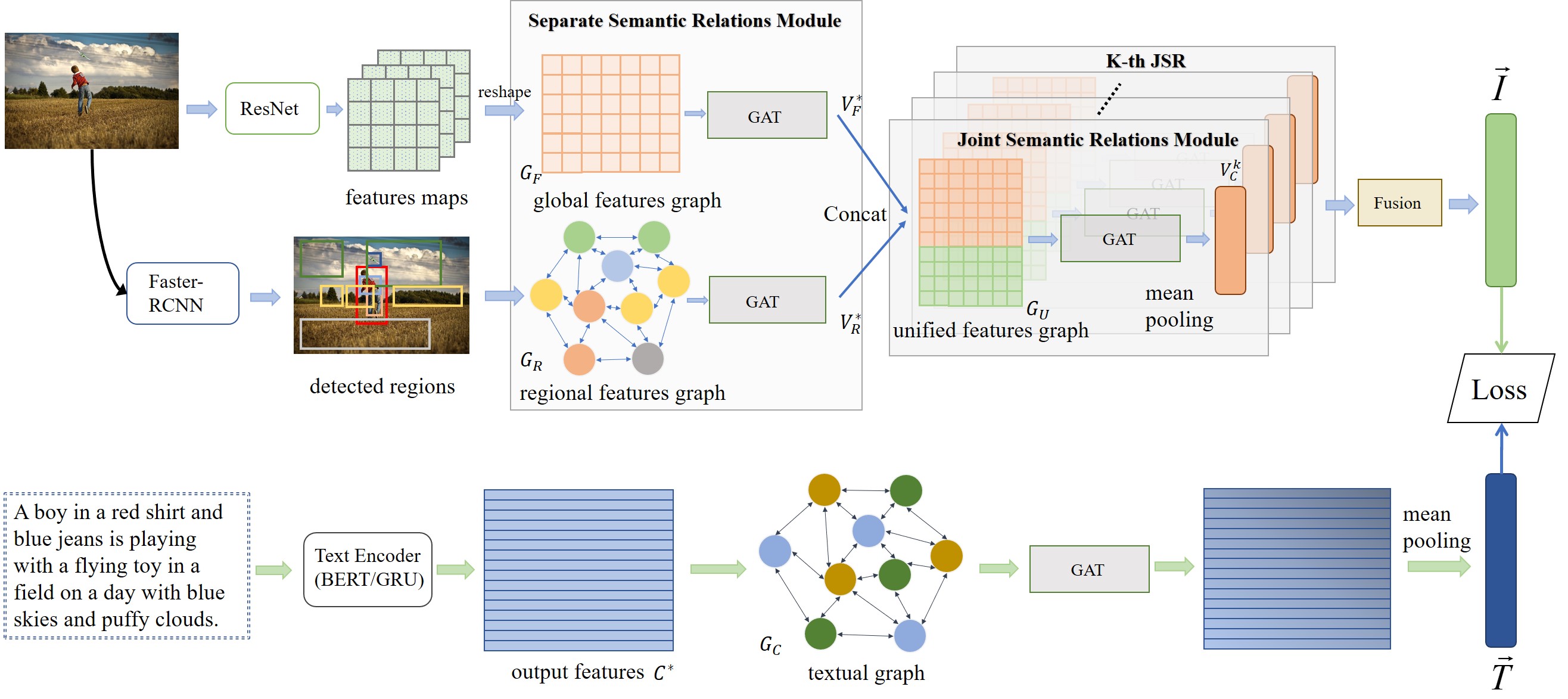

This is the official source code for the Dual Semantic Relations Attention Network (DSRAN) proposed in our journal paper *Learning Dual Semantic Relations with Graph Attention for Image-Text Matching* (TCSVT 2020). It is built on top of VSE++ in PyTorch.

The framework of DSRAN:

The results on the MSCOCO and Flickr30K datasets (with BERT or GRU as the text encoder):

| GRU | Image-to-Text | | | Text-to-Image | | | |
|---|---|---|---|---|---|---|---|
| Dataset | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | Rsum |
| MSCOCO-1K | 80.4 | 96.7 | 98.7 | 64.2 | 90.4 | 95.8 | 526.2 |
| MSCOCO-5K | 57.6 | 85.6 | 91.9 | 41.5 | 71.9 | 82.1 | 430.6 |
| Flickr30k | 79.6 | 95.6 | 97.5 | 58.6 | 85.8 | 91.3 | 508.4 |

| BERT | Image-to-Text | | | Text-to-Image | | | |
|---|---|---|---|---|---|---|---|
| Dataset | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | Rsum |
| MSCOCO-1K | 80.6 | 96.7 | 98.7 | 64.5 | 90.8 | 95.8 | 527.1 |
| MSCOCO-5K | 57.9 | 85.3 | 92.0 | 41.7 | 72.7 | 82.8 | 432.4 |
| Flickr30k | 80.5 | 95.5 | 97.9 | 59.2 | 86.0 | 91.9 | 511.0 |
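Rsum is the sum of the six recall values in a row (R@1, R@5 and R@10 for both image-to-text and text-to-image retrieval). The short Python check below recomputes it for every row of the two tables; `RESULTS` and `rsum` are illustrative names, not part of the repository.

```python
# Rsum = R@1 + R@5 + R@10 (image-to-text) + R@1 + R@5 + R@10 (text-to-image).
RESULTS = {
    # (encoder, dataset): (i2t R@1, R@5, R@10, t2i R@1, R@5, R@10, reported Rsum)
    ("GRU", "MSCOCO-1K"): (80.4, 96.7, 98.7, 64.2, 90.4, 95.8, 526.2),
    ("GRU", "MSCOCO-5K"): (57.6, 85.6, 91.9, 41.5, 71.9, 82.1, 430.6),
    ("GRU", "Flickr30k"): (79.6, 95.6, 97.5, 58.6, 85.8, 91.3, 508.4),
    ("BERT", "MSCOCO-1K"): (80.6, 96.7, 98.7, 64.5, 90.8, 95.8, 527.1),
    ("BERT", "MSCOCO-5K"): (57.9, 85.3, 92.0, 41.7, 72.7, 82.8, 432.4),
    ("BERT", "Flickr30k"): (80.5, 95.5, 97.9, 59.2, 86.0, 91.9, 511.0),
}


def rsum(recalls):
    """Sum the six recall percentages, rounded to one decimal place."""
    # Rounding to one decimal avoids floating-point noise in the comparison.
    return round(sum(recalls), 1)


for key, row in RESULTS.items():
    *recalls, reported = row
    assert rsum(recalls) == reported, key
    print(key, rsum(recalls))
```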

We recommend the following dependencies:

- Python 3.6

- PyTorch 1.1.0

- NumPy (>1.12.1)

- torchtext

- pycocotools

- nltk
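Before downloading anything, a quick sketch like the following can confirm that the dependencies are importable. It assumes the usual import names (`torch` for PyTorch, `numpy` for NumPy) and only checks that each module can be found; it does not check versions such as PyTorch 1.1.0. `check_dependencies` is a hypothetical helper, not part of the repository.

```python
import importlib.util
import sys

# Assumed import names for the dependencies above (pip package names may differ).
REQUIRED_MODULES = ["torch", "numpy", "torchtext", "pycocotools", "nltk"]


def check_dependencies(modules=REQUIRED_MODULES):
    """Return {module_name: found?} without actually importing the packages."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "(3.6 recommended)")
    for name, found in check_dependencies().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```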

Download the raw images, pre-computed image features, pre-trained BERT models, the pre-trained ResNet152 model and our pre-trained DSRAN models. The raw images can be downloaded from VSE++:

```bash
wget http://www.cs.toronto.edu/~faghri/vsepp/data.tar
wget http://www.cs.toronto.edu/~faghri/vsepp/vocab.tar
```

We refer to the path of the files extracted from `data.tar` as `$DATA_PATH`. Only the raw images (the `coco` and `f30k` folders) are used.

The pre-computed image features can be obtained from VLP. Extract these zip files into the folder `data/joint-pretrain`. We refer to the path of the extracted region bounding-box file (`.h5`) as `$REGION_BBOX_FILE`, and to the regional feature directory (`feat_cls_1000/` for COCO, `trainval/` for Flickr30K) as `$FEATURE_PATH`.

The pre-trained ResNet152 model can be downloaded from torchvision and placed in the root directory of the repository:

```bash
wget https://download.pytorch.org/models/resnet152-b121ed2d.pth
```
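The hexadecimal suffix in `resnet152-b121ed2d.pth` follows torch.hub's naming convention, under which it is the first digits of the file's SHA-256 digest. Assuming that convention, a download can be checked with the sketch below; `hash_suffix_matches` is a hypothetical helper, and its regular expression is modeled on the one torch.hub uses.

```python
import hashlib
import os
import re

# Pattern modeled on torch.hub: captures <hash> from "name-<hash>.ext".
HASH_REGEX = re.compile(r"-([a-f0-9]*)\.")


def hash_suffix_matches(path):
    """Return True if the file's SHA-256 hex digest starts with the hash in its name.

    Assumes the filename-<sha256 prefix>.ext convention, e.g. resnet152-b121ed2d.pth.
    """
    match = HASH_REGEX.search(os.path.basename(path))
    if match is None or not match.group(1):
        raise ValueError(f"no hash suffix in {path!r}")
    sha256 = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so a large checkpoint is never fully in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            sha256.update(chunk)
    return sha256.hexdigest().startswith(match.group(1))
```

For example, `hash_suffix_matches("resnet152-b121ed2d.pth")` run from the root directory should return `True` for an intact download.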

Our trained DSRAN models can be downloaded as `runs.zip` from Google Drive, or as `GRU.zip` and `BERT.zip` from BaiduNetDisk (extraction code: 1119). There are 8 models in total (4 for each dataset).

The pre-trained BERT models are obtained from an old version of transformers. Note that newer versions of transformers offer a simpler way of using BERT; we will update the code in the future. The pre-trained models we use can be downloaded from the same Google Drive and BaiduNetDisk (extraction code: 1119) links. We refer to the path of the files extracted from `uncased_L-12_H-768_A-12.zip` as `$BERT_PATH`.

```
├── data/
| ├── coco/                /* MSCOCO raw images
| | ├── images/
| | | ├── train2014/
| | | ├── val2014/
| | ├── annotations/
| ├── f30k/                /* Flickr30K raw images
| | ├── images/
| | ├── dataset_flickr30k.json
| ├── joint-pretrain/      /* pre-computed image features
| | ├── COCO/
| | | ├── region_feat_gvd_wo_bgd/
| | | | ├── feat_cls_1000/   /* $FEATURE_PATH
| | | | ├── coco_detection_vg_thresh0.2_feat_gvd_checkpoint_trainvaltest.h5   /* $REGION_BBOX_FILE
| | | ├── annotations/
| | ├── flickr30k/
| | | ├── region_feat_gvd_wo_bgd/
| | | | ├── trainval/   /* $FEATURE_PATH
| | | | ├── flickr30k_detection_vg_thresh0.2_feat_gvd_checkpoint_trainvaltest.h5   /* $REGION_BBOX_FILE
| | | ├── annotations/
```
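A minimal sketch for checking the layout above, assuming the script runs from the directory that contains `data/` and that the relative paths match the tree exactly. `EXPECTED` and `missing_paths` are hypothetical names; adjust the list if your `$FEATURE_PATH` or `$REGION_BBOX_FILE` live elsewhere.

```python
import os

# Relative paths transcribed from the directory tree above.
EXPECTED = [
    "data/coco/images/train2014",
    "data/coco/images/val2014",
    "data/coco/annotations",
    "data/f30k/images",
    "data/f30k/dataset_flickr30k.json",
    "data/joint-pretrain/COCO/region_feat_gvd_wo_bgd/feat_cls_1000",
    "data/joint-pretrain/COCO/region_feat_gvd_wo_bgd/"
    "coco_detection_vg_thresh0.2_feat_gvd_checkpoint_trainvaltest.h5",
    "data/joint-pretrain/COCO/annotations",
    "data/joint-pretrain/flickr30k/region_feat_gvd_wo_bgd/trainval",
    "data/joint-pretrain/flickr30k/region_feat_gvd_wo_bgd/"
    "flickr30k_detection_vg_thresh0.2_feat_gvd_checkpoint_trainvaltest.h5",
    "data/joint-pretrain/flickr30k/annotations",
]


def missing_paths(root="."):
    """Return the expected files/directories that do not exist under root."""
    return [p for p in EXPECTED if not os.path.exists(os.path.join(root, p))]
```

Calling `missing_paths()` returns an empty list once everything is in place.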

- Test on the MSCOCO dataset (1K and 5K simultaneously):

  - Test the BERT-based models:

    ```bash
    python evaluation_bert.py --model BERT/cc_model1 --fold --data_path "$DATA_PATH" --region_bbox_file "$REGION_BBOX_FILE" --feature_path "$FEATURE_PATH"
    ```

  - Test the GRU-based models:

    ```bash
    python evaluation.py --model GRU/cc_model1 --fold --data_path "$DATA_PATH" --region_bbox_file "$REGION_BBOX_FILE" --feature_path "$FEATURE_PATH"
    ```

- Test on the Flickr30K dataset:

  - Test the BERT-based models:

    ```bash
    python evaluation_bert.py --model BERT/f_model1 --data_path "$DATA_PATH" --region_bbox_file "$REGION_BBOX_FILE" --feature_path "$FEATURE_PATH"
    ```

  - Test the GRU-based models:

    ```bash
    python evaluation.py --model GRU/f_model1 --data_path "$DATA_PATH" --region_bbox_file "$REGION_BBOX_FILE" --feature_path "$FEATURE_PATH"
    ```
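For reference, the recall numbers in the result tables can be computed from an image-caption similarity matrix. The sketch below assumes the VSE++ conventions that DSRAN builds on: 5 captions per image, caption `j` belonging to image `j // 5`, and the MSCOCO 1K result being the average over five disjoint 1K-image folds of the 5K test set (our reading of `--fold`). The function names are hypothetical and are not the API of `evaluation.py`.

```python
# Sketch: Recall@K for image-text retrieval, assuming 5 captions per image
# and caption j belonging to image j // 5 (VSE++ ordering convention).
CAPS_PER_IMAGE = 5


def i2t_recall(sims, ks=(1, 5, 10)):
    """sims[i][j] = similarity of image i and caption j. Returns {K: R@K in %}."""
    ranks = []
    for i, row in enumerate(sims):
        order = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        gt = range(CAPS_PER_IMAGE * i, CAPS_PER_IMAGE * (i + 1))
        # Rank of the best-ranked ground-truth caption (0 = top hit).
        ranks.append(min(order.index(j) for j in gt))
    return {k: 100.0 * sum(r < k for r in ranks) / len(ranks) for k in ks}


def t2i_recall(sims, ks=(1, 5, 10)):
    """Rank all images for each caption; the single ground truth is j // 5."""
    ranks = []
    for j in range(len(sims[0])):
        col = [row[j] for row in sims]
        order = sorted(range(len(col)), key=lambda i: col[i], reverse=True)
        ranks.append(order.index(j // CAPS_PER_IMAGE))
    return {k: 100.0 * sum(r < k for r in ranks) / len(ranks) for k in ks}


def five_fold(sims, n_folds=5):
    """Average R@K over disjoint folds (e.g. 5K images -> five 1K-image folds)."""
    n_img = len(sims) // n_folds
    results = []
    for f in range(n_folds):
        rows = sims[f * n_img:(f + 1) * n_img]
        caps = slice(f * n_img * CAPS_PER_IMAGE, (f + 1) * n_img * CAPS_PER_IMAGE)
        fold = [row[caps] for row in rows]
        results.append((i2t_recall(fold), t2i_recall(fold)))
    ks = results[0][0].keys()
    i2t = {k: sum(r[0][k] for r in results) / n_folds for k in ks}
    t2i = {k: sum(r[1][k] for r in results) / n_folds for k in ks}
    return i2t, t2i
```

Summing the six averaged values then gives the Rsum column.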

Alternatively, use the provided shell scripts. Remember to modify `$DATA_PATH`, `$REGION_BBOX_FILE` and `$FEATURE_PATH` in the `.sh` files first.

- Test on the MSCOCO dataset (1K and 5K simultaneously):

  - Test the BERT-based models:

    ```bash
    sh test_bert_cc.sh
    ```

  - Test the GRU-based models:

    ```bash
    sh test_gru_cc.sh
    ```

- Test on the Flickr30K dataset:

  - Test the BERT-based models:

    ```bash
    sh test_bert_f.sh
    ```

  - Test the GRU-based models:

    ```bash
    sh test_gru_f.sh
    ```
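The training commands that follow pass `--num_epochs` and `--lr_update`. Assuming DSRAN keeps the VSE++ step schedule (the learning rate is multiplied by 0.1 every `lr_update` epochs), the rate per epoch can be sketched as below. The base rate of 2e-4 is the VSE++ default and an assumption here, not a value stated in this README; the BERT commands also pass `--warmup 0.1`, which this sketch does not model. `step_lr` is a hypothetical name.

```python
def step_lr(epoch, base_lr=2e-4, lr_update=9):
    """VSE++-style step decay: lr = base_lr * 0.1 ** (epoch // lr_update).

    base_lr=2e-4 is an assumed default; epochs are 0-indexed.
    """
    return base_lr * (0.1 ** (epoch // lr_update))


# --num_epochs 18 --lr_update 9 (MSCOCO): one decay, starting at epoch index 9.
for epoch in range(18):
    print(epoch, step_lr(epoch, lr_update=9))
```

With `--num_epochs 12 --lr_update 6` (BERT on Flickr30K) the schedule likewise decays once, halfway through training.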

Train a model with BERT on MSCOCO:

```bash
python train_bert.py --data_path "$DATA_PATH" --data_name coco --num_epochs 18 --batch_size 320 --lr_update 9 --logger_name runs/cc_bert --bert_path "$BERT_PATH" --ft_bert --warmup 0.1 --K 4 --feature_path "$FEATURE_PATH" --region_bbox_file "$REGION_BBOX_FILE"
```

Train a model with BERT on Flickr30K:

```bash
python train_bert.py --data_path "$DATA_PATH" --data_name f30k --num_epochs 12 --batch_size 128 --lr_update 6 --logger_name runs/f_bert --bert_path "$BERT_PATH" --ft_bert --warmup 0.1 --K 2 --feature_path "$FEATURE_PATH" --region_bbox_file "$REGION_BBOX_FILE"
```

Train a model with GRU on MSCOCO:

```bash
python train.py --data_path "$DATA_PATH" --data_name coco --num_epochs 18 --batch_size 300 --lr_update 9 --logger_name runs/cc_gru --use_restval --K 2 --feature_path "$FEATURE_PATH" --region_bbox_file "$REGION_BBOX_FILE"
```

Train a model with GRU on Flickr30K:

```bash
python train.py --data_path "$DATA_PATH" --data_name f30k --num_epochs 16 --batch_size 128 --lr_update 8 --logger_name runs/f_gru --use_restval --K 2 --feature_path "$FEATURE_PATH" --region_bbox_file "$REGION_BBOX_FILE"
```

We thank Linyang Li for help with the code and for providing some computing resources.

If DSRAN is useful for your research, please cite our paper:

```bibtex
@ARTICLE{9222079,
  author={Wen, Keyu and Gu, Xiaodong and Cheng, Qingrong},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  title={Learning Dual Semantic Relations With Graph Attention for Image-Text Matching},
  year={2021},
  volume={31},
  number={7},
  pages={2866-2879},
  doi={10.1109/TCSVT.2020.3030656}}
```