This repository provides an implementation of our paper "Dynamic 3D Gaze from Afar: Deep Gaze Estimation from Temporal Eye-Head-Body Coordination" (CVPR 2022). If you use our code and data, please cite our paper.

Please note that this is research software and may contain bugs or other issues – please use it at your own risk. If you experience major problems with it, you may contact us, but please note that we do not have the resources to deal with all issues.

@InProceedings{Nonaka_2022_CVPR,

author = {Nonaka, Soma and Nobuhara, Shohei and Nishino, Ko},

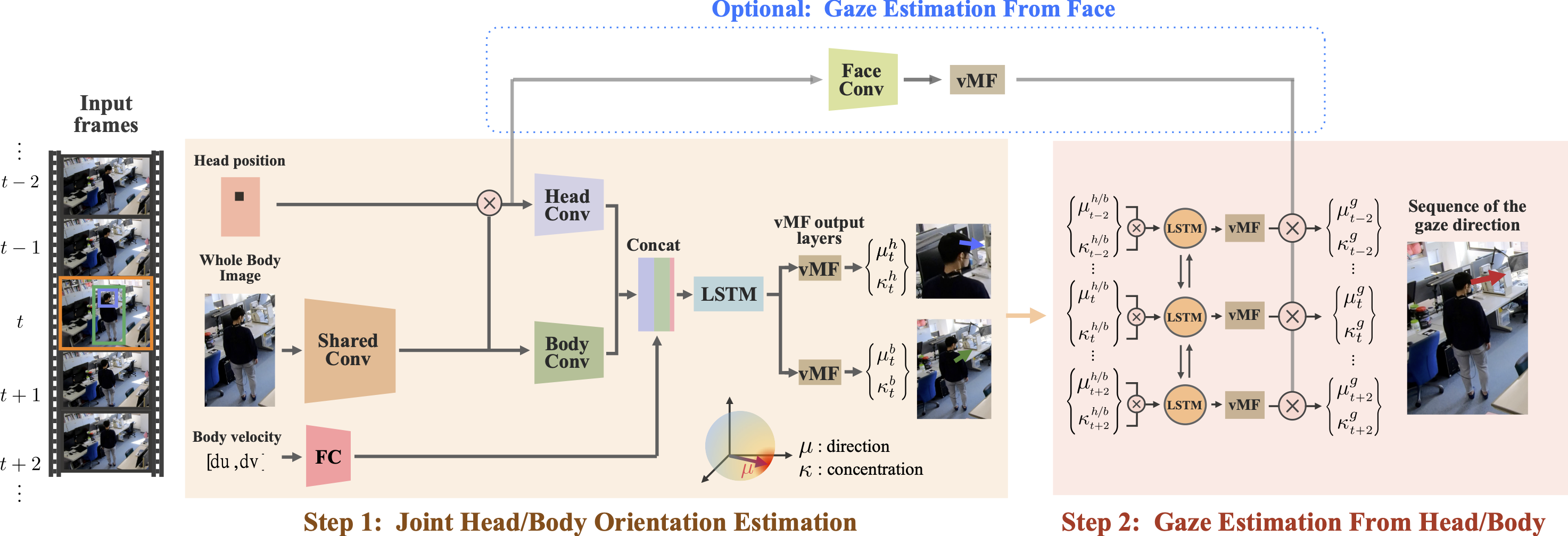

title = {Dynamic 3D Gaze From Afar: Deep Gaze Estimation From Temporal Eye-Head-Body Coordination},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {2192-2201}

}

We tested our code with Python 3.7 on Ubuntu 20.04 LTS. Our code depends on the following modules.

- numpy

- opencv_python

- matplotlib

- tqdm

- pytorch

- torchvision

- pytorch_lightning

- efficientnet_pytorch

- albumentations

Please browse conda.yaml to find the specific versions we used in our test environment, or you can simply do

$ conda env create --file conda.yaml

in your (virtual) environment.
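After setting up the environment, a quick check that the listed modules can be found may save some debugging time. The following is a minimal sketch, not part of the repository; it assumes the usual import names for the packages above (for example, `opencv_python` is imported as `cv2` and `pytorch` as `torch`), and the helper name `missing_modules` is our own.

```python
import importlib.util

# Import names for the packages listed above (assumed, not taken from conda.yaml):
# opencv_python is imported as cv2, and pytorch as torch.
REQUIRED_MODULES = [
    "numpy", "cv2", "matplotlib", "tqdm", "torch", "torchvision",
    "pytorch_lightning", "efficientnet_pytorch", "albumentations",
]


def missing_modules(names):
    """Return the top-level module names that cannot be located."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules can be found.")
```

This only checks that the modules are present; it does not compare their versions against conda.yaml.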

Alternatively, you can use gafa.def to build your singularity container by

$ singularity build --fakeroot ./singularity/gafa.sif ./singularity/gafa.def

INFO: Starting build...

Getting image source signatures

(snip)

INFO: Creating SIF file...

INFO: Build complete: ./gafa.sif

The file size of the generated container file (gafa.sif in this example) will be around 7.7GB.

The GAFA dataset is provided under the Creative Commons Attribution 4.0 International License (CC BY 4.0).

The GAFA dataset was collected with the approval of the Research Ethics Committee of the Graduate School of Informatics, Kyoto University (KUIS-EAR-2020-002).

We provide both the raw data and the preprocessed data. Because the raw data is very large (1.7 TB), we recommend using the preprocessed data (5.9 GB), which contains cropped human images. If you would like to use your own cropping or head detection models, please use the raw data.

- Preprocessed data: Download all sequences (5.9GB, MD5:85b64a64c7f8c367bf0d3cffbaf3d693)

- Raw data:

The large files above (Library and Courtyard) are also available as 128GB chunks.

The chunks are generated by

$ split -b 128G -d library.tar.gz library.tar.gz.

$ split -b 128G -d courtyard.tar.gz courtyard.tar.gz.

and can be merged by

$ cat library.tar.gz.0[0-7] > library.tar.gz

$ cat courtyard.tar.gz.0[0-3] > courtyard.tar.gz

- library.tar.gz.00 (128GB, MD5:082c5abaed1868e581948a838238166a)

- library.tar.gz.01 (128GB, MD5:962537c097dac73df83434f92b8e629b)

- library.tar.gz.02 (128GB, MD5:0674283cc0d25c9a6f3552bae61333a3)

- library.tar.gz.03 (128GB, MD5:d2ddca8a856740b7c6729a65168c85c9)

- library.tar.gz.04 (128GB, MD5:75a611f5cd56a08d3f84ca5315f740b3)

- library.tar.gz.05 (128GB, MD5:3a5320c544131a3d189037f0167c8686)

- library.tar.gz.06 (128GB, MD5:e5931e6b9acbfbd44cd973cb298f6940)

- library.tar.gz.07 (31GB, MD5:4c8a0ccc3dca3005eeb6172846ce5743)

We provide the raw surveillance videos, calibration data (camera intrinsics and extrinsics), and annotation data (gaze/head/body directions). Our dataset contains 5 daily scenes: lab, library, kitchen, courtyard, and living room.

The data is organized as follows.

data/raw_data

├── library/

│ ├──1026_3/

│ │ ├── Camera1_0/

│ │ │ ├── 000000.jpg

│ │ │ ├── 000001.jpg

│ │ │ ...

│ │ ├── Camera1_0.pkl

│ │ ...

│ │ ├── Camera1_1/

│ │ ├── Camera1_1.pkl

│ │ ...

│ │ ├── Camera2_0/

│ │ ├── Camera2_0.pkl

│ │ ...

│ ├──1028_2/

│ ...

├── lab/

├── courtyard/

├── living_room/

├── kitchen/

└── intrinsics.npz

Data is stored in five scenes (e.g. library/).

- Each scene is subdivided by shooting session (e.g. 1026_3/).

- Each session is stored frame by frame with images taken from each camera (e.g. Camera1_0, Camera2_0, ..., Camera8_0).

  - For example, Camera1_0/000000.jpg and Camera2_0/000000.jpg contain images of a person as seen from each camera at the same time.

- In some cases, we further divided the session into multiple subsessions (e.g. Camera1_1, Camera1_2, ...).

- The calibration data of each camera and annotation data (gaze, head, and body directions) in the camera coordinate system are stored in a pickle file (e.g. Camera1_0.pkl).

- The cameras share a single intrinsic parameter given in intrinsics.npz.
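The layout described above can be traversed with a few lines of Python. The sketch below is illustrative only and uses hypothetical helper names (`synchronized_frames`, `describe_annotation`). It relies solely on the directory naming shown in the tree and makes no assumption about what is inside the pickle files, so it only reports their top-level type and keys. Loading a pickle may require the packages used to create it (e.g. numpy), and pickle files should only be loaded from sources you trust.

```python
import pickle
import re
from pathlib import Path


def synchronized_frames(session_dir, subsession=0):
    """Map frame file name -> {camera directory name: image path}.

    Only directories named Camera<k>_<subsession> are considered. Frames
    with the same file name are treated as captured at the same time.
    """
    pattern = re.compile(rf"Camera\d+_{subsession}")
    frames = {}
    for cam_dir in sorted(Path(session_dir).iterdir()):
        if cam_dir.is_dir() and pattern.fullmatch(cam_dir.name):
            for image in sorted(cam_dir.glob("*.jpg")):
                frames.setdefault(image.name, {})[cam_dir.name] = image
    return frames


def describe_annotation(pkl_path):
    """Load a per-camera pickle file and summarize its top-level structure."""
    with open(pkl_path, "rb") as f:
        data = pickle.load(f)
    # The internal layout is not documented here, so only list dict keys.
    keys = sorted(map(str, data)) if isinstance(data, dict) else None
    return type(data).__name__, keys
```

For instance, `synchronized_frames("data/raw_data/library/1026_3")["000000.jpg"]` would return the paths of the first frame as seen from every camera of subsession 0.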

The preprocessed data are stored in a similar format as the raw data, but each frame contains cropped person images.

We also provide a script to preprocess raw data as data/preprocessed/preprocess.py.

Please open the following Jupyter notebooks.

- demo.ipynb: Demo code for end-to-end gaze estimation with our proposed model.

- dataset-demo.ipynb: Demo code to visualize annotations of the GAFA dataset.

In case you have built your singularity container as mentioned above, you can launch jupyter in singularity by

$ singularity exec ./singularity/gafa.sif jupyter notebook --port 12345

To access the notebook, open this file in a browser:

file:/// ...

Or copy and paste one of these URLs:

http://localhost:12345/?token= ...

or http://127.0.0.1:12345/?token= ...

Or, you can launch a bash shell in singularity by

$ singularity shell --nv ./singularity/gafa.sif --port 12345

Please download the weights of the pretrained models from here, and place the .pth files in models/weights.

We can then evaluate the accuracy of the estimated gaze direction with our model.

python eval.py \
--gpus 1  # If no GPU is available, set -1

# Result

MAE (3D front): 20.697409

MAE (2D front): 17.393957

MAE (3D back): 23.210892

MAE (2D back): 21.901659

MAE (3D all): 21.688675

MAE (2D all): 20.889896
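The abbreviation MAE is not spelled out above. Assuming it denotes the mean angular error in degrees between estimated and ground-truth gaze directions, as is common in gaze estimation, the 3D metric can be sketched as follows. This is an illustration under that assumption, not the code used in eval.py, and it does not cover the 2D or front/back variants.

```python
import math


def angular_error_deg(pred, target):
    """Angle in degrees between two 3D direction vectors of nonzero length."""
    dot = sum(p * t for p, t in zip(pred, target))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in target))
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def mean_angular_error(preds, targets):
    """Average the per-sample angular errors over paired direction vectors."""
    errors = [angular_error_deg(p, t) for p, t in zip(preds, targets)]
    return sum(errors) / len(errors)
```

The vectors do not need to be normalized beforehand, since the cosine is divided by both norms.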

You can train our model with the GAFA dataset by

python train.py \

--epoch 10 \

--n_frames 7 \

--gpus 2

Training consumes about 24 GB of VRAM on each GPU and takes about 24 hours. As we implement our model using the distributed data parallel functionality in PyTorch Lightning, you can speed up the training by adding more GPUs.

If your GPU's VRAM is less than 24 GB, please switch the model to data parallel mode by changing strategy="ddp" in train.py to strategy="dp".