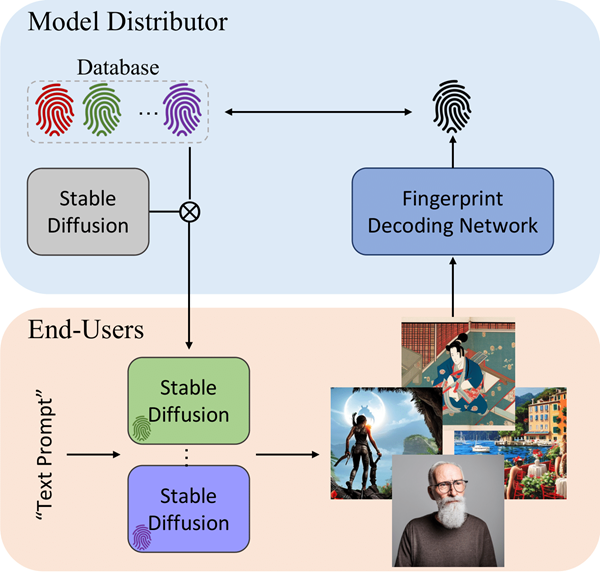

WOUAF: Weight Modulation for User Attribution and Fingerprinting in Text-to-Image Diffusion Models (CVPR 2024)

Paper | Project page | Demo

Preliminary requirements:

- Python>=3.5

- PyTorch==1.12.1

First, clone this repository and install the dependencies:

```bash
pip3 install -r requirements.txt
```

Next, download the pre-extracted latent vectors for validation: Google Drive.

Then, use trainval_WOUAF.py to train and evaluate the model:

```bash
CUDA_VISIBLE_DEVICES=0 python trainval_WOUAF.py \
    --pretrained_model_name_or_path stabilityai/stable-diffusion-2-base \
    --dataset_name HuggingFaceM4/COCO \
    --dataset_config_name 2014_captions --caption_column sentences_raw \
    --center_crop --random_flip \
    --dataloader_num_workers 4 \
    --train_steps_per_epoch 1_000 \
    --max_train_steps 50_000 \
    --pre_latents latents/HuggingFaceM4/COCO
```
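As a quick sanity check on the training command, the sketch below parses the same flags with a hypothetical `argparse` parser. `build_parser`, `num_epochs` and `TRAIN_ARGV` are illustrative names, and the real argument definitions in `trainval_WOUAF.py` may differ. It shows that underscore-grouped values such as `1_000` parse as ordinary integers, because Python's `int()` accepts digit separators from Python 3.6 onward (PEP 515). Assuming `--train_steps_per_epoch` sets the epoch length, `--max_train_steps 50_000` then corresponds to 50 epochs.

```python
import argparse

# Flags copied from the training command above.
TRAIN_ARGV = [
    "--pretrained_model_name_or_path", "stabilityai/stable-diffusion-2-base",
    "--dataset_name", "HuggingFaceM4/COCO",
    "--dataset_config_name", "2014_captions", "--caption_column", "sentences_raw",
    "--center_crop", "--random_flip",
    "--dataloader_num_workers", "4",
    "--train_steps_per_epoch", "1_000",
    "--max_train_steps", "50_000",
    "--pre_latents", "latents/HuggingFaceM4/COCO",
]


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flags above (not the repo's own)."""
    parser = argparse.ArgumentParser(description="Sketch of WOUAF training flags")
    parser.add_argument("--pretrained_model_name_or_path", type=str, required=True)
    parser.add_argument("--dataset_name", type=str)
    parser.add_argument("--dataset_config_name", type=str)
    parser.add_argument("--caption_column", type=str)
    parser.add_argument("--center_crop", action="store_true")
    parser.add_argument("--random_flip", action="store_true")
    parser.add_argument("--dataloader_num_workers", type=int, default=0)
    # int("1_000") == 1000 in Python 3.6+, so underscore grouping is accepted
    parser.add_argument("--train_steps_per_epoch", type=int)
    parser.add_argument("--max_train_steps", type=int)
    parser.add_argument("--pre_latents", type=str)
    return parser


def num_epochs(max_train_steps: int, steps_per_epoch: int) -> int:
    """Ceiling division: a partial final epoch still counts as one epoch."""
    return -(-max_train_steps // steps_per_epoch)


if __name__ == "__main__":
    args = build_parser().parse_args(TRAIN_ARGV)
    print(args.train_steps_per_epoch, args.max_train_steps)  # 1000 50000
    print(num_epochs(args.max_train_steps, args.train_steps_per_epoch))  # 50
```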

Acknowledgements:

- Our code builds on the fine-tuning scripts for Stable Diffusion from Diffusers.

- The MappingNetwork implementation is taken from StyleGAN2-ADA.
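This README only names the components, so the following is a rough illustration of the idea in the title rather than the repository's code. It is written in NumPy, not the repository's PyTorch. A small MLP (a stand-in for a StyleGAN-style mapping network) turns a binary user fingerprint into a style vector. That vector then scales the input channels of a convolution kernel using StyleGAN2-style modulation and demodulation. The names `mapping_network` and `modulate_conv_weight` are hypothetical. Which layers WOUAF actually modulates, the fingerprint length, the layer sizes and the `1.0 +` offset on the style vector are all assumptions.

```python
import numpy as np


def mapping_network(fingerprint, weights):
    """Hypothetical MLP: binary fingerprint -> style vector (no biases)."""
    h = np.asarray(fingerprint, dtype=np.float64)
    for i, w in enumerate(weights):
        h = h @ w
        if i < len(weights) - 1:
            h = np.where(h > 0, h, 0.2 * h)  # leaky ReLU between layers
    return h


def modulate_conv_weight(weight, style, demodulate=True, eps=1e-8):
    """StyleGAN2-style modulation of a kernel shaped (out, in, kh, kw)
    by a per-input-channel style vector shaped (in,)."""
    w = weight * style[None, :, None, None]  # scale each input channel
    if demodulate:
        # Rescale every output filter to (approximately) unit L2 norm
        norm = np.sqrt((w ** 2).sum(axis=(1, 2, 3), keepdims=True) + eps)
        w = w / norm
    return w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fp_len, hidden, in_ch, out_ch = 32, 64, 8, 4  # illustrative sizes only
    mlp = [rng.standard_normal((fp_len, hidden)) / np.sqrt(fp_len),
           rng.standard_normal((hidden, in_ch)) / np.sqrt(hidden)]
    kernel = rng.standard_normal((out_ch, in_ch, 3, 3))

    user_a = rng.integers(0, 2, size=fp_len)
    user_b = 1 - user_a  # a different fingerprint
    # Assumption: offset by 1 so styles are centered on the unmodulated kernel
    w_a = modulate_conv_weight(kernel, 1.0 + mapping_network(user_a, mlp))
    w_b = modulate_conv_weight(kernel, 1.0 + mapping_network(user_b, mlp))
    # Typically nonzero: each fingerprint yields its own set of weights
    print(np.abs(w_a - w_b).max())
```

The design point being illustrated is that attribution is carried by the weights themselves. Each user's fingerprint selects a distinct modulated copy of the same base kernel without retraining a separate model per user.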

```bibtex
@inproceedings{kim2024wouaf,
  title={WOUAF: Weight modulation for user attribution and fingerprinting in text-to-image diffusion models},
  author={Kim, Changhoon and Min, Kyle and Patel, Maitreya and Cheng, Sheng and Yang, Yezhou},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={8974--8983},
  year={2024}
}
```