- Problem Statement

- Dataset

- Model Architecture using Transfer Learning

- Random Search Hyperparameter Tuning

- The Problem of Vanishing Gradients

- Further work

- References

Apples are one of the most important temperate fruit crops in the world. Foliar (leaf) diseases pose a major threat to the overall productivity and quality of apple orchards. The current process for disease diagnosis in apple orchards is based on manual scouting by humans, which is time-consuming and expensive.

Although computer vision-based models have shown promise for plant disease identification, some limitations still need to be addressed. Large variations in the visual symptoms of a single disease across different apple cultivars, or across new varieties that originated under cultivation, are major challenges for computer vision-based disease identification. These variations arise from differences in natural and image-capturing environments, for example leaf color and morphology, the age of infected tissue, non-uniform image backgrounds, and varying illumination during imaging.

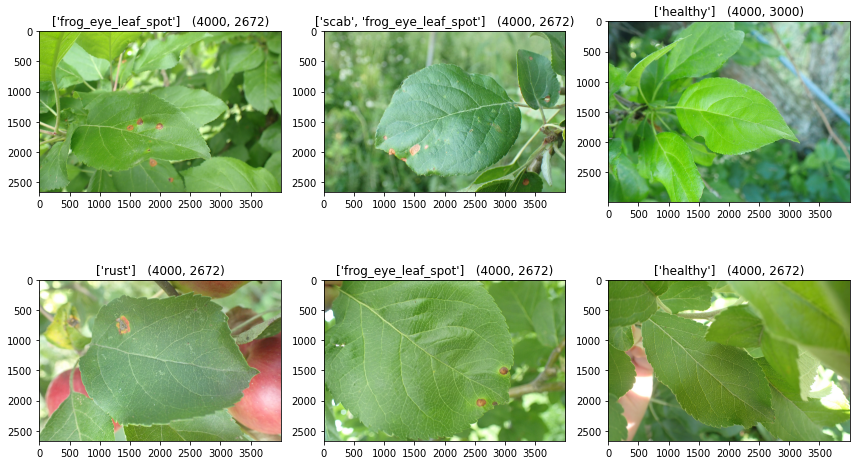

The Plant Pathology 2021-FGVC8 Dataset [1] contains approximately 23,000 high-quality RGB images of apple foliar diseases, including a large expert-annotated disease dataset. This dataset reflects real field scenarios by representing non-homogeneous backgrounds of leaf images taken at different maturity stages and at different times of day under different focal camera settings.

For faster image loading and processing, we use a 256x256 resized version of the original RGB images, provided as a separate dataset.

Convolutional neural networks (CNNs) are the dominant machine learning approach for visual object recognition. Visual recognition systems gained widespread attention after the release of the publicly available ImageNet dataset [2], which contains 14 million images in 22 thousand visual categories. Starting in 2010, the ImageNet Large Scale Visual Recognition Challenges (ILSVRC) [3] drove research into new CNN architectures [6] [7] [8] [9] that, trained on ImageNet, achieve high accuracy on many image recognition problems. These models and their trained weights are publicly available, which led to the idea of transfer learning: reusing a network pretrained on ImageNet for a new task. There are two major types of transfer learning: using the pretrained network as a fixed feature extractor (freezing its layers and training only a new classifier on top), and fine-tuning (also updating the pretrained weights).

We fine-tune all the convolutional layers of our base model as well as the fully connected (FC) layers, which predict one output per class. In our case, we are solving a multilabel, multiclass classification problem, in which a single plant image may show several diseases at once. We therefore apply the sigmoid activation function to the raw output logits.
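For multilabel training, each image's labels are usually encoded as a multi-hot vector with one 0/1 entry per class. The minimal sketch below assumes space-separated label strings and the six label names of the Plant Pathology 2021 competition; the class order and the `encode_labels` helper are our own illustrative choices, not part of the original pipeline.

```python
from typing import List, Sequence

# Assumed label vocabulary (the six competition labels); the order is arbitrary.
CLASSES = ["healthy", "scab", "frog_eye_leaf_spot", "rust", "complex", "powdery_mildew"]


def encode_labels(label_string: str, classes: Sequence[str] = CLASSES) -> List[int]:
    """Convert a space-separated label string into a multi-hot target vector."""
    present = set(label_string.split())
    unknown = present - set(classes)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    # 1 for every class present in the image, 0 otherwise.
    return [1 if name in present else 0 for name in classes]


print(encode_labels("scab frog_eye_leaf_spot"))  # [0, 1, 1, 0, 0, 0]
print(encode_labels("healthy"))                  # [1, 0, 0, 0, 0, 0]
```

A target such as `[0, 1, 1, 0, 0, 0]` is what a per-class loss compares against the six sigmoid outputs.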

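The sigmoid activation is the usual choice for multilabel classification because it scores each class independently, so several classes can be predicted at once (softmax, by contrast, produces probabilities that sum to 1 across classes). The predicted labels are obtained by comparing each probability with a threshold value, which is a hyperparameter we can tune.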
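The sigmoid-plus-threshold step can be sketched in plain Python as follows. The logits, the class order and the `predict_labels` helper are illustrative assumptions; in the actual model the logits come from the network's output layer.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def predict_labels(logits, classes, threshold=0.5):
    """Apply the sigmoid to each logit independently and keep classes at or above the threshold."""
    probs = [sigmoid(z) for z in logits]
    return [c for c, p in zip(classes, probs) if p >= threshold]


classes = ["healthy", "scab", "frog_eye_leaf_spot", "rust", "complex", "powdery_mildew"]
logits = [-3.0, 2.0, 0.4, -1.0, -0.2, -4.0]  # hypothetical raw outputs for one image
print(predict_labels(logits, classes))       # ['scab', 'frog_eye_leaf_spot']
print(predict_labels(logits, classes, 0.7))  # ['scab']
```

Raising the threshold trades recall for precision, which is why it is worth tuning against the evaluation metric rather than fixing it at 0.5.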

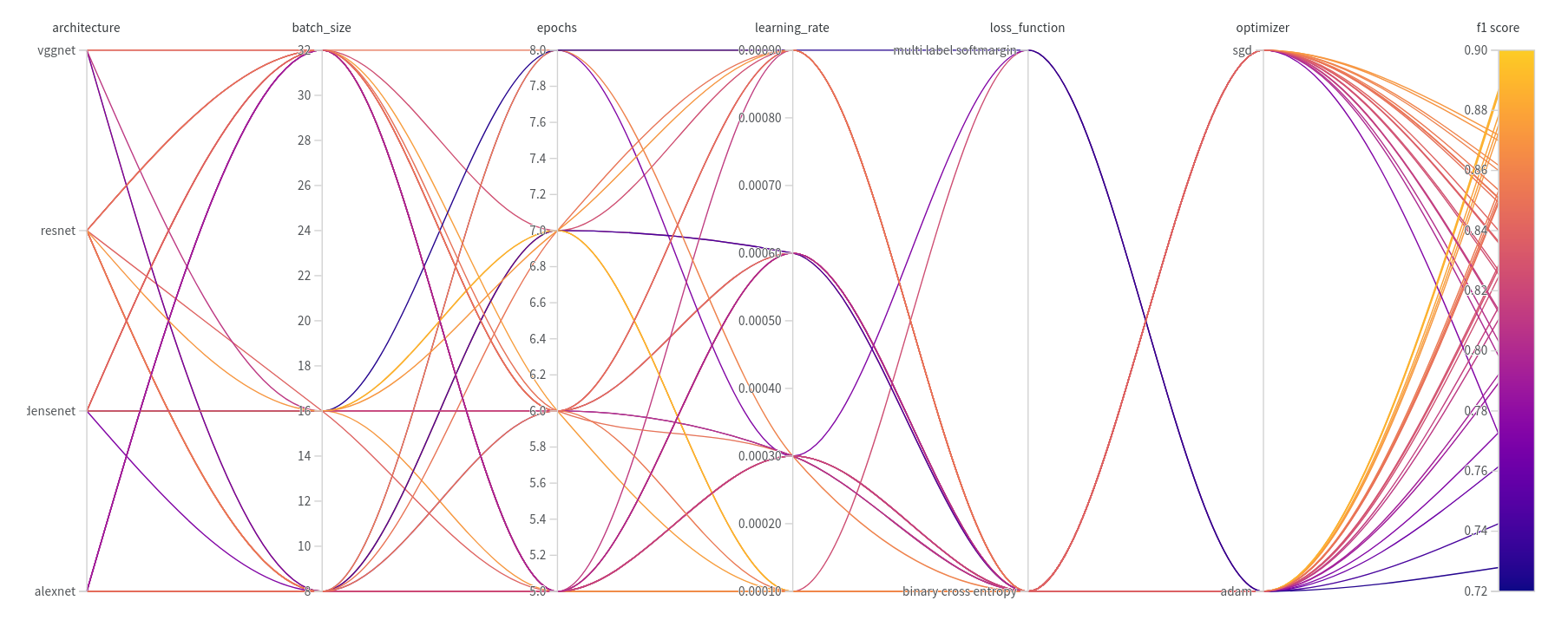

For our experiments, we used Random Search [4] to sweep hyperparameters and find the best-performing configuration. Bergstra and Bengio [4] showed that random search finds configurations as good as or better than grid search within the same or a smaller computational budget, because typically only a few hyperparameters matter for a given problem.

Alternatively, one can first run random search to narrow down the set of hyperparameter values worth tuning, and then apply grid search to search that reduced space exhaustively.
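A sketch of this two-stage idea, assuming the stage-1 random-search results are the six runs from the results table in this section (only validation F1 is kept as the score). The `narrowed_grid` helper and the choice of `top_k=3` are illustrative assumptions, not the procedure used in this study.

```python
from itertools import product

# Stage-1 results: the six runs from the results table (validation F1 as the score).
runs = [
    {"arch": "densenet", "loss": "BCE", "optimizer": "Adam", "lr": 1e-4, "batch_size": 32, "epochs": 6, "val_f1": 0.8753},
    {"arch": "densenet", "loss": "BCE", "optimizer": "Adam", "lr": 1e-4, "batch_size": 16, "epochs": 7, "val_f1": 0.8871},
    {"arch": "densenet", "loss": "BCE", "optimizer": "Adam", "lr": 1e-4, "batch_size": 32, "epochs": 5, "val_f1": 0.8855},
    {"arch": "densenet", "loss": "BCE", "optimizer": "Adam", "lr": 1e-4, "batch_size": 16, "epochs": 5, "val_f1": 0.8727},
    {"arch": "resnet", "loss": "BCE", "optimizer": "Adam", "lr": 1e-4, "batch_size": 8, "epochs": 7, "val_f1": 0.8785},
    {"arch": "vggnet", "loss": "BCE", "optimizer": "SGD", "lr": 3e-4, "batch_size": 8, "epochs": 8, "val_f1": 0.8600},
]


def narrowed_grid(runs, top_k=3, score_key="val_f1"):
    """Stage 2: keep the top_k runs and build a full grid over the values they used."""
    best = sorted(runs, key=lambda r: r[score_key], reverse=True)[:top_k]
    keys = [k for k in best[0] if k != score_key]
    # For each hyperparameter, the distinct values seen among the best runs.
    values = {k: sorted({r[k] for r in best}) for k in keys}
    return [dict(zip(keys, combo)) for combo in product(*(values[k] for k in keys))]


grid = narrowed_grid(runs)
print(len(grid))  # 12
```

Reusing only the observed values keeps the grid small; in practice one would usually add a few neighbouring values (for example, learning rates around 1e-4) to each narrowed dimension before the exhaustive pass.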

The hyperparameters we are tuning are:

- Base Model

- Learning Rate

- Optimizer

- Batch Size

- Epochs

- Loss Function
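A random sweep over these hyperparameters can be described as a dictionary in the format accepted by `wandb.sweep()` (`method`, `metric`, `parameters` with `values` lists). In the sketch below the metric name `val_f1` and every value list are assumptions (they contain only values that appear in the results table, not the study's actual search space), and `sample_config` is a hypothetical local helper that mimics random search by drawing one value per hyperparameter uniformly and independently.

```python
import random

# Hypothetical sweep definition in the dictionary format accepted by wandb.sweep().
# Metric name and value lists are assumptions, not the actual search space.
sweep_config = {
    "method": "random",
    "metric": {"name": "val_f1", "goal": "maximize"},
    "parameters": {
        "base_model": {"values": ["densenet", "resnet", "vggnet"]},
        "learning_rate": {"values": [1e-4, 3e-4]},
        "optimizer": {"values": ["Adam", "SGD"]},
        "batch_size": {"values": [8, 16, 32]},
        "epochs": {"values": [5, 6, 7, 8]},
        "loss": {"values": ["BCE", "MultiLabelSoftMarginLoss"]},
    },
}


def sample_config(config, rng):
    """Draw one value per hyperparameter, uniformly and independently (random search)."""
    return {name: rng.choice(spec["values"]) for name, spec in config["parameters"].items()}


rng = random.Random(0)  # fixed seed so the sampled trials are reproducible
trials = [sample_config(sweep_config, rng) for _ in range(40)]  # 40 runs, as in the sweep
print(trials[0])
```

With W&B itself, the dictionary would be registered with `wandb.sweep` and runs launched with `wandb.agent`; the local sampler only illustrates what "random" means here.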

We evaluated the model's predictions with the F1 score [5], the harmonic mean of precision and recall.
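For multilabel predictions, F1 can be averaged in several ways, and which averaging the reported scores use is not stated here. The sketch below shows two common choices on hypothetical multi-hot rows: per-image ("samples") averaging and micro averaging, which pools true positives, false positives and false negatives over all images and classes. The helper names are illustrative.

```python
from typing import List


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), written equivalently as 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom  # 0.0 for the empty case is a convention


def counts(true_row: List[int], pred_row: List[int]):
    """True positives, false positives and false negatives for one multi-hot row."""
    tp = sum(1 for t, p in zip(true_row, pred_row) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(true_row, pred_row) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(true_row, pred_row) if t == 1 and p == 0)
    return tp, fp, fn


def samples_f1(y_true, y_pred):
    """Average of the per-image F1 scores."""
    scores = [f1_from_counts(*counts(t, p)) for t, p in zip(y_true, y_pred)]
    return sum(scores) / len(scores)


def micro_f1(y_true, y_pred):
    """Pool TP/FP/FN over all images and classes, then compute a single F1."""
    tp = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        a, b, c = counts(t, p)
        tp, fp, fn = tp + a, fp + b, fn + c
    return f1_from_counts(tp, fp, fn)


# Three hypothetical images, six classes (multi-hot rows).
y_true = [[0, 1, 1, 0, 0, 0], [1, 0, 0, 0, 0, 0], [0, 0, 0, 1, 0, 0]]
y_pred = [[0, 1, 0, 0, 0, 0], [1, 0, 0, 0, 0, 0], [0, 1, 0, 1, 0, 0]]
print(round(samples_f1(y_true, y_pred), 4))  # 0.7778
print(micro_f1(y_true, y_pred))              # 0.75
```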

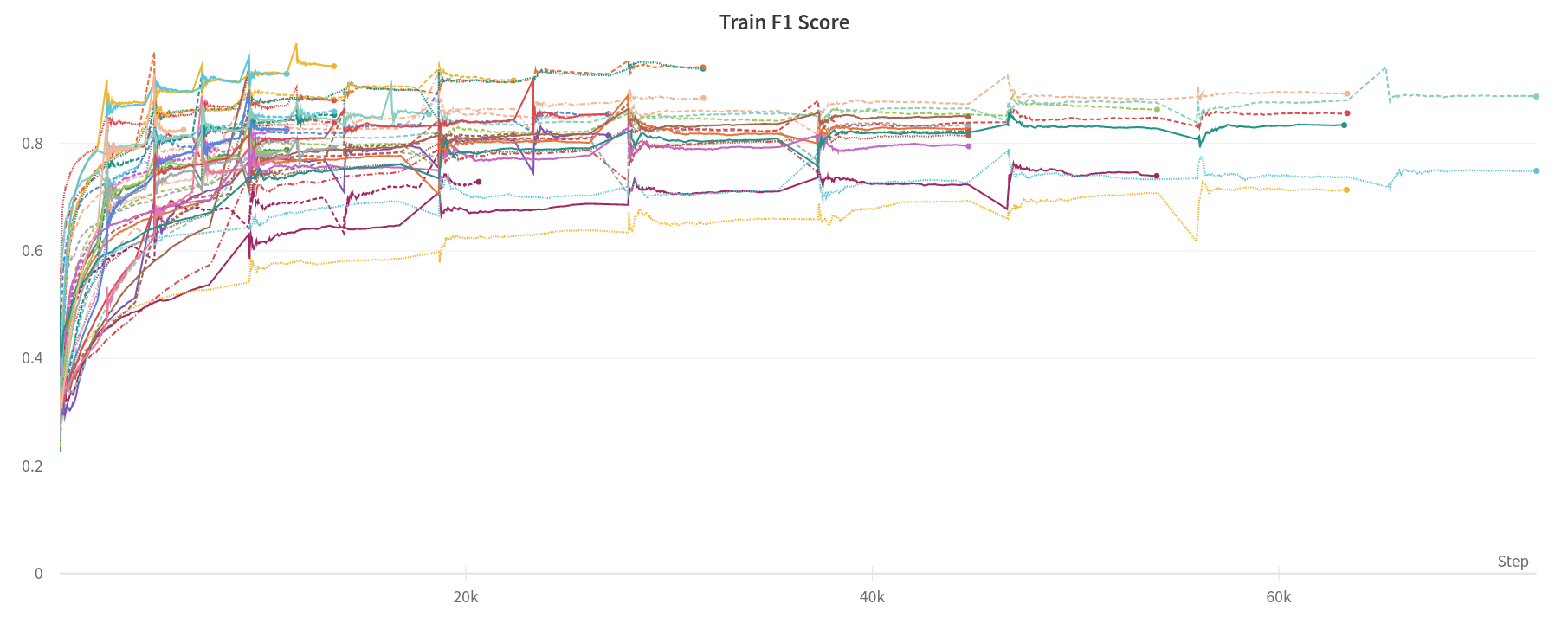

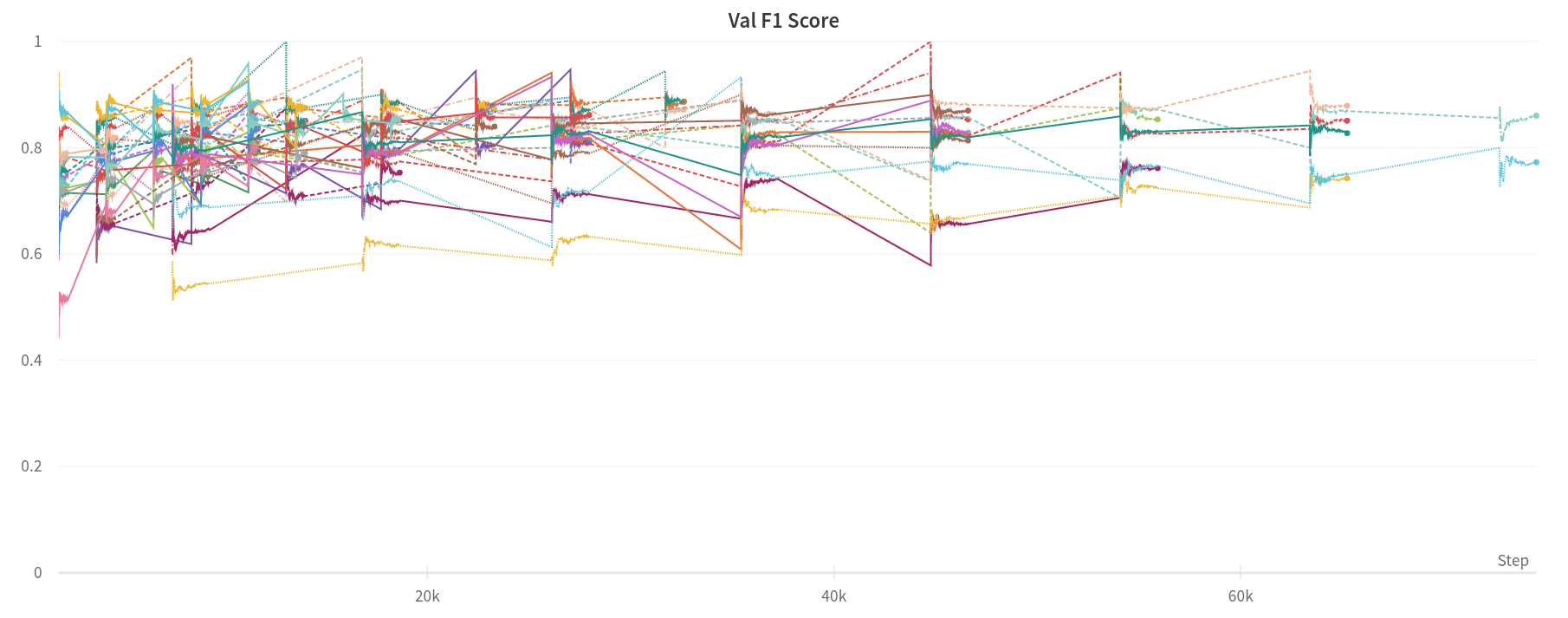

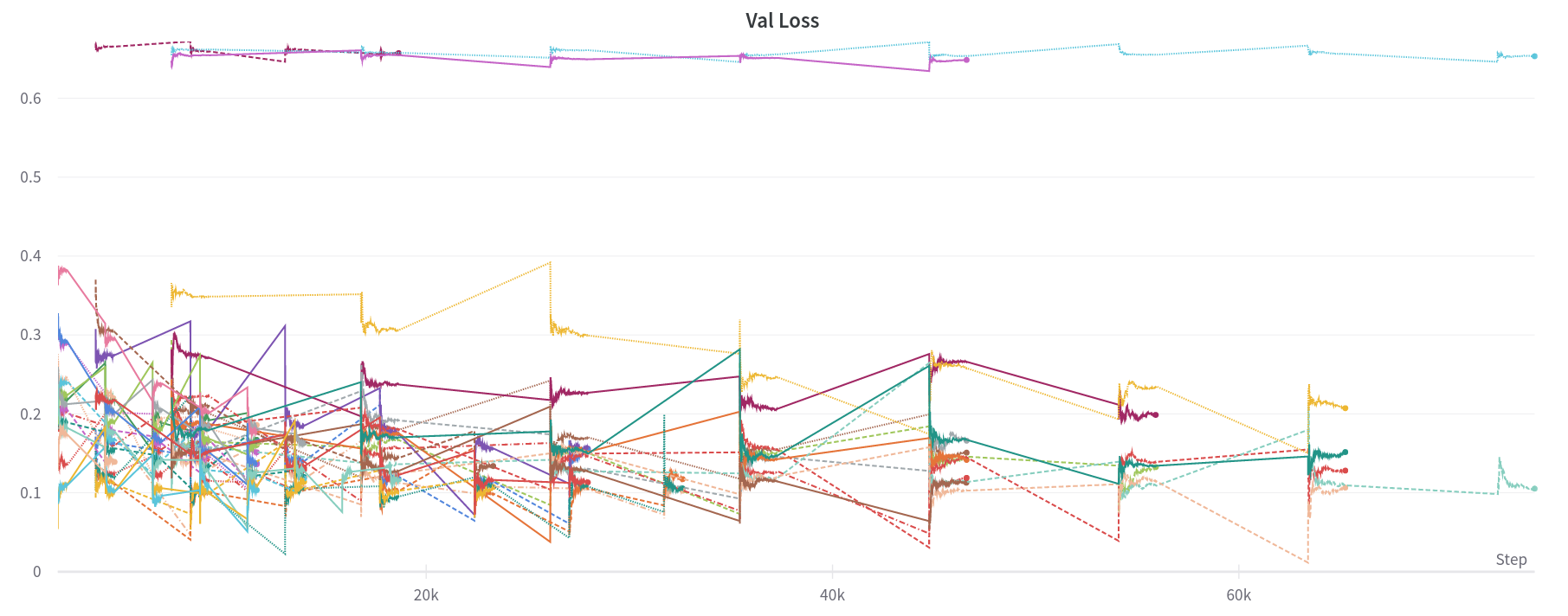

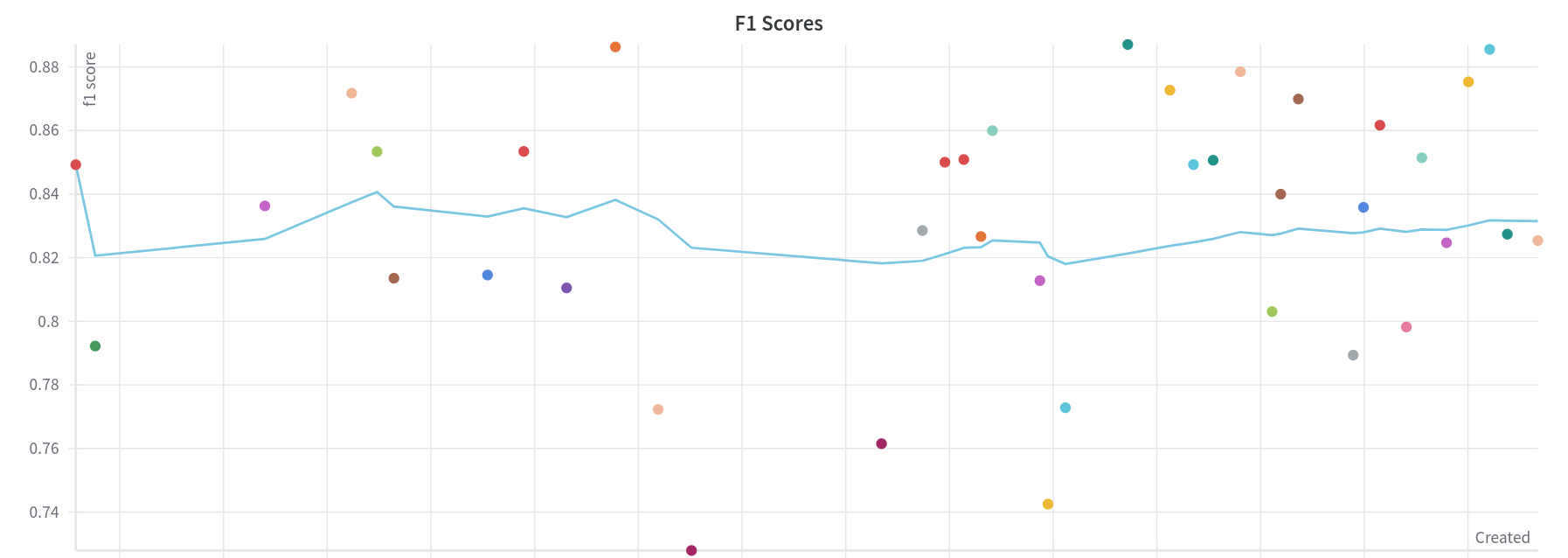

The WandB sweep ran 40 runs over 18 hours and produced the following results:

| Sr. | Train F1 | Val F1 | Architecture | Loss Function | Optimizer | LR | Batch Size | Epochs |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.9433 | 0.8753 | densenet | BCE | Adam | 1e-4 | 32 | 6 |
| 2 | 0.9392 | 0.8871 | densenet | BCE | Adam | 1e-4 | 16 | 7 |
| 3 | 0.9292 | 0.8855 | densenet | BCE | Adam | 1e-4 | 32 | 5 |
| 4 | 0.9171 | 0.8727 | densenet | BCE | Adam | 1e-4 | 16 | 5 |
| 5 | 0.8924 | 0.8785 | resnet | BCE | Adam | 1e-4 | 8 | 7 |
| 6 | 0.8868 | 0.8600 | vggnet | BCE | SGD | 3e-4 | 8 | 8 |

Analysing the results, we find that:

- The top four Train F1 scores were all obtained with the DenseNet architecture [9].

- The DenseNet run with the highest Train F1 score (0.9433, row 1) overfit in its 6th epoch: compared with the otherwise identical 5-epoch run (row 3), its Validation F1 score dropped from 0.8855 to 0.8753.

- Binary Cross Entropy (BCE) is a better loss function for this problem than the Multi-Label Soft Margin Loss (MLSML); all six runs listed above use BCE.

- The Adam optimizer [10] is more efficient than SGD: five of the six runs listed use Adam, and the only SGD run has the lowest Train and Validation F1 scores.

- A learning rate of 1e-4 converges best for the number of epochs we trained (5 to 8).

We compare two loss functions, Binary Cross Entropy (BCE) and the Multi-Label Soft Margin Loss (MLSML). As observed above, the MLSML loss values are relatively higher than the BCE values for a given F1 score.
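For context, PyTorch documents `nn.MultiLabelSoftMarginLoss` with the same per-class expression as binary cross entropy on sigmoid outputs, averaged over classes, so on identical raw logits the two losses coincide. One way a gap in loss values can arise is through what each loss receives: if MLSML is given sigmoid probabilities (which it treats as logits), its loss cannot approach zero. Whether this applies to the runs above is an assumption; the pure-Python sketch below, with hypothetical logits, only illustrates the arithmetic.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def bce(probs, targets):
    """Binary cross entropy on probabilities (the form used by torch.nn.BCELoss), averaged over classes."""
    return -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                for p, y in zip(probs, targets)) / len(probs)


def multilabel_soft_margin(x, targets):
    """Per-sample MultiLabelSoftMarginLoss, transcribed from the PyTorch documentation.
    It expects raw logits; written literally, so not hardened against overflow."""
    total = 0.0
    for xi, y in zip(x, targets):
        log_p = -math.log1p(math.exp(-xi))           # log(1 / (1 + exp(-x)))
        log_not_p = -xi - math.log1p(math.exp(-xi))  # log(exp(-x) / (1 + exp(-x)))
        total += y * log_p + (1 - y) * log_not_p
    return -total / len(x)


logits = [4.0, -4.0, 1.0]  # hypothetical raw outputs for one image
targets = [1, 0, 1]
probs = [sigmoid(z) for z in logits]

print(round(bce(probs, targets), 4))                      # BCE on sigmoid outputs
print(round(multilabel_soft_margin(logits, targets), 4))  # MLSML on logits: matches the line above
print(round(multilabel_soft_margin(probs, targets), 4))   # MLSML fed probabilities: larger
```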

Plot of validation F1 scores, tracing the average values across all 40 runs of the sweep.

As noted in the ResNet paper [8], vanishing/exploding gradients are a notorious problem that hampers convergence. To mitigate this to some extent, we use the normalization values of the ImageNet dataset [2], following the normalized initialization referenced in [11].
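The normalized initialization of Glorot and Bengio [11] draws each weight of a layer with `fan_in` inputs and `fan_out` outputs from a uniform distribution with variance 2 / (fan_in + fan_out), which approximately preserves the variance of activations and gradients from layer to layer. The pure-Python sketch below is illustrative only: the 256 -> 64 layer size is an arbitrary choice, and frameworks ship this scheme ready-made (for example `torch.nn.init.xavier_uniform_`).

```python
import math
import random


def glorot_bound(fan_in: int, fan_out: int) -> float:
    """Half-width a of U[-a, a]; since Var = a^2 / 3, a = sqrt(6 / (fan_in + fan_out))."""
    return math.sqrt(6.0 / (fan_in + fan_out))


def glorot_uniform(fan_in: int, fan_out: int, rng: random.Random):
    """fan_out x fan_in weight matrix drawn with normalized (Glorot) initialization."""
    a = glorot_bound(fan_in, fan_out)
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(42)
W = glorot_uniform(256, 64, rng)  # e.g. a 256 -> 64 dense layer (sizes are arbitrary)
flat = [w for row in W for w in row]
mean = sum(flat) / len(flat)
var = sum((w - mean) ** 2 for w in flat) / len(flat)
print(f"bound={glorot_bound(256, 64):.4f}  empirical var={var:.5f}  target={2 / (256 + 64):.5f}")
```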

Even so, when we add bottleneck dense layers on top of our transfer-learning base models, we observe the vanishing gradient problem. To observe it systematically, we submitted models with different combinations of fully connected layers on top of DenseNet [9] and ResNet [8]. In the table below, each suffix lists the widths of the added dense layers; for example, densenet+64+16 adds layers of 64 and 16 units.

| Architecture | Accuracy | Architecture | Accuracy |
|---|---|---|---|
| densenet | 0.77045 | resnet | 0.76805 |
| densenet+16 | 0.72179 | resnet+16 | 0.72908 |
| densenet+64+16 | 0.74275 | resnet+64+16 | 0.72280 |
| densenet+256+64+16 | 0.70655 | resnet+256+64+16 | 0.71283 |
| densenet+512+256+64+16 | 0.69944 | resnet+512+256+64+16 | 0.70046 |
| densenet+1024+512+256+64+16 | 0.67220 | resnet+1024+512+256+64+16 | 0.65060 |
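The drop in scores as dense layers are stacked is consistent with the chain-rule view of vanishing gradients: the gradient reaching the base model is a product of one factor per added layer. The toy sketch below assumes sigmoid activations in the added layers (the activation actually used is not stated) and models each layer as a single scalar unit with weight 1. Because the sigmoid's derivative never exceeds 0.25, the gradient can be no larger than 0.25 raised to the number of layers.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gradient_through_stack(depth: int, pre_activation: float = 0.0, weight: float = 1.0) -> float:
    """d(output)/d(input) for a chain of `depth` scalar sigmoid units (toy model).
    By the chain rule it is the product of weight * sigmoid'(z) over all layers."""
    grad = 1.0
    for _ in range(depth):
        s = sigmoid(pre_activation)
        grad *= weight * s * (1.0 - s)  # sigmoid'(z) = s * (1 - s) <= 0.25
    return grad


for depth in range(1, 6):
    print(depth, gradient_through_stack(depth))
# 1 0.25
# 2 0.0625
# 3 0.015625
# 4 0.00390625
# 5 0.0009765625
```

With ReLU-style activations the per-layer factor differs, so this sketch illustrates the mechanism rather than the exact behaviour of the heads in the table.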

Therefore, we replace the top layer of the ImageNet-pretrained model with a single linear layer, whose input size equals the output size of the previous layer, and apply a sigmoid to predict probabilities for the 6 classes. During training we fine-tune all layers of the model, i.e., the weights of every layer are updated by backpropagation on the training set. Each of the 6 output probabilities is then compared with the threshold to determine the labels.

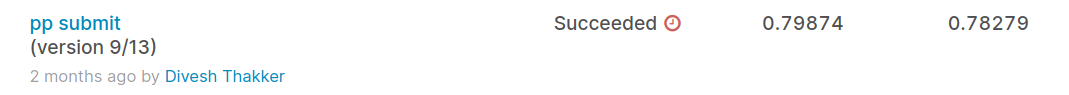

Our Kaggle submission achieved:

Further work:

- Addressing class imbalance with the Focal Loss [12] or with techniques such as Online Hard Example Mining (OHEM) [13].

- Using Hyperband [14] to make hyperparameter tuning sweeps more efficient.
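The focal loss [12] down-weights well-classified examples by a factor (1 - p_t)^gamma, so training concentrates on hard examples; OHEM [13], by contrast, selects the hardest examples for the backward pass instead of reweighting all of them. The sketch below applies the focal loss independently to each class, which is one common way to use it in a multilabel setting (an assumption, not something evaluated here). `gamma=2.0` follows the value reported to work well in [12]; the optional `alpha` weighting is left off by default, and the logits are hypothetical.

```python
import math


def binary_focal_loss(logits, targets, gamma=2.0, alpha=None):
    """Focal loss (Lin et al. [12]) applied independently to each class, averaged over classes:
    FL(p_t) = -alpha_t * (1 - p_t) ** gamma * log(p_t).
    With gamma=0 and alpha=None this reduces to binary cross entropy."""
    total = 0.0
    for x, y in zip(logits, targets):
        p = 1.0 / (1.0 + math.exp(-x))  # sigmoid probability of the class
        p_t = p if y == 1 else 1.0 - p  # probability assigned to the true outcome
        if alpha is None:
            alpha_t = 1.0
        else:
            alpha_t = alpha if y == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(logits)


logits = [3.0, -3.0, 0.2]  # two confident correct predictions, one uncertain one
targets = [1, 0, 1]
print(binary_focal_loss(logits, targets, gamma=0.0))  # plain BCE
print(binary_focal_loss(logits, targets, gamma=2.0))  # easy examples down-weighted
```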

[1] Thapa, R., Wang, Q., Snavely, N., Belongie, S., & Khan, A. The Plant Pathology 2021 Challenge dataset to classify foliar disease of apples.

[2] Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 248–255).

[3] Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A. C., & Fei-Fei, L. (2015). ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision, 115, 211–252.

[4] Bergstra, J., & Bengio, Y. (2012). Random Search for Hyper-Parameter Optimization. Journal of Machine Learning Research, 13, 281–305.

[5] Lipton, Z. C., Elkan, C., & Narayanaswamy, B. (2014). Thresholding Classifiers to Maximize F1 Score. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases (pp. 225–239).

[6] Krizhevsky, A., Sutskever, I., & Hinton, G. E. ImageNet Classification with Deep Convolutional Neural Networks.

[7] Simonyan, K., & Zisserman, A. (2015). Very Deep Convolutional Networks for Large-Scale Image Recognition. In International Conference on Learning Representations (ICLR).

[8] He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep Residual Learning for Image Recognition. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[9] Huang, G., Liu, Z., van der Maaten, L., & Weinberger, K. Q. (2017). Densely Connected Convolutional Networks. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[10] Kingma, D. P., & Ba, J. (2015). Adam: A Method for Stochastic Optimization. CoRR, abs/1412.6980.

[11] Glorot, X., & Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In AISTATS.

[12] Lin, T., Goyal, P., Girshick, R. B., He, K., & Dollár, P. (2020). Focal Loss for Dense Object Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42, 318–327.

[13] Shrivastava, A., Gupta, A., & Girshick, R. B. (2016). Training Region-Based Object Detectors with Online Hard Example Mining. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 761–769).

[14] Li, L., Jamieson, K. G., DeSalvo, G., Rostamizadeh, A., & Talwalkar, A. S. (2017). Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization. Journal of Machine Learning Research, 18, 185:1–185:52.