Bringing Old Photos Back to Life, CVPR2020 (Oral)

Old Photo Restoration via Deep Latent Space Translation, PAMI Under Review

Ziyu Wan1, Bo Zhang2, Dongdong Chen3, Pan Zhang4, Dong Chen2, Jing Liao1, Fang Wen2

1City University of Hong Kong, 2Microsoft Research Asia, 3Microsoft Cloud AI, 4USTC

You can now play with our Colab and try it on your photos.

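Clone the Synchronized-BatchNorm-PyTorch repository and copy its sync_batchnorm folder into both Face_Enhancement/models/networks/ and Global/detection_models/: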

cd Face_Enhancement/models/networks/

git clone https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

cp -rf Synchronized-BatchNorm-PyTorch/sync_batchnorm .

cd ../../../

cd Global/detection_models

git clone https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

cp -rf Synchronized-BatchNorm-PyTorch/sync_batchnorm .

cd ../../

Download the landmark detection pretrained model

cd Face_Detection/

wget http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2

bzip2 -d shape_predictor_68_face_landmarks.dat.bz2

cd ../

Download the pretrained models from the shared OneDrive links. Put Face_Enhancement/checkpoints.zip under ./Face_Enhancement and Global/checkpoints.zip under ./Global, then unzip each archive:

cd Face_Enhancement/

unzip checkpoints.zip

cd ../

cd Global/

unzip checkpoints.zip

cd ../
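Before moving on, it can help to confirm that the files from the steps above ended up where the commands put them. The following is a minimal sketch, not part of the repository: the helper name `missing_setup_paths` is hypothetical, and the two `checkpoints` folder names are an assumption about what each checkpoints.zip unpacks to.

```python
from pathlib import Path

# Paths taken from the setup commands above. The two "checkpoints"
# entries are an ASSUMPTION about the folder each checkpoints.zip creates.
REQUIRED_PATHS = [
    "Face_Enhancement/models/networks/sync_batchnorm",
    "Global/detection_models/sync_batchnorm",
    "Face_Detection/shape_predictor_68_face_landmarks.dat",
    "Face_Enhancement/checkpoints",
    "Global/checkpoints",
]


def missing_setup_paths(repo_root):
    """Return the entries of REQUIRED_PATHS that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    # Run from the repository root.
    missing = missing_setup_paths(".")
    if missing:
        print("Missing after setup:")
        for path in missing:
            print("  " + path)
    else:
        print("All expected files and folders are in place.")
```

Run it from the repository root; any path it lists points to the setup step that still needs to be repeated.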

Install dependencies:

pip install -r requirements.txt

After installing the dependencies and downloading the pretrained models, you can restore old photos with a single command.

For images without scratches:

python run.py --input_folder [test_image_folder_path] \
              --output_folder [output_path] \
              --GPU 0

For scratched images:

python run.py --input_folder [test_image_folder_path] \
              --output_folder [output_path] \
              --GPU 0 \
              --with_scratch

Note: Please use absolute paths where possible. The final results are saved in ./output_path/final_output/. The intermediate results of each step are also available under output_path.
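Since absolute paths are recommended, one option is to build the command in Python and resolve the paths first. This is a minimal sketch under stated assumptions: the helper names `build_run_command` and `final_output_dir` and the example folder names are hypothetical, and only the flags shown in the commands above are used.

```python
import os
import sys


def build_run_command(input_folder, output_folder, gpu="0", with_scratch=False):
    """Build the run.py argument list with absolute input and output paths."""
    cmd = [
        sys.executable, "run.py",
        "--input_folder", os.path.abspath(input_folder),
        "--output_folder", os.path.abspath(output_folder),
        "--GPU", str(gpu),
    ]
    if with_scratch:
        # Only add the flag for scratched photos.
        cmd.append("--with_scratch")
    return cmd


def final_output_dir(output_folder):
    """Location of the final results, as described in the note above."""
    return os.path.join(os.path.abspath(output_folder), "final_output")


if __name__ == "__main__":
    # "my_old_photos" and "restored" are placeholder folder names.
    cmd = build_run_command("my_old_photos", "restored", with_scratch=True)
    print(" ".join(cmd))
    print("Results expected in:", final_output_dir("restored"))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to launch the restoration.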

We do not currently plan to release the labeled scratched old photos dataset directly. To obtain paired data, you can run our pretrained model on your own collected images to generate the labels.

cd Global/

python detection.py --test_path [test_image_folder_path] \
                    --output_dir [output_path] \
                    --input_size [resize_256|full_size|scale_256]
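Once the detection step has produced masks, the images and masks need to be matched up to form paired data. The sketch below pairs files by base name. It assumes, without confirmation from the repository, that each mask shares its file stem with the image it came from; the function name `pair_images_with_masks` and both folder arguments are hypothetical, so point them at wherever your images and masks actually live.

```python
from pathlib import Path

# File types treated as images; extend if your collection uses others.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def pair_images_with_masks(image_dir, mask_dir):
    """Return (image_path, mask_path) pairs that share the same file stem.

    ASSUMPTION: a mask is named after its source image, e.g.
    photo01.jpg <-> photo01.png. Images without a mask are skipped.
    """
    masks = {
        p.stem: p
        for p in Path(mask_dir).iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    }
    pairs = []
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() in IMAGE_SUFFIXES and image.stem in masks:
            pairs.append((image, masks[image.stem]))
    return pairs
```

Images with no matching mask are silently skipped, so comparing `len(pairs)` against the number of input images is a quick way to spot missing labels.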

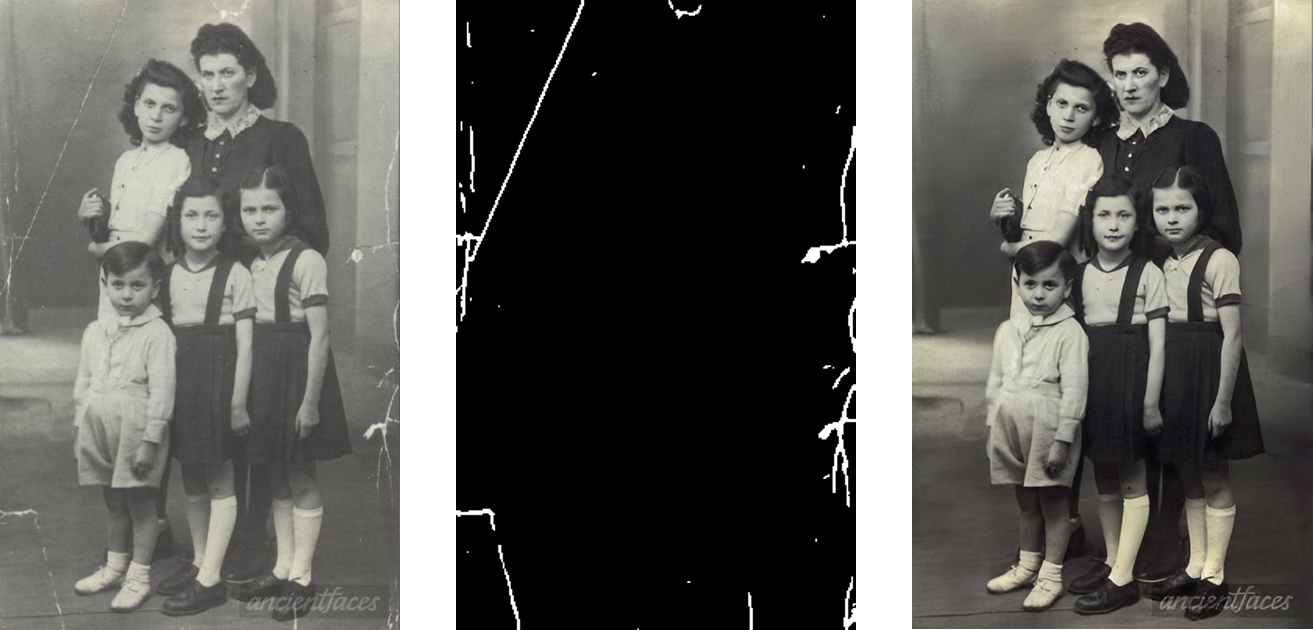

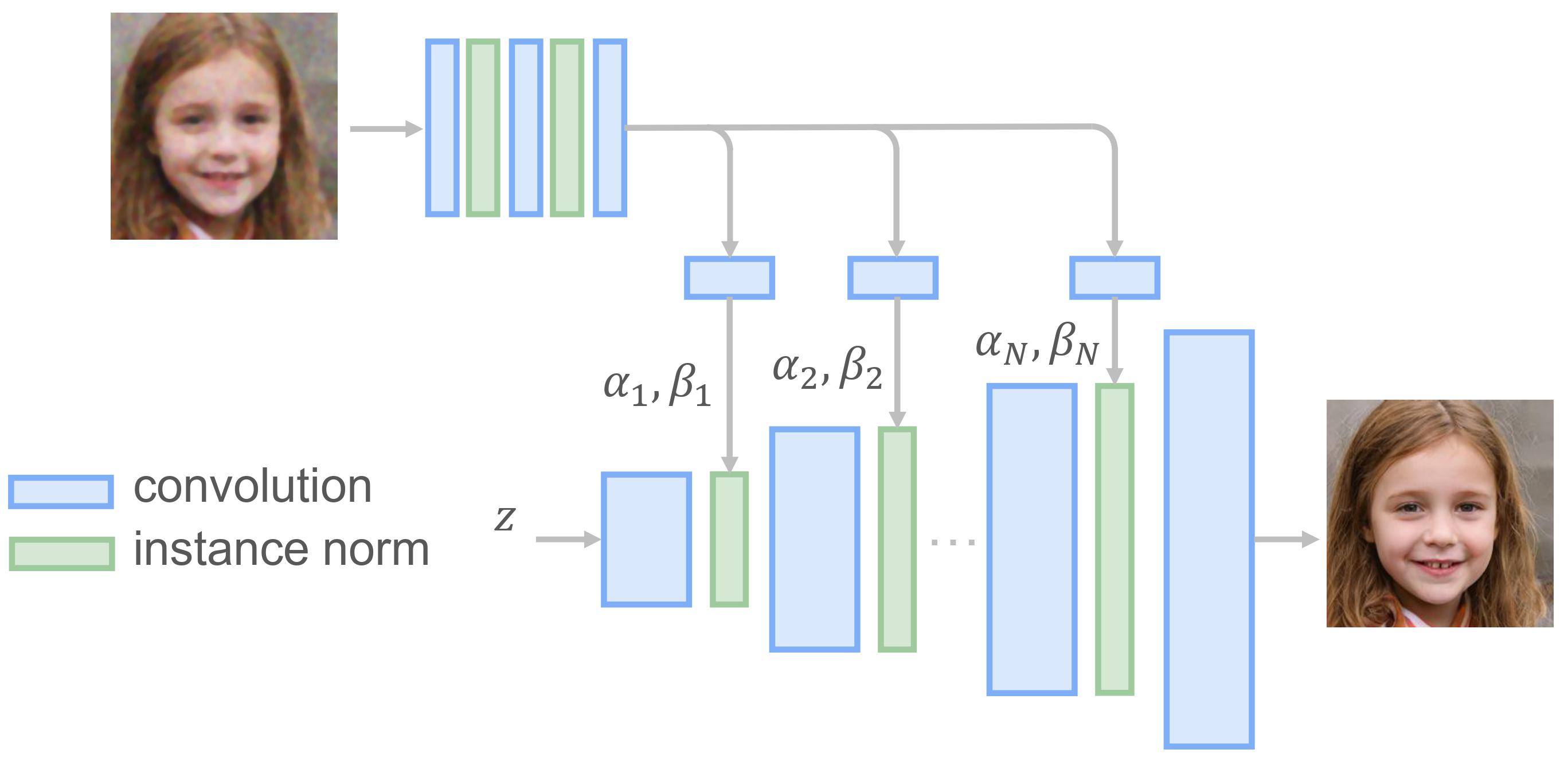

A triplet domain translation network is proposed to solve both structured degradation and unstructured degradation of old photos.

cd Global/

python test.py --Scratch_and_Quality_restore \
               --test_input [test_image_folder_path] \
               --test_mask [corresponding mask] \
               --outputs_dir [output_path]

python test.py --Quality_restore \
               --test_input [test_image_folder_path] \
               --outputs_dir [output_path]

We use a progressive generator to refine the face regions of old photos. More details can be found in our journal submission and in the ./Face_Enhancement folder.

NOTE: This repo is mainly for research purposes, and we have not yet optimized the running performance.

Since the model is pretrained on 256×256 images, it may not perform well at arbitrary resolutions.
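One way to stay close to the training resolution is to rescale a photo so that its shorter side is 256 pixels before restoration. The sketch below only computes the target size. It is an illustration, not necessarily what the repository's `scale_256` option does: the function name `scaled_size` is hypothetical, and rounding to a multiple of 4 is an assumption chosen so that typical downsampling layers divide the size evenly.

```python
def scaled_size(width, height, short_side=256, multiple=4):
    """Scale (width, height) so the shorter side becomes short_side.

    Both sides are rounded to the nearest multiple of `multiple`
    (an assumed value) while roughly keeping the aspect ratio.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = short_side / min(width, height)

    def snap(value):
        # Round the scaled side to the nearest multiple, never below one multiple.
        return max(multiple, int(round(value * scale / multiple)) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    print(scaled_size(1024, 512))  # (512, 256)
```

The resulting size can then be passed to an image library's resize call before running the restoration on the photo.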

- Clean testing code

- Release pretrained model

- Colab demo

- Replace face detection module (dlib) with RetinaFace

- Release training code

If you find our work useful for your research, please consider citing the following papers :)

@inproceedings{wan2020bringing,
title={Bringing Old Photos Back to Life},
author={Wan, Ziyu and Zhang, Bo and Chen, Dongdong and Zhang, Pan and Chen, Dong and Liao, Jing and Wen, Fang},
booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
pages={2747--2757},
year={2020}
}

@misc{2009.07047,
Author = {Ziyu Wan and Bo Zhang and Dongdong Chen and Pan Zhang and Dong Chen and Jing Liao and Fang Wen},
Title = {Old Photo Restoration via Deep Latent Space Translation},
Year = {2020},
Eprint = {arXiv:2009.07047},
}

This project is currently maintained by Ziyu Wan and is for academic research use only. If you have any questions, feel free to contact raywzy@gmail.com.

The codes and the pretrained model in this repository are under the MIT license as specified by the LICENSE file. We use our labeled dataset to train the scratch detection model.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.