This repository contains an implementation of the Neural Radiance Fields (NeRF) algorithm for dynamic environments.

Neural Radiance Fields (NeRF) represents a scene as a continuous function that maps a 3D position and viewing direction to color and volume density, so the scene can be rendered from novel viewpoints. However, the original NeRF algorithm assumes a static scene and is not designed for dynamic scenes in which objects move over time. This implementation is a basic attempt to extend NeRF to dynamic environments.
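For background, NeRF renders a pixel by sampling points along the camera ray, querying the network for a density and a color at each sample, and alpha-compositing the samples front to back. The minimal NumPy sketch below shows only that compositing step, following the standard NeRF quadrature. The function name `composite_ray` and its inputs are illustrative assumptions, not code from this repository.

```python
import numpy as np


def composite_ray(sigmas: np.ndarray, colors: np.ndarray, t_vals: np.ndarray) -> np.ndarray:
    """Alpha-composite the samples along one ray (NeRF-style quadrature).

    sigmas: (N,) non-negative volume densities at the samples.
    colors: (N, 3) RGB colors at the samples.
    t_vals: (N,) increasing sample distances along the ray.
    Returns the (3,) rendered RGB color.
    """
    # Distance between adjacent samples; the last interval is treated as very long.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each interval: alpha_i = 1 - exp(-sigma_i * delta_i).
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): the fraction of light reaching sample i.
    transmittance = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = transmittance * alphas
    # The pixel color is the weighted sum of the sample colors.
    return (weights[:, None] * colors).sum(axis=0)


# Usage: an opaque middle sample hides everything behind it, so the pixel is green.
sigmas = np.array([0.0, 1e3, 0.0])
colors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
t_vals = np.array([0.0, 1.0, 2.0])
pixel = composite_ray(sigmas, colors, t_vals)
```

Moving objects break this model because the density and color at a given 3D point are assumed not to change between the training images.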

- Clone the repository:

```bash
git clone git@github.com:ks-7-afk/nerf_in_dynamic.git
cd nerf_in_dynamic
```

- Install the dependencies:

```bash
conda env create -f environment.yaml
conda activate dynamic_nerf
pip install torch
```

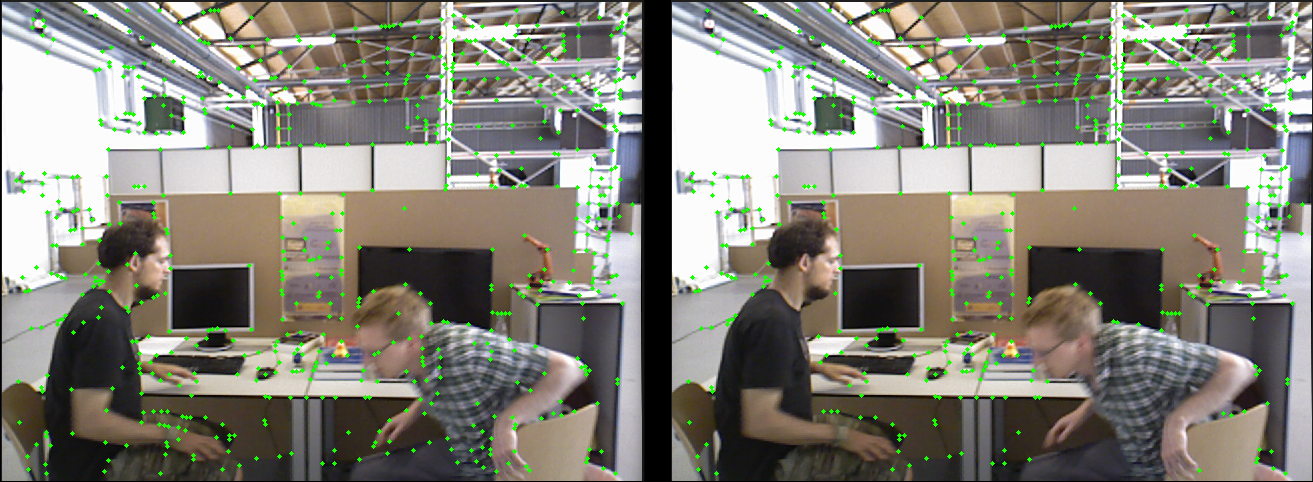

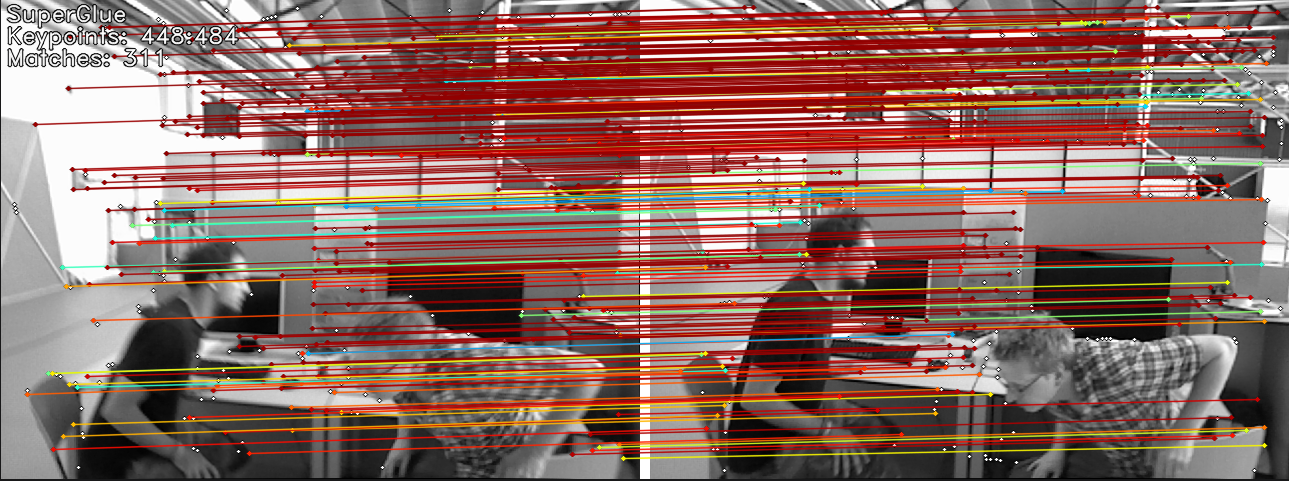

To preprocess the data, we use SuperPoint and SuperGlue. SuperPoint detects keypoints and computes their descriptors, and SuperGlue matches these keypoints between images. In addition, masks are applied to filter out irrelevant keypoints.
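The keypoint-masking step can be sketched as follows. This is not the code in `preparation_data`; it is a minimal illustration under stated assumptions. It assumes keypoints arrive as an (N, 2) array of (x, y) pixel coordinates with one descriptor row per keypoint (transpose first if your extractor returns descriptors as (D, N)), and that the mask is a binary image in which nonzero pixels mark regions whose keypoints should be discarded. The function name `filter_keypoints_by_mask` is hypothetical.

```python
import numpy as np


def filter_keypoints_by_mask(keypoints, descriptors, mask):
    """Drop keypoints that fall on masked pixels.

    keypoints:   (N, 2) float array of (x, y) pixel coordinates.
    descriptors: (N, D) array, one descriptor per keypoint.
    mask:        (H, W) array; nonzero marks pixels to discard (assumed convention).
    Returns the kept keypoints, their descriptors, and the boolean keep mask.
    """
    h, w = mask.shape
    # Round to the nearest pixel and clamp so every lookup stays inside the image.
    cols = np.clip(np.rint(keypoints[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.rint(keypoints[:, 1]).astype(int), 0, h - 1)
    # Keep a keypoint only if its pixel is not masked.
    keep = mask[rows, cols] == 0
    return keypoints[keep], descriptors[keep], keep


# Usage: the right half of a 4x4 image is masked out.
mask = np.zeros((4, 4), dtype=np.uint8)
mask[:, 2:] = 1
kps = np.array([[0.0, 0.0], [3.0, 1.0], [1.4, 3.0]])
descs = np.arange(6, dtype=np.float32).reshape(3, 2)
kept_kps, kept_descs, keep = filter_keypoints_by_mask(kps, descs, mask)
```

Filtering before matching, as sketched here, keeps masked keypoints out of the correspondences passed to structure-from-motion.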

```bash
python -m preparation_data.prep_data \
    --dataset-dir /dataset/tum-rgbd/rgbd_dataset_freiburg3_walking_halfsphere \
    --vid-ids rgb \
    --run-sfm \
    --write-json \
    --vis \
    --masks
```

Images are located in the following directory structure:

```
/dataset/
└── tum-rgbd/
    └── rgbd_dataset_freiburg3_walking_halfsphere/
        └── rgb/
            ├── image1.png
            ├── image2.png
            └── ...
```

Masking to filter out irrelevant keypoints.


To train the model, run the following command:

```bash
ns-train nerfacto \
    --output-dir $OUTPUT_DIR \
    --data dataset/tum-rgbd/rgbd_dataset_freiburg3_walking_halfsphere/rgb \
    --viewer.quit-on-train-completion True \
    --pipeline.datamanager.camera-optimizer.mode off
```

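- `--output-dir`: Directory where the training outputs (model checkpoints, logs, etc.) are saved.
- `--data`: Path to the directory containing the RGB images used for training.
- `--viewer.quit-on-train-completion True`: Stops the process, including the viewer, once training has finished.
- `--pipeline.datamanager.camera-optimizer.mode off`: Disables camera pose optimization during training.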

To evaluate the trained model, run the following command:

```bash
ns-eval \
    --load-config $OUTPUT_DIR/config.yml \
    --output-path output.json \
    --render-output-path $OUTPUT_RENDER
```

- `--load-config`: Path to the configuration file in `$OUTPUT_DIR`.
- `--output-path`: Path of the JSON file where the evaluation metrics (PSNR, SSIM, LPIPS) are saved.
- `--render-output-path`: Path where the rendered output images are saved.
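To compare runs, the metrics can be read back from the JSON file. The sketch below assumes the layout written by recent nerfstudio versions, where the metrics are stored under a top-level `results` key with the names `psnr`, `ssim` and `lpips`. Check your own `output.json` if the layout differs. The helper name `load_metrics` is hypothetical.

```python
import json
from pathlib import Path


def load_metrics(path):
    """Return PSNR, SSIM and LPIPS from an ns-eval output file.

    Assumes the metrics sit under a top-level "results" key (recent nerfstudio layout).
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    results = data["results"]
    # Keep only the three metrics reported in the results table.
    return {name: results[name] for name in ("psnr", "ssim", "lpips")}
```

For example, `load_metrics("output.json")` returns a dictionary such as `{"psnr": ..., "ssim": ..., "lpips": ...}` that can be collected across runs into a table like the one below.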

| Method | PSNR (↑) | SSIM (↑) | LPIPS (↓) |
|---|---|---|---|
| NeRF | 18.086 | 0.713 | 0.294 |
| NeRF + Masking + Robust loss | 19.388 | 0.780 | 0.201 |
| NeRF + Masking + Robust loss + Filter out irrelevant keypoints | 19.577 | 0.792 | 0.198 |
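PSNR, reported in dB, is a log-scale function of the mean squared error (MSE) between a rendered image and its ground truth. For images with values in [0, 1], PSNR = 10 · log10(1 / MSE). `ns-eval` computes this metric for you. The minimal NumPy sketch below only shows what the number means, and the function name `psnr` is illustrative.

```python
import numpy as np


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


# Usage: a uniform error of 0.1 per pixel gives MSE = 0.01, i.e. about 20 dB.
value = psnr(np.full((4, 4, 3), 0.1), np.zeros((4, 4, 3)))
```

Because the scale is logarithmic, a gain of 1 dB means the MSE shrinks by a factor of 10^(-0.1) ≈ 0.79.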