This repository contains code for feature dimensionality reduction using an autoencoder. It will be updated with other methods for encoding the input, as well as code to train the autoencoder on a textual dataset.
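The idea can be illustrated outside TensorFlow. Below is a minimal NumPy sketch, not the repository's model. An encoder maps each input to a smaller latent vector and a decoder maps it back. Both are trained to minimize reconstruction error, so the latent vector serves as the reduced feature set. The single tanh hidden layer, learning rate, step count and synthetic 8-dimensional data are all assumptions chosen for illustration. The code avoids the `@` operator so it also runs under Python 2.7.

```python
import numpy as np


def train_autoencoder(x, latent_dim, lr=0.01, steps=2000, seed=0):
    """Fit a one-hidden-layer autoencoder x -> z -> x_hat by full-batch gradient descent.

    Encoder: z = tanh(x W1 + b1)   (latent_dim features per sample)
    Decoder: x_hat = z W2 + b2     (linear reconstruction)
    Loss:    mean over samples of the squared reconstruction error.
    """
    rng = np.random.RandomState(seed)
    n, d = x.shape
    w1 = rng.normal(scale=0.1, size=(d, latent_dim))
    b1 = np.zeros(latent_dim)
    w2 = rng.normal(scale=0.1, size=(latent_dim, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(steps):
        # Forward pass
        z = np.tanh(np.dot(x, w1) + b1)
        x_hat = np.dot(z, w2) + b2
        err = x_hat - x
        losses.append(np.sum(err ** 2) / n)
        # Backward pass: gradients of the loss defined above
        g_xhat = 2.0 * err / n
        g_w2 = np.dot(z.T, g_xhat)
        g_b2 = g_xhat.sum(axis=0)
        g_pre = np.dot(g_xhat, w2.T) * (1.0 - z ** 2)  # chain rule through tanh
        g_w1 = np.dot(x.T, g_pre)
        g_b1 = g_pre.sum(axis=0)
        # Gradient-descent update (in place)
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2

    def encode(data):
        """Map inputs to their reduced (latent) features with the trained encoder."""
        return np.tanh(np.dot(data, w1) + b1)

    return encode, losses


# Synthetic 8-D data that actually lies near a 2-D subspace, standardized per feature
rng = np.random.RandomState(1)
latent = rng.normal(size=(200, 2))
x = np.dot(latent, rng.normal(size=(2, 8))) + 0.01 * rng.normal(size=(200, 8))
x = (x - x.mean(axis=0)) / x.std(axis=0)

encode, losses = train_autoencoder(x, latent_dim=2)
codes = encode(x)  # 200 samples reduced from 8 to 2 features
```

Keeping the decoder linear makes the reconstruction easy to read; a real model would typically use deeper, nonlinear layers on both sides.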

Requirements:

- Python 2.7
- TensorFlow 1.2.1
- NumPy

Repository structure:

- utility_dir: storage for data, vocab files, saved models, TensorBoard logs and outputs.
- implementation_module: code for the model architecture, data reader, training pipeline and test pipeline.
- settings_module: code to set directory paths (data path, vocab path, model path, etc.), model parameters (hidden dim, attention dim, regularization, dropout, etc.) and the vocab dictionary.
- run_module: wrapper code to execute the end-to-end train and test pipelines.
- visualization_module: code to generate embedding visualizations via TensorBoard.
- utility_code: other utility code.

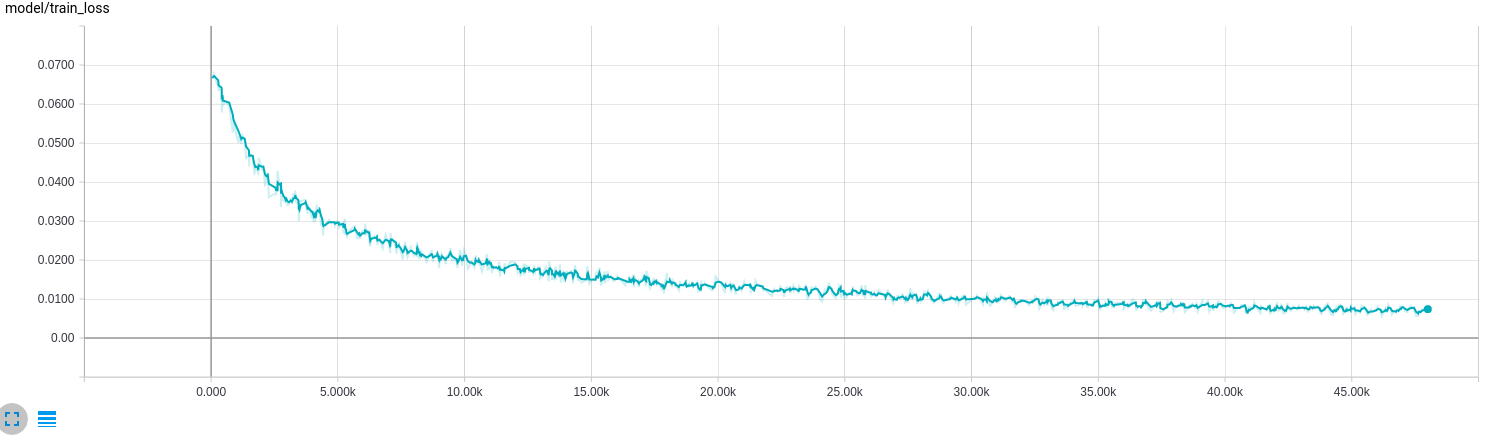

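Run the following commands from the directory that contains global_module, so that `python -m` can import the package.

Train:

`python -m global_module.run_module.run_train`

Test:

`python -m global_module.run_module.run_test`

Visualize training logs in TensorBoard:

`tensorboard --logdir=PATH-TO-LOG-DIR`

Visualize embeddings:

`tensorboard --logdir=PATH-TO-LOG-DIR/EMB_VIZ`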
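The embedding projector can colour points by class when each embedding row is paired with a metadata file of labels. A minimal sketch, assuming the latent vectors are available as plain Python lists, of writing them in the tab-separated layout the standalone Embedding Projector loads: one vector per line, one label per line, and no header row when there is only a single metadata column. The function and file names are hypothetical; this is not necessarily how visualization_module prepares the EMB_VIZ directory.

```python
import os
import tempfile


def write_projector_tsv(vectors, labels, vectors_path, metadata_path):
    """Write one tab-separated vector per line, plus one label per line.

    With a single metadata column, the projector expects no header row.
    """
    if len(vectors) != len(labels):
        raise ValueError("need exactly one label per vector")
    with open(vectors_path, "w") as f:
        for vec in vectors:
            f.write("\t".join(str(float(v)) for v in vec) + "\n")
    with open(metadata_path, "w") as f:
        for label in labels:
            f.write(str(label) + "\n")


# Usage: two 2-D latent vectors labelled '1' and '5'
out_dir = tempfile.mkdtemp()
vec_file = os.path.join(out_dir, "vectors.tsv")
meta_file = os.path.join(out_dir, "metadata.tsv")
write_projector_tsv([[0.5, -1.0], [2.0, 0.25]], [1, 5], vec_file, meta_file)

with open(vec_file) as f:
    vector_lines = f.read().splitlines()
with open(meta_file) as f:
    metadata_lines = f.read().splitlines()
```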

Directory paths and model parameters are set in set_params.py.
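The kinds of settings listed for settings_module can be pictured as a single parameter object. The sketch below is hypothetical: the class name, field names, default values and folder names are assumptions, not the actual contents of set_params.py. Dropout is expressed as a keep probability, following the TensorFlow 1.x `tf.nn.dropout(keep_prob=...)` convention.

```python
import os


class ModelParams(object):
    """Hypothetical stand-in for the settings kept in set_params.py.

    Field names, folder names and defaults are illustrative assumptions only.
    """

    def __init__(self, utility_dir="utility_dir", hidden_dim=128,
                 attention_dim=64, reg_constant=1e-4, keep_prob=0.8):
        # Directory paths, all rooted under the utility directory
        self.data_path = os.path.join(utility_dir, "data")
        self.vocab_path = os.path.join(utility_dir, "vocab")
        self.model_path = os.path.join(utility_dir, "models")
        self.log_path = os.path.join(utility_dir, "logs")
        # Model hyperparameters
        self.hidden_dim = hidden_dim
        self.attention_dim = attention_dim
        self.reg_constant = reg_constant  # L2 regularization weight
        self.keep_prob = keep_prob        # dropout keep probability
        self.validate()

    def validate(self):
        """Reject settings that would make the model ill-defined."""
        if self.hidden_dim <= 0 or self.attention_dim <= 0:
            raise ValueError("dimensions must be positive")
        if not 0.0 < self.keep_prob <= 1.0:
            raise ValueError("keep_prob must be in (0, 1]")


params = ModelParams(hidden_dim=32)
```

Validating in the constructor means a bad edit to the settings fails immediately, before any training starts.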

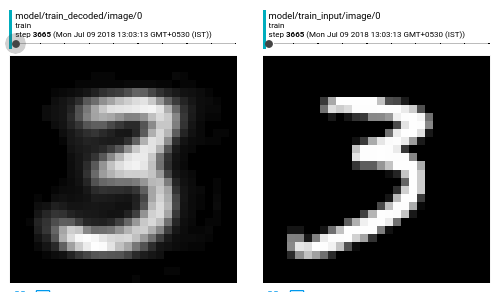

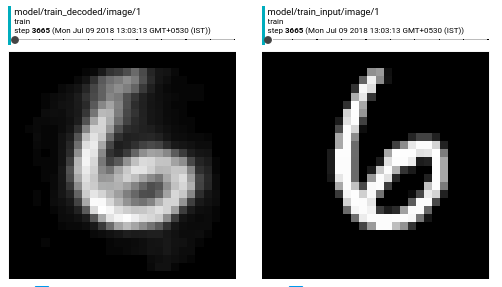

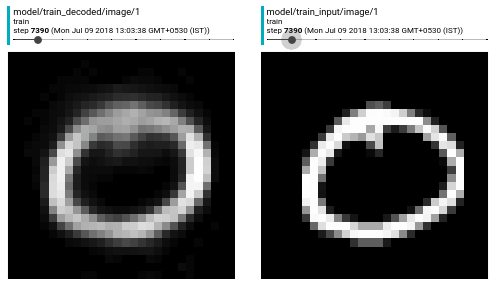

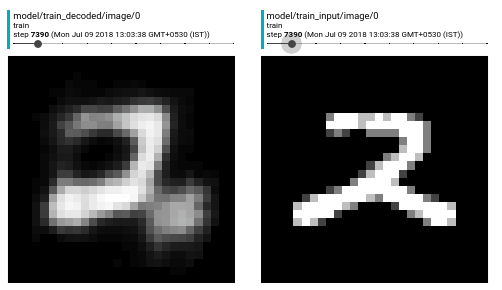

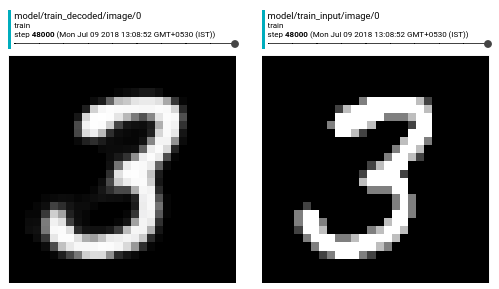

Decoded images and the corresponding input images from the training set at different training steps:

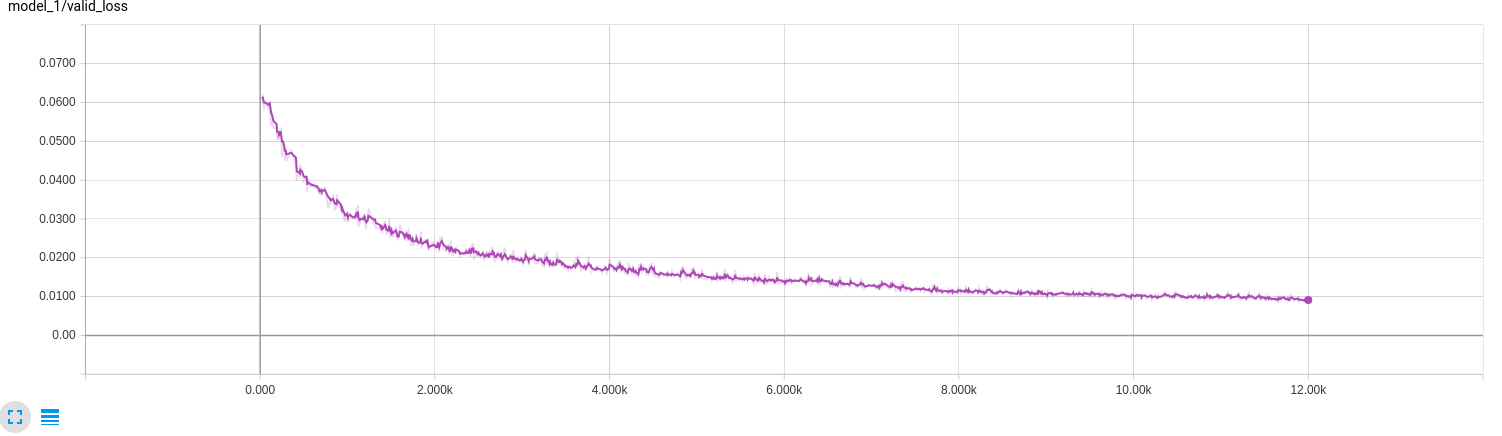

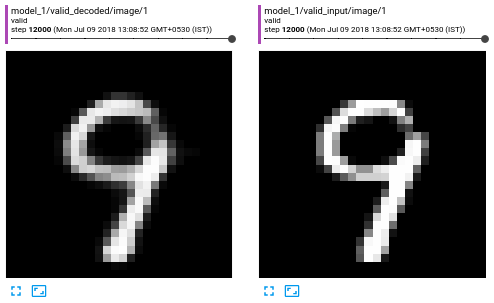

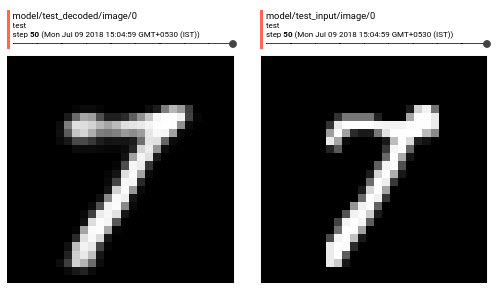
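Figures like these place each input next to its reconstruction. A minimal NumPy sketch of building such a side-by-side grid from flattened images and scoring each pair by mean squared error. The image height and width (for example 28 x 28 for MNIST-style digits) and the flattened storage layout are assumptions, and the function names are hypothetical.

```python
import numpy as np


def comparison_grid(inputs, decoded, height, width):
    """Tile flattened inputs (top row) above their reconstructions (bottom row)."""
    top = np.hstack([np.reshape(img, (height, width)) for img in inputs])
    bottom = np.hstack([np.reshape(img, (height, width)) for img in decoded])
    return np.vstack([top, bottom])


def per_image_mse(inputs, decoded):
    """Mean squared reconstruction error of each input/decoded pair."""
    diff = np.asarray(inputs, dtype=float) - np.asarray(decoded, dtype=float)
    return np.mean(diff ** 2, axis=1)


# Usage: three flattened 4 x 4 images and their (here constant) reconstructions
inputs = np.zeros((3, 16))
decoded = np.full((3, 16), 0.5)
grid = comparison_grid(inputs, decoded, 4, 4)  # 2 rows of 3 tiles -> shape (8, 12)
errors = per_image_mse(inputs, decoded)
```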

Decoded images and the corresponding input images from the validation set:

Decoded images and the corresponding input images from the test set:

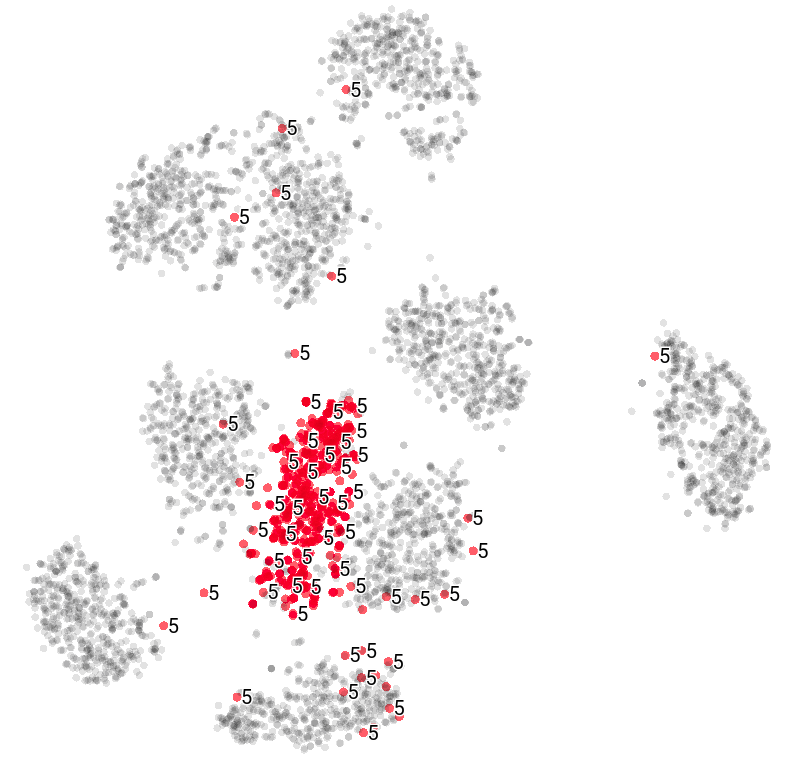

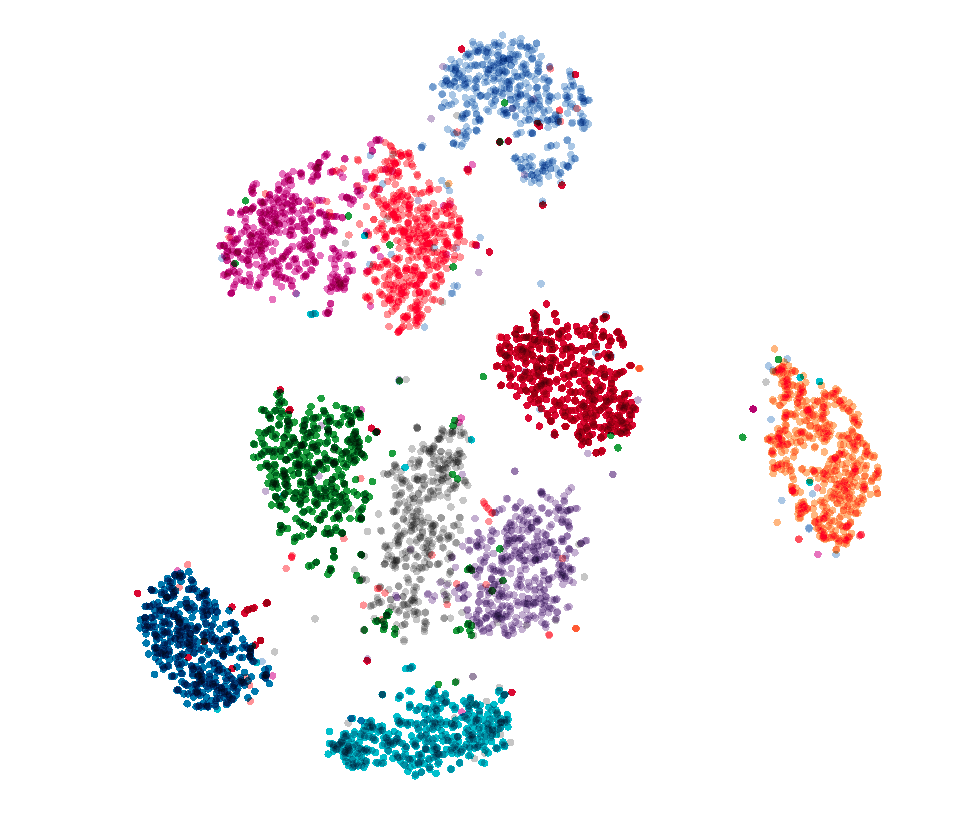

t-SNE representation of the latent features of images in the test set:

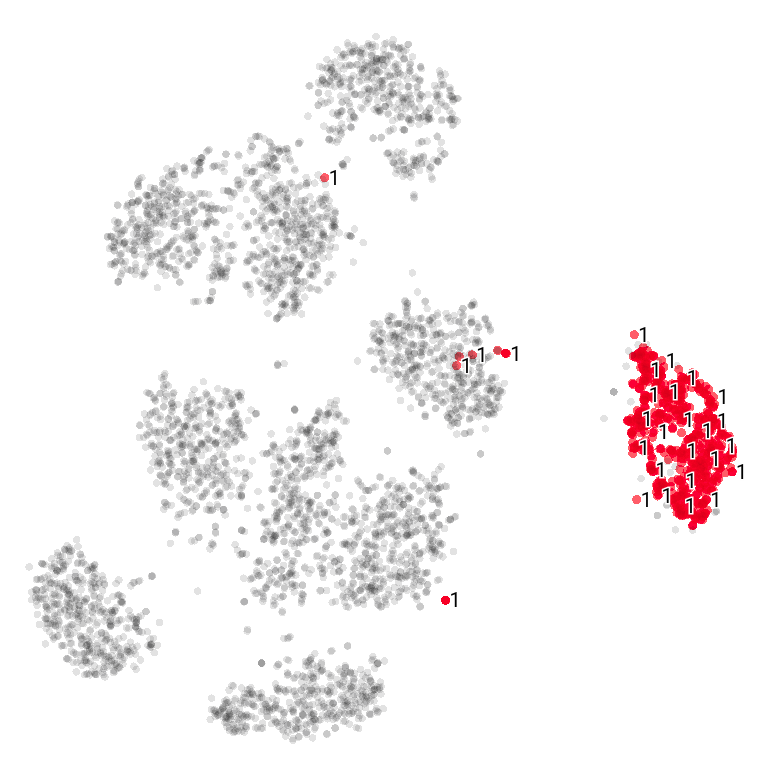

Points corresponding to the latent features of test-set images labelled '1', highlighted in the t-SNE representation:

Points corresponding to the latent features of test-set images labelled '5', highlighted in the t-SNE representation:
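Highlighting one class amounts to filtering the 2-D t-SNE coordinates by label. A small NumPy sketch, assuming the coordinates and labels are stored in the same order. The t-SNE projection itself is not reproduced here, and the helper name is hypothetical.

```python
import numpy as np


def points_for_label(coords, labels, target):
    """Return the 2-D t-SNE points whose label equals `target`, and their centroid.

    If no point matches, the centroid is NaN (NumPy warns about the empty mean).
    """
    coords = np.asarray(coords, dtype=float)
    mask = np.asarray(labels) == target
    selected = coords[mask]
    return selected, selected.mean(axis=0)


# Usage: four projected points labelled 1, 5, 1, 5
coords = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
labels = [1, 5, 1, 5]
ones, ones_center = points_for_label(coords, labels, 1)  # points 0 and 2
```

Plotting `ones` on top of all points in a contrasting colour yields a view like the ones described above.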