
Install the following additional Lua libraries:

luarocks install nn
luarocks install rnn
luarocks install penlight

To train with CUDA, install the latest CUDA drivers and toolkit, then run:

luarocks install cutorch
luarocks install cunn

To train with OpenCL, install the latest OpenCL Torch libraries:

luarocks install cltorch
luarocks install clnn
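How the backend is actually selected is up to the project's own scripts (the extraction command, for example, takes a --cuda flag). As a rough sketch only, a Lua script can probe which of the rocks above are installed with `pcall(require, ...)`, which catches the error `require` raises for a missing module. The `pickBackend` helper is hypothetical and not part of this repo:

```lua
-- Hypothetical helper (not part of this repo): report which backend can be
-- used, based on which of the rocks listed above load successfully.
-- `requireFn` defaults to Lua's `require`; it is injectable for testing.
local function pickBackend(requireFn)
  requireFn = requireFn or require

  -- True only if every named module loads; pcall swallows the error
  -- that `require` raises when a module is missing.
  local function has(...)
    for _, name in ipairs({...}) do
      if not pcall(requireFn, name) then return false end
    end
    return true
  end

  if has("cutorch", "cunn") then return "cuda" end
  if has("cltorch", "clnn") then return "opencl" end
  return "cpu"
end

print("Detected backend: " .. pickBackend())
```

If it reports "cuda", a Torch model would typically be moved to the GPU with `model:cuda()` after `require 'cunn'`.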


Download the Cornell Movie-Dialogs Corpus and extract all the files into data/cornell_movie_dialogs.
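A quick pre-flight check can confirm that the dialogue files landed directly in data/cornell_movie_dialogs before a long training run starts. This is a hypothetical sketch: movie_lines.txt and movie_conversations.txt are the corpus's standard dialogue files, but which files train.lua actually reads is an assumption, and `missingCorpusFiles` is an illustrative name:

```lua
-- Hypothetical pre-flight check: return the names in `files` that cannot be
-- opened inside `dir`. An empty result means all files were found.
local function missingCorpusFiles(dir, files)
  local missing = {}
  for _, name in ipairs(files) do
    local f = io.open(dir .. "/" .. name, "r")
    if f then
      f:close()
    else
      missing[#missing + 1] = name
    end
  end
  return missing
end

-- Assumed file names; adjust if train.lua expects different ones.
local missing = missingCorpusFiles("data/cornell_movie_dialogs",
  {"movie_lines.txt", "movie_conversations.txt"})
if #missing > 0 then
  print("Missing corpus files: " .. table.concat(missing, ", "))
end
```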

To train the model, run:

th train.lua [-h / options]

Use the --dataset NUMBER option to control the size of the dataset. Training on the full dataset takes about 5 hours per epoch.

After each epoch, the model is saved to data/model.t7 if it has improved (i.e., if the error decreased).
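The save-on-improvement rule amounts to tracking the best error seen so far. A minimal sketch, assuming a per-epoch error value is available; `makeCheckpointer` is a hypothetical name, not train.lua's actual code, and `saveFn` stands in for `torch.save` so the logic runs in plain Lua:

```lua
-- Hypothetical sketch of "save after each epoch only if the error decreased".
-- `saveFn(path, model)` stands in for torch.save(path, model).
local function makeCheckpointer(path, saveFn)
  local bestErr = math.huge  -- nothing saved yet, so any finite error improves
  return function(model, epochErr)
    if epochErr < bestErr then
      bestErr = epochErr
      saveFn(path, model)
      return true   -- improved: model written to `path`
    end
    return false    -- no improvement: previous file is left untouched
  end
end
```

In a Torch training loop the closure would be created once, e.g. `local checkpoint = makeCheckpointer("data/model.t7", torch.save)`, and called as `checkpoint(model, epochError)` at the end of every epoch (`epochError` is a hypothetical variable). The comparison is strict, so an epoch that only matches the best error does not overwrite the file.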

Download:

Put them into the data directory.

Run the following command to extract embeddings for the sentences in data/test_sentences.txt:

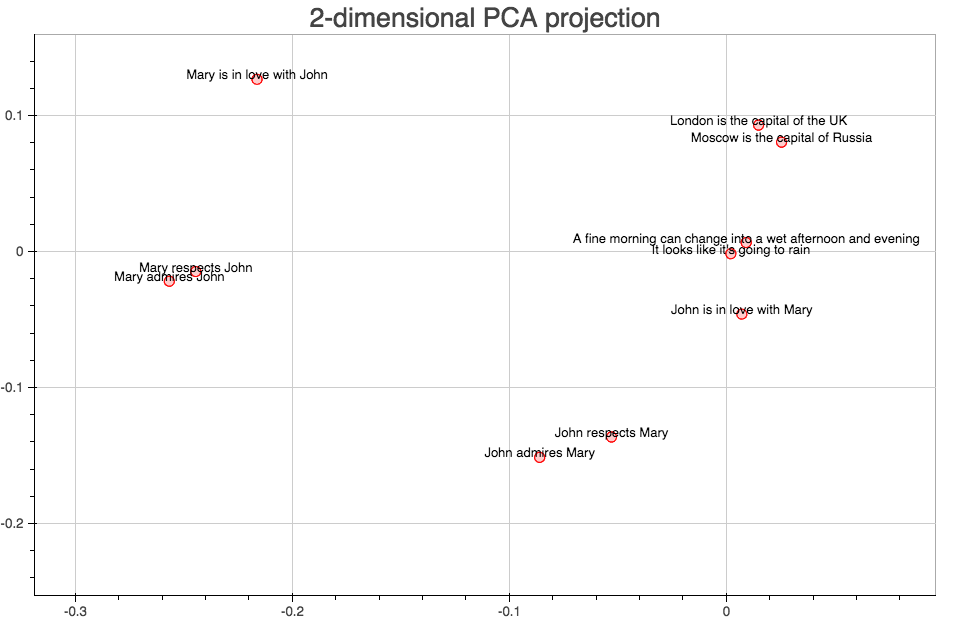

th -i extract_embeddings.lua --model_file data/model.t7 --input_file data/test_sentences.txt --output_file data/embeddings.t7 --cuda

To visualize 2D projections of the embeddings, refer to example.ipynb.
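Once data/embeddings.t7 exists, sentence embeddings are commonly compared with cosine similarity. The sketch below works on plain Lua arrays; it assumes (unverified) that the file deserializes to a 2D tensor with one row per line of data/test_sentences.txt, so that each row can be converted with `:totable()`. `cosine` and `nearest` are hypothetical helpers:

```lua
-- Cosine similarity between two equal-length numeric arrays.
local function cosine(a, b)
  assert(#a == #b, "vectors must have the same length")
  local dot, na, nb = 0, 0, 0
  for i = 1, #a do
    dot = dot + a[i] * b[i]
    na = na + a[i] * a[i]
    nb = nb + b[i] * b[i]
  end
  -- Undefined for zero vectors; return 0 by convention here.
  if na == 0 or nb == 0 then return 0 end
  return dot / (math.sqrt(na) * math.sqrt(nb))
end

-- Index (and score) of the row in `rows` most similar to `query`.
local function nearest(query, rows)
  local bestIdx, bestSim = nil, -math.huge
  for i, row in ipairs(rows) do
    local s = cosine(query, row)
    if s > bestSim then bestIdx, bestSim = i, s end
  end
  return bestIdx, bestSim
end
```

Under the assumption above, `local emb = torch.load("data/embeddings.t7")` followed by `cosine(emb[1]:totable(), emb[2]:totable())` would score the first two test sentences against each other.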

This implementation uses code from Marc-André Cournoyer's repo.

MIT License