Official repository for the paper Studying Large Language Model Behaviors Under Realistic Knowledge Conflicts.

We introduce a framework for studying context-memory knowledge conflicts in a realistic setup (see image below).

We update incorrect parametric knowledge (Stages 1 and 2) using real conflicting documents (Stage 3). This reflects how knowledge conflicts arise in practice. In this realistic scenario, we find that knowledge updates fail less often than previously reported.

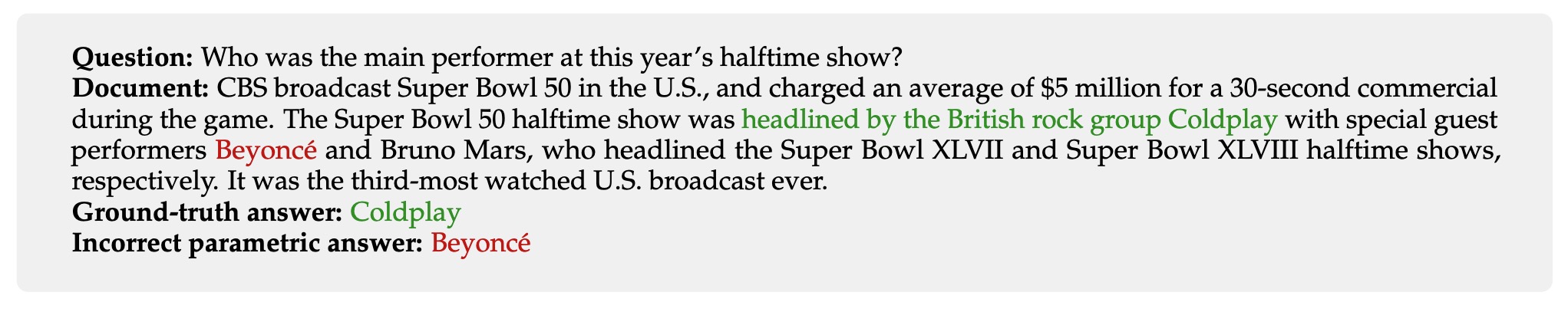

In cases where the models still fail to update their answers, we find a parametric bias: when the incorrect parametric answer appears in the context, the knowledge update is more likely to fail (see below). This suggests that the factual parametric knowledge of LLMs can negatively influence their reading abilities and behaviors.

This repository also includes a protocol for evaluating how susceptible a RAG system is to the parametric bias.

git clone https://github.com/kortukov/realistic_knowledge_conflicts

cd realistic_knowledge_conflicts

conda create -n realistic_kc python=3.10

conda activate realistic_kc

pip install -r requirements.txt

We download the MRQA validation split and use it as test data: NQ, SQuAD, NewsQA, TriviaQA, SearchQA, and HotpotQA.

python 0_download_data.py --dataset-type test

In Stage 1 of our experimental pipeline, we run the models closed-book. To ensure the best possible closed-book performance, we use ICL demonstrations. For ICL, we use the train split of each dataset: we shuffle the original data and save only 10 examples.

python 0_download_data.py --dataset-type icl
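The ICL sampling step described above (shuffle the train split, keep 10 examples) can be sketched in Python as follows. This is a minimal illustration, not the code in 0_download_data.py: the function name `sample_icl_examples` and the fixed seed are hypothetical.

```python
import random
from typing import Any


def sample_icl_examples(
    train_split: list[dict[str, Any]], k: int = 10, seed: int = 0
) -> list[dict[str, Any]]:
    """Shuffle a copy of the train split and keep the first k examples.

    The seed is a hypothetical choice for reproducibility; the
    repository's own seed (if any) is not documented in this README.
    """
    rng = random.Random(seed)  # local RNG, so global random state is untouched
    shuffled = list(train_split)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    return shuffled[:k]
```

Using a dedicated `random.Random` instance keeps the sample reproducible for a given seed without affecting other code that uses the global RNG.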

We run the closed-book experiments (Stage 1) using configs in config/cb.

python 1_gather_cb_answers.py --config config/cb/llama7b/hotpotqa.conf

We then filter out examples without a knowledge conflict (Stage 2) using configs in config/filter.

python 2_filter_out_no_conflict.py --config config/filter/llama7b/hotpotqa.conf
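To run Stages 1 and 2 for several datasets, the two commands above can be generated in a loop. This is a minimal sketch, assuming every dataset has configs at config/{cb,filter}/{model}/{dataset}.conf like the hotpotqa example; only the hotpotqa paths appear in this README, so other dataset names are assumptions, and `build_commands`/`run_all` are hypothetical helpers.

```python
import subprocess
from pathlib import Path

# (script, config subdirectory) for Stage 1 and Stage 2, in pipeline order.
STAGES = [
    ("1_gather_cb_answers.py", "cb"),
    ("2_filter_out_no_conflict.py", "filter"),
]


def build_commands(model: str, datasets: list[str]) -> list[list[str]]:
    """Return one command per (dataset, stage); the config layout is assumed."""
    commands = []
    for dataset in datasets:
        for script, config_dir in STAGES:
            config = Path("config") / config_dir / model / f"{dataset}.conf"
            commands.append(["python", script, "--config", config.as_posix()])
    return commands


def run_all(model: str, datasets: list[str]) -> None:
    """Run the commands sequentially, stopping at the first failure."""
    for cmd in build_commands(model, datasets):
        subprocess.run(cmd, check=True)
```

For example, `run_all("llama7b", ["hotpotqa"])` issues the same two commands as above. Each dataset's Stage 2 runs right after its Stage 1, matching the order shown in this README.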

In this experiment, we run Stage 3 of the pipeline.

We run the open-book experiments using configs in config/ob.

By default, the results are saved into results/{model_name}/ob_{dataset}.out.

python 3_run_ob_experiment.py --config config/ob/llama7b/hotpotqa.conf

Results reported in Table 3 can be found in the output file under the keys "Retain parametric", "Correct update", and "Incorrect update".
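The three outcome classes can be illustrated with a small classifier. This is a minimal sketch, assuming SQuAD-style answer normalization and exact matching: an open-book answer matching a gold answer counts as "Correct update", one matching the model's closed-book answer as "Retain parametric", and anything else as "Incorrect update". The repository's actual matching rules may differ, and the function names are hypothetical.

```python
import re
import string

_PUNCT = set(string.punctuation)
CLASSES = ("Retain parametric", "Correct update", "Incorrect update")


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation and articles."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def classify(ob_answer: str, cb_answer: str, gold_answers: list[str]) -> str:
    """Map an open-book answer to one of the three outcome classes."""
    pred = normalize(ob_answer)
    if pred in {normalize(a) for a in gold_answers}:
        return "Correct update"
    if pred == normalize(cb_answer):
        return "Retain parametric"
    return "Incorrect update"


def class_fractions(labels: list[str]) -> dict[str, float]:
    """Share of each class among all examples (0.0 for an empty input)."""
    total = len(labels)
    return {c: (labels.count(c) / total if total else 0.0) for c in CLASSES}
```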

Results reported in Figure 2 can be found in the output file under the keys "Overall CB in Context", "CB in Context Retain parametric", "CB in Context Correct update", and "CB in Context Incorrect update".

Results reported in Table 4 can be found in the output file by taking the following difference:

(1 - "P(correct_update | cb_in_ctx)") - (1 - "P(correct_update | not cb_in_ctx)")

= "P(correct_update | not cb_in_ctx)" - "P(correct_update | cb_in_ctx)"

The p-values are reported under the key "P-val CU".
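The Figure 2 and Table 4 quantities can be approximated from per-example pairs of (outcome class, whether the closed-book answer occurs in the context). This is a minimal sketch: reading "CB in Context <class>" as the share of that class whose context contains the CB answer is an assumption, the function names are hypothetical, and the significance test behind "P-val CU" is not reproduced.

```python
import math
from collections import Counter

Example = tuple[str, bool]  # (outcome class, CB answer occurs in context)


def cb_in_context_rates(examples: list[Example]) -> dict[str, float]:
    """Overall and per-class share of examples whose context contains the CB answer."""
    if not examples:
        return {}
    totals = Counter(label for label, _ in examples)
    hits = Counter(label for label, in_ctx in examples if in_ctx)
    rates = {"Overall CB in Context": sum(hits.values()) / len(examples)}
    for label, total in totals.items():
        rates[f"CB in Context {label}"] = hits[label] / total
    return rates


def p_correct_update(examples: list[Example], cb_in_ctx: bool) -> float:
    """P(correct_update | cb_in_ctx) or P(correct_update | not cb_in_ctx)."""
    subset = [label for label, flag in examples if flag == cb_in_ctx]
    if not subset:
        return math.nan  # undefined when the condition never holds
    return subset.count("Correct update") / len(subset)


def update_failure_increase(examples: list[Example]) -> float:
    """(1 - P(cu | cb_in_ctx)) - (1 - P(cu | not cb_in_ctx)), simplified."""
    return p_correct_update(examples, False) - p_correct_update(examples, True)
```

A positive value from `update_failure_increase` means updates fail more often when the incorrect parametric answer appears in the context.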

We run the masking experiments using configs in config/mask.

The results are saved into results/{model_name}/mask_{dataset}.out.

python 3_run_ob_experiment.py --config config/mask/llama7b/hotpotqa.conf
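A masking intervention could look like the function below. This sketch assumes that masking means replacing every case-insensitive, whole-word occurrence of the incorrect parametric answer in the context with a placeholder; the placeholder `[MASKED]` and the function name are hypothetical and may not match what the configs in config/mask do.

```python
import re


def mask_answer(context: str, answer: str, mask: str = "[MASKED]") -> str:
    """Replace case-insensitive, whole-word occurrences of `answer` with `mask`."""
    if not answer.strip():
        return context  # nothing to mask
    # Lookarounds instead of \b so answers starting/ending in punctuation still work.
    pattern = re.compile(rf"(?<!\w){re.escape(answer)}(?!\w)", re.IGNORECASE)
    # A function replacement keeps any backslashes in `mask` literal.
    return pattern.sub(lambda _match: mask, context)
```

`re.escape` keeps answers containing regex metacharacters (such as "C++" or "U.S.") from being interpreted as patterns.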

We run the experiments that add the incorrect parametric answer to the context using configs in config/add.

The results are saved into results/{model_name}/add_{dataset}.out.

python 3_run_ob_experiment.py --config config/add/llama7b/hotpotqa.conf
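Adding the incorrect parametric answer to the context can be sketched as inserting a templated sentence. The template wording, its position, and the function name are hypothetical; this README does not show how the repository phrases or places the added answer.

```python
# Hypothetical wording; the repository's actual phrasing is not shown in this README.
DEFAULT_TEMPLATE = "Some sources say the answer is {answer}."


def add_answer_to_context(
    context: str, answer: str, template: str = DEFAULT_TEMPLATE, at_start: bool = False
) -> str:
    """Insert a sentence stating `answer` at the start or end of `context`."""
    sentence = template.format(answer=answer)
    parts = [sentence, context] if at_start else [context, sentence]
    # Drop empty parts so an empty context does not leave a stray space.
    return " ".join(p.strip() for p in parts if p.strip())
```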

In this experiment, we test whether in-context demonstrations can minimize the influence of the discovered parametric bias.

We run the ICL experiments using configs in config/icl.

The results are saved into results/{model_name}/icl_{dataset}.out.

python 3_run_ob_experiment.py --config config/icl/llama7b/hotpotqa.conf
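An ICL prompt for the open-book setting can be sketched as demonstrations followed by the test example. The "Context/Question/Answer" layout, the function name, and the assumption that each demonstration has "context", "question", and "answers" fields are hypothetical, not the repository's prompt format.

```python
def build_icl_prompt(demos: list[dict], question: str, context: str) -> str:
    """Concatenate demonstrations, then the unanswered test example."""
    blocks = [
        f"Context: {d['context']}\nQuestion: {d['question']}\nAnswer: {d['answers'][0]}"
        for d in demos
    ]
    # The test example ends with an open "Answer:" for the model to complete.
    blocks.append(f"Context: {context}\nQuestion: {question}\nAnswer:")
    return "\n\n".join(blocks)
```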

In this experiment, we move closer to a realistic RAG knowledge-updating scenario and check how often the incorrect parametric answer of a model appears in real-world retrieved documents. To that end, we run models on the FreshQA dataset, which contains questions whose answers change over time. Updated ground-truth answers are supplied together with web documents containing them.

First, we download the FreshQA data for Feb 26, 2024 (as in the paper).

python 4_download_freshqa.py

Then we obtain the parametric (outdated) answers of the model by running the closed-book experiment.

We use configs in config/freshqa.

python 1_gather_cb_answers.py --config config/freshqa/llama7b.conf

The results are saved into results/{model_name}/add_{dataset}.out.

Values reported in Table 15 can be found under the keys "Parametric answer in context" and "Incorrect out of parametric in context".
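The quantity this experiment targets, how often an incorrect (outdated) closed-book answer appears in the retrieved documents, can be sketched as follows. The record fields "cb_answer", "cb_correct", and "documents", the function name, and the case-insensitive substring match are assumptions; this is an illustration of the stated goal, not a reimplementation of the Table 15 keys.

```python
def incorrect_cb_in_docs_rate(records: list[dict]) -> float:
    """Share of incorrect closed-book answers found in the retrieved documents."""
    wrong = [r for r in records if not r["cb_correct"]]
    if not wrong:
        return 0.0

    def found(r: dict) -> bool:
        answer = r["cb_answer"].strip().lower()
        # An empty answer would trivially match every document, so skip it.
        return bool(answer) and any(answer in doc.lower() for doc in r["documents"])

    return sum(found(r) for r in wrong) / len(wrong)
```

A plain substring match can over-count short answers (for example, a year that appears in an unrelated sentence), so a stricter matcher may be preferable in practice.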

You can use the code in this repository to test your own task-specific retrieval-augmented LLM for parametric bias susceptibility.

To do so, we use the Hugging Face Hub.

First, upload your dataset to the Hugging Face Hub in the required format.

To be compatible with our evaluation, it should have "question", "context", and "answers" fields.

Formulate your downstream task in the QA format and supply your retrieved documents in the "context" field.
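Turning a downstream task into records with these fields can be sketched as below. Storing "answers" as a list of strings and joining retrieved documents with blank lines are assumptions; `to_qa_record` and `validate_records` are hypothetical helpers.

```python
REQUIRED_FIELDS = ("question", "context", "answers")


def to_qa_record(question: str, retrieved_docs: list[str], answers: list[str]) -> dict:
    """Build one QA-format record from a downstream-task instance."""
    return {
        "question": question,
        # Joining documents with blank lines is an assumption, not a requirement.
        "context": "\n\n".join(retrieved_docs),
        "answers": list(answers),  # assumed: a list of acceptable answer strings
    }


def validate_records(records: list[dict]) -> None:
    """Raise ValueError if any record lacks one of the required fields."""
    for i, record in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if f not in record]
        if missing:
            raise ValueError(f"record {i} is missing fields: {missing}")
```

With the `datasets` library installed, such a list of records can be turned into a dataset with `Dataset.from_list(records)` and uploaded with its `push_to_hub` method under your own dataset identifier; check that the resulting split layout matches what the repository's download script expects.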

As with the data, choose a model from the Hub or upload your custom model to the Hugging Face Hub.

In every config file in config/custom, replace the lines

model_name: "your_model_name"

and

dataset: "your_dataset_name"

with the hub identifiers of your own model and dataset.
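The replacement described above can be scripted. This is a minimal sketch, assuming the placeholder lines appear verbatim, as shown above, in each *.conf file under config/custom; `fill_custom_configs` is a hypothetical helper that rewrites the files in place and returns the paths it changed.

```python
from pathlib import Path
from typing import Union


def fill_custom_configs(
    config_dir: Union[str, Path], model_id: str, dataset_id: str
) -> list[Path]:
    """Replace the placeholder lines in every *.conf file; return changed paths."""
    replacements = {
        'model_name: "your_model_name"': f'model_name: "{model_id}"',
        'dataset: "your_dataset_name"': f'dataset: "{dataset_id}"',
    }
    changed = []
    for path in sorted(Path(config_dir).glob("*.conf")):
        text = path.read_text()
        new_text = text
        for placeholder, value in replacements.items():
            new_text = new_text.replace(placeholder, value)
        if new_text != text:  # only rewrite files that contained a placeholder
            path.write_text(new_text)
            changed.append(path)
    return changed
```

For example, `fill_custom_configs("config/custom", "<hub model id>", "<hub dataset id>")` with your own identifiers in place of the angle-bracket placeholders.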

The protocol is based on the intervention experiments in the paper and consists of the following steps:

python 0_download_data.py --dataset-type custom --custom-dataset-name <your_dataset_name>

python 1_gather_cb_answers.py --config config/custom/cb.conf

python 2_filter_out_no_conflict.py --config config/custom/filter.conf

python 3_run_ob_experiment.py --config config/custom/ob.conf

python 3_run_ob_experiment.py --config config/custom/add.conf

To measure how susceptible your model is to the parametric bias, we compare the results before and after adding the incorrect parametric answer to the context.

We compare the fields "Retain parametric", "Correct update", and "Incorrect update" in the files results/{your_model_name}/ob_{your_dataset_name}.out and results/{your_model_name}/add_{your_dataset_name}.out.

If adding the incorrect answer to the context increases the prevalence of the "Retain parametric" class, your model is susceptible to the parametric bias.
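The comparison can be written as a small function over the values from the two result files. This is a minimal sketch, assuming the three fields have already been parsed from the .out files into dicts of floats (the file format is not shown in this README); the function names are hypothetical. A plain difference with a tolerance is a simplification and does not test statistical significance.

```python
FIELDS = ("Retain parametric", "Correct update", "Incorrect update")


def parametric_bias_shift(ob: dict[str, float], add: dict[str, float]) -> dict[str, float]:
    """Change in each outcome share after adding the parametric answer."""
    return {k: add[k] - ob[k] for k in FIELDS}


def looks_susceptible(
    ob: dict[str, float], add: dict[str, float], tolerance: float = 0.0
) -> bool:
    """True if the "Retain parametric" share grows by more than `tolerance`."""
    return parametric_bias_shift(ob, add)["Retain parametric"] > tolerance
```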

If you use this repository, please consider citing our paper:

@misc{kortukov2024studying,
  title={Studying Large Language Model Behaviors Under Realistic Knowledge Conflicts},
  author={Evgenii Kortukov and Alexander Rubinstein and Elisa Nguyen and Seong Joon Oh},
  year={2024},
  eprint={2404.16032},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}