devops-diplom-yandexcloud

- Goals:

- Stages:

- What is required to submit the assignment?

- How to ask the diploma supervisor questions properly?

- Register a domain name (any name you like, in any domain zone).

- Prepare the infrastructure with Terraform on the YandexCloud cloud provider.

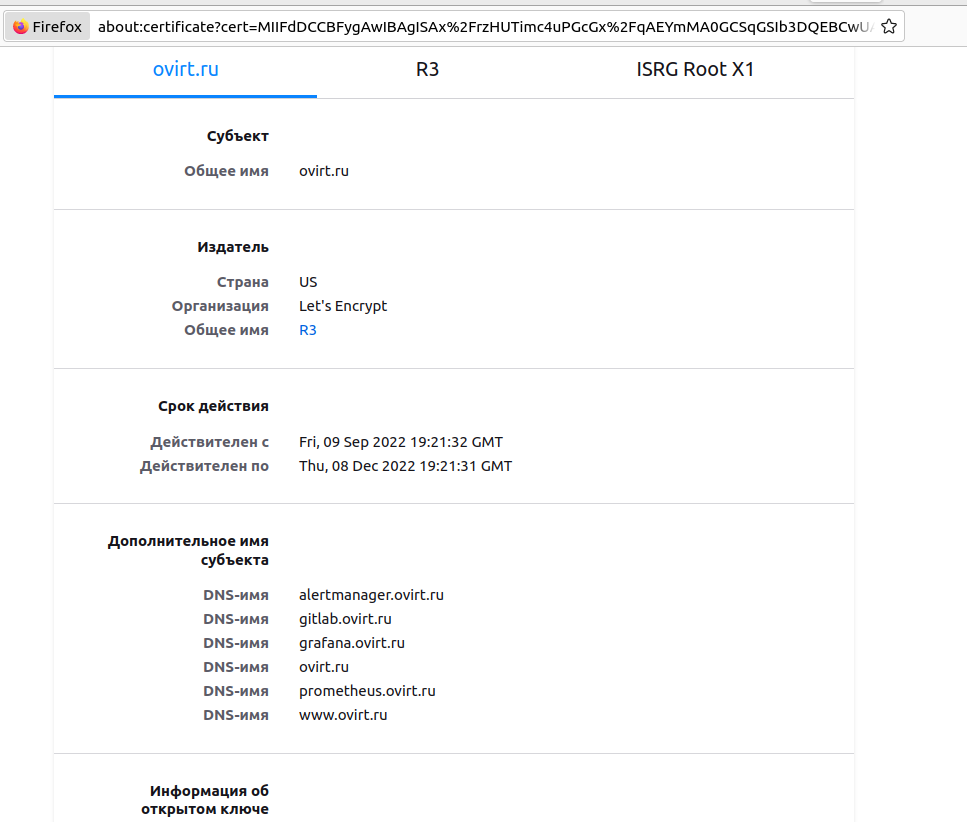

- Set up an external reverse proxy based on Nginx and LetsEncrypt.

- Set up a MySQL cluster.

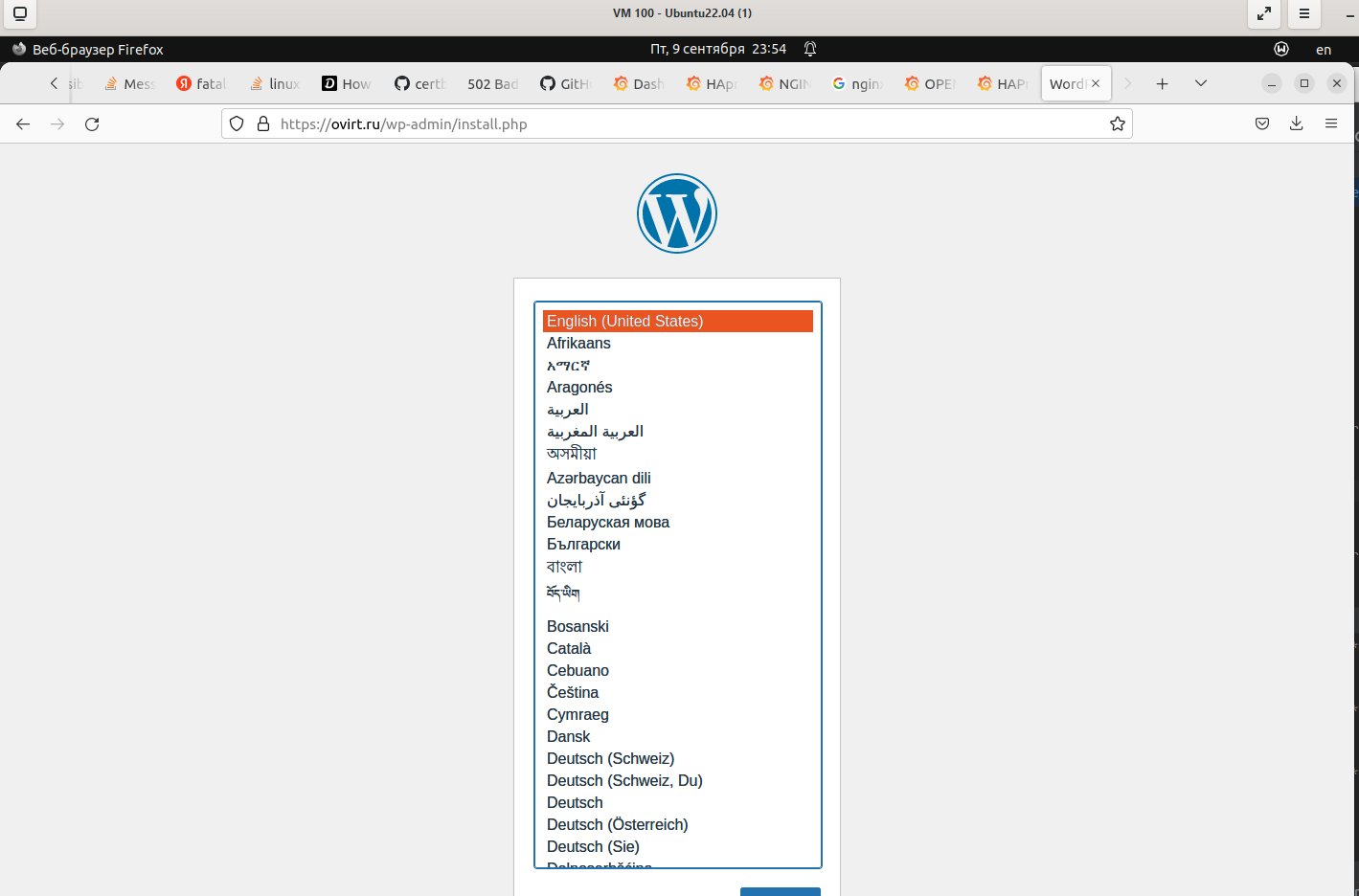

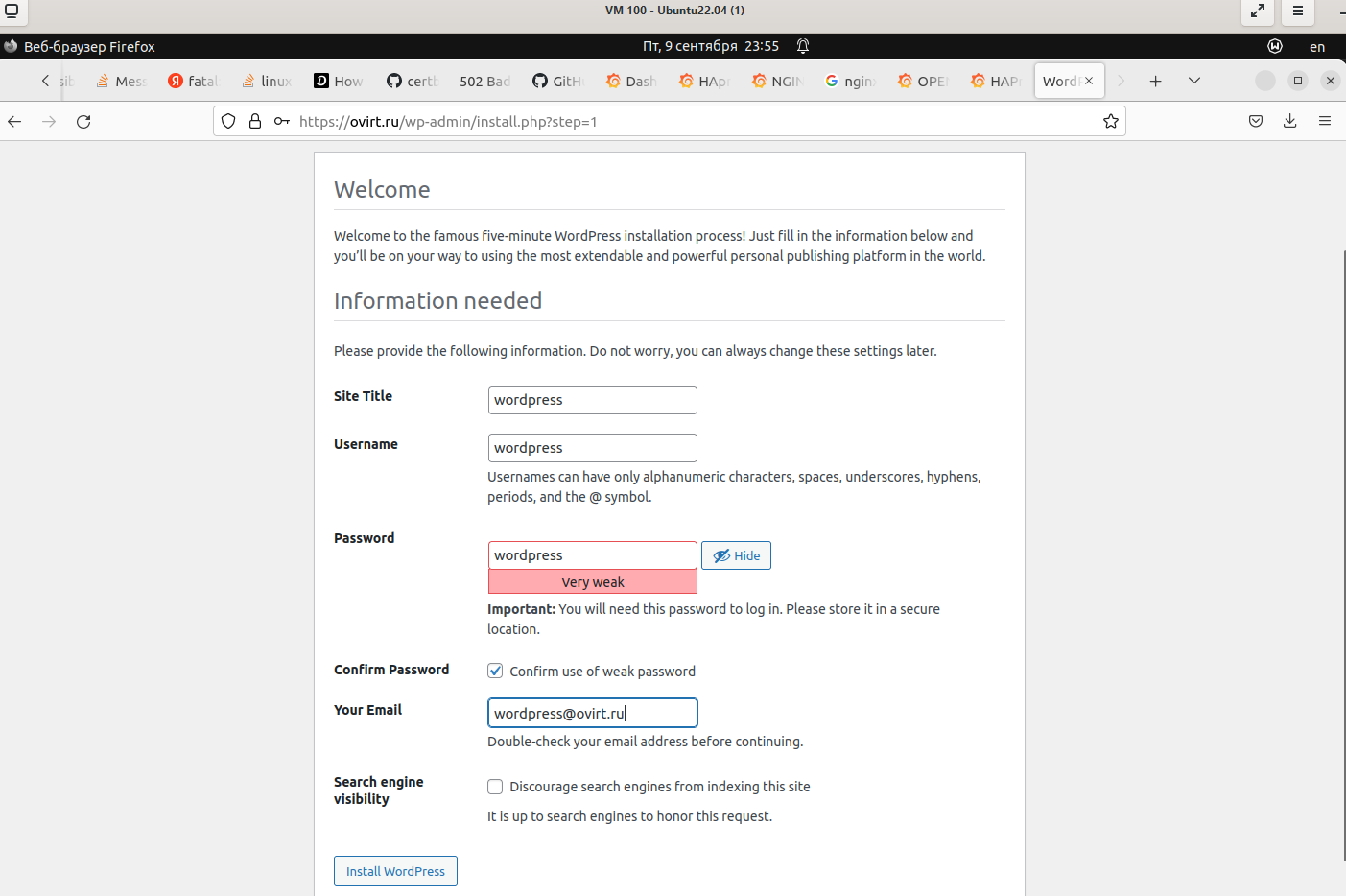

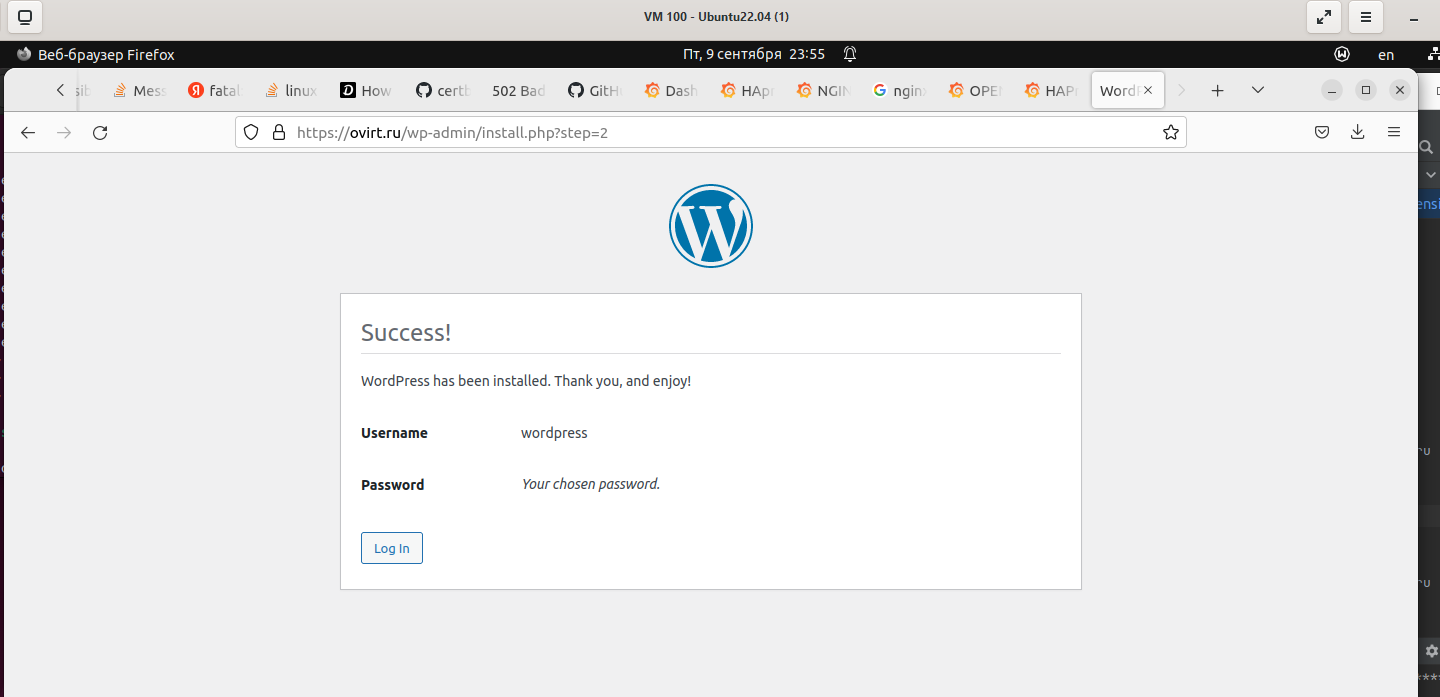

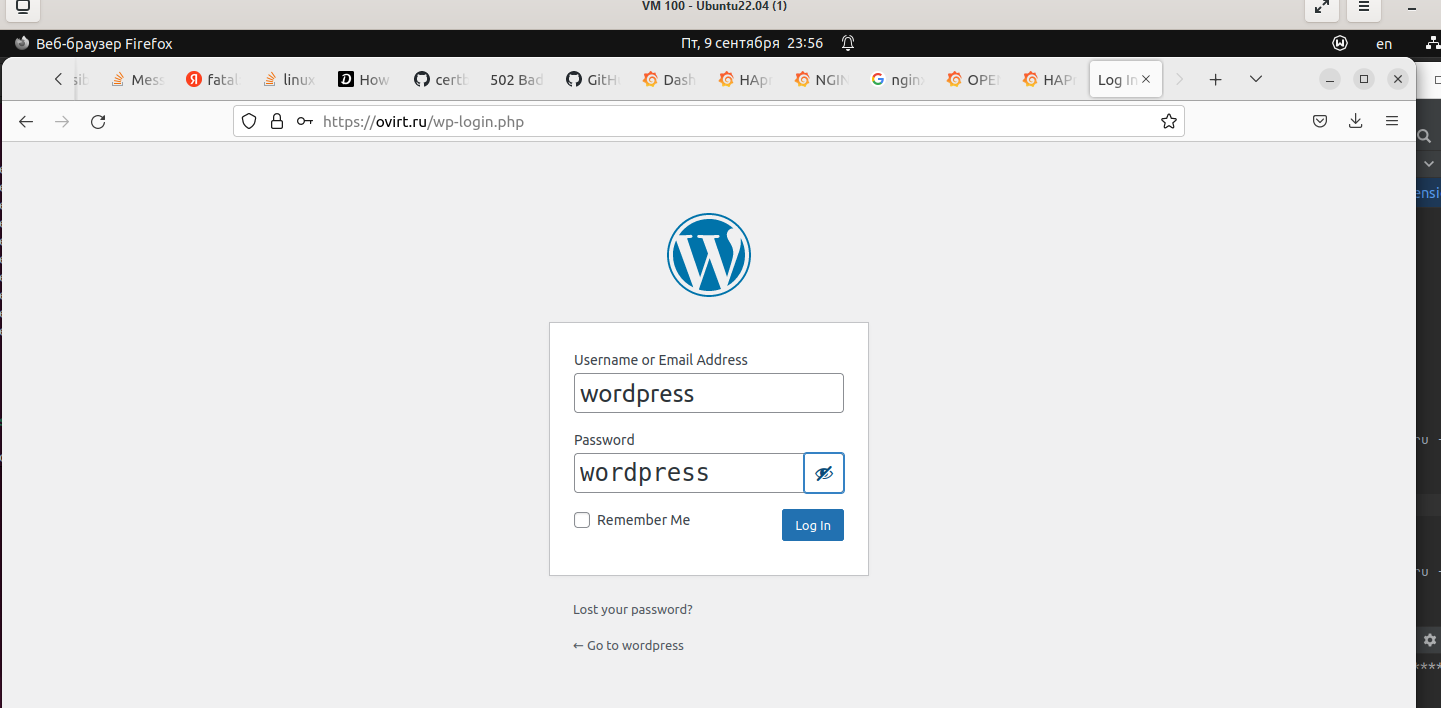

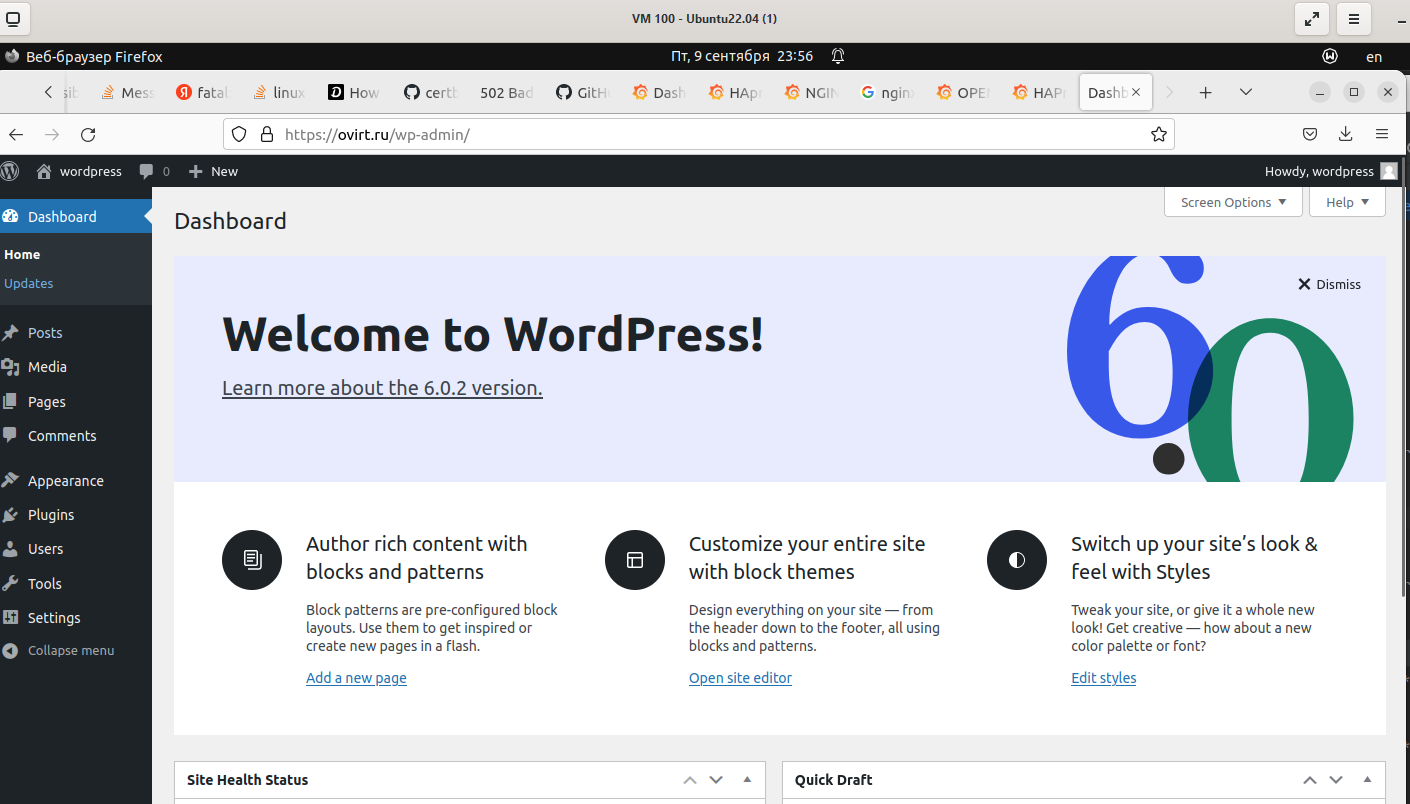

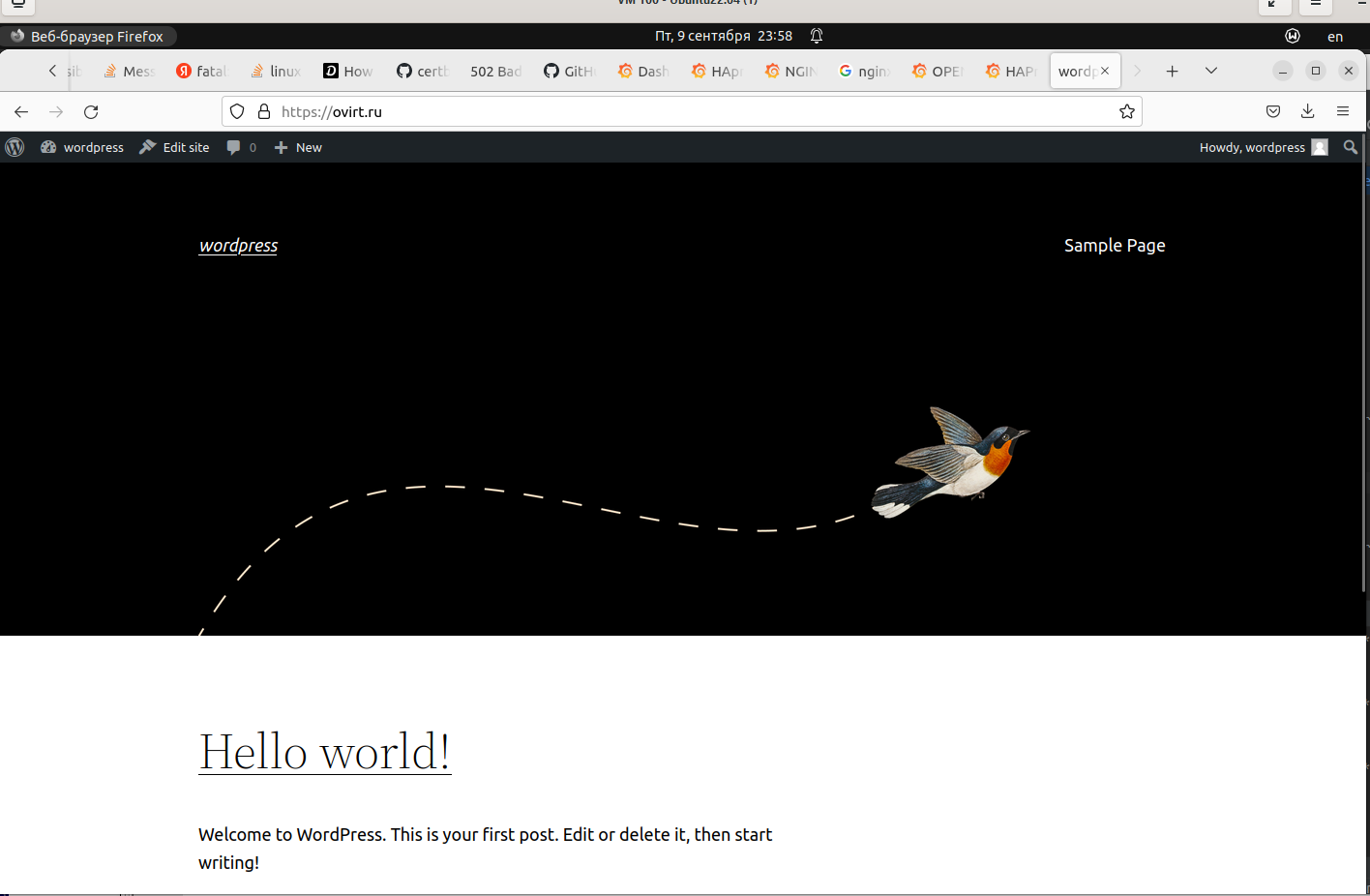

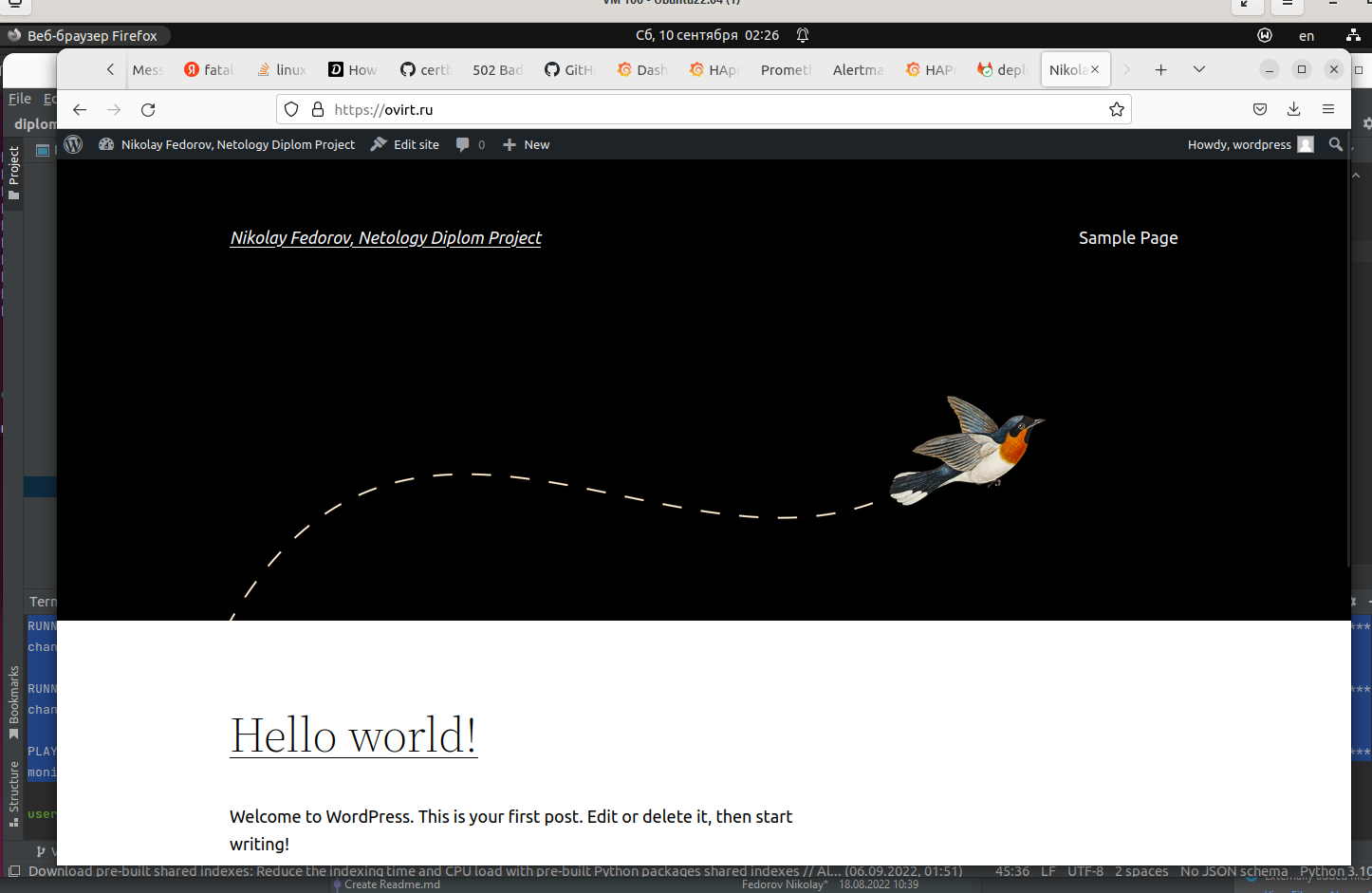

- Install WordPress.

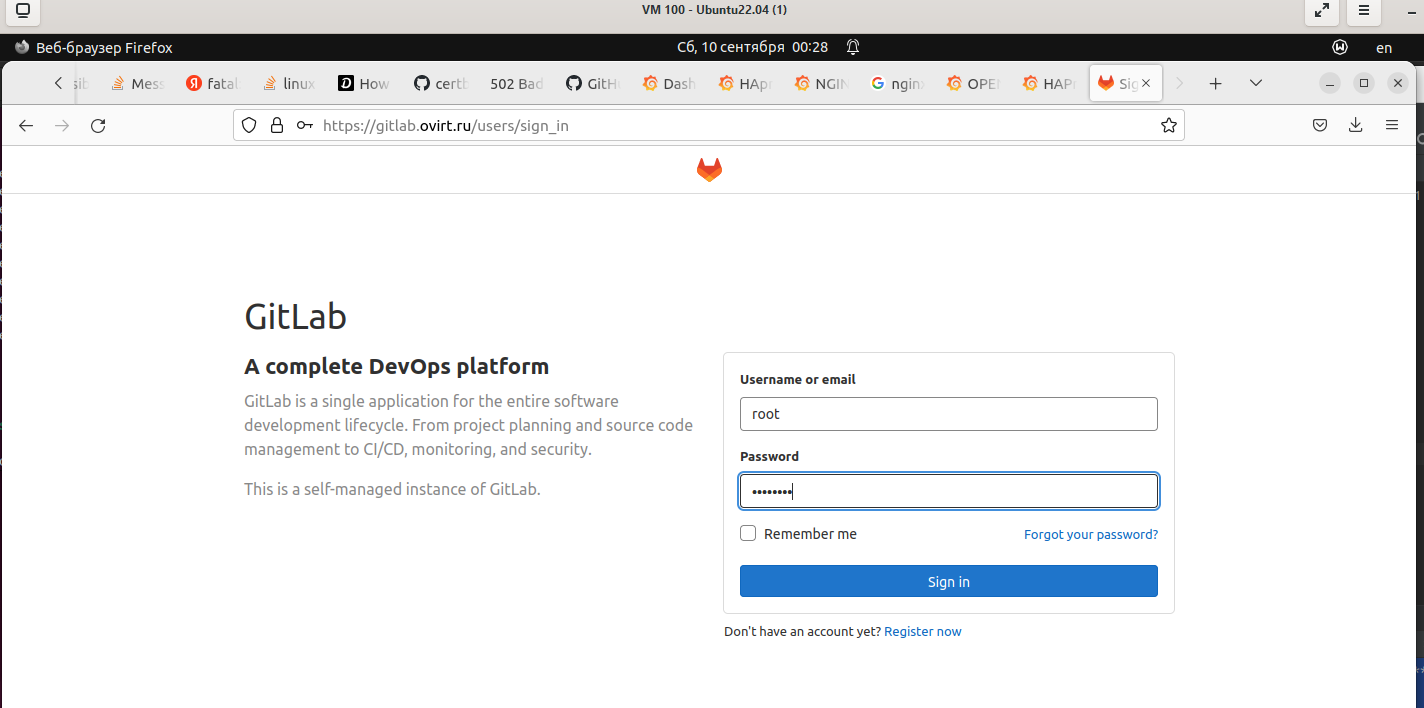

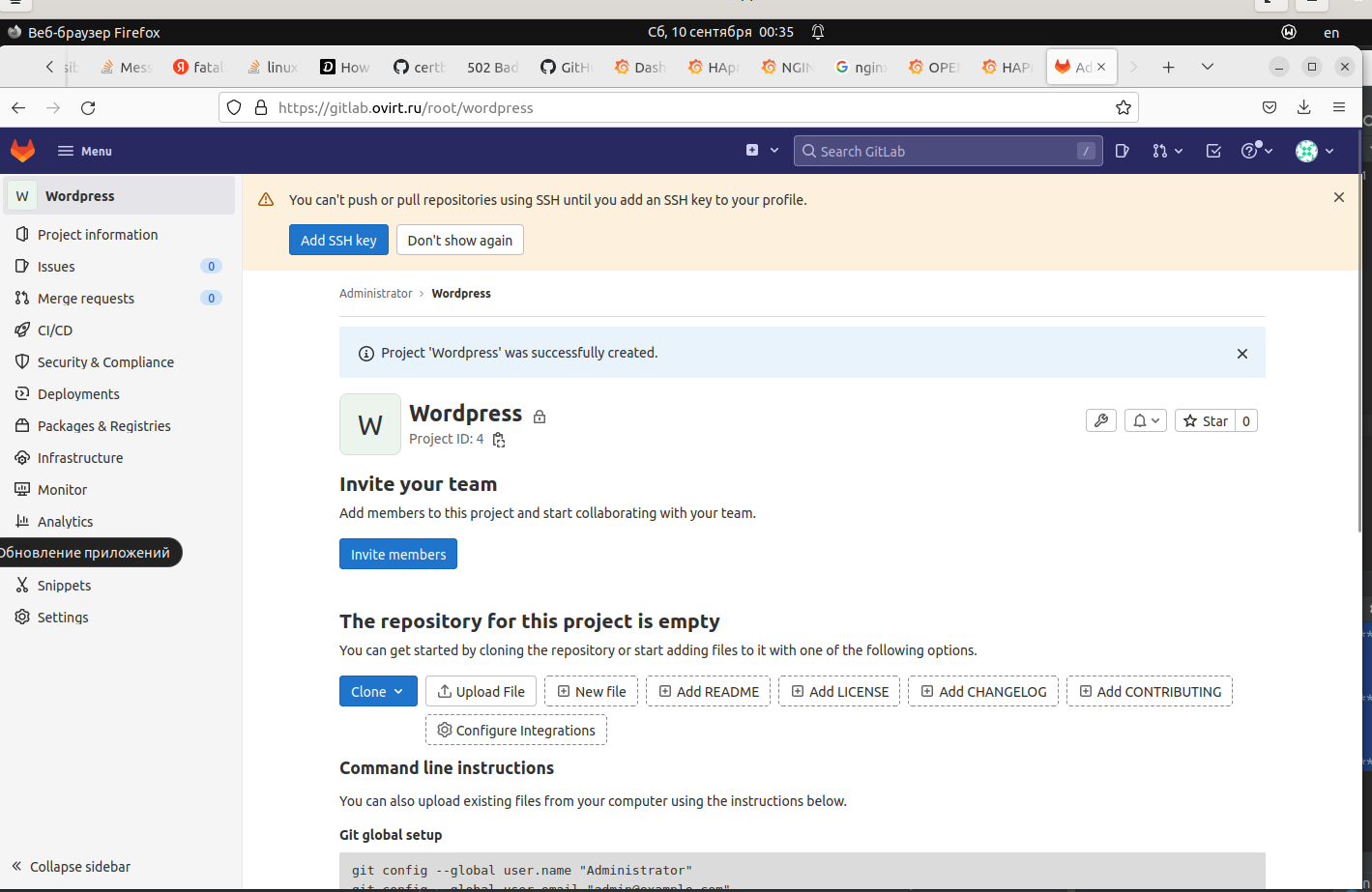

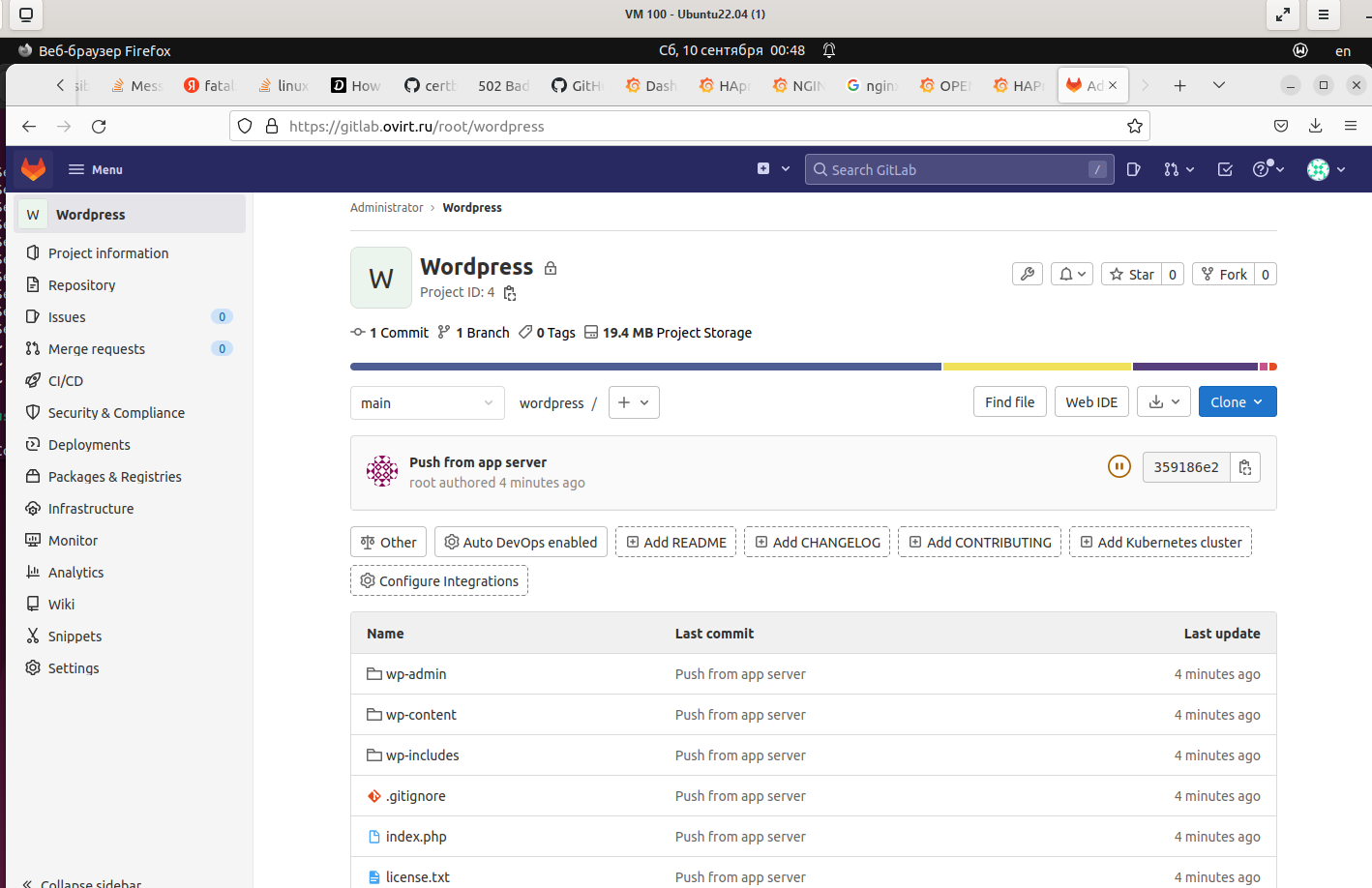

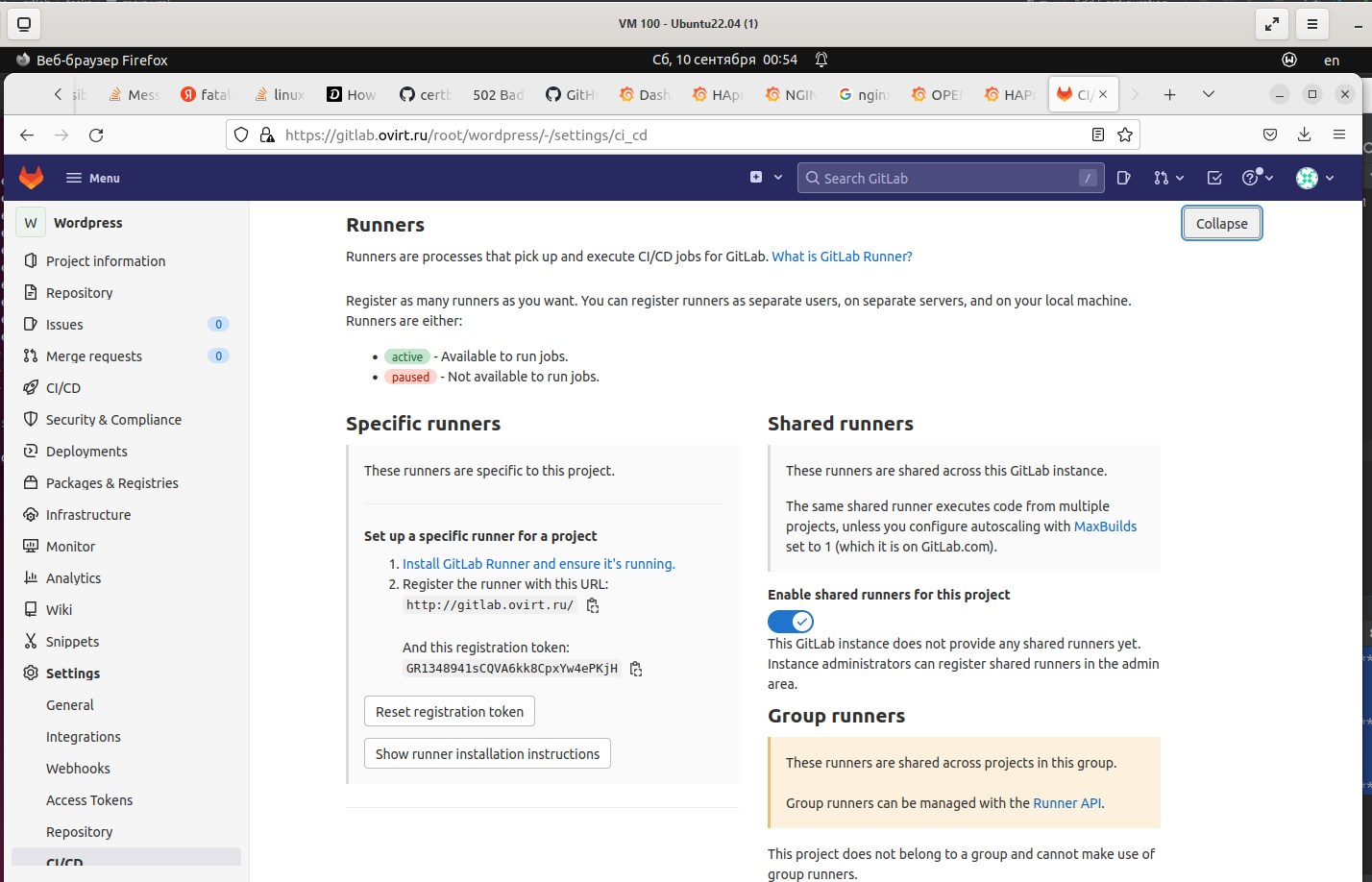

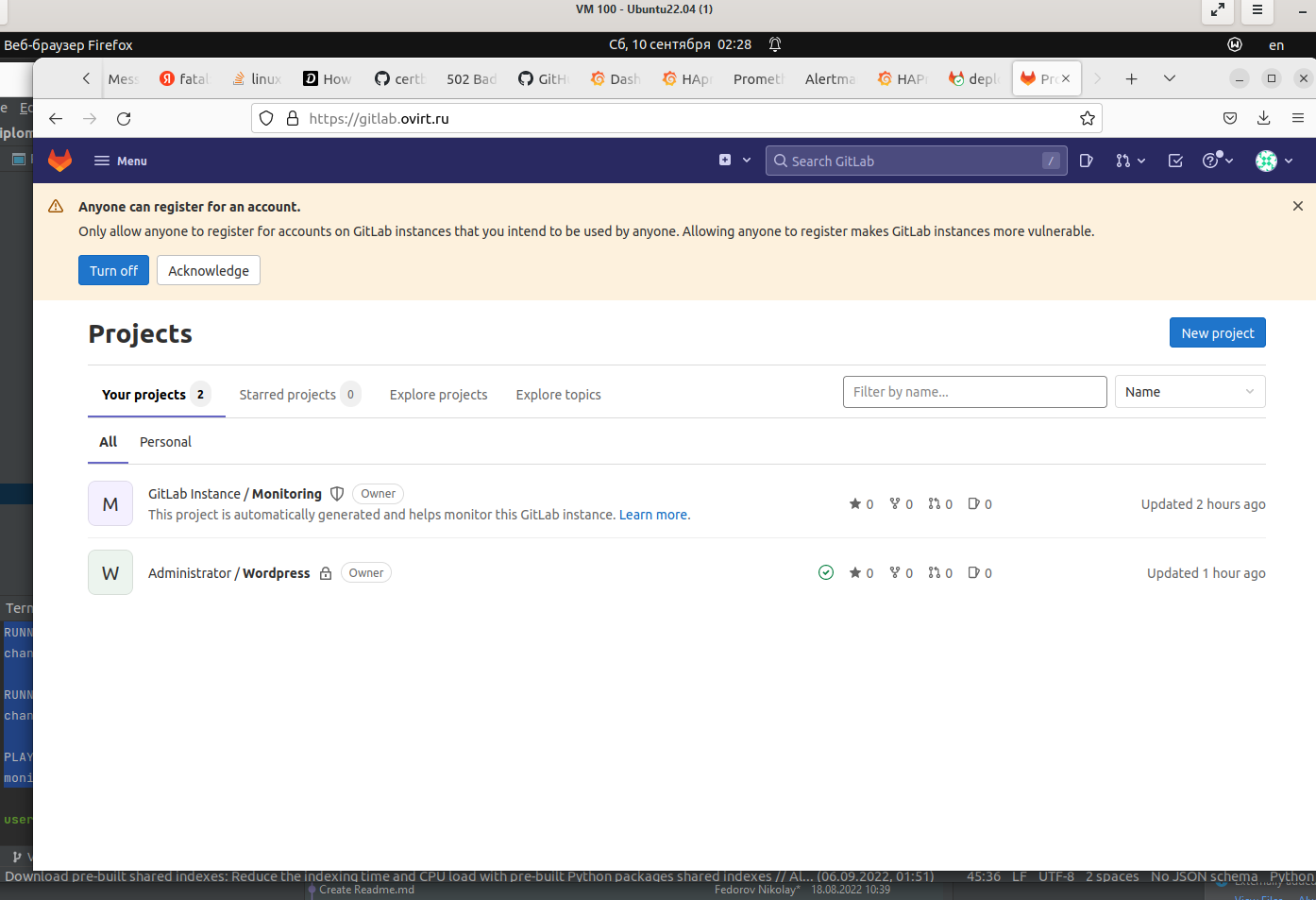

- Deploy Gitlab CE and Gitlab Runner.

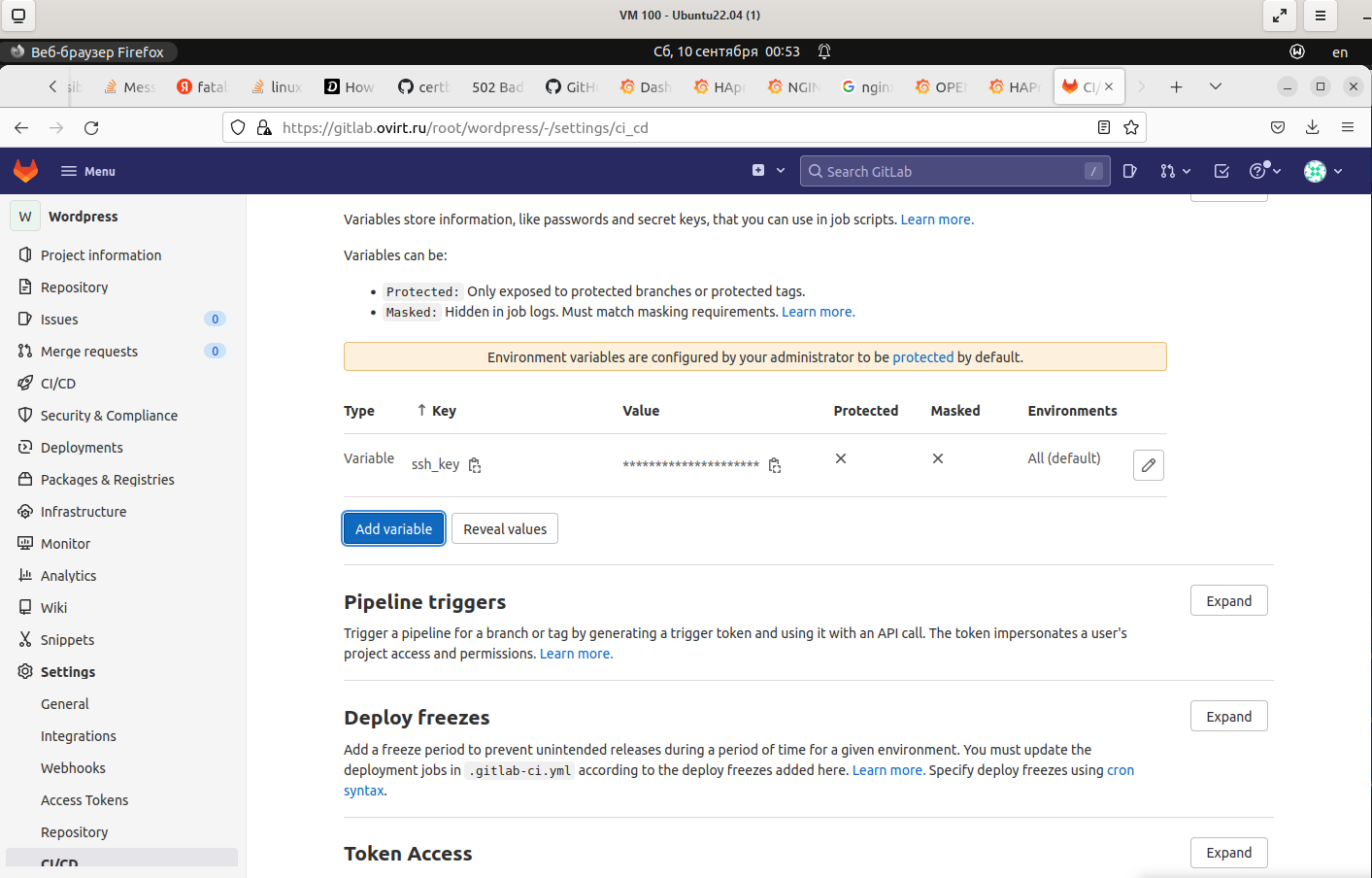

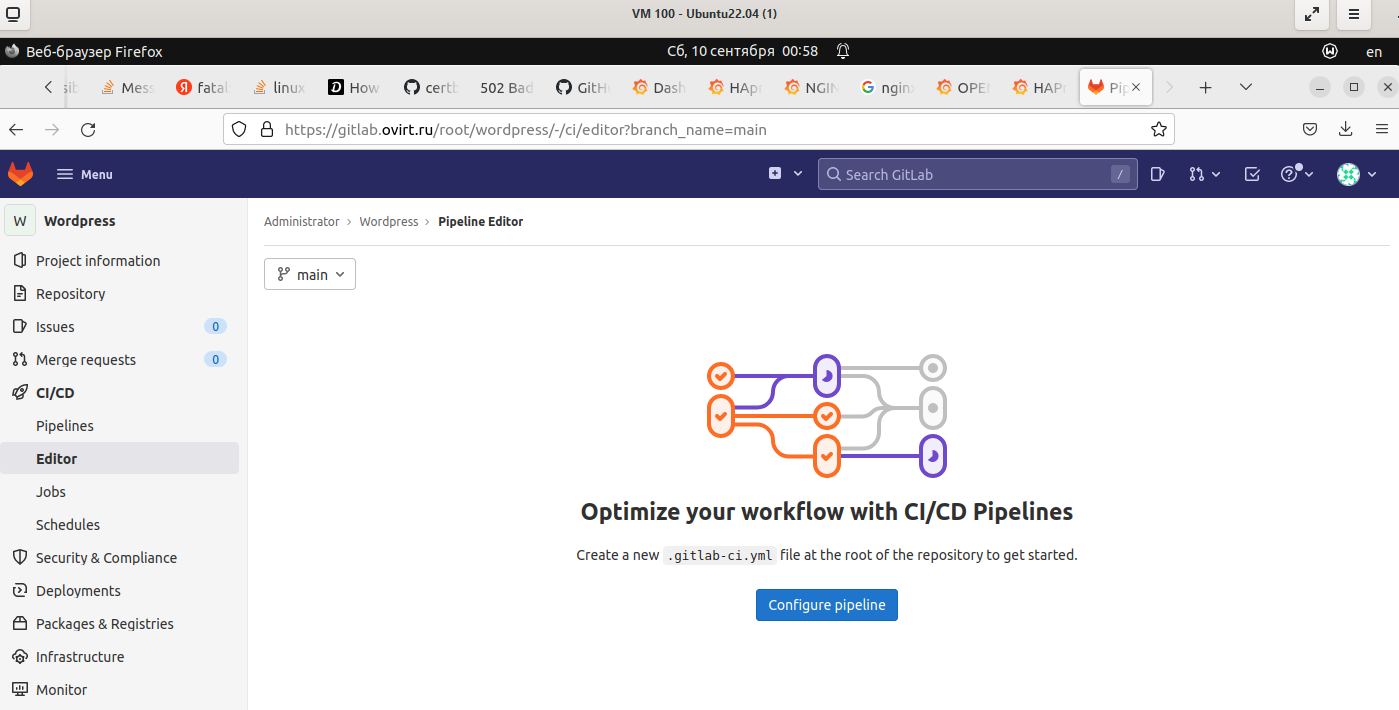

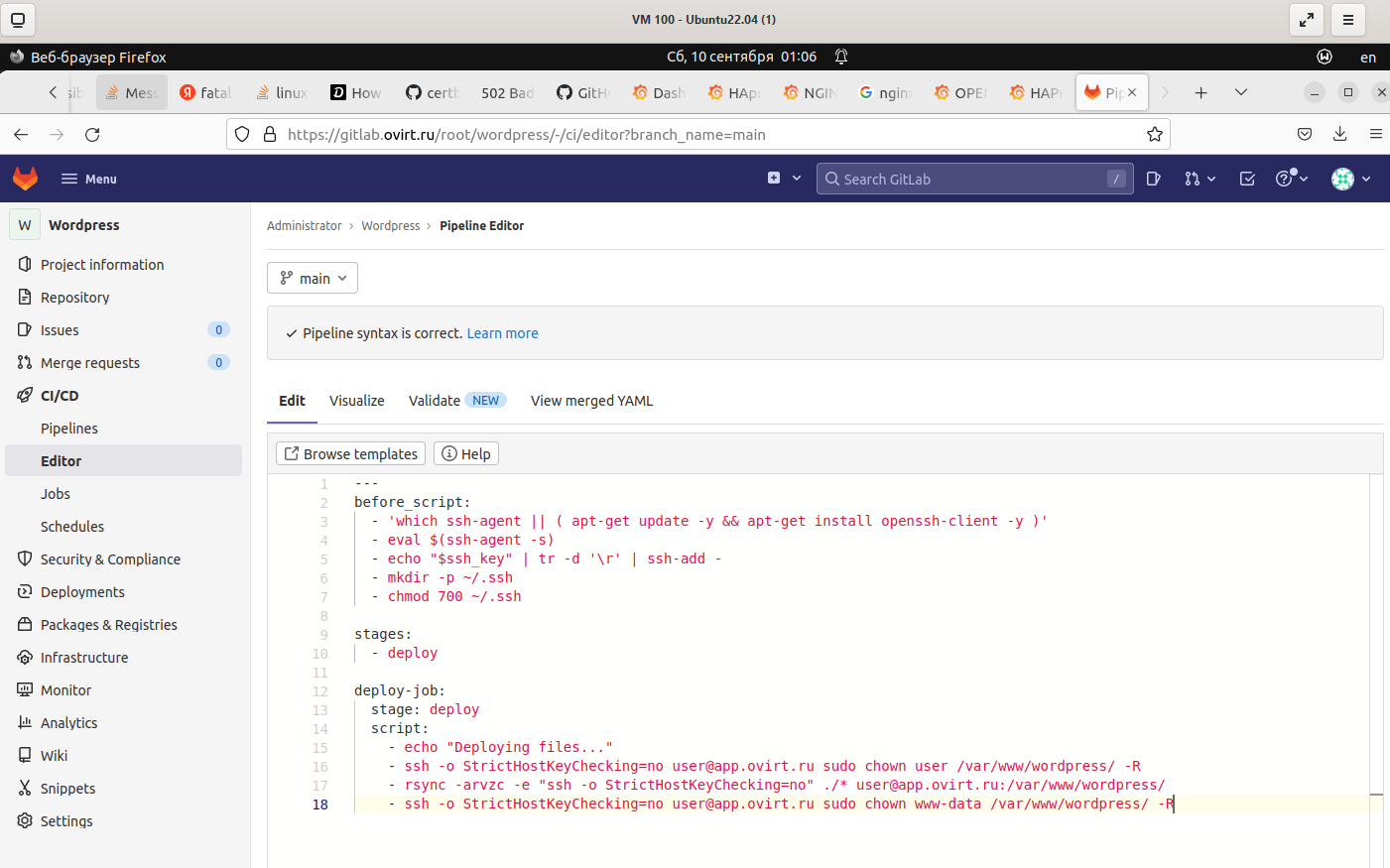

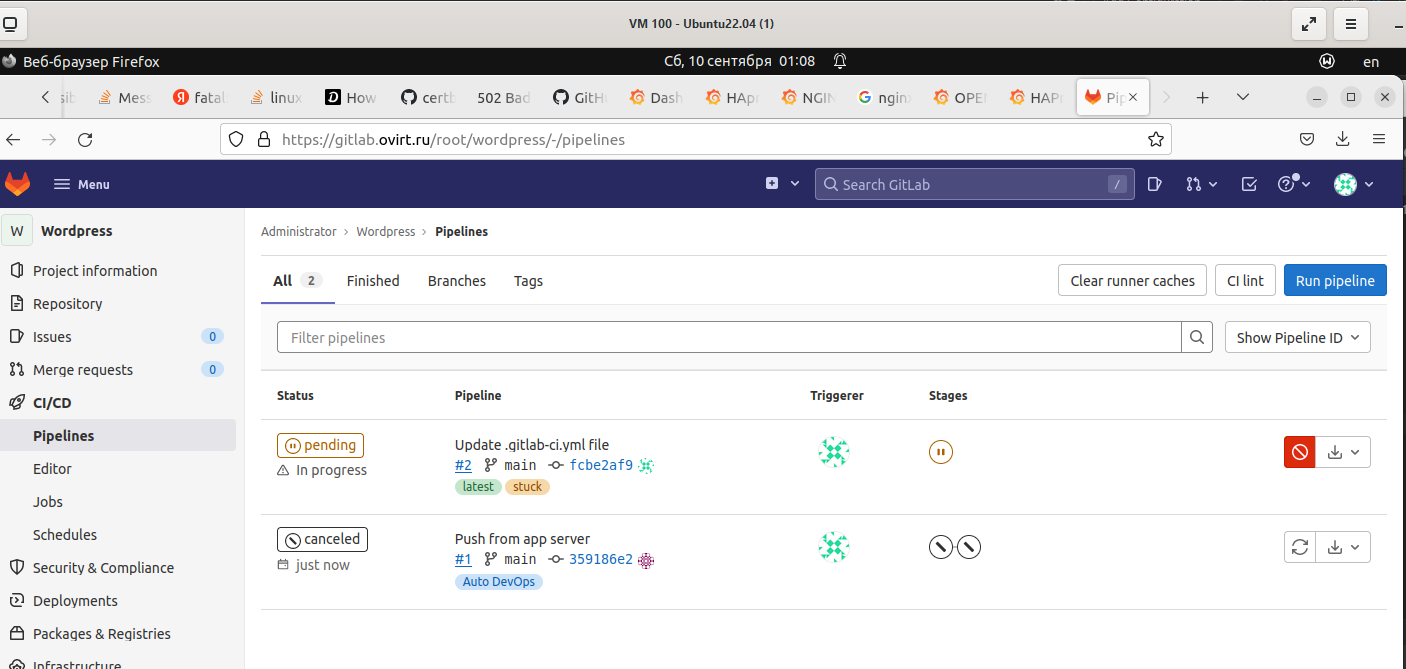

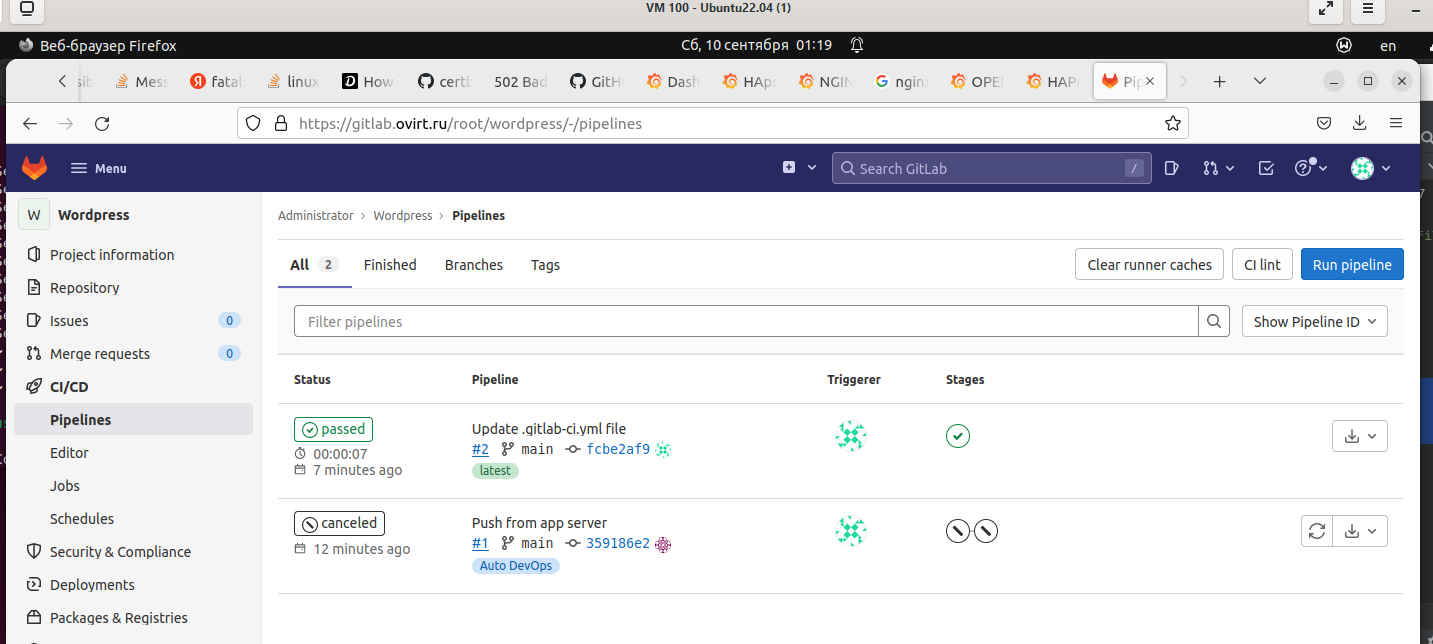

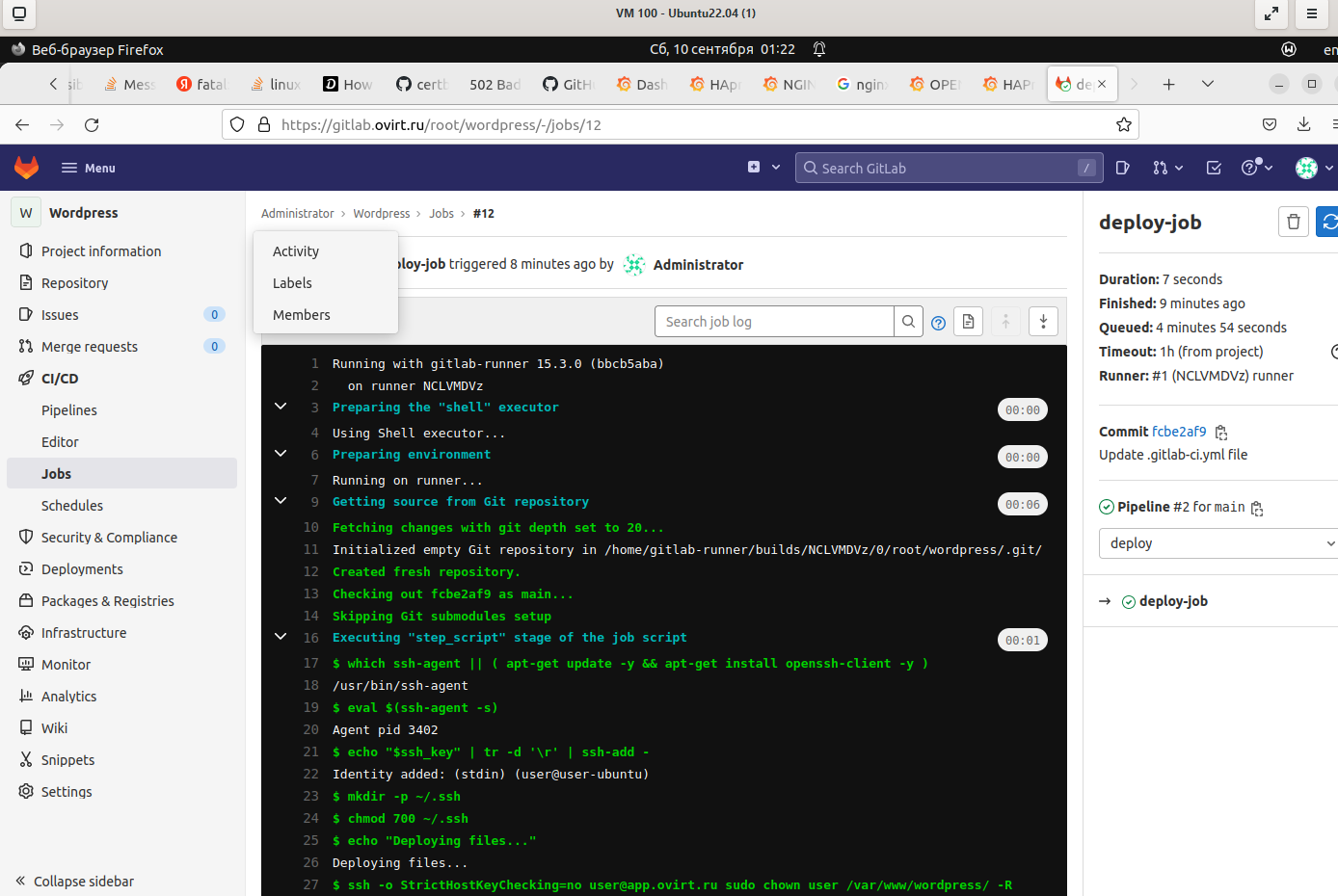

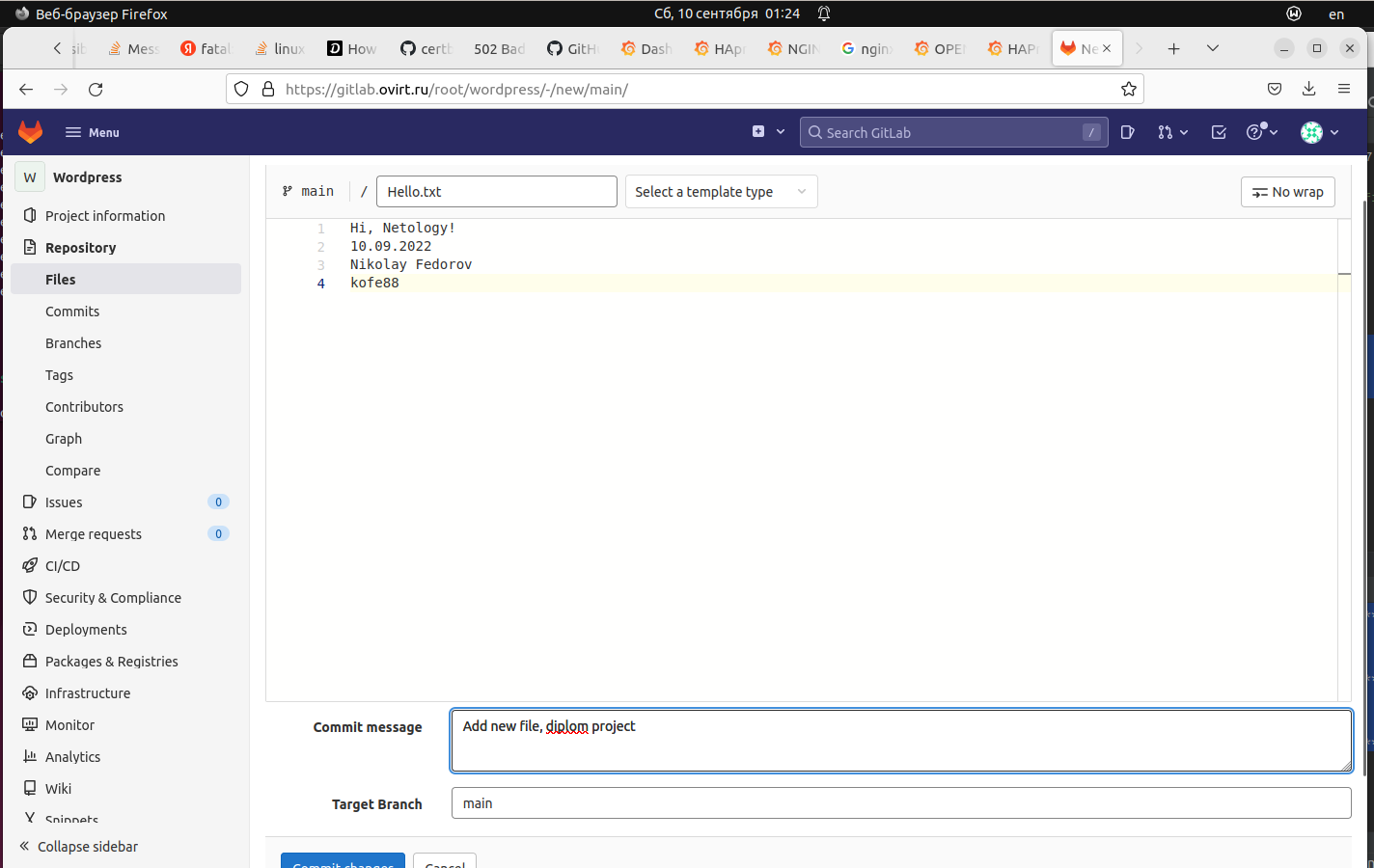

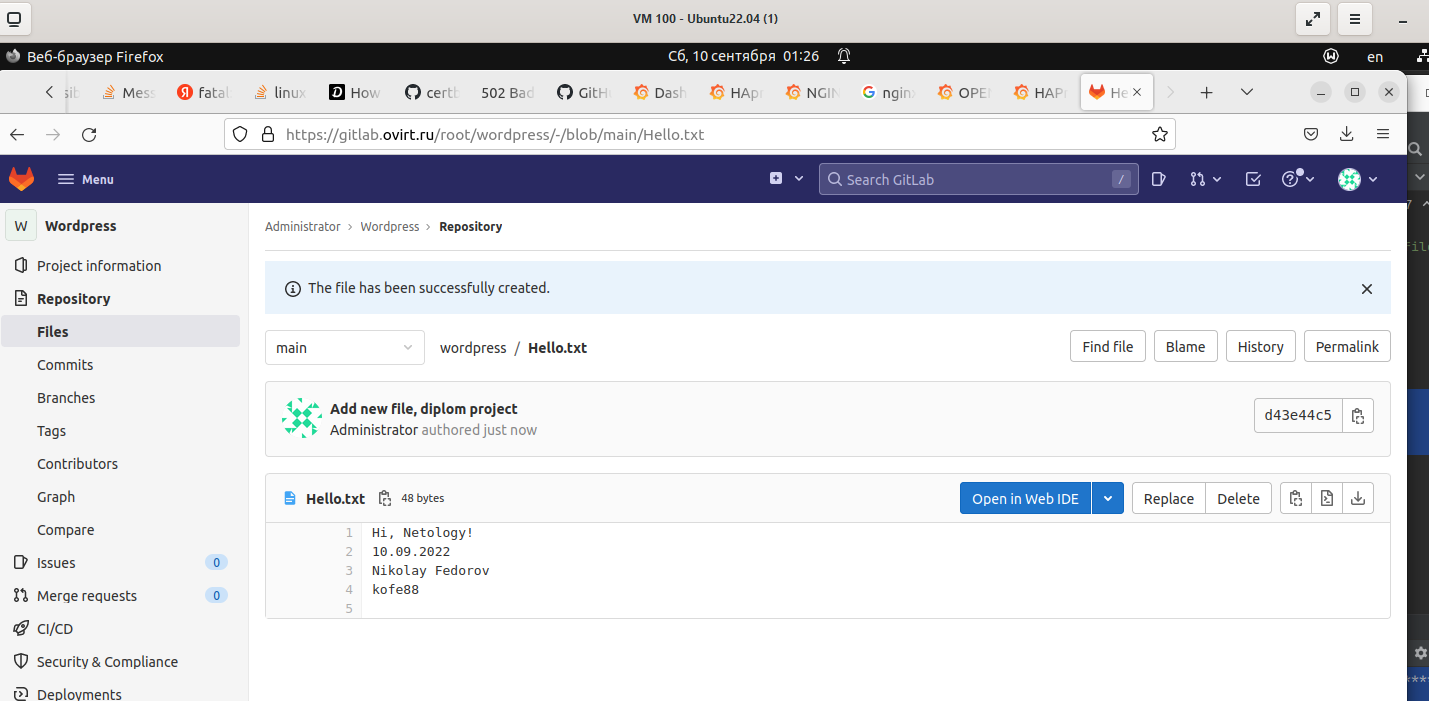

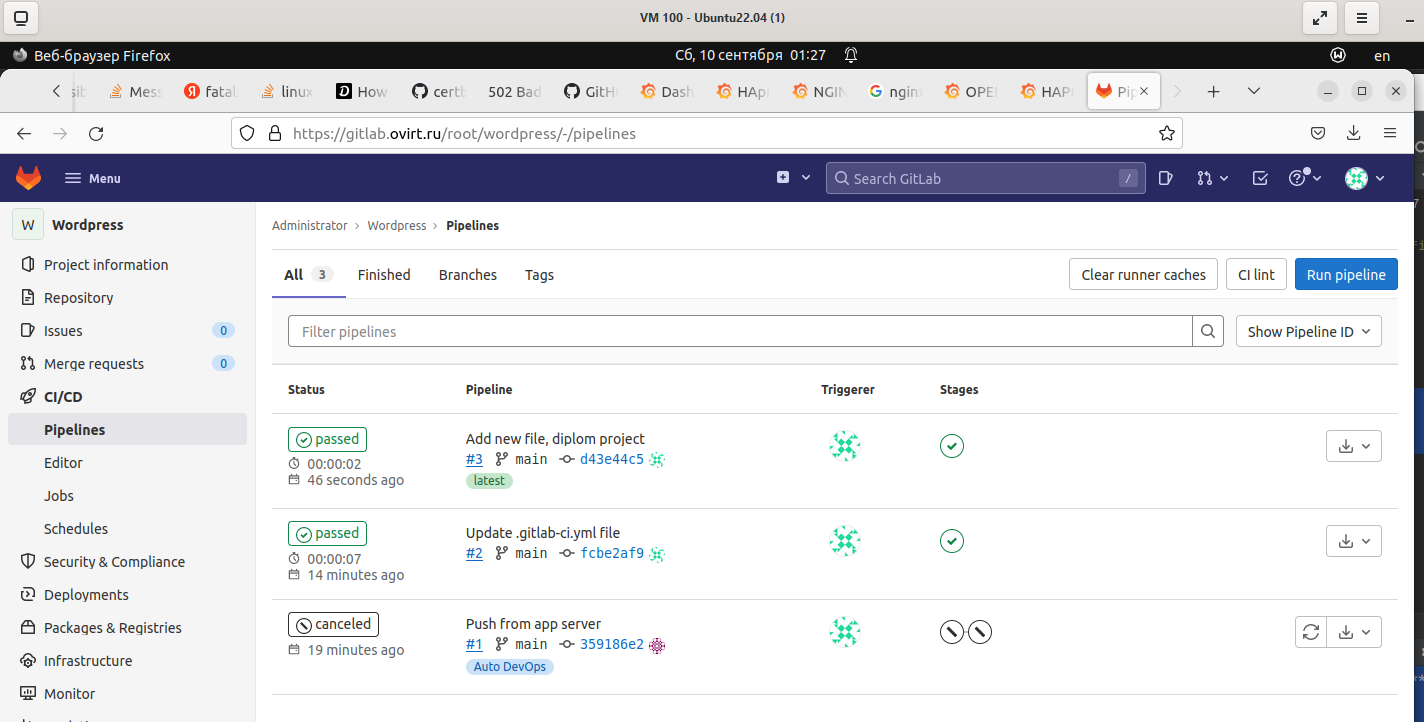

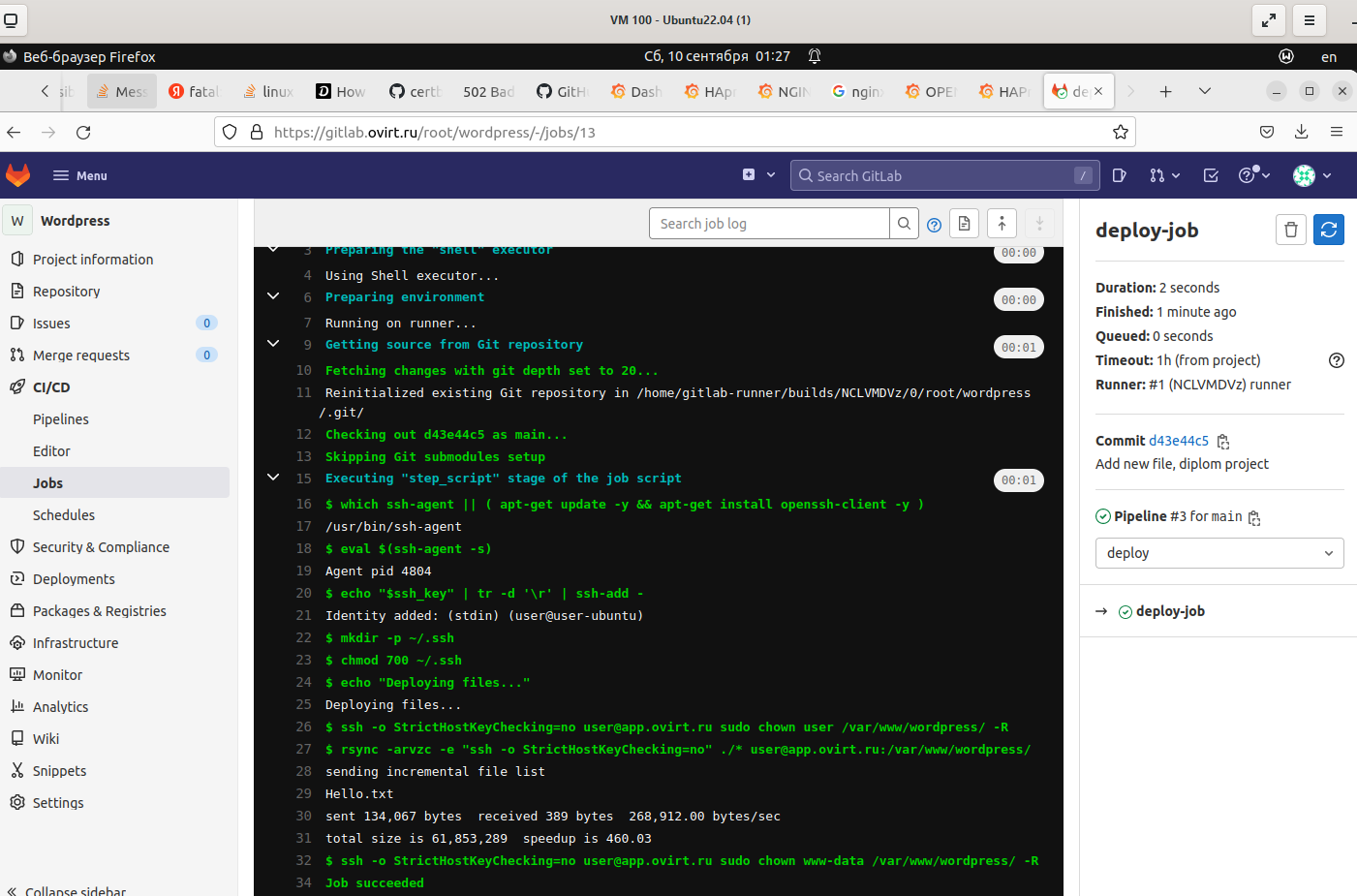

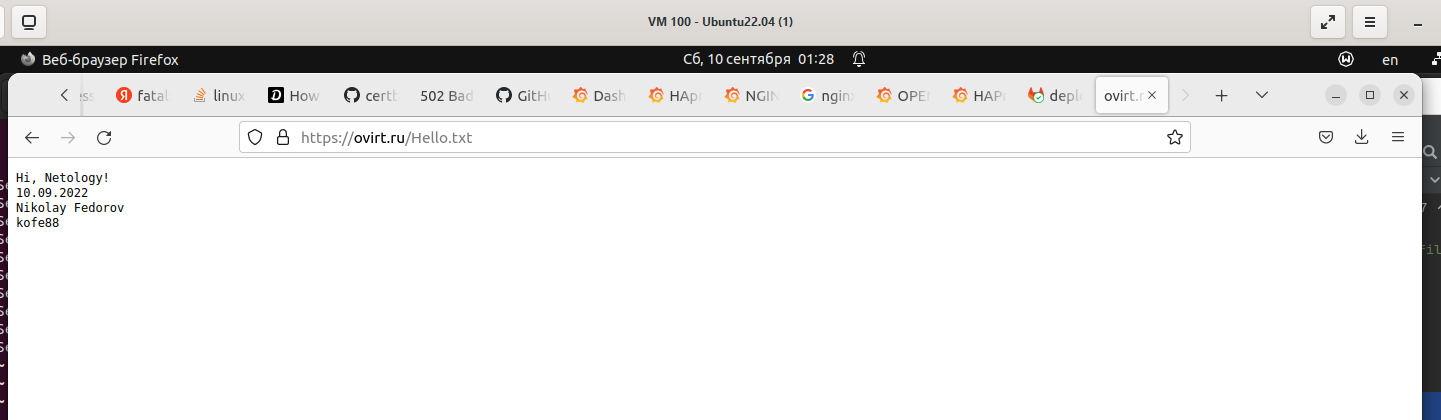

- Set up CI/CD for automatic deployment of the application.

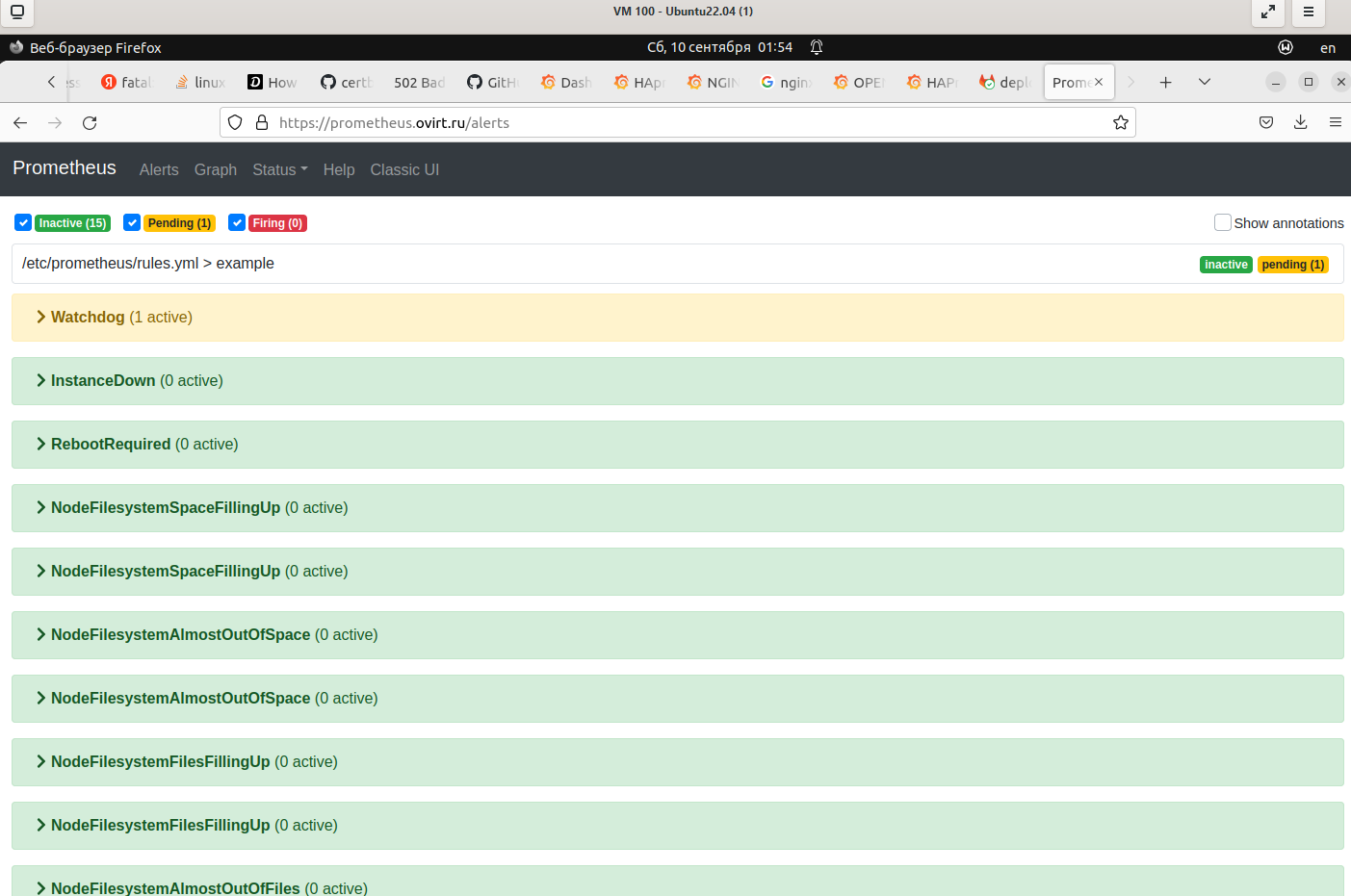

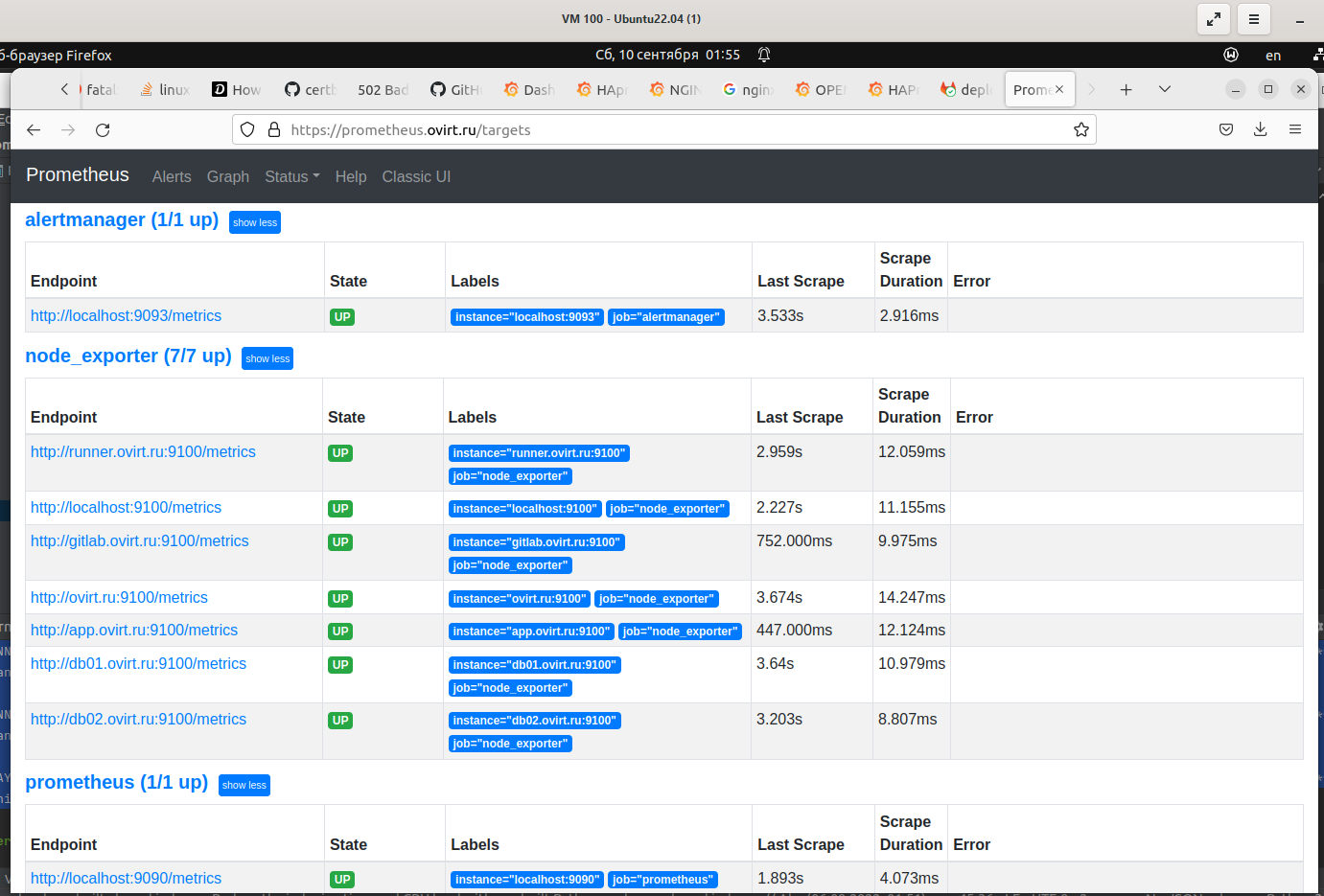

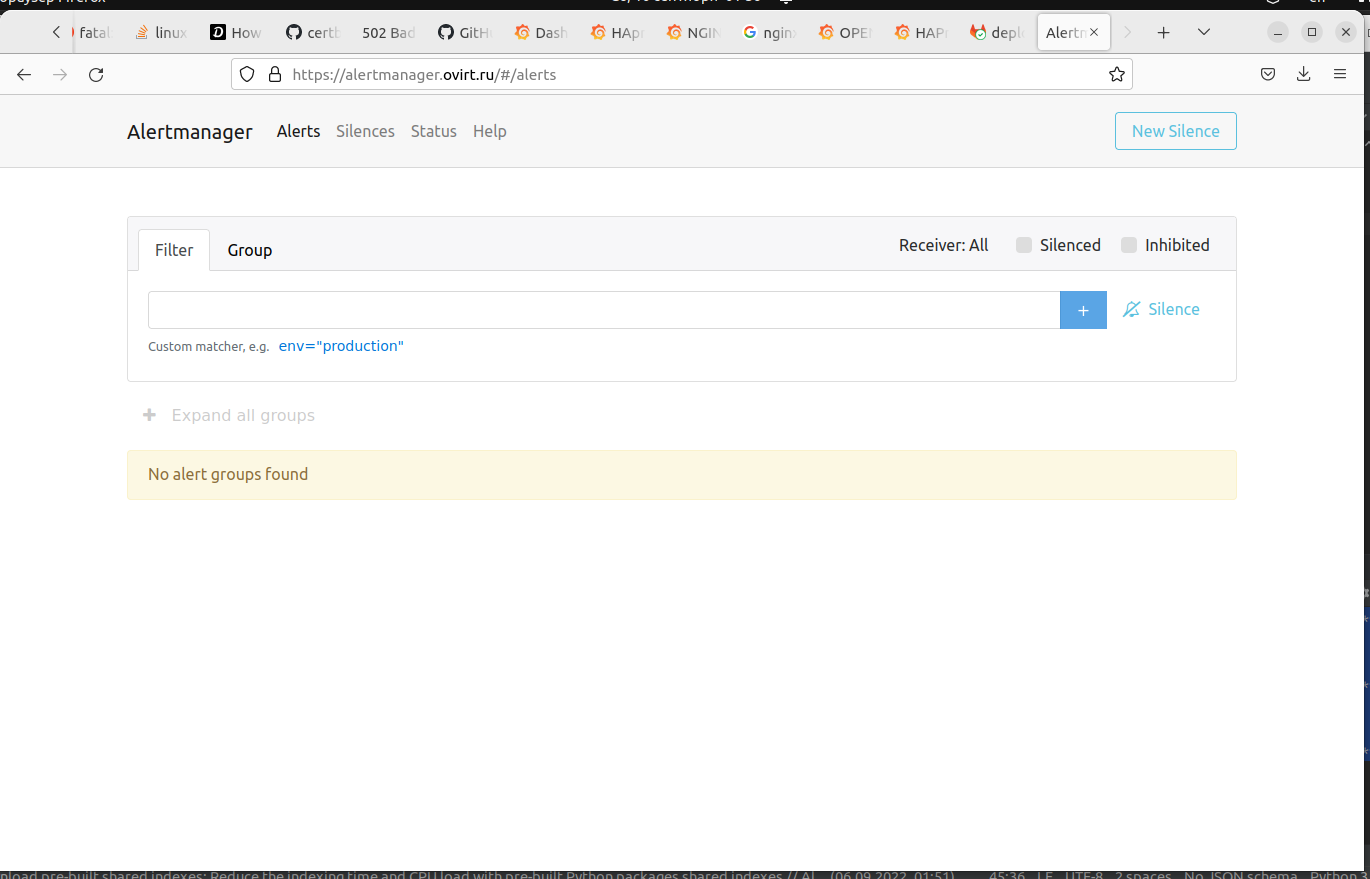

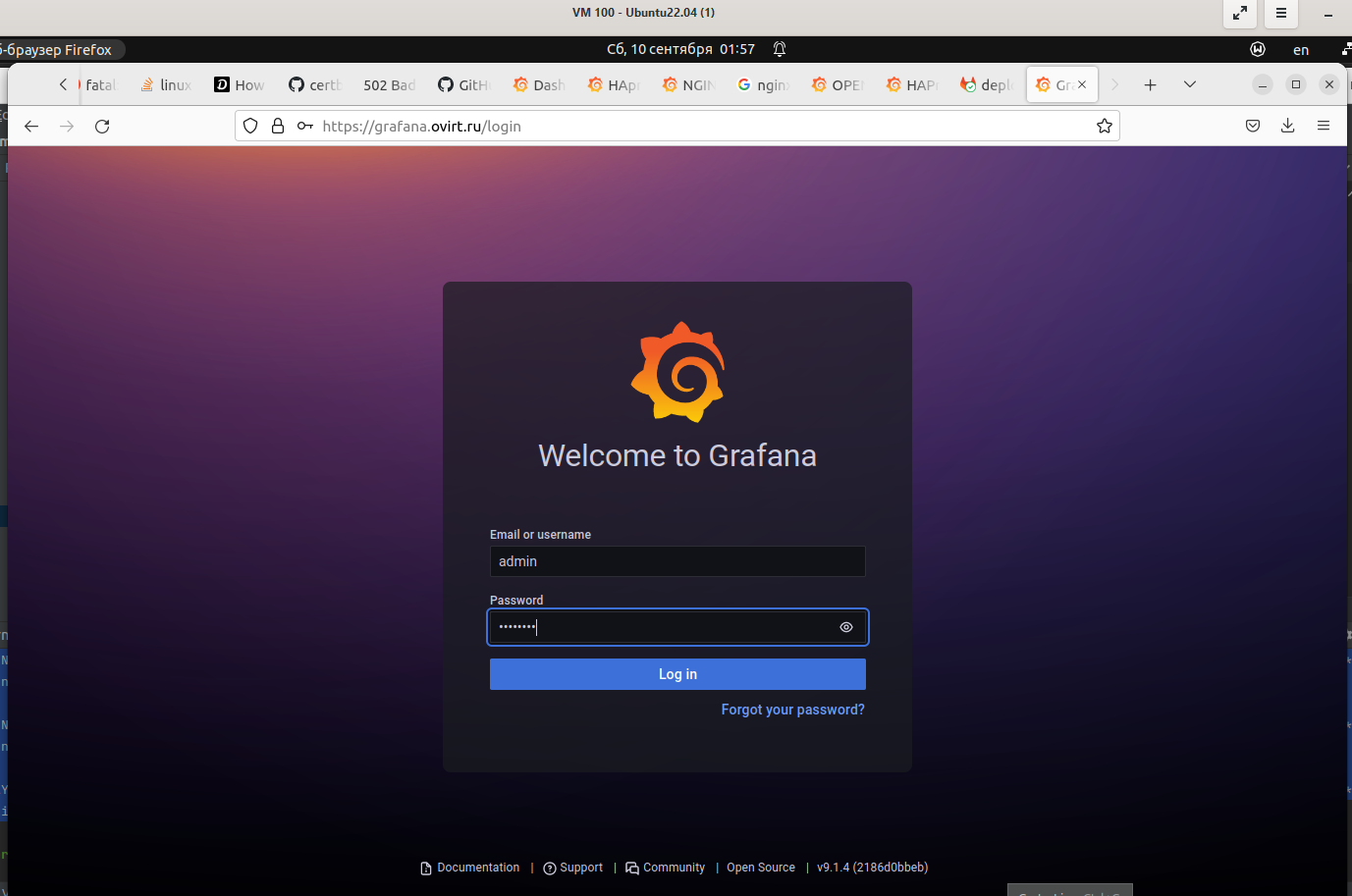

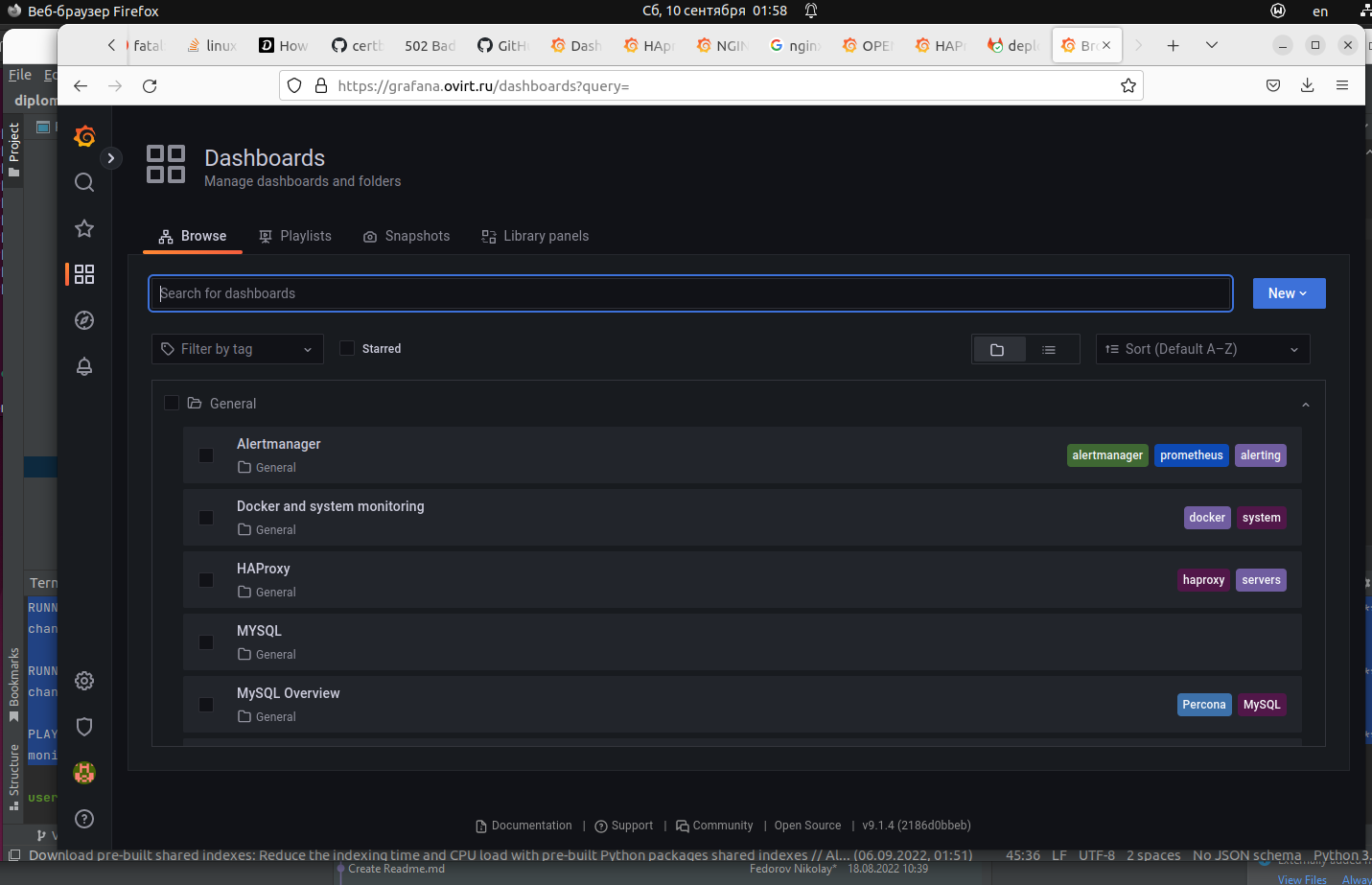

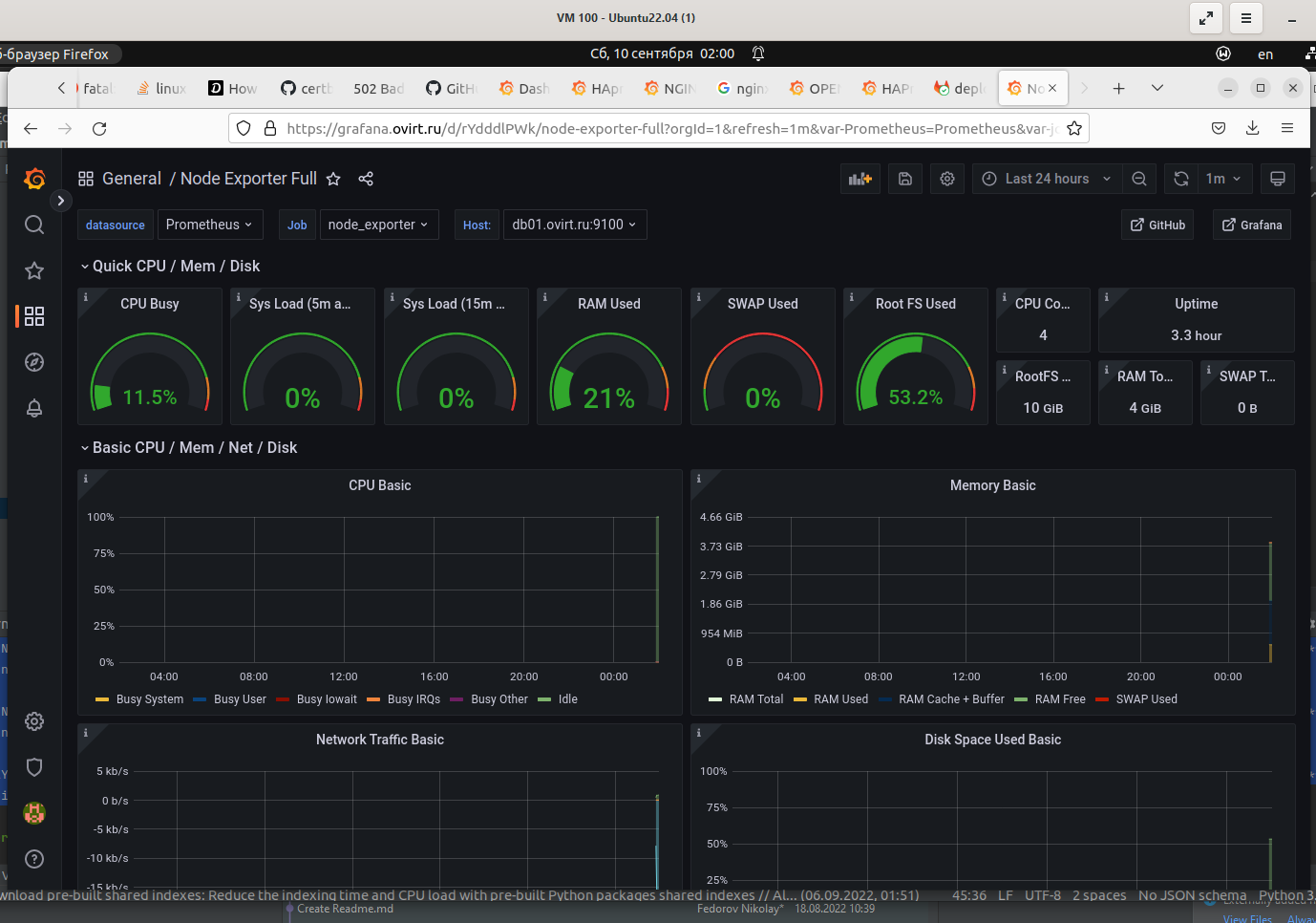

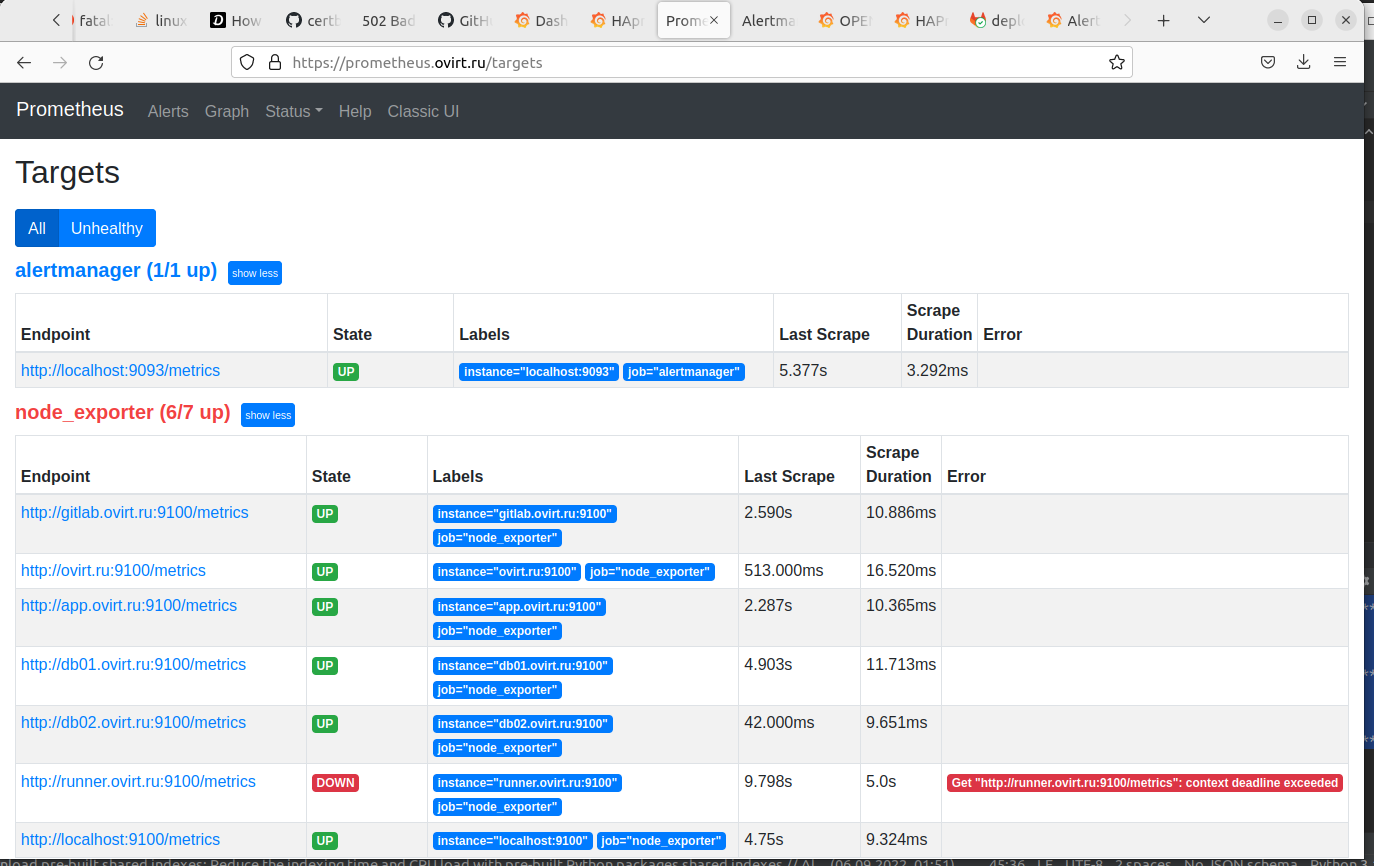

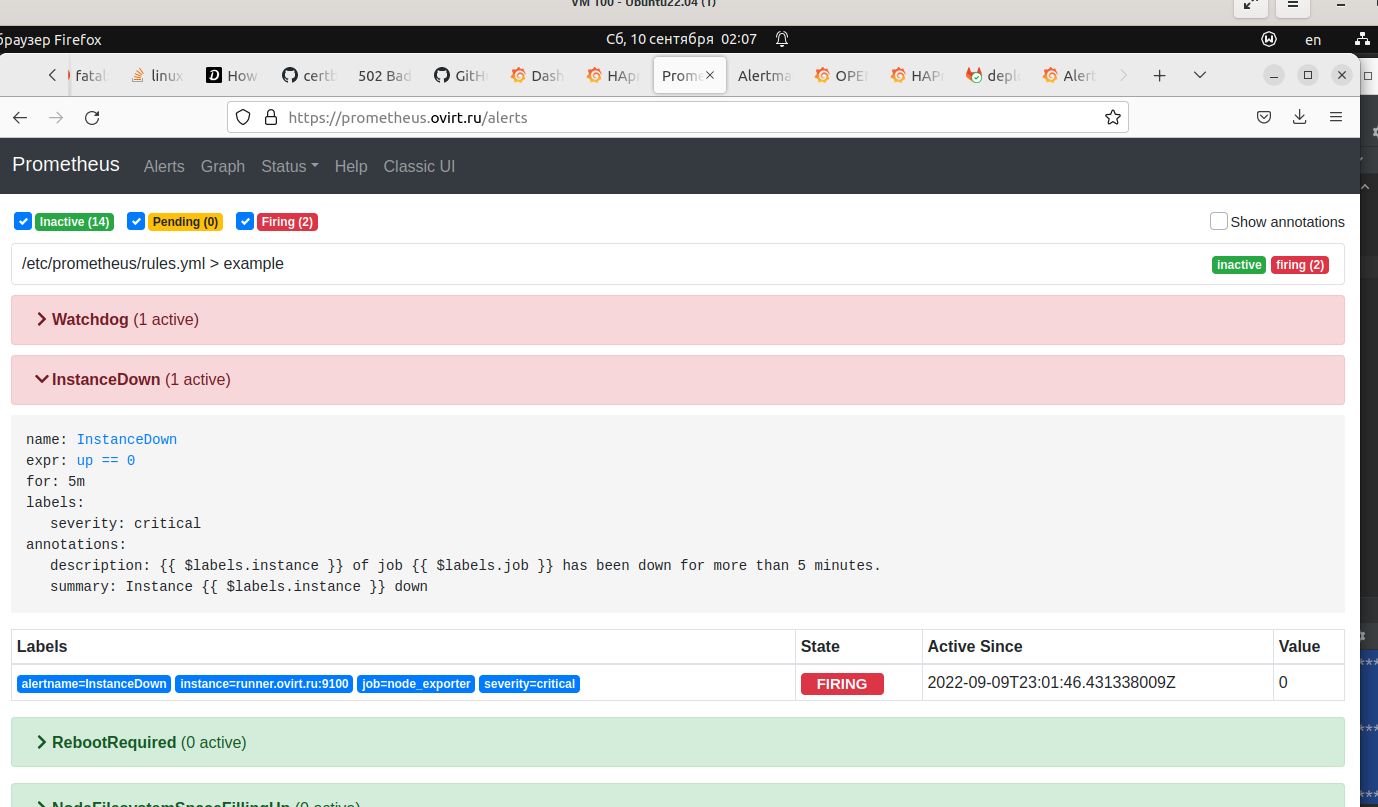

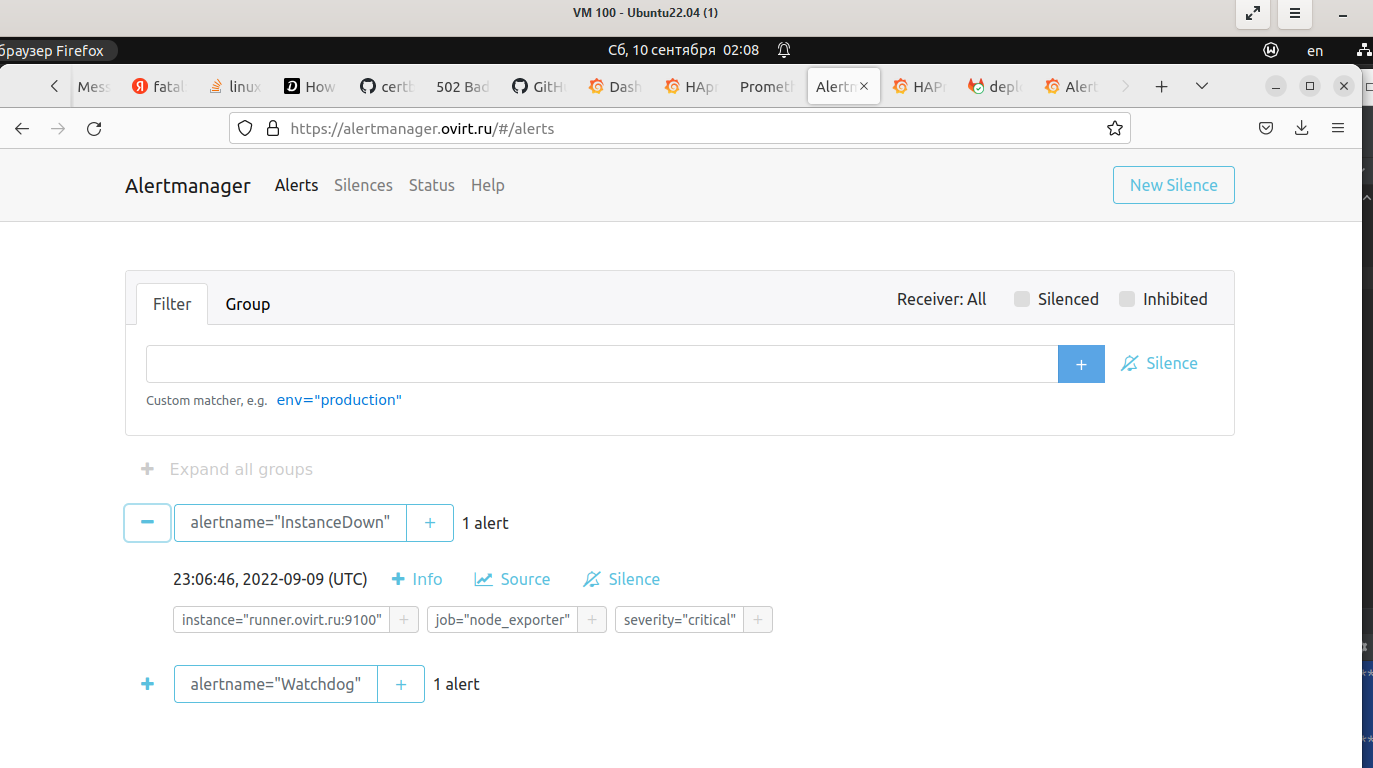

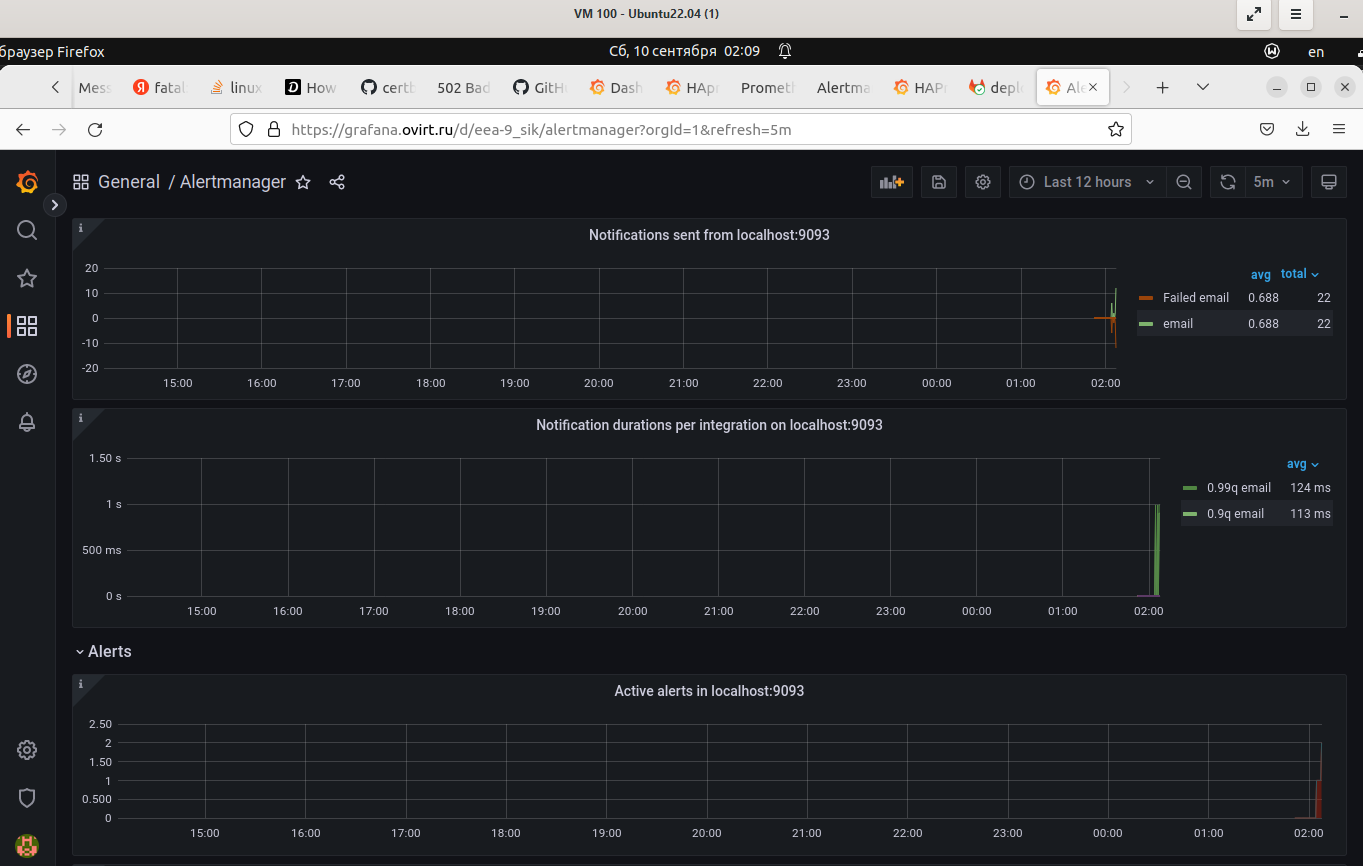

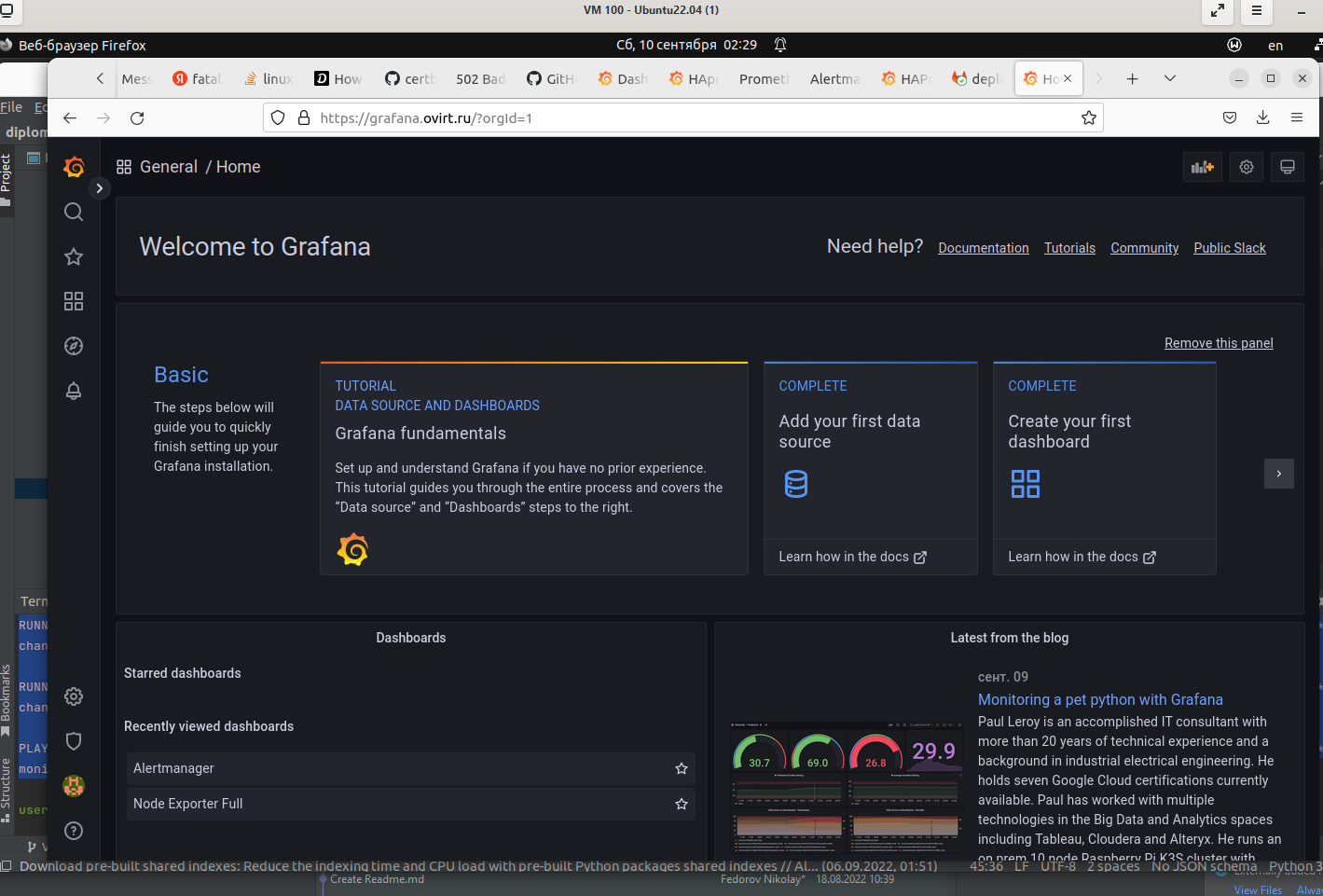

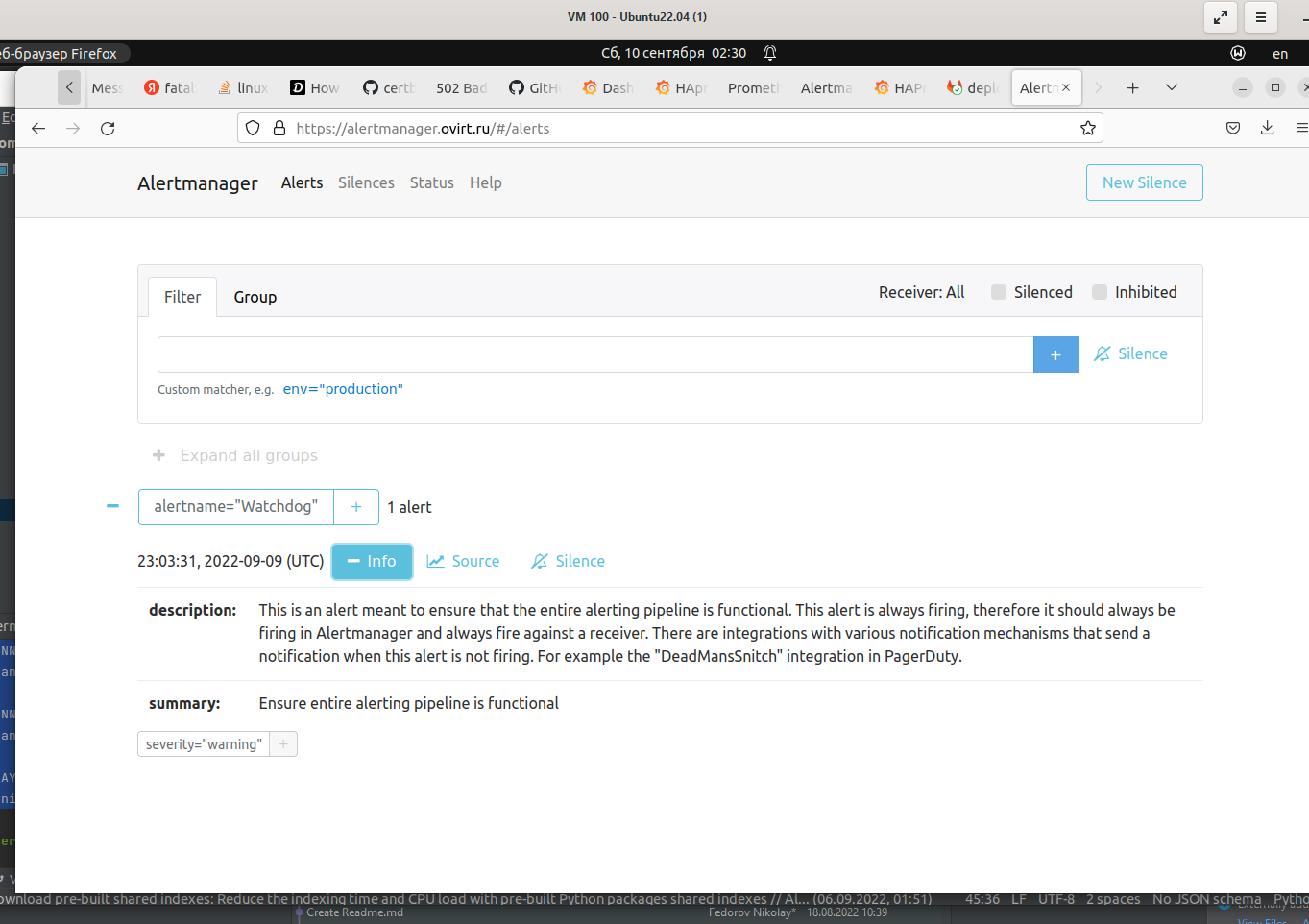

- Set up infrastructure monitoring with the Prometheus, Alert Manager and Grafana stack.

Any domain name of your choice in any domain zone will do.

NOTE: The domain you.domain is used below as an example; replace it with your own domain.

Recommended registrars:

Goal:

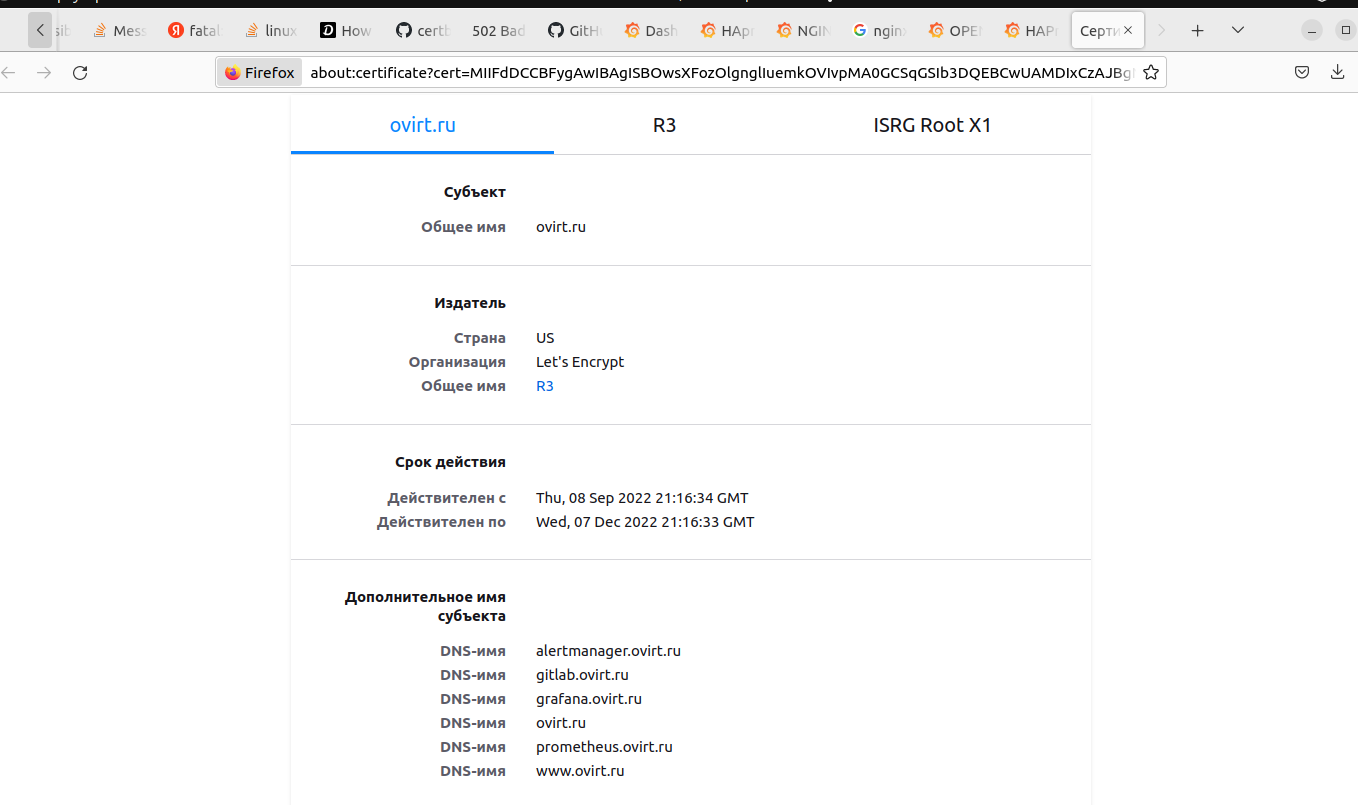

- Be able to issue TLS certificates for the web server.

Expected results:

- You have access to your account on the registrar's website.

- You have registered a domain and can manage it (edit DNS records within this domain).

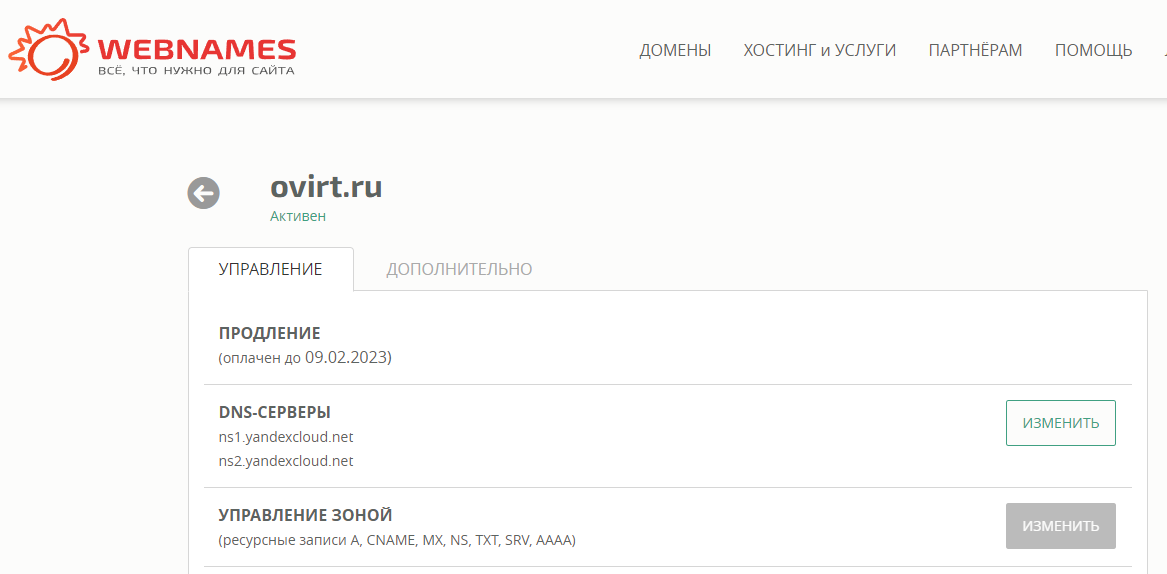

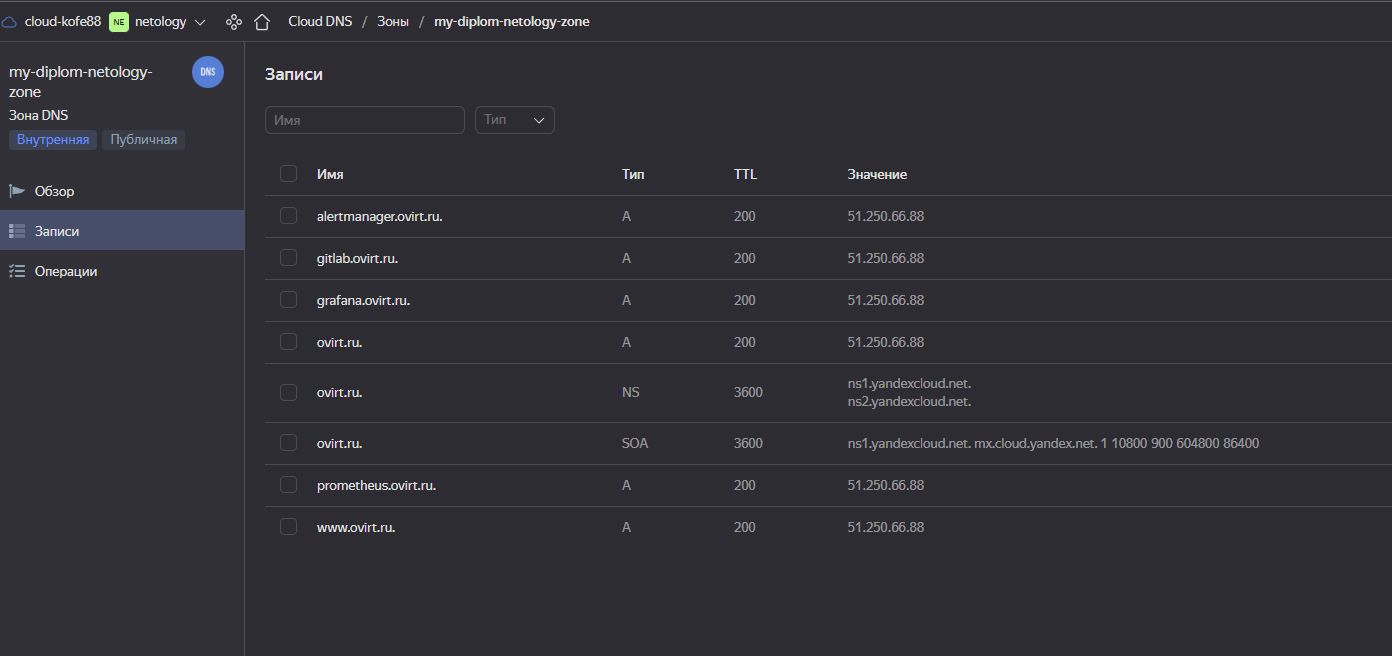

I have the name ovirt.ru registered with the registrar webnames.ru.

I delegated its DNS to ns1.yandexcloud.net and ns2.yandexcloud.net, because I will use the DNS service from YC.

    resource "yandex_dns_zone" "diplom" {
      name        = "my-diplom-netology-zone"
      description = "Diplom Netology public zone"

      labels = {
        label1 = "diplom-public"
      }

      zone   = "ovirt.ru."
      public = true

      depends_on = [
        yandex_vpc_subnet.net-101, yandex_vpc_subnet.net-102
      ]
    }

    resource "yandex_dns_recordset" "def" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "@.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

    resource "yandex_dns_recordset" "gitlab" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "gitlab.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

    resource "yandex_dns_recordset" "alertmanager" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "alertmanager.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

    resource "yandex_dns_recordset" "grafana" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "grafana.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

    resource "yandex_dns_recordset" "prometheus" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "prometheus.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

    resource "yandex_dns_recordset" "www" {
      zone_id = yandex_dns_zone.diplom.id
      name    = "www.ovirt.ru."
      type    = "A"
      ttl     = 200
      data    = [yandex_vpc_address.addr.external_ipv4_address[0].address]
    }

I will also reserve a static IP from YC automatically.

    resource "yandex_vpc_address" "addr" {
      name = "ip-${terraform.workspace}"

      external_ipv4_address {
        zone_id = "ru-central1-a"
      }
    }

To begin with, the infrastructure in YC has to be prepared with Terraform.

Implementation notes:

- The coupon budget is limited, so keep that in mind when designing the infrastructure and using resources;

- Use the latest stable version of Terraform.

Preliminary preparation:

- Create a service account that Terraform will later use to work with the infrastructure, with the necessary and sufficient permissions. Do not use superuser permissions.

- Prepare a backend for Terraform:

a. Recommended option: Terraform Cloud

b. Alternative option: an S3 bucket in the created YC account.

- Configure workspaces:

a. Recommended option: create two workspaces, stage and prod. If you choose this option, all subsequent steps must take into account that several workspaces exist.

b. Alternative option: use a single workspace named stage. Please do not use the workspace that Terraform creates by default (default).

- Create a VPC with subnets in different availability zones.

- Make sure you can now run `terraform destroy` and `terraform apply` without any additional manual steps.

- If Terraform Cloud is used as the backend, make sure that changes are applied successfully through the Terraform Cloud web interface.
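For the preparation steps above, the provider setup could look roughly like this. It is a minimal sketch, not the author's actual configuration: the variable names `yc_cloud_id` and `yc_folder_id` are hypothetical, and the token is deliberately left out because the commands later in this document export `YC_TOKEN`, which the Yandex provider reads from the environment. The provider source `yandex-cloud/yandex` is the one that appears in the `terraform init` output further down.

```hcl
terraform {
  required_providers {
    yandex = {
      source = "yandex-cloud/yandex"
    }
  }
}

# Hypothetical variables: the real IDs are not shown in this document.
variable "yc_cloud_id" {
  type        = string
  description = "Yandex Cloud ID (assumed variable name)"
}

variable "yc_folder_id" {
  type        = string
  description = "Yandex Cloud folder ID (assumed variable name)"
}

provider "yandex" {
  # No token here: the provider picks it up from the YC_TOKEN environment variable.
  cloud_id  = var.yc_cloud_id
  folder_id = var.yc_folder_id
  zone      = "ru-central1-a"
}
```

Keeping the token out of the configuration means the files can be committed without leaking credentials.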

Goal:

- Apply the IaC approach everywhere when organizing (operating) the infrastructure.

- Be able to create (and delete) virtual machines and networks quickly, in order to save money on your YandexCloud account.

Expected results:

- Terraform is configured, and the infrastructure can be created with Terraform without any additional manual steps.

- The resulting infrastructure configuration is preliminary, so it may change in the course of the assignment.

I used the service account from the lab assignments, my-netology.

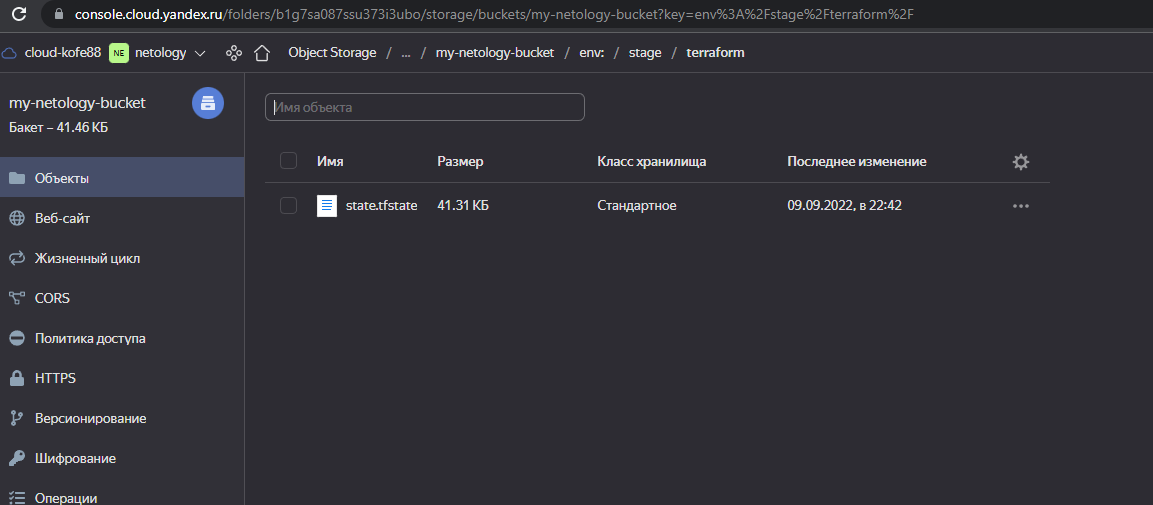

I prepare the backend with a separate Terraform configuration, s3, and only then use it in the main stage configuration, because I could not both create the bucket and use it as a backend within a single configuration.
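A rough reconstruction of what the s3 configuration might contain, pieced together from the resource names and attributes visible in the plan output below. It is a sketch under assumptions: looking the service account up by `name` is a guess (the plan only shows that a data source called `my-netology` is read), and the provider block is omitted.

```hcl
# Existing service account; lookup by name is an assumption.
data "yandex_iam_service_account" "my-netology" {
  name = "my-netology"
}

# Static key that Object Storage (and later the s3 backend) authenticates with.
resource "yandex_iam_service_account_static_access_key" "sa-static-key" {
  service_account_id = data.yandex_iam_service_account.my-netology.id
  description        = "static access key for object storage"
}

# Bucket that will hold the Terraform state of the stage configuration.
resource "yandex_storage_bucket" "state" {
  bucket        = "my-netology-bucket"
  access_key    = yandex_iam_service_account_static_access_key.sa-static-key.access_key
  secret_key    = yandex_iam_service_account_static_access_key.sa-static-key.secret_key
  force_destroy = true
}

output "access_key" {
  value     = yandex_iam_service_account_static_access_key.sa-static-key.access_key
  sensitive = true
}

output "secret_key" {
  value     = yandex_iam_service_account_static_access_key.sa-static-key.secret_key
  sensitive = true
}
```

The split into two configurations follows from how Terraform works: the backend is initialized before any resource is created, so a bucket cannot serve as the backend of the same configuration that creates it.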

I use a single workspace, stage.

The VPC has subnets in different availability zones, with routing configured:

    resource "yandex_vpc_network" "default" {
      name = "net-${terraform.workspace}"
    }

    resource "yandex_vpc_route_table" "route-table" {
      name       = "nat-instance-route"
      network_id = yandex_vpc_network.default.id

      static_route {
        destination_prefix = "0.0.0.0/0"
        next_hop_address   = var.lan_proxy_ip
      }
    }

    resource "yandex_vpc_subnet" "net-101" {
      name           = "subnet-${terraform.workspace}-101"
      zone           = "ru-central1-a"
      network_id     = yandex_vpc_network.default.id
      v4_cidr_blocks = ["192.168.101.0/24"]
      route_table_id = yandex_vpc_route_table.route-table.id
    }

    resource "yandex_vpc_subnet" "net-102" {
      name           = "subnet-${terraform.workspace}-102"
      zone           = "ru-central1-b"
      network_id     = yandex_vpc_network.default.id
      v4_cidr_blocks = ["192.168.102.0/24"]
      route_table_id = yandex_vpc_route_table.route-table.id
    }

The Terraform configurations are here; they may change along the way.
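The route table above sends all outbound traffic to `var.lan_proxy_ip`, whose declaration is not shown. A possible declaration is sketched below. The value `192.168.101.100` is taken from the plan output further down, where it appears both as the route's next hop and as the proxy instance's internal address; whether it is set as a default or passed in some other way is an assumption, and the validation rule is an optional addition.

```hcl
variable "lan_proxy_ip" {
  type        = string
  description = "Internal address of the proxy instance that acts as the NAT next hop"
  default     = "192.168.101.100"

  # Optional guard (an addition, not from the original configuration):
  # reject values that do not parse as an IP address.
  validation {
    condition     = can(cidrhost("${var.lan_proxy_ip}/32", 0))
    error_message = "The lan_proxy_ip value must be a valid IP address."
  }
}
```

The address has to fall inside the subnet where the proxy lives (here `192.168.101.0/24`) and match the address assigned to the proxy's network interface, otherwise the private instances lose outbound connectivity.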

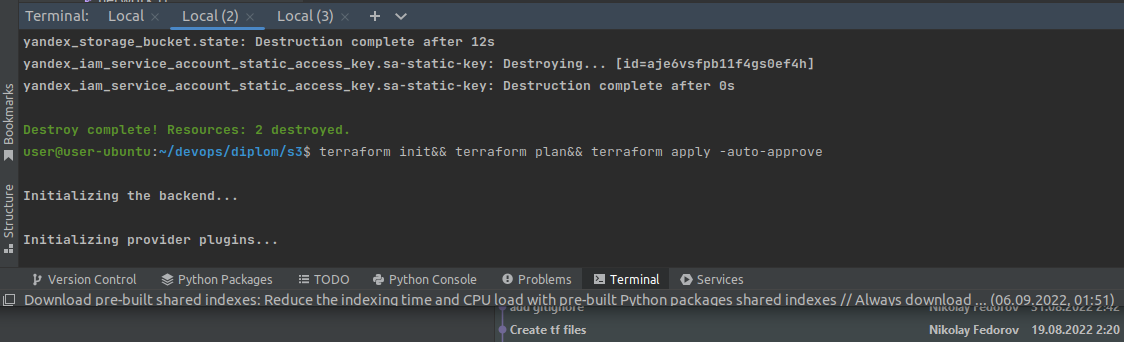

First, from the s3 directory, to create the bucket in YC:

    export YC_TOKEN=$(yc config get token)
    terraform init
    terraform plan
    terraform apply --auto-approve

Terraform output:

user@user-ubuntu:~/devops/diplom/s3$ terraform init&& terraform plan&& terraform apply -auto-approve

Initializing the backend...

Initializing provider plugins...

- Reusing previous version of yandex-cloud/yandex from the dependency lock file

- Using previously-installed yandex-cloud/yandex v0.78.1

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

data.yandex_iam_service_account.my-netology: Reading...

data.yandex_iam_service_account.my-netology: Read complete after 0s [id=ajesg66dg5r1ahte7mqd]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# yandex_iam_service_account_static_access_key.sa-static-key will be created

+ resource "yandex_iam_service_account_static_access_key" "sa-static-key" {

+ access_key = (known after apply)

+ created_at = (known after apply)

+ description = "static access key for object storage"

+ encrypted_secret_key = (known after apply)

+ id = (known after apply)

+ key_fingerprint = (known after apply)

+ secret_key = (sensitive value)

+ service_account_id = "ajesg66dg5r1ahte7mqd"

}

# yandex_storage_bucket.state will be created

+ resource "yandex_storage_bucket" "state" {

+ access_key = (known after apply)

+ acl = "private"

+ bucket = "my-netology-bucket"

+ bucket_domain_name = (known after apply)

+ default_storage_class = (known after apply)

+ folder_id = (known after apply)

+ force_destroy = true

+ id = (known after apply)

+ secret_key = (sensitive value)

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

+ anonymous_access_flags {

+ list = (known after apply)

+ read = (known after apply)

}

+ versioning {

+ enabled = (known after apply)

}

}

Plan: 2 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ access_key = (sensitive value)

+ secret_key = (sensitive value)

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

data.yandex_iam_service_account.my-netology: Reading...

data.yandex_iam_service_account.my-netology: Read complete after 0s [id=ajesg66dg5r1ahte7mqd]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# yandex_iam_service_account_static_access_key.sa-static-key will be created

+ resource "yandex_iam_service_account_static_access_key" "sa-static-key" {

+ access_key = (known after apply)

+ created_at = (known after apply)

+ description = "static access key for object storage"

+ encrypted_secret_key = (known after apply)

+ id = (known after apply)

+ key_fingerprint = (known after apply)

+ secret_key = (sensitive value)

+ service_account_id = "ajesg66dg5r1ahte7mqd"

}

# yandex_storage_bucket.state will be created

+ resource "yandex_storage_bucket" "state" {

+ access_key = (known after apply)

+ acl = "private"

+ bucket = "my-netology-bucket"

+ bucket_domain_name = (known after apply)

+ default_storage_class = (known after apply)

+ folder_id = (known after apply)

+ force_destroy = true

+ id = (known after apply)

+ secret_key = (sensitive value)

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

+ anonymous_access_flags {

+ list = (known after apply)

+ read = (known after apply)

}

+ versioning {

+ enabled = (known after apply)

}

}

Plan: 2 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ access_key = (sensitive value)

+ secret_key = (sensitive value)

yandex_iam_service_account_static_access_key.sa-static-key: Creating...

yandex_iam_service_account_static_access_key.sa-static-key: Creation complete after 0s [id=ajevatl8bfcpe66f6s6f]

yandex_storage_bucket.state: Creating...

yandex_storage_bucket.state: Still creating... [10s elapsed]

yandex_storage_bucket.state: Still creating... [20s elapsed]

yandex_storage_bucket.state: Still creating... [30s elapsed]

yandex_storage_bucket.state: Still creating... [40s elapsed]

yandex_storage_bucket.state: Still creating... [50s elapsed]

yandex_storage_bucket.state: Still creating... [1m0s elapsed]

yandex_storage_bucket.state: Creation complete after 1m1s [id=my-netology-bucket]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

Outputs:

access_key = <sensitive>

secret_key = <sensitive>

Take the access_key and secret_key values from the terraform.tfstate file and put them into main.tf in the stage directory.
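The backend block in stage/main.tf that receives these values might look like the sketch below. It assumes the `endpoint`-style syntax accepted by Terraform releases from the period of this run (newer releases use a different endpoint setting); the object `key` and the placeholder credentials are hypothetical, while the bucket name comes from the apply output above.

```hcl
terraform {
  backend "s3" {
    endpoint = "storage.yandexcloud.net"
    bucket   = "my-netology-bucket"
    region   = "ru-central1"
    key      = "stage/terraform.tfstate" # hypothetical object key

    # Values copied by hand from the s3 configuration's state file.
    access_key = "<access_key from s3/terraform.tfstate>"
    secret_key = "<secret_key from s3/terraform.tfstate>"

    # Yandex Object Storage is S3-compatible but is not AWS,
    # so the AWS-specific checks have to be switched off.
    skip_region_validation      = true
    skip_credentials_validation = true
  }
}
```

Backend blocks cannot reference variables or resources, which is why the keys end up written literally. Passing them with `terraform init -backend-config=...` is an alternative that keeps the secrets out of main.tf.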

Next, from the stage directory:

    export YC_TOKEN=$(yc config get token)
    terraform init
    terraform workspace new stage
    terraform init
    terraform plan
    terraform apply --auto-approve
    terraform output -json > output.json

Terraform output:

user@user-ubuntu:~/devops/diplom/stage$ terraform init -reconfigure&& terraform workspace new stage&& terraform init -reconfigure&& terraform plan&& terraform apply --auto-approve&& terraform output -json > output.json

Initializing the backend...

Successfully configured the backend "s3"! Terraform will automatically

use this backend unless the backend configuration changes.

Initializing provider plugins...

- Reusing previous version of yandex-cloud/yandex from the dependency lock file

- Using previously-installed yandex-cloud/yandex v0.78.1

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Created and switched to workspace "stage"!

You're now on a new, empty workspace. Workspaces isolate their state,

so if you run "terraform plan" Terraform will not see any existing state

for this configuration.

Initializing the backend...

Successfully configured the backend "s3"! Terraform will automatically

use this backend unless the backend configuration changes.

Initializing provider plugins...

- Reusing previous version of yandex-cloud/yandex from the dependency lock file

- Using previously-installed yandex-cloud/yandex v0.78.1

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

data.yandex_iam_service_account.my-netology: Reading...

data.yandex_iam_service_account.my-netology: Read complete after 0s [id=ajesg66dg5r1ahte7mqd]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# yandex_compute_instance.app will be created

+ resource "yandex_compute_instance" "app" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "app.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "app"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.db01 will be created

+ resource "yandex_compute_instance" "db01" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "db01.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "db01"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.db02 will be created

+ resource "yandex_compute_instance" "db02" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "db02.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "db02"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.gitlab will be created

+ resource "yandex_compute_instance" "gitlab" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "gitlab.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "gitlab"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8kdq6d0p8sij7h5qe3"

+ name = (known after apply)

+ size = 40

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.monitoring will be created

+ resource "yandex_compute_instance" "monitoring" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "monitoring.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "monitoring"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.proxy will be created

+ resource "yandex_compute_instance" "proxy" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "proxy"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-a"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd83slullt763d3lo57m"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = "192.168.101.100"

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = true

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 2

+ memory = 2

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.runner will be created

+ resource "yandex_compute_instance" "runner" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "runner.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "runner"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_dns_recordset.alertmanager will be created

+ resource "yandex_dns_recordset" "alertmanager" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "alertmanager.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.def will be created

+ resource "yandex_dns_recordset" "def" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "@.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.gitlab will be created

+ resource "yandex_dns_recordset" "gitlab" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "gitlab.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.grafana will be created

+ resource "yandex_dns_recordset" "grafana" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "grafana.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.prometheus will be created

+ resource "yandex_dns_recordset" "prometheus" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "prometheus.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.www will be created

+ resource "yandex_dns_recordset" "www" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "www.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_zone.diplom will be created

+ resource "yandex_dns_zone" "diplom" {

+ created_at = (known after apply)

+ description = "Diplom Netology public zone"

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = {

+ "label1" = "diplom-public"

}

+ name = "my-diplom-netology-zone"

+ private_networks = (known after apply)

+ public = true

+ zone = "ovirt.ru."

}

# yandex_vpc_address.addr will be created

+ resource "yandex_vpc_address" "addr" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "ip-stage"

+ reserved = (known after apply)

+ used = (known after apply)

+ external_ipv4_address {

+ address = (known after apply)

+ ddos_protection_provider = (known after apply)

+ outgoing_smtp_capability = (known after apply)

+ zone_id = "ru-central1-a"

}

}

# yandex_vpc_network.default will be created

+ resource "yandex_vpc_network" "default" {

+ created_at = (known after apply)

+ default_security_group_id = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "net-stage"

+ subnet_ids = (known after apply)

}

# yandex_vpc_route_table.route-table will be created

+ resource "yandex_vpc_route_table" "route-table" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "nat-instance-route"

+ network_id = (known after apply)

+ static_route {

+ destination_prefix = "0.0.0.0/0"

+ next_hop_address = "192.168.101.100"

}

}

# yandex_vpc_subnet.net-101 will be created

+ resource "yandex_vpc_subnet" "net-101" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "subnet-stage-101"

+ network_id = (known after apply)

+ route_table_id = (known after apply)

+ v4_cidr_blocks = [

+ "192.168.101.0/24",

]

+ v6_cidr_blocks = (known after apply)

+ zone = "ru-central1-a"

}

# yandex_vpc_subnet.net-102 will be created

+ resource "yandex_vpc_subnet" "net-102" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "subnet-stage-102"

+ network_id = (known after apply)

+ route_table_id = (known after apply)

+ v4_cidr_blocks = [

+ "192.168.102.0/24",

]

+ v6_cidr_blocks = (known after apply)

+ zone = "ru-central1-b"

}

Plan: 19 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ internal_ip_address_app_yandex_cloud = (known after apply)

+ internal_ip_address_db01_yandex_cloud = (known after apply)

+ internal_ip_address_db02_yandex_cloud = (known after apply)

+ internal_ip_address_gitlab_yandex_cloud = (known after apply)

+ internal_ip_address_monitoring_yandex_cloud = (known after apply)

+ internal_ip_address_proxy_lan_yandex_cloud = "192.168.101.100"

+ internal_ip_address_proxy_wan_yandex_cloud = (known after apply)

+ internal_ip_address_runner_yandex_cloud = (known after apply)

+ workspace = "stage"

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

data.yandex_iam_service_account.my-netology: Reading...

data.yandex_iam_service_account.my-netology: Read complete after 0s [id=ajesg66dg5r1ahte7mqd]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# yandex_compute_instance.app will be created

+ resource "yandex_compute_instance" "app" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "app.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "app"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.db01 will be created

+ resource "yandex_compute_instance" "db01" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "db01.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "db01"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.db02 will be created

+ resource "yandex_compute_instance" "db02" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "db02.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "db02"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.gitlab will be created

+ resource "yandex_compute_instance" "gitlab" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "gitlab.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "gitlab"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8kdq6d0p8sij7h5qe3"

+ name = (known after apply)

+ size = 40

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.monitoring will be created

+ resource "yandex_compute_instance" "monitoring" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "monitoring.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "monitoring"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.proxy will be created

+ resource "yandex_compute_instance" "proxy" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "proxy"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-a"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd83slullt763d3lo57m"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = "192.168.101.100"

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = true

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 2

+ memory = 2

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_compute_instance.runner will be created

+ resource "yandex_compute_instance" "runner" {

+ allow_stopping_for_update = true

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ fqdn = (known after apply)

+ hostname = "runner.ovirt.ru"

+ id = (known after apply)

+ metadata = {

+ "user-data" = <<-EOT

#cloud-config

users:

- name: user

groups: sudo

shell: /bin/bash

sudo: ['ALL=(ALL) NOPASSWD:ALL']

ssh_authorized_keys:

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCeOYFLwcOqvWVg6bamtPX/lxjq6wxnI7HBGOkqhusbpZajDbF7OZ0mSAzw4J4xV3rMMW1eimmi/vLTvYh2N91vUegbfleWuh9XfS0Ouv1XYiDiEw1X5wPfj8VwWIIIqSRfCxiO4C7njT+yRfpgJDXLHJ2Oy40c1kmvOPq6fA4zIBpqADAjCcLUS7qv1HIR3+K/v+fiUFUEKSFSKYY7ANsM0ujwjpnPnFpDlDxMkuX/8988zPlwIx2woEJTn8ea9UT0cdkdnIGGO7OVvPW16FoEMbs3ccp9l6Nv8DMFbWhd7Mp4Dkekpj+aLeDvCnQCUtcFZIn6AQ74m5ZwKNISoHZyqWehs7RIOPOEEbmpANEk1l7HZKvV7KvZPh1ucc2wj+prUD1ZRP7meRkwjn6orY80UVm7RP4ENsJYNePgZLmK247JbxVXnT93NpU597F78tOEdqpQPshc0jLDPpQRRfLfT6g3WMxL6yXl3CDiC9yr0FbSOzpcyaH7UsAqLsuozx0= user@user-ubuntu

EOT

}

+ name = "runner"

+ network_acceleration_type = "standard"

+ platform_id = "standard-v1"

+ service_account_id = (known after apply)

+ status = (known after apply)

+ zone = "ru-central1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_id = (known after apply)

+ mode = (known after apply)

+ initialize_params {

+ block_size = (known after apply)

+ description = (known after apply)

+ image_id = "fd8uoiksr520scs811jl"

+ name = (known after apply)

+ size = 10

+ snapshot_id = (known after apply)

+ type = "network-nvme"

}

}

+ network_interface {

+ index = (known after apply)

+ ip_address = (known after apply)

+ ipv4 = true

+ ipv6 = (known after apply)

+ ipv6_address = (known after apply)

+ mac_address = (known after apply)

+ nat = false

+ nat_ip_address = (known after apply)

+ nat_ip_version = (known after apply)

+ security_group_ids = (known after apply)

+ subnet_id = (known after apply)

}

+ placement_policy {

+ host_affinity_rules = (known after apply)

+ placement_group_id = (known after apply)

}

+ resources {

+ core_fraction = 100

+ cores = 4

+ memory = 4

}

+ scheduling_policy {

+ preemptible = (known after apply)

}

}

# yandex_dns_recordset.alertmanager will be created

+ resource "yandex_dns_recordset" "alertmanager" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "alertmanager.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.def will be created

+ resource "yandex_dns_recordset" "def" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "@.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.gitlab will be created

+ resource "yandex_dns_recordset" "gitlab" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "gitlab.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.grafana will be created

+ resource "yandex_dns_recordset" "grafana" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "grafana.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.prometheus will be created

+ resource "yandex_dns_recordset" "prometheus" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "prometheus.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_recordset.www will be created

+ resource "yandex_dns_recordset" "www" {

+ data = (known after apply)

+ id = (known after apply)

+ name = "www.ovirt.ru."

+ ttl = 200

+ type = "A"

+ zone_id = (known after apply)

}

# yandex_dns_zone.diplom will be created

+ resource "yandex_dns_zone" "diplom" {

+ created_at = (known after apply)

+ description = "Diplom Netology public zone"

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = {

+ "label1" = "diplom-public"

}

+ name = "my-diplom-netology-zone"

+ private_networks = (known after apply)

+ public = true

+ zone = "ovirt.ru."

}

# yandex_vpc_address.addr will be created

+ resource "yandex_vpc_address" "addr" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "ip-stage"

+ reserved = (known after apply)

+ used = (known after apply)

+ external_ipv4_address {

+ address = (known after apply)

+ ddos_protection_provider = (known after apply)

+ outgoing_smtp_capability = (known after apply)

+ zone_id = "ru-central1-a"

}

}

# yandex_vpc_network.default will be created

+ resource "yandex_vpc_network" "default" {

+ created_at = (known after apply)

+ default_security_group_id = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "net-stage"

+ subnet_ids = (known after apply)

}

# yandex_vpc_route_table.route-table will be created

+ resource "yandex_vpc_route_table" "route-table" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "nat-instance-route"

+ network_id = (known after apply)

+ static_route {

+ destination_prefix = "0.0.0.0/0"

+ next_hop_address = "192.168.101.100"

}

}

# yandex_vpc_subnet.net-101 will be created

+ resource "yandex_vpc_subnet" "net-101" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "subnet-stage-101"

+ network_id = (known after apply)

+ route_table_id = (known after apply)

+ v4_cidr_blocks = [

+ "192.168.101.0/24",

]

+ v6_cidr_blocks = (known after apply)

+ zone = "ru-central1-a"

}

# yandex_vpc_subnet.net-102 will be created

+ resource "yandex_vpc_subnet" "net-102" {

+ created_at = (known after apply)

+ folder_id = (known after apply)

+ id = (known after apply)

+ labels = (known after apply)

+ name = "subnet-stage-102"

+ network_id = (known after apply)

+ route_table_id = (known after apply)

+ v4_cidr_blocks = [

+ "192.168.102.0/24",

]

+ v6_cidr_blocks = (known after apply)

+ zone = "ru-central1-b"

}

Plan: 19 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ internal_ip_address_app_yandex_cloud = (known after apply)

+ internal_ip_address_db01_yandex_cloud = (known after apply)

+ internal_ip_address_db02_yandex_cloud = (known after apply)

+ internal_ip_address_gitlab_yandex_cloud = (known after apply)

+ internal_ip_address_monitoring_yandex_cloud = (known after apply)

+ internal_ip_address_proxy_lan_yandex_cloud = "192.168.101.100"

+ internal_ip_address_proxy_wan_yandex_cloud = (known after apply)

+ internal_ip_address_runner_yandex_cloud = (known after apply)

+ workspace = "stage"

yandex_vpc_network.default: Creating...

yandex_vpc_address.addr: Creating...

yandex_vpc_network.default: Creation complete after 2s [id=enpmc8fl0pnpqr81iv0l]

yandex_vpc_route_table.route-table: Creating...

yandex_vpc_address.addr: Creation complete after 2s [id=e9bi2bvda2n2sshdia03]

yandex_vpc_route_table.route-table: Creation complete after 1s [id=enpaodgt0egahkls4jrh]

yandex_vpc_subnet.net-101: Creating...

yandex_vpc_subnet.net-102: Creating...

yandex_vpc_subnet.net-101: Creation complete after 0s [id=e9bu3c9uuiklj4lkk181]

yandex_compute_instance.proxy: Creating...

yandex_vpc_subnet.net-102: Creation complete after 1s [id=e2lpd8q6sffi6jk8827q]

yandex_dns_zone.diplom: Creating...

yandex_compute_instance.gitlab: Creating...

yandex_compute_instance.db01: Creating...

yandex_compute_instance.db02: Creating...

yandex_compute_instance.runner: Creating...

yandex_compute_instance.app: Creating...

yandex_compute_instance.monitoring: Creating...

yandex_dns_zone.diplom: Creation complete after 1s [id=dnsa3r3poasr3qvf5u22]

yandex_dns_recordset.alertmanager: Creating...

yandex_dns_recordset.www: Creating...

yandex_dns_recordset.def: Creating...

yandex_dns_recordset.alertmanager: Creation complete after 1s [id=dnsa3r3poasr3qvf5u22/alertmanager.ovirt.ru./A]

yandex_dns_recordset.grafana: Creating...

yandex_dns_recordset.def: Creation complete after 1s [id=dnsa3r3poasr3qvf5u22/@.ovirt.ru./A]

yandex_dns_recordset.prometheus: Creating...

yandex_dns_recordset.grafana: Creation complete after 0s [id=dnsa3r3poasr3qvf5u22/grafana.ovirt.ru./A]

yandex_dns_recordset.gitlab: Creating...

yandex_dns_recordset.prometheus: Creation complete after 0s [id=dnsa3r3poasr3qvf5u22/prometheus.ovirt.ru./A]

yandex_dns_recordset.www: Creation complete after 2s [id=dnsa3r3poasr3qvf5u22/www.ovirt.ru./A]

yandex_dns_recordset.gitlab: Creation complete after 1s [id=dnsa3r3poasr3qvf5u22/gitlab.ovirt.ru./A]

yandex_compute_instance.proxy: Still creating... [10s elapsed]

yandex_compute_instance.gitlab: Still creating... [10s elapsed]

yandex_compute_instance.runner: Still creating... [10s elapsed]

yandex_compute_instance.db02: Still creating... [10s elapsed]

yandex_compute_instance.db01: Still creating... [10s elapsed]

yandex_compute_instance.app: Still creating... [10s elapsed]

yandex_compute_instance.monitoring: Still creating... [10s elapsed]

yandex_compute_instance.proxy: Still creating... [20s elapsed]

yandex_compute_instance.gitlab: Still creating... [20s elapsed]

yandex_compute_instance.db02: Still creating... [20s elapsed]

yandex_compute_instance.runner: Still creating... [20s elapsed]

yandex_compute_instance.db01: Still creating... [20s elapsed]

yandex_compute_instance.app: Still creating... [20s elapsed]

yandex_compute_instance.monitoring: Still creating... [20s elapsed]

yandex_compute_instance.db01: Creation complete after 25s [id=epd8tdab3jkoirf6j9mu]

yandex_compute_instance.app: Creation complete after 29s [id=epdtb69rrks8098msq5v]

yandex_compute_instance.proxy: Still creating... [30s elapsed]

yandex_compute_instance.gitlab: Still creating... [30s elapsed]

yandex_compute_instance.runner: Still creating... [30s elapsed]

yandex_compute_instance.db02: Still creating... [30s elapsed]

yandex_compute_instance.monitoring: Still creating... [30s elapsed]

yandex_compute_instance.db02: Creation complete after 30s [id=epdsn4dfp5t3v1cdvrvq]

yandex_compute_instance.gitlab: Creation complete after 32s [id=epdcdnr3qsucsm5j8hfk]

yandex_compute_instance.monitoring: Creation complete after 32s [id=epddfkiltbe9c3ivhk7q]

yandex_compute_instance.runner: Creation complete after 32s [id=epdbhs7ktfecphcvvndn]

yandex_compute_instance.proxy: Creation complete after 33s [id=fhme2i3cssi1hsfb12gi]

Apply complete! Resources: 19 added, 0 changed, 0 destroyed.

Outputs:

internal_ip_address_app_yandex_cloud = "192.168.102.23"

internal_ip_address_db01_yandex_cloud = "192.168.102.34"

internal_ip_address_db02_yandex_cloud = "192.168.102.25"

internal_ip_address_gitlab_yandex_cloud = "192.168.102.19"

internal_ip_address_monitoring_yandex_cloud = "192.168.102.29"

internal_ip_address_proxy_lan_yandex_cloud = "192.168.101.100"

internal_ip_address_proxy_wan_yandex_cloud = "51.250.66.88"

internal_ip_address_runner_yandex_cloud = "192.168.102.20"

workspace = "stage"

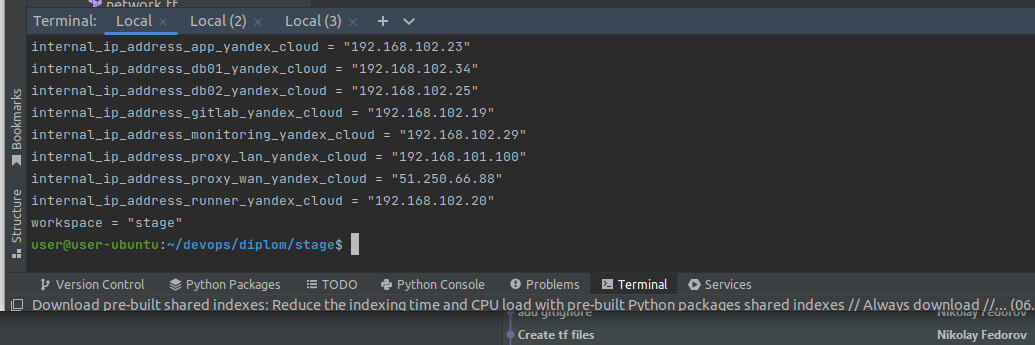

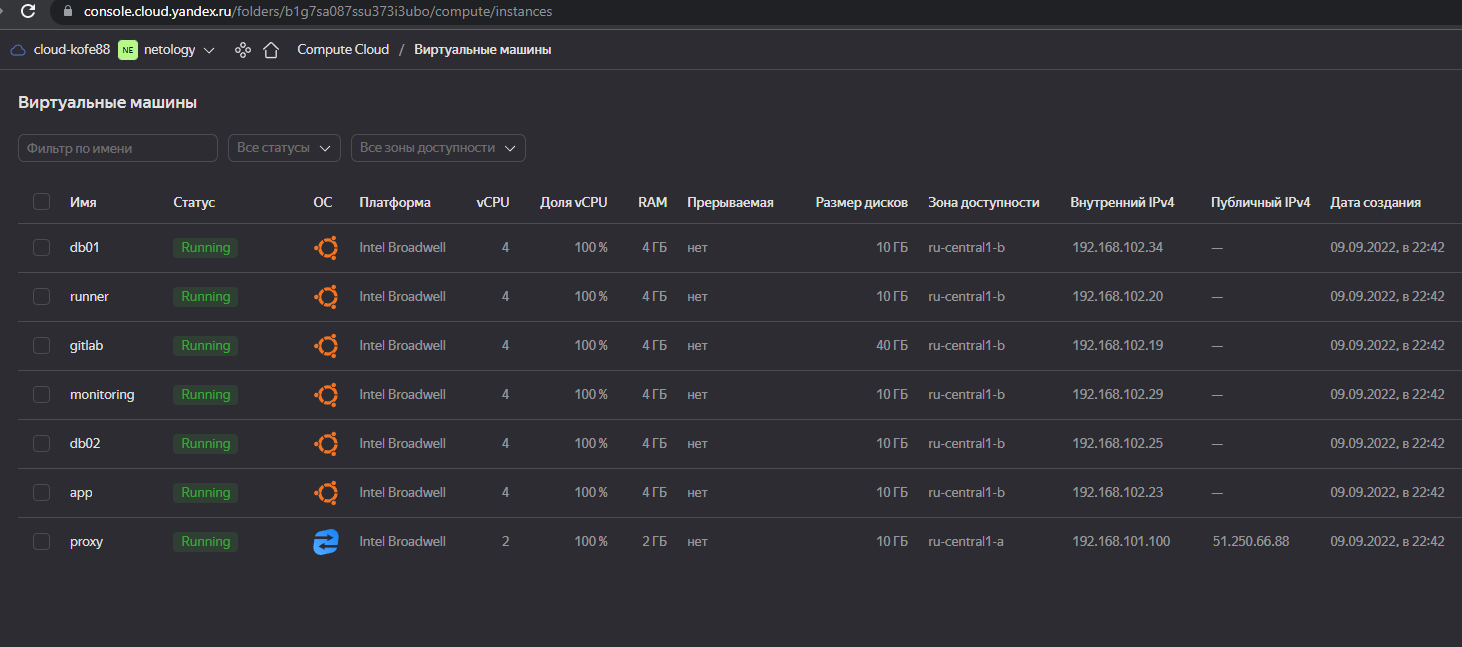

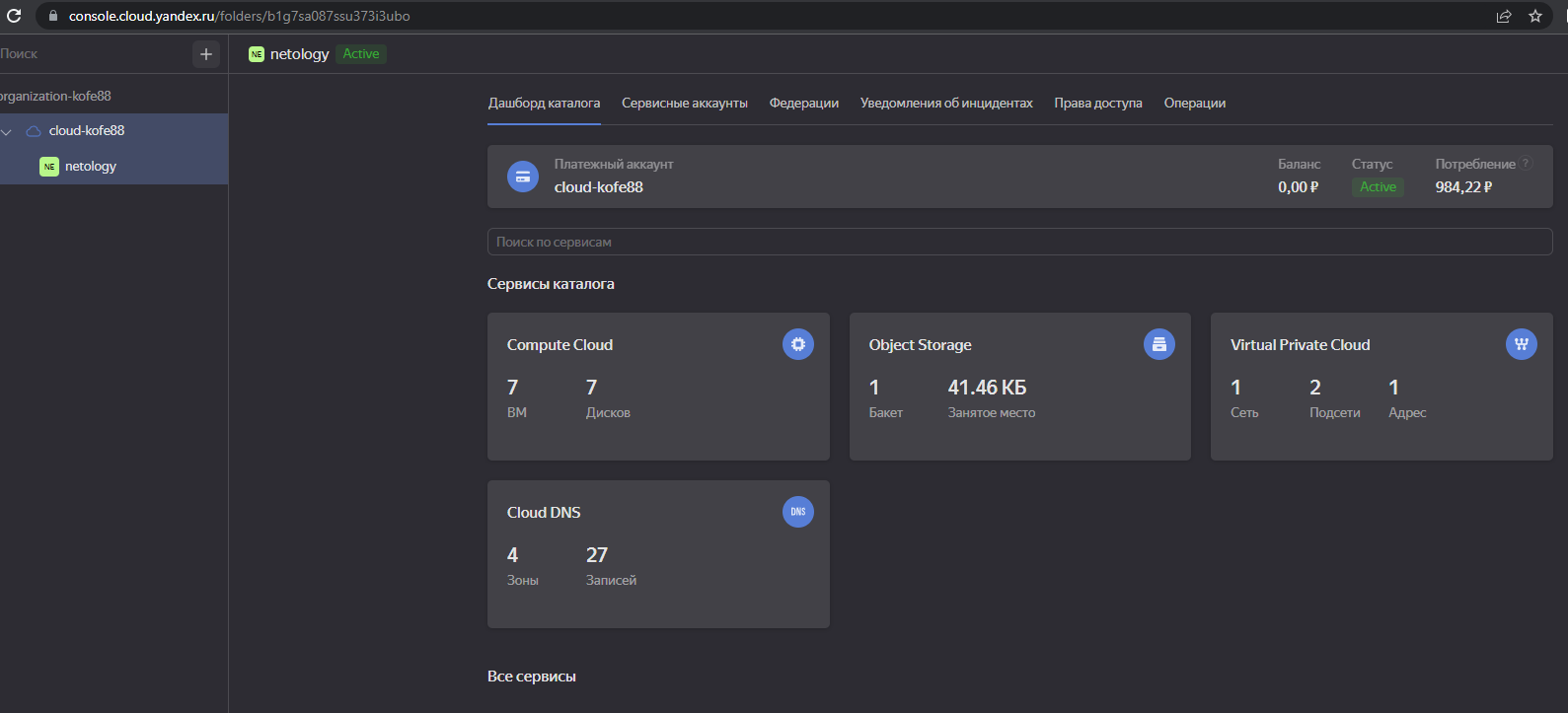

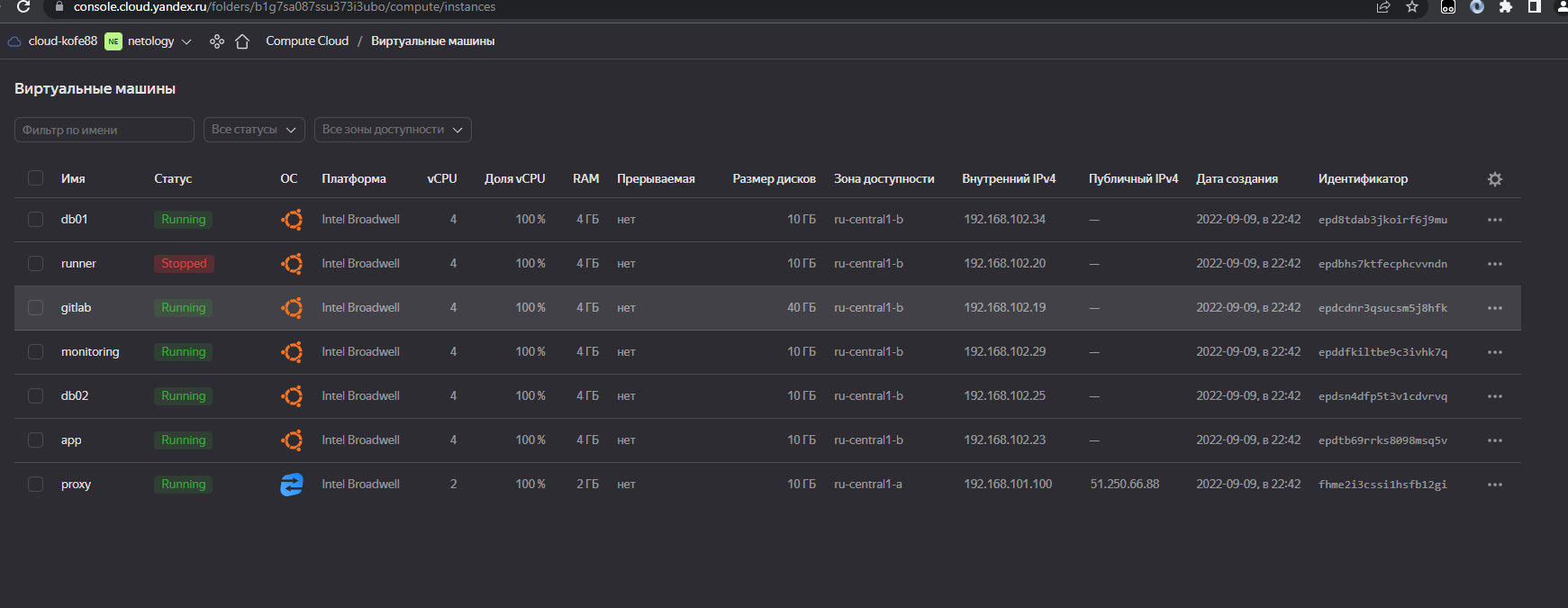

As a result, 7 virtual machines are created (5 running Ubuntu 22.04, 1 running Ubuntu 20.04, and proxy, an Ubuntu 18.04 NAT instance).

A network and two subnets, 192.168.101.0/24 and 192.168.102.0/24, are created.

Routes between them are configured.

A public IP address is reserved.

DNS records are created in YC DNS as required by the assignment.

output.json contains all the assigned IP addresses for later use with Ansible.

The state of the stage workspace is stored in a YC S3 bucket.

The outputs defined in output.tf are written to output.json.

Next, we use envsubst.

https://900913.ru/tldr/common/en/envsubst/

Replace environment variables in an input file and output to a file:

envsubst < {{path/to/input_file}} > {{path/to/output_file}}

First we need to extract the required values from the JSON file; for that we use jq (which I learned about in earlier homework, including the HashiCorp Vault coursework).

To do this, run hosts.sh with the following contents:

#!/bin/bash

export internal_ip_address_app_yandex_cloud=$(< output.json jq -r '.internal_ip_address_app_yandex_cloud | .value')

export internal_ip_address_db01_yandex_cloud=$(< output.json jq -r '.internal_ip_address_db01_yandex_cloud | .value')

export internal_ip_address_db02_yandex_cloud=$(< output.json jq -r '.internal_ip_address_db02_yandex_cloud | .value')

export internal_ip_address_gitlab_yandex_cloud=$(< output.json jq -r '.internal_ip_address_gitlab_yandex_cloud | .value')

export internal_ip_address_monitoring_yandex_cloud=$(< output.json jq -r '.internal_ip_address_monitoring_yandex_cloud | .value')

export internal_ip_address_proxy_wan_yandex_cloud=$(< output.json jq -r '.internal_ip_address_proxy_wan_yandex_cloud | .value')

export internal_ip_address_runner_yandex_cloud=$(< output.json jq -r '.internal_ip_address_runner_yandex_cloud | .value')

envsubst < hosts.j2 > ../../ansible/hosts

Here jq extracts the required values from output.json and stores them in environment variables; envsubst then fills in the hosts.j2 template with the hosts for Ansible and writes the result to the Ansible directory.

An Ansible role for installing Nginx and LetsEncrypt must be developed.

While testing your code, request LetsEncrypt test (staging) certificates, because the number of requests to the LetsEncrypt production servers is rate-limited.
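With certbot, the test environment is selected by the `--staging` flag (also available as `--test-cert`); certificates issued this way are not trusted by browsers. Below is a minimal sketch of how a command line could be assembled depending on a staging switch. The helper name, the `LETSENCRYPT_STAGING` variable and the e-mail address are assumptions for illustration, and the command is only printed, not executed.

```shell
#!/bin/bash
set -euo pipefail

# Hypothetical helper: print a certbot command line for the given domains.
# The first argument is "true" to use the staging environment.
build_certbot_cmd() {
  local staging=$1; shift
  local cmd=(certbot certonly --nginx --non-interactive --agree-tos -m admin@you.domain)
  if [ "$staging" = "true" ]; then
    cmd+=(--staging)   # staging CA: test certificates, not trusted by browsers
  fi
  local d
  for d in "$@"; do
    cmd+=(-d "$d")
  done
  printf '%s\n' "${cmd[*]}"
}

# Assumed switch; defaults to staging so tests do not hit production limits.
LETSENCRYPT_STAGING=${LETSENCRYPT_STAGING:-true}
build_certbot_cmd "$LETSENCRYPT_STAGING" www.you.domain gitlab.you.domain
```

Defaulting the switch to staging means a production certificate is requested only when that is asked for explicitly.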

Recommendations:

- Server name: you.domain

- Specifications: 2 vCPU, 2 GB RAM, external (public) address and internal address.

Goal:

- Create a reverse proxy with TLS support to provide secure access to the web services over HTTPS.

Expected results:

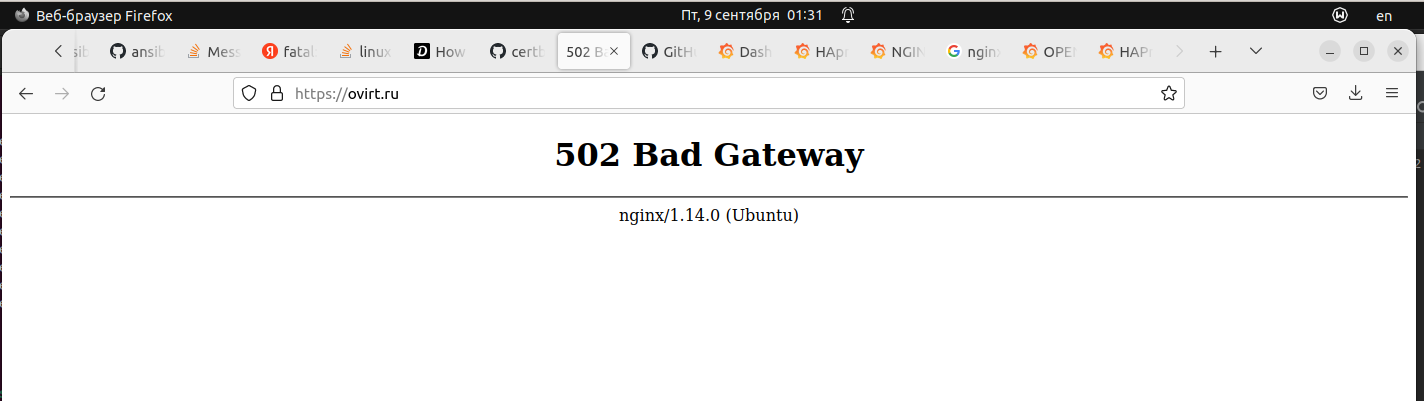

- All A records in your DNS zone point to the external address of this server:

- https://www.you.domain (WordPress)

- https://gitlab.you.domain (Gitlab)

- https://grafana.you.domain (Grafana)

- https://prometheus.you.domain (Prometheus)

- https://alertmanager.you.domain (Alert Manager)

- All upstreams for the URLs listed above are configured. Where they point at this step does not matter; you will edit them later and set the correct values.

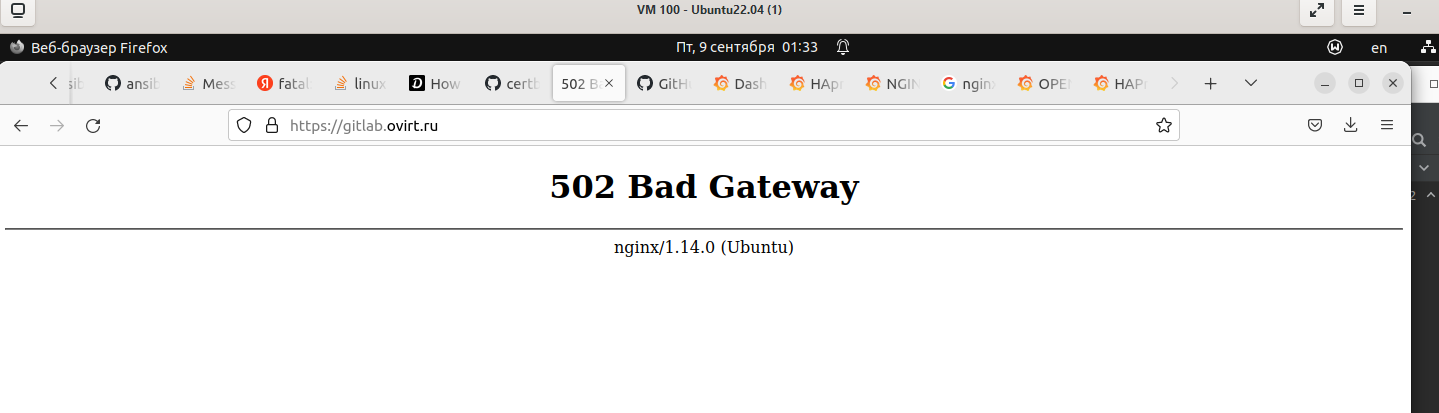

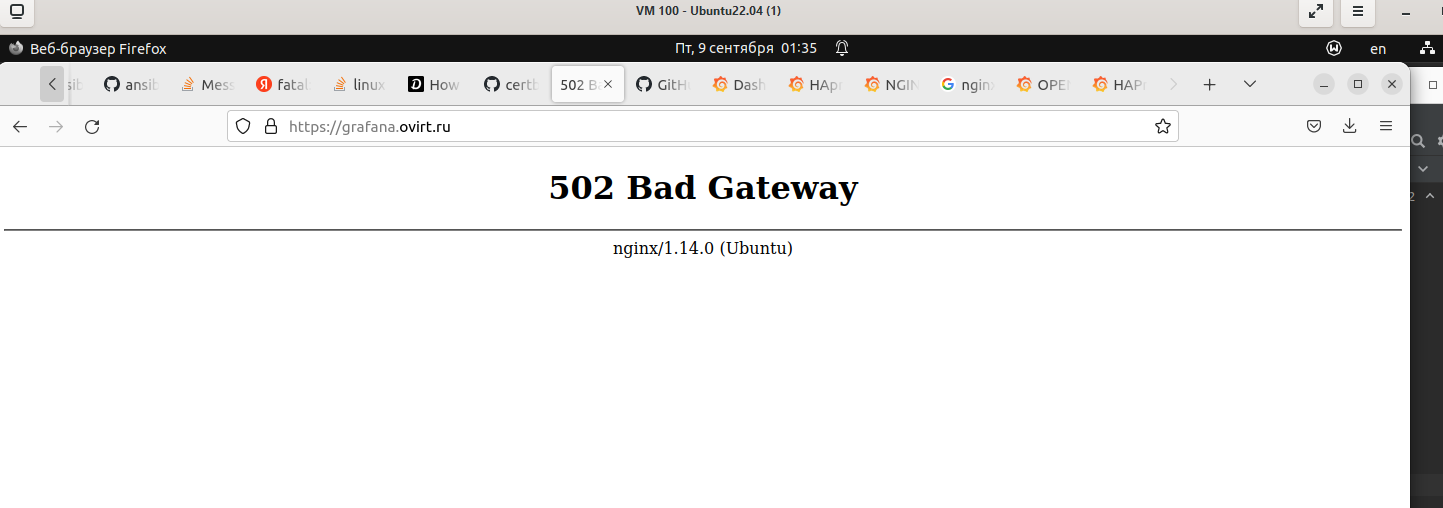

- Any of these URLs can be opened in a browser and returns a server response (502 Bad Gateway). At this stage of the assignment this is expected!
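The same expected state can be confirmed from the command line by requesting each URL and printing the HTTP status code. This is a minimal sketch under the placeholder domain you.domain; the helper names and the `--run` switch are illustrative, and `-k` disables certificate verification, which is needed while test certificates are in use.

```shell
#!/bin/bash
set -uo pipefail

DOMAIN=${DOMAIN:-you.domain}   # placeholder domain, replace with your own

# Print the HTTP status code for a URL ("000" if no response was received).
http_status() {
  curl -k -s -o /dev/null --max-time 10 -w '%{http_code}' "$1"
}

# Interpret a status code for this stage of the assignment.
describe_status() {
  case "$1" in
    502) echo "proxy is up, backend not reachable yet (expected)" ;;
    000) echo "no response from proxy" ;;
    *)   echo "unexpected status $1" ;;
  esac
}

# Check all five service names behind the reverse proxy.
check_all() {
  local host code
  for host in www gitlab grafana prometheus alertmanager; do
    code=$(http_status "https://$host.$DOMAIN") || true
    echo "$host.$DOMAIN: $code - $(describe_status "$code")"
  done
}

# Perform the requests only when asked to, e.g. ./check.sh --run
if [ "${1:-}" = "--run" ]; then
  check_all
fi
```

Once the backends are deployed in later steps, the same loop should start reporting other status codes instead of 502.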

The following materials were used here:

https://github.com/coopdevs/certbot_nginx

https://github.com/geerlingguy/ansible-role-certbot/

To generate a test certificate, set `letsencrypt_staging: true` in the role defaults.

Then change to the Ansible directory and run `ansible-playbook proxy.yml -i hosts`.

Ansible output

user@user-ubuntu:~/devops/diplom/ansible$ ansible-playbook proxy.yml -i hosts

PLAY [proxy] ********************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************

ok: [ovirt.ru]

TASK [proxy : Install Nginx] ****************************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Set Certbot package name and versions (Ubuntu >= 20.04)] **********************************************************************************************************

skipping: [ovirt.ru]

TASK [proxy : Set Certbot package name and versions (Ubuntu < 20.04)] ***********************************************************************************************************

ok: [ovirt.ru]

TASK [proxy : Add certbot repository] *******************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Install certbot] **************************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Install certbot-nginx plugin] *************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Check if certificate already exists] ******************************************************************************************************************************

ok: [ovirt.ru]

TASK [proxy : Force generation of a new certificate] ****************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Add cron job for certbot renewal] *********************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Add nginx.conf] ***************************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Add default site] *************************************************************************************************************************************************

changed: [ovirt.ru]

TASK [proxy : Add site conf] ****************************************************************************************************************************************************

changed: [ovirt.ru]

RUNNING HANDLER [proxy : nginx systemd] *****************************************************************************************************************************************

ok: [ovirt.ru]

RUNNING HANDLER [proxy : nginx restart] *****************************************************************************************************************************************

changed: [ovirt.ru]

PLAY RECAP **********************************************************************************************************************************************************************

ovirt.ru : ok=14 changed=10 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

You need to develop an Ansible role that installs a MySQL cluster.

Recommendations:

- Server names: `db01.you.domain` and `db02.you.domain`

- Specifications: 4 vCPU, 4 GB RAM, internal address.

Goal:

- Obtain a fault-tolerant MySQL database cluster.

Expected results:

- MySQL runs in Master/Slave replication mode.

- A database named `wordpress` is created in the cluster automatically.

- A user `wordpress` with full privileges on the `wordpress` database and the password `wordpress` is created in the cluster automatically.

Keep in mind that these values are acceptable for training purposes only; in a production environment they are unacceptable. Good practice is to use complex logins and passwords that contain upper- and lower-case letters, digits and special characters.
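For anything beyond training, a password along those lines can be generated rather than invented. The sketch below is one simple way to do it with standard tools; the function name `generate_password`, the length of 24 and the character set are assumptions made for this example (the set deliberately leaves out quotes and backslashes so the value is easy to pass through YAML and SQL).

```shell
#!/usr/bin/env bash
set -eu

# Generate a random password of the requested length (default 24).
# Characters are drawn from letters, digits and a few safe symbols.
generate_password() {
  length="${1:-24}"
  LC_ALL=C tr -dc 'A-Za-z0-9@#%^+=_-' < /dev/urandom | head -c "$length"
  echo
}

generate_password 24
```

A random draw does not guarantee that every character class appears, so regenerate if a policy demands it. The result is better kept out of the repository, for example encrypted with `ansible-vault encrypt_string`.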

Configuration of master.cnf.j2:

[mysqld]

# Replication

server-id = 1

log-bin = mysql-bin

log-bin-index = mysql-bin.index

log-error = mysql-bin.err

relay-log = relay-bin

relay-log-info-file = relay-bin.info

relay-log-index = relay-bin.index

expire_logs_days=7

binlog-do-db = {{ db_name }}

Configuration of slave.cnf.j2:

[mysqld]

# Replication

server-id = 2

relay-log = relay-bin

relay-log-info-file = relay-log.info

relay-log-index = relay-log.index

replicate-do-db = {{ db_name }}

Materials used:

https://medium.com/@kelom.x/ansible-mysql-installation-2513d0f70faf

https://github.com/geerlingguy/ansible-role-mysql/blob/master/tasks/replication.yml

https://handyhost.ru/manuals/mysql/mysql-replication.html
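Once the cluster is up, the Master/Slave requirement can be verified on the replica by looking at the two replication threads. The sketch below shows one way to script that check; the function name `replication_ok` and the sample text are invented for this example, and it assumes a MySQL version that still reports `Slave_IO_Running` and `Slave_SQL_Running` in `SHOW SLAVE STATUS` (newer releases rename the statement and fields to use "Replica").

```shell
#!/usr/bin/env bash
set -eu

# Read `SHOW SLAVE STATUS\G` output on stdin and succeed only if both
# replication threads report "Yes".
replication_ok() {
  status=$(cat)
  printf '%s\n' "$status" | grep -Eq '^[[:space:]]*Slave_IO_Running:[[:space:]]*Yes' &&
  printf '%s\n' "$status" | grep -Eq '^[[:space:]]*Slave_SQL_Running:[[:space:]]*Yes'
}

# Hypothetical sample of the two relevant lines, so the sketch runs
# without a database.
sample='             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes'

if printf '%s\n' "$sample" | replication_ok; then
  echo "replication threads are running"
else
  echo "replication is broken" >&2
fi
```

On the replica host the same function could be fed real data, for example `sudo mysql -e 'SHOW SLAVE STATUS\G' | replication_ok`.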

To create the cluster, run `ansible-playbook mysql.yml -i hosts`.

Ansible output

user@user-ubuntu:~/devops/diplom/ansible$ ansible-playbook mysql.yml -i hosts

PLAY [db01 db02] ****************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************

ok: [db02.ovirt.ru]

ok: [db01.ovirt.ru]

TASK [mysql : Installing Mysql and dependencies] ********************************************************************************************************************************

changed: [db01.ovirt.ru] => (item=mysql-server)

changed: [db02.ovirt.ru] => (item=mysql-server)

changed: [db01.ovirt.ru] => (item=mysql-client)

changed: [db02.ovirt.ru] => (item=mysql-client)

changed: [db01.ovirt.ru] => (item=python3-mysqldb)

changed: [db02.ovirt.ru] => (item=python3-mysqldb)

changed: [db01.ovirt.ru] => (item=libmysqlclient-dev)

changed: [db02.ovirt.ru] => (item=libmysqlclient-dev)

TASK [mysql : start and enable mysql service] ***********************************************************************************************************************************

ok: [db02.ovirt.ru]

ok: [db01.ovirt.ru]

TASK [mysql : Creating database wordpress] **************************************************************************************************************************************

changed: [db01.ovirt.ru]

changed: [db02.ovirt.ru]

TASK [mysql : Creating mysql user wordpress] ************************************************************************************************************************************

changed: [db01.ovirt.ru]

changed: [db02.ovirt.ru]

TASK [mysql : Enable remote login to mysql] *************************************************************************************************************************************

changed: [db02.ovirt.ru]

changed: [db01.ovirt.ru]

TASK [mysql : Remove anonymous MySQL users.] ************************************************************************************************************************************

ok: [db01.ovirt.ru]

ok: [db02.ovirt.ru]

TASK [mysql : Remove MySQL test database.] **************************************************************************************************************************************

ok: [db01.ovirt.ru]

ok: [db02.ovirt.ru]