This is the official repository for the paper "Learning Motion in Feature Space: Locally-Consistent Deformable Convolution Networks for Fine-Grained Action Detection" in ICCV 2019.

Links

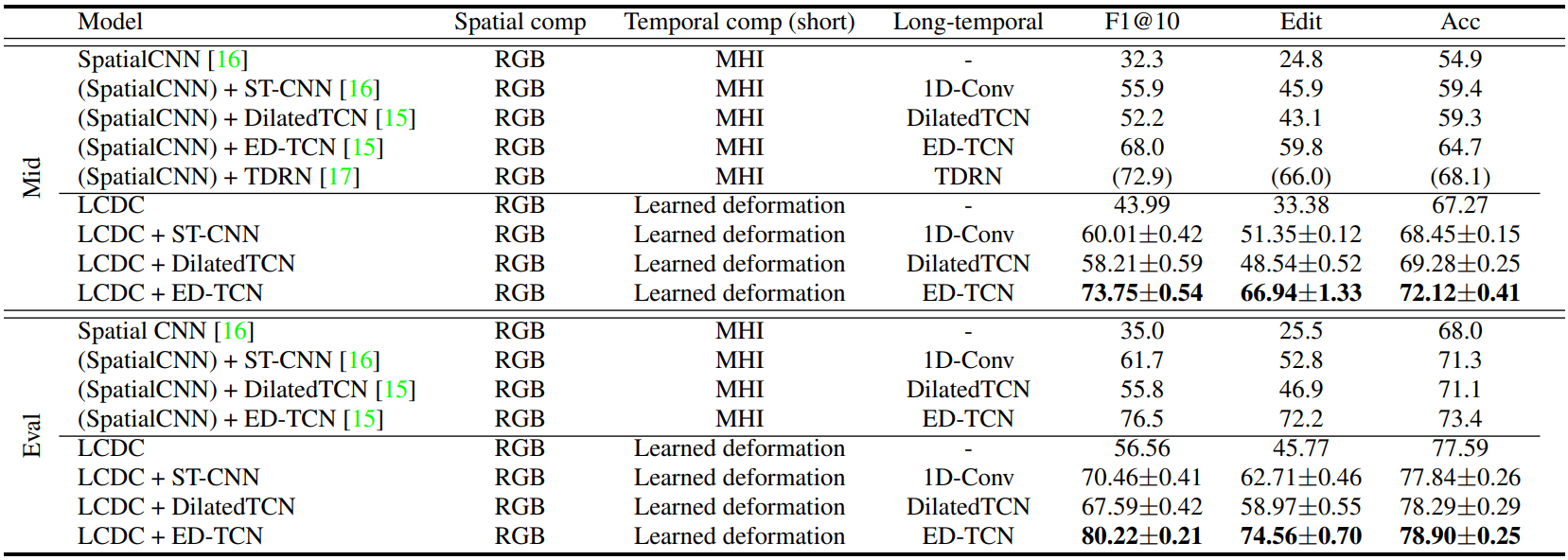

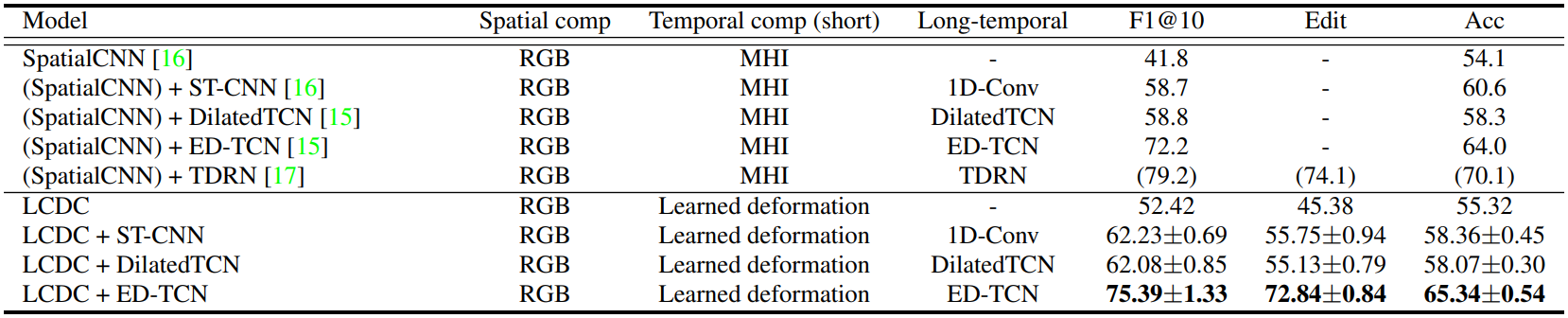

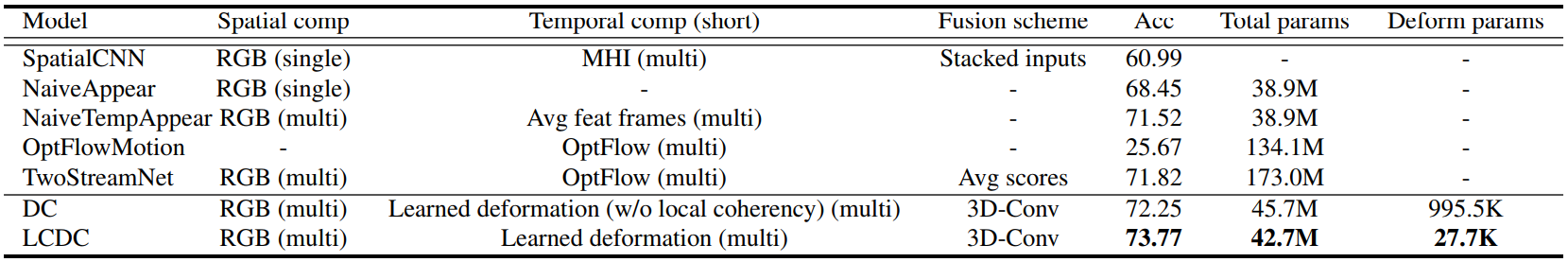

Experimental results:

Everything must be run from the root directory, e.g.

    ./scripts/50_salads/build_tfrecords_train.sh

To view the usage of the Python code, please use the help feature, e.g.

    python ./src/trainer.py --help

Please consider copying and modifying the scripts in the scripts/ directory for other use cases.

A docker for this project is available at: link.

It is recommended to create a separate virtual environment for this project.

Dependencies

Pip packages (can be installed using pip install -r requirements.txt):

- tensorflow-gpu==1.3.0
- matplotlib
- opencv-python
- pyyaml
- progressbar2
- cython
- easydict
- scikit-image
- scikit-learn

Other requirements:

- python3
- ffmpeg
- gcc4.9
- cuda8.0
- cudnn6.0

(The code may work with gcc5, but it has not been fully tested.)
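Before installing, it can help to confirm that the non-pip requirements are visible from your shell. The following is a minimal sketch, not part of this repository: the helper names are hypothetical, and it assumes that `ffmpeg`, `gcc`, and `nvcc` (used here as a stand-in for the CUDA toolkit) are expected on `PATH`. It only checks presence, not versions.

```python
import shutil
import sys


def missing_tools(tools=("ffmpeg", "gcc", "nvcc")):
    """Return the names in `tools` that cannot be found on PATH.

    The default tool list is an assumption made for this sketch.
    """
    return [name for name in tools if shutil.which(name) is None]


def python_is_3():
    """Return True when the running interpreter is Python 3."""
    return sys.version_info.major == 3


if __name__ == "__main__":
    if not python_is_3():
        print("python3 is required")
    for name in missing_tools():
        print("not found on PATH:", name)
```

Versions still need to be checked by hand, for example with each tool's own version flag.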

Setup TF_Deformable_Net:

Clone TF_Deformable_Net

    git clone https://github.com/Zardinality/TF_Deformable_Net $TF_DEFORMABLE_NET

where $TF_DEFORMABLE_NET is the target directory.

Add $TF_DEFORMABLE_NET to your PYTHONPATH (e.g. in .bashrc):

    export PYTHONPATH=$PYTHONPATH:$TF_DEFORMABLE_NET

Continue installing the framework by following the instructions in Zardinality's original TF_Deformable_Net repository.
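To confirm that the export took effect in the current shell, a small check along these lines may be useful. This is only a sketch: `on_pythonpath` is a hypothetical helper, not part of this repository or of TF_Deformable_Net, and the example path is a placeholder.

```python
import os


def on_pythonpath(directory, pythonpath=None):
    """Return True if `directory` is one of the entries in PYTHONPATH.

    `pythonpath` defaults to the current environment variable; passing a
    string explicitly makes the function easy to try out.
    """
    if pythonpath is None:
        pythonpath = os.environ.get("PYTHONPATH", "")
    target = os.path.normpath(os.path.abspath(directory))
    entries = [p for p in pythonpath.split(os.pathsep) if p]
    return any(os.path.normpath(os.path.abspath(p)) == target for p in entries)


if __name__ == "__main__":
    # Placeholder: replace with the directory you cloned TF_Deformable_Net into.
    clone_dir = os.environ.get("TF_DEFORMABLE_NET", "")
    if clone_dir:
        print("on PYTHONPATH:", on_pythonpath(clone_dir))
    else:
        print("TF_DEFORMABLE_NET is not set in this shell")
```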

Extra:

If the installation does not work, replace the corresponding files with those in ./extra/TF_Deformable_Net/. Remember to back up the originals before overwriting.

- Pretrained models (for initialization):

The pretrained weights are extracted from the weights provided by TF_Deformable_Net. Since the default deformable convolution network is implemented with 4 deformable groups, we extract each group separately to match the implementation of LCDC. The resulting weights are indexed as g0, g1, g2, g3. Which one you choose makes little difference.
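The g0 to g3 indexing can be pictured as slicing a channel axis into four equal parts. The sketch below illustrates that idea only; it is not the extraction code used for the released weights. It assumes that the groups are stored contiguously along the channel axis and that each group holds 2 offsets (x, y) for each location of a 3x3 kernel; `group_channel_ranges` is a hypothetical helper.

```python
def group_channel_ranges(num_channels, num_groups=4):
    """Map g0..g{n-1} to the (start, stop) channel range of each group.

    Assumes groups are laid out contiguously along the channel axis.
    """
    if num_channels % num_groups != 0:
        raise ValueError("channels must divide evenly among groups")
    size = num_channels // num_groups
    return {"g%d" % i: (i * size, (i + 1) * size) for i in range(num_groups)}


# Assumed layout: 3x3 kernel, 2 offsets per location, 4 groups -> 72 channels.
ranges = group_channel_ranges(2 * 3 * 3 * 4)
for name, (start, stop) in sorted(ranges.items()):
    print(name, start, stop)
```

With an array library, a range `(start, stop)` would then select one group's slice along the channel axis of the weight tensor.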

The weights are available here.

- Trained models:

Trained models are available here.

- Extracted features (for TCN):

Short-temporal features for TCN networks are available here.

This section shows how to set up a dataset, using the 50 Salads dataset as an example. Other datasets should follow a similar routine.

Create data/ directory, then download 50 Salads dataset and link it in data/, e.g.

    ln -s [/path/to/salads/dataset] ./data/50_salads_dataset

Copy extra contents from ./extra/50_salads_dataset/ to ./data/50_salads_dataset/.

Credits: The labels and splits are originally from Colin Lea's repository TemporalConvolutionalNetworks.

You should have something similar to this:

    data/
    └── 50_salads_dataset/
        ├── rgb/
        ├── labels/
        ├── splits/
        └── timestamps/
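A quick way to verify the layout above is to check for the four sub-directories. This is a minimal sketch with a hypothetical helper, `missing_subdirs`; it assumes the dataset was linked at ./data/50_salads_dataset as in the `ln -s` command and that it is run from the root directory.

```python
from pathlib import Path

# Sub-directories listed in the layout above.
EXPECTED_SUBDIRS = ("rgb", "labels", "splits", "timestamps")


def missing_subdirs(dataset_root, expected=EXPECTED_SUBDIRS):
    """Return the expected sub-directories that are absent under `dataset_root`.

    Symbolic links to directories count as present.
    """
    root = Path(dataset_root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_subdirs("./data/50_salads_dataset")
    print("missing:", ", ".join(missing) if missing else "none")
```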

Extract the frames and segment them at the fine-grained level. Modify the script accordingly.

    ./scripts/50_salads/prepare_data_50salads.sh

Prepare tfrecord files. Modify the script accordingly.

    ./scripts/50_salads/build_tfrecords_train.sh

Modify the training scripts accordingly before running. All training and testing scripts require GPU ID as an input argument, e.g.

    ./scripts/50_salads/resnet_motionconstraint/train_nomotion_g0.sh $GPU_ID

Results are stored in the logs/ directory. You can use TensorBoard to visualize training and testing processes, e.g.

    tensorboard --logdir [path/to/log/dir] --port [your/choice/of/port]

Further details of how to use TensorBoard are available at this link.

It is recommended to run the testing script in parallel with the training script, using a different GPU. The default configuration goes through the checkpoints you have already generated and then waits for new ones. Press Ctrl+C to stop the testing process. You can customize the number of checkpoints, or which checkpoints to run, by switching to another testing mode. Please inspect the help of tester_fd.py for more information.

    ./scripts/50_salads/resnet_motionconstraint/test_nomotion_g0.sh $GPU_ID

Please change the output dir and other parameters accordingly.

    ./scripts/50_salads/resnet_motionconstraint/extract_feat_nomotion_g0.sh $GPU_ID

If you are interested in using this project, please cite our paper as

@InProceedings{Mac_2019_ICCV,

author = {Mac, Khoi-Nguyen C. and

Joshi, Dhiraj and

Yeh, Raymond A. and

Xiong, Jinjun and

Feris, Rogerio S. and

Do, Minh N.},

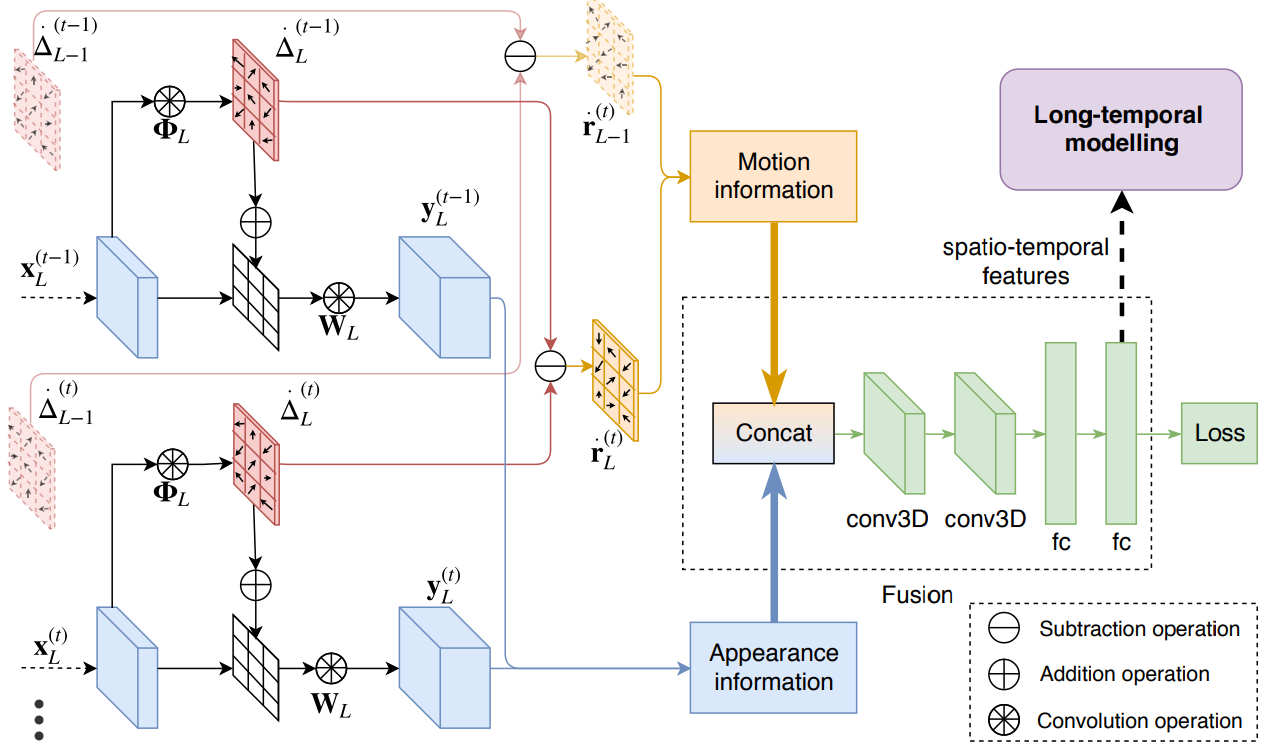

title = {Learning Motion in Feature Space: Locally-Consistent Deformable Convolution Networks for Fine-Grained Action Detection},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {October},

year = {2019}

}