Authors: Dongfu Jiang, Xiang Ren, Bill Yuchen Lin @ AI2-Mosaic USC-INK

Abstract

-

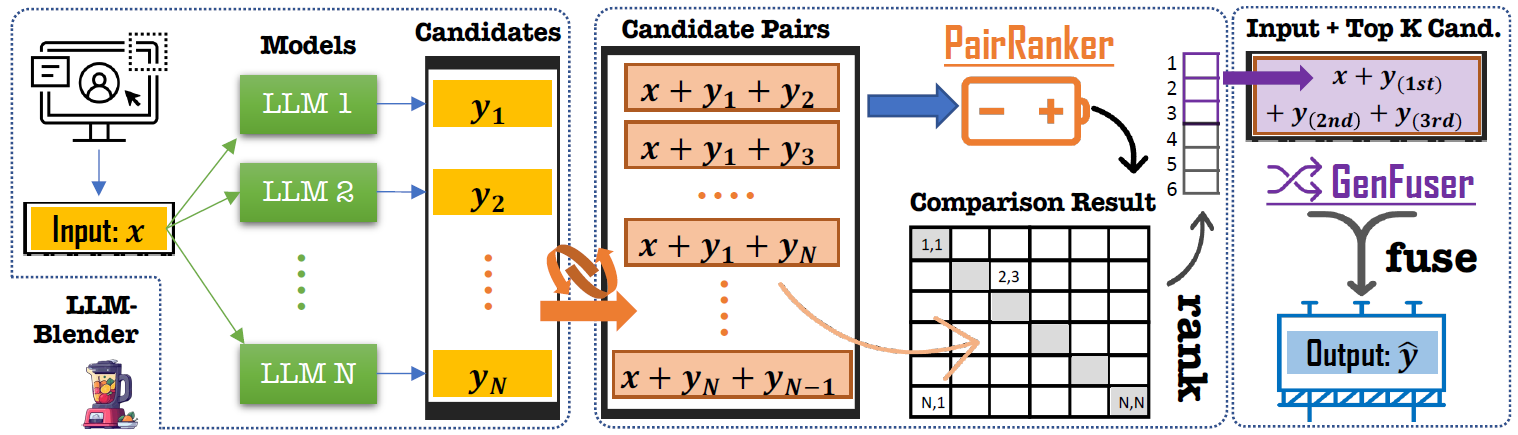

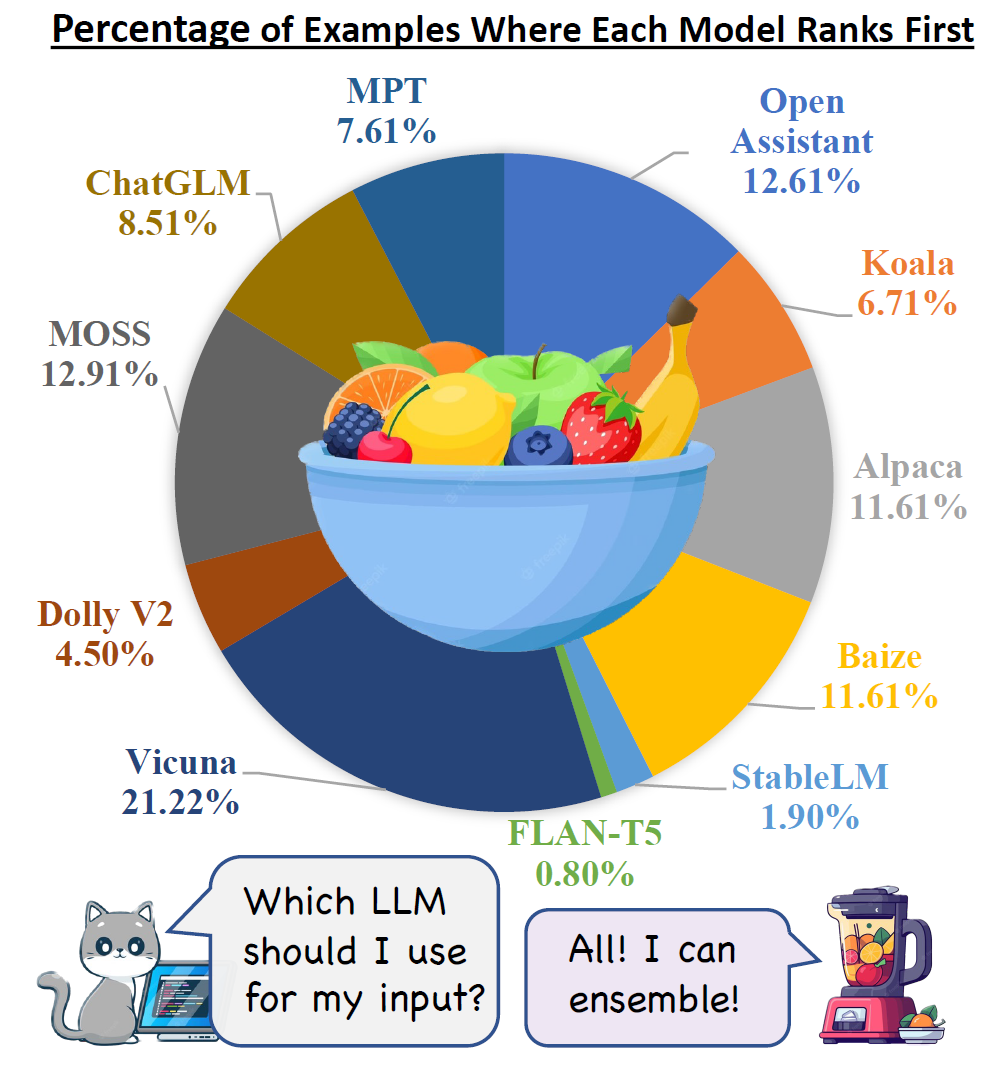

We introduce LLM-Blender, an innovative ensembling framework to attain consistently superior performance by leveraging the diverse strengths of multiple open-source large language models (LLMs). LLM-Blender cut the weaknesses through ranking and integrate the strengths through fusing generation to enhance the capability of LLMs.

- Our framework consists of two complementary modules: PairRanker and GenFuser, addressing the observation that optimal LLMs for different examples can significantly vary. PairRanker employs a specialized pairwise comparison method to distinguish subtle differences between candidate outputs. GenFuser aims to merge the top-ranked candidates from the aggregation of PairRanker's pairwise comparisons into an improved output by capitalizing on their strengths and mitigating their weaknesses.

- To facilitate large-scale evaluation, we introduce a benchmark dataset, MixInstruct, which is a mixture of multiple instruction datasets featuring oracle pairwise comparisons for testing purposes. Our LLM-Blender significantly surpasses the best LLMs and baseline ensembling methods across various metrics on MixInstruct, establishing a substantial performance gap.
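As a rough illustration of how pairwise comparisons can be aggregated into a ranking, the sketch below counts wins for each candidate in a toy comparison matrix. The win-count rule and the name `rank_from_pairwise` are assumptions made for illustration; they are not taken from the LLM-Blender code, which may aggregate differently.

```python
from typing import List


def rank_from_pairwise(wins: List[List[bool]]) -> List[int]:
    """Turn a pairwise win matrix into 1-based ranks (1 = best).

    wins[i][j] is True when candidate i was judged better than candidate j.
    Aggregating by plain win count is an assumption of this sketch.
    """
    n = len(wins)
    win_counts = [
        sum(1 for j in range(n) if j != i and wins[i][j]) for i in range(n)
    ]
    # Sort candidate indices by descending win count; ties keep input order.
    order = sorted(range(n), key=lambda i: -win_counts[i])
    ranks = [0] * n
    for position, idx in enumerate(order):
        ranks[idx] = position + 1
    return ranks


# Toy matrix: candidate 1 beats both others, candidate 0 beats candidate 2.
toy_wins = [
    [False, False, True],
    [True, False, True],
    [False, False, False],
]
toy_ranks = rank_from_pairwise(toy_wins)
print(toy_ranks)
```

The top-ranked candidates under such a ranking are the ones that would then be handed to a fusion step.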

git clone https://github.com/yuchenlin/LLM-Blender.git

cd LLM-Blender

pip install -e .

Then you are good to go with LLM-Blender via import llm_blender.

- Please first download our DeBERTa-v3-large PairRanker checkpoint to your local folder: checkpoint link

import llm_blender

ranker_config = llm_blender.RankerConfig

ranker_config.ranker_type = "pairranker"

ranker_config.model_type = "deberta"

ranker_config.model_name = "microsoft/deberta-v3-large"

ranker_config.load_checkpoint = "<your checkpoint path>"

ranker_config.cache_dir = "./hf_models"

ranker_config.source_maxlength = 128

ranker_config.candidate_maxlength = 128

ranker_config.n_tasks = 1

fuser_config = llm_blender.GenFuserConfig

fuser_config.model_name = "llm-blender/gen_fuser_3b"

fuser_config.cache_dir = "./hf_models"

fuser_config.max_length = 512

fuser_config.candidate_maxlength = 128

blender_config = llm_blender.BlenderConfig

blender_config.device = "cuda"

blender = llm_blender.Blender(blender_config, ranker_config, fuser_config)

- Then you can rank with the following function

inputs = ["input1", "input2"]

candidates_texts = [["candidate1 for input1", "candidate2 for input1"], ["candidate1 for input2", "candidate2 for input2"]]

ranks = blender.rank(inputs, candidates_texts, return_scores=False, batch_size=2)

# ranks is a list of ranks where ranks[i][j] represents the rank of candidate-j for input-i

- You can fuse the top-ranked candidates with the following code

from llm_blender.blender.blender_utils import get_topk_candidates_from_ranks

topk_candidates = get_topk_candidates_from_ranks(ranks, candidates_texts, top_k=3)

fuse_generations = blender.fuse(inputs, topk_candidates, batch_size=2)

# fuse_generations are the fused generations from our fine-tuned checkpoint

- You can also do the rank and fusion as a whole

fuse_generations, ranks = blender.rank_and_fuse(inputs, candidates_texts, return_scores=False, batch_size=2, top_k=3)

- Using llm-blender to directly compare two candidates

candidates_A = [cands[0] for cands in candidates_texts]

candidates_B = [cands[1] for cands in candidates_texts]

comparison_results = blender.compare(inputs, candidates_A, candidates_B)

# comparison_results is a list of bool, where comparison_results[i] denotes whether candidates_A[i] is better than candidates_B[i] for inputs[i]

- Check more details on our example jupyter notebook usage:

blender_usage.ipynb
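To make the `ranks[i][j]` layout concrete, here is a minimal pure-Python sketch that selects the best candidates per input from a ranks list. It assumes that a smaller rank means a better candidate (rank 1 is best); `pick_top_k` is a hypothetical helper written for illustration and is not the library's `get_topk_candidates_from_ranks`, whose exact behavior may differ.

```python
from typing import List


def pick_top_k(
    ranks: List[List[int]], candidates_texts: List[List[str]], top_k: int = 1
) -> List[List[str]]:
    """For each input, return its top_k candidates ordered from best to worst.

    Assumes ranks[i][j] is the rank of candidate j for input i, with 1 = best.
    """
    selected = []
    for cand_ranks, cands in zip(ranks, candidates_texts):
        # Candidate indices sorted by ascending rank (best first).
        order = sorted(range(len(cands)), key=lambda j: cand_ranks[j])
        selected.append([cands[j] for j in order[:top_k]])
    return selected


# Hand-written ranks for two inputs with two candidates each (illustrative).
example_ranks = [[2, 1], [1, 2]]
example_candidates = [
    ["candidate1 for input1", "candidate2 for input1"],
    ["candidate1 for input2", "candidate2 for input2"],
]
top1 = pick_top_k(example_ranks, example_candidates, top_k=1)
print(top1)
```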

- To facilitate large-scale evaluation, we introduce a benchmark dataset, MixInstruct, which is a mixture of multiple instruction datasets featuring oracle pairwise comparisons for testing purposes.

- MixInstruct is the first large-scale dataset consisting of responses from 11 popular open-source LLMs on the instruction-following dataset. Each split of train/val/test contains 100k/5k/5k examples.

- MixInstruct instructions are collected from 4 well-known instruction datasets: Alpaca-GPT4, Dolly-15k, GPT4All-LAION and ShareGPT. The ground-truth outputs come from either ChatGPT, GPT-4 or human annotations.

- MixInstruct is evaluated both with automatic metrics, including BLEURT, BARTScore, BERTScore, etc., and with ChatGPT. We provide 4771 examples on the test split that are evaluated by ChatGPT through pairwise comparison.

- Code to construct the dataset: get_mixinstruct.py

- HuggingFace 🤗 Dataset link

See more details in train_ranker.sh

Please follow the guide in the script to train the ranker.

Here are some explanations for the script parameters:

Changing the torchrun cmd

TORCHRUN_CMD=<your torchrun cmd path>

Normally, it's just torchrun with the proper conda env activated.

Changing the dataset

dataset="<your dataset>"

Changing the ranker backbone

backbone_type="deberta" # "deberta" or "roberta"

backbone_name="microsoft/deberta-v3-large" # "microsoft/deberta-v3-large" or "roberta-large"

Changing the ranker type

ranker="PairRanker" # "PairRanker" or "Summaranker" or "SimCLS"

Filter the candidates used

candidate_model="flan-t5-xxl" # or "alpaca-native"

candidate_decoding_method="top_p_sampling"

n_candidates=15 # number of candidates to generate

using_metrics="rouge1,rouge2,rougeLsum,bleu" # metrics used to train the signal

Do Training or Inference

do_inference=False # training

do_inference=True # inference

When doing inference, you can change inference_mode to bubble or full to select a different pairwise inference mode.
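The two mode names suggest a trade-off between comparing all candidate pairs and making a single pass over the candidates. The sketch below is one plausible reading of that trade-off, not the repository's implementation: `select_best_full`, `select_best_bubble` and the toy comparator `longer_is_better` are hypothetical names introduced here, and the actual script may define the modes differently.

```python
from typing import Callable, List, Tuple

Comparator = Callable[[str, str], bool]  # True when the first text is better


def select_best_full(candidates: List[str], better: Comparator) -> Tuple[int, int]:
    """Compare every ordered pair; return (best index, number of comparisons)."""
    n = len(candidates)
    wins = [0] * n
    calls = 0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            calls += 1
            if better(candidates[i], candidates[j]):
                wins[i] += 1
    best = max(range(n), key=lambda i: wins[i])
    return best, calls


def select_best_bubble(candidates: List[str], better: Comparator) -> Tuple[int, int]:
    """Single pass keeping the current winner; return (best index, comparisons)."""
    best = 0
    calls = 0
    for j in range(1, len(candidates)):
        calls += 1
        if better(candidates[j], candidates[best]):
            best = j
    return best, calls


def longer_is_better(a: str, b: str) -> bool:
    # Toy stand-in for a learned pairwise ranker (assumption for the demo).
    return len(a) > len(b)


cands = ["ok", "a longer answer", "mid one"]
full_best, full_calls = select_best_full(cands, longer_is_better)
bubble_best, bubble_calls = select_best_bubble(cands, longer_is_better)
print(full_best, full_calls, bubble_best, bubble_calls)
```

Under this reading, the full pass costs N(N-1) comparisons for N candidates while the single pass costs N-1, at the price of depending on candidate order when the comparator is noisy.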

Limit the data size used for training, dev and test

max_train_data_size=-1 # -1 means no limit

max_eval_data_size=-1 # -1 means no limit

max_predict_data_size=-1 # -1 means no limit

Do inference on dataset A with a ranker trained on dataset B

dataset=<A>

checkpoint_trained_dataset=<B>

do_inference=True

Toolkits

- LLM-Gen: A simple generation script used to get large-scale responses from various large language models.

Model checkpoints

- PairRanker checkpoint fine-tuned on DeBERTa-v3-Large (304m)

- GenFuser checkpoint fine-tuned on Flan-T5-XL (3b)

@inproceedings{llm-blender-2023,

title = "LLM-Blender: Ensembling Large Language Models with Pairwise Comparison and Generative Fusion",

author = "Jiang, Dongfu and Ren, Xiang and Lin, Bill Yuchen",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL 2023)",

year = "2023"

}