TL;DR: Transformer model for motion prediction that incorporates two types of redundancy reduction.

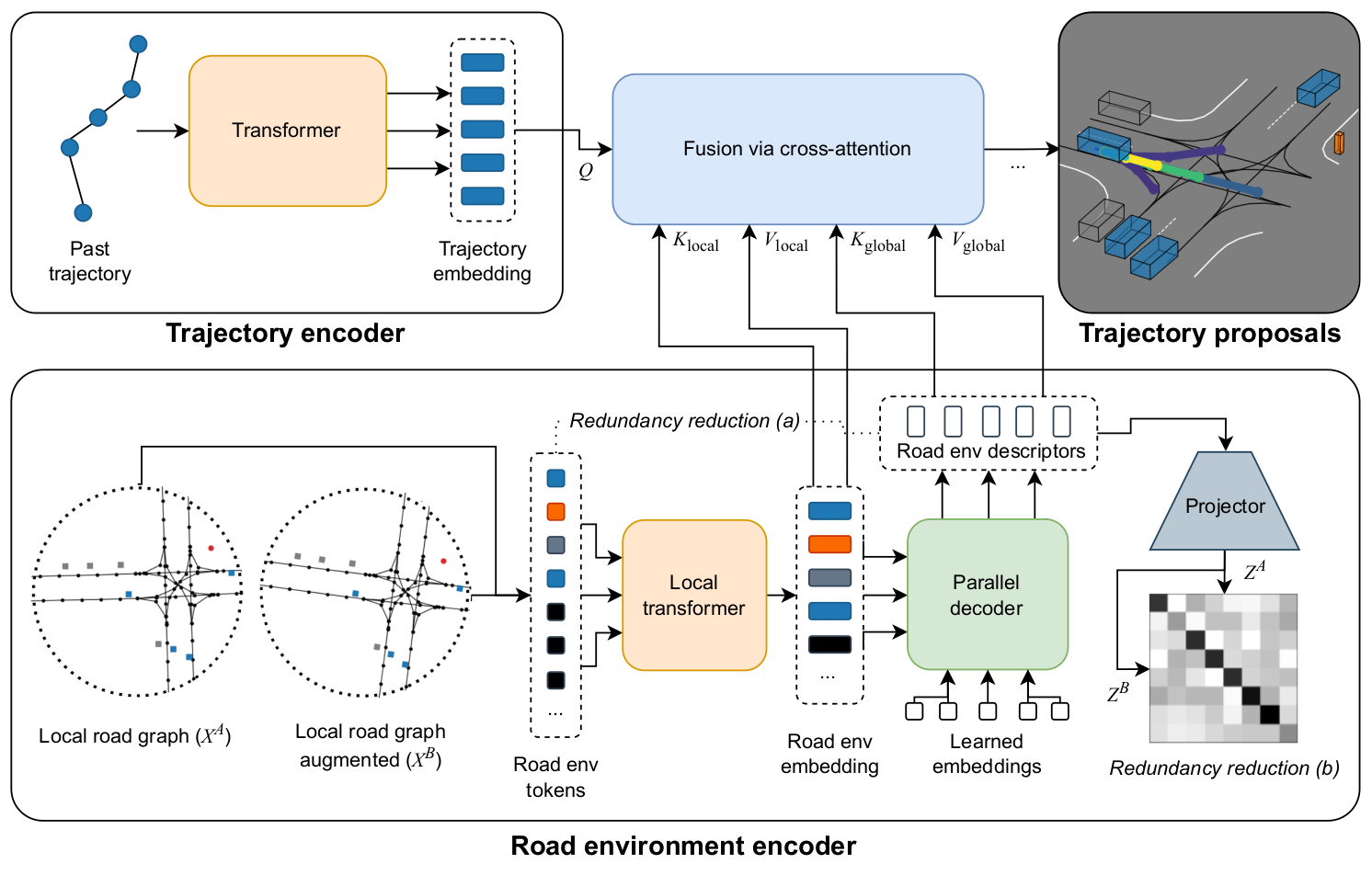

RedMotion model. Our model consists of two encoders. The trajectory encoder generates an embedding for the past trajectory of the current agent. The road environment encoder generates sets of local and global road environment embeddings as context. All embeddings are fused via cross-attention to yield trajectory proposals per agent.
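The fusion step in the caption can be illustrated with a minimal sketch, which is not the repository's implementation. It assumes a single attention head, no learned projections, and toy 4-dimensional embeddings. The names `cross_attention`, `trajectory_embedding`, `local_env_embeddings`, and `global_env_embeddings` are hypothetical and chosen only for this example. The trajectory embedding acts as the query, and the local and global road environment embeddings are concatenated to form the context.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, context):
    """Single-head scaled dot-product cross-attention.

    Simplification (assumption): keys and values are the raw context
    vectors; a real model would apply learned linear projections first.
    """
    dim = len(query)
    # Similarity of the query to every context vector, scaled by sqrt(dim).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in context
    ]
    weights = softmax(scores)
    # Weighted sum of the context vectors gives the fused embedding.
    fused = [
        sum(w * vec[i] for w, vec in zip(weights, context))
        for i in range(dim)
    ]
    return fused, weights


# Toy inputs (hypothetical values, for illustration only).
trajectory_embedding = [1.0, 0.0, 0.0, 0.0]
local_env_embeddings = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
global_env_embeddings = [[0.0, 0.0, 1.0, 0.0]]

# Local and global road environment embeddings form one context set.
context = local_env_embeddings + global_env_embeddings
fused, weights = cross_attention(trajectory_embedding, context)
```

Here the first local embedding points in the same direction as the trajectory embedding, so it receives the largest attention weight. In a full model, the fused embedding would then be decoded into trajectory proposals.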

This Colab notebook shows how to create a dataset, run inference, and visualize the predicted trajectories.

Register and download the dataset (version 1.0) from here. Then clone this repo and run the prerender script as described in its README.

The local attention (Beltagy et al., 2020) and cross-attention (Chen et al., 2021) implementations are from lucidrains' vit_pytorch library.