This forked version is for GENEA Challenge 2022 dataset. Code is modified to be compatible with the joint names in the dataset.

A library for using motion capture data for machine learning.

This library is currently highly experimental and everything is subject to change :)

- Mocap Data Parsers and Writers

- Common mocap pre-processing algorithms

- Feature extraction library

- Visualization tools

- Read BVH Files

- Write BVH Files

- Pre-processing pipelines

- Supporting

scikit-learnAPI - Convert data representations

- Euler angles to positions

- Euler angles to exponential maps

- Exponential maps to euler angles

- Body-oriented global translation and rotation calculation with inverse tranform

- Root-centric position normalizer with inverse tranform

- Standard scaler

- Joint selectors

- Supporting

- Visualization tools

- Annotations

- Foot/ground contact detector

numpy scipy joblib scikit-learn pandas jupyter matplotlib

See the demo notebooks for processing and visualization examples:

- bvh2features: downsampling, root-centric transformation, joint-selection and data-augmentation (mirroring)

- features2bvh: converting features back to bvh format

- Position: bvh to joint positions

- PlayMocap: Visualize in HTML

from pymo.parsers import BVHParser

import pymo.viz_tools

parser = BVHParser()

parsed_data = parser.parse('data/AV_8Walk_Meredith_HVHA_Rep1.bvh')import pymo.viz_tools

viz_tools.print_skel(parsed_data)Will print the skeleton hierarchy:

- Hips (None)

| | - RightUpLeg (Hips)

| | - RightLeg (RightUpLeg)

| | - RightFoot (RightLeg)

| | - RightToeBase (RightFoot)

| | - RightToeBase_Nub (RightToeBase)

| - LeftUpLeg (Hips)

| - LeftLeg (LeftUpLeg)

| - LeftFoot (LeftLeg)

| - LeftToeBase (LeftFoot)

| - LeftToeBase_Nub (LeftToeBase)

- Spine (Hips)

| | - RightShoulder (Spine)

| | - RightArm (RightShoulder)

| | - RightForeArm (RightArm)

| | - RightHand (RightForeArm)

| | | - RightHand_End (RightHand)

| | | - RightHand_End_Nub (RightHand_End)

| | - RightHandThumb1 (RightHand)

| | - RightHandThumb1_Nub (RightHandThumb1)

| - LeftShoulder (Spine)

| - LeftArm (LeftShoulder)

| - LeftForeArm (LeftArm)

| - LeftHand (LeftForeArm)

| | - LeftHand_End (LeftHand)

| | - LeftHand_End_Nub (LeftHand_End)

| - LeftHandThumb1 (LeftHand)

| - LeftHandThumb1_Nub (LeftHandThumb1)

- Head (Spine)

- Head_Nub (Head)

data_pipe = Pipeline([

('rcpn', RootCentricPositionNormalizer()),

('delta', RootTransformer('abdolute_translation_deltas')),

('const', ConstantsRemover()),

('np', Numpyfier()),

('down', DownSampler(2)),

('stdscale', ListStandardScaler())

])

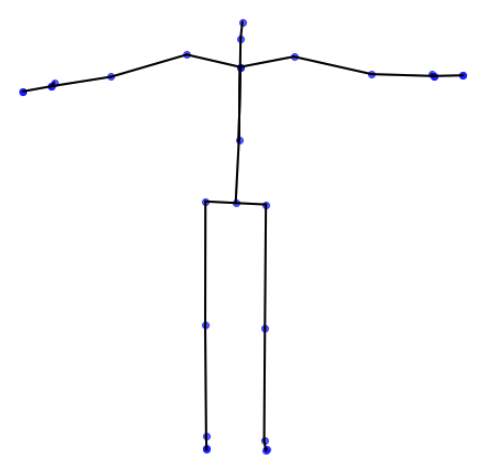

piped_data = data_pipe.fit_transform(train_X)mp = MocapParameterizer('positions')

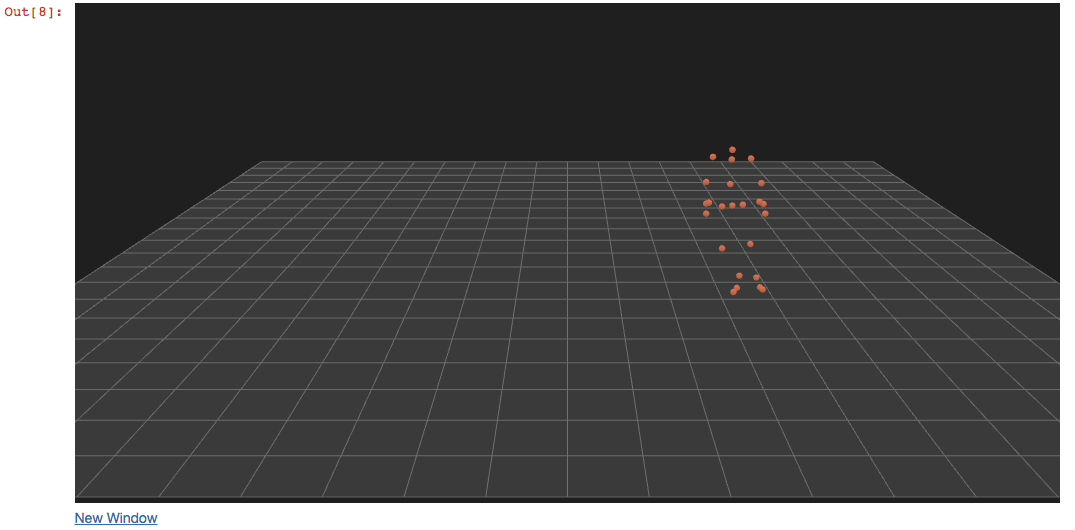

positions = mp.fit_transform([parsed_data])draw_stickfigure(positions[0], frame=10)nb_play_mocap(positions[0], 'pos',

scale=2, camera_z=800, frame_time=1/120,

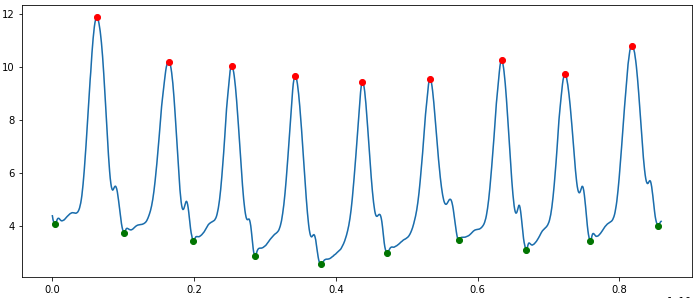

base_url='../pymo/mocapplayer/playBuffer.html')from pymo.features import *

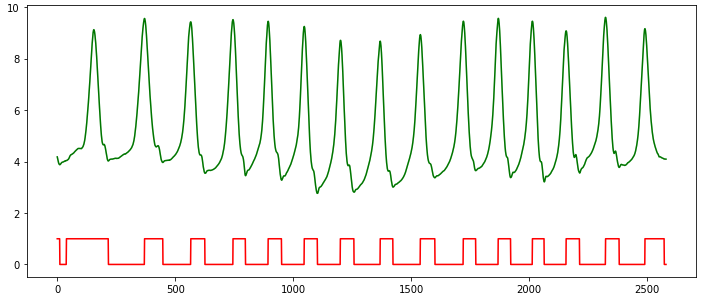

plot_foot_up_down(positions[0], 'RightFoot_Yposition')signal = create_foot_contact_signal(pos_data[3], 'RightFoot_Yposition')

plt.figure(figsize=(12,5))

plt.plot(signal, 'r')

plt.plot(pos_data[3].values['RightFoot_Yposition'].values, 'g')For any questions, feedback, and bug reports, please use the Github Issues.

Created by Omid Alemi

Modified by Simon Alexanderson

This code is available under the MIT license.