A simple SVM from scratch (NumPy components only, imperfect implementation)

We posit that we can separate binary classes

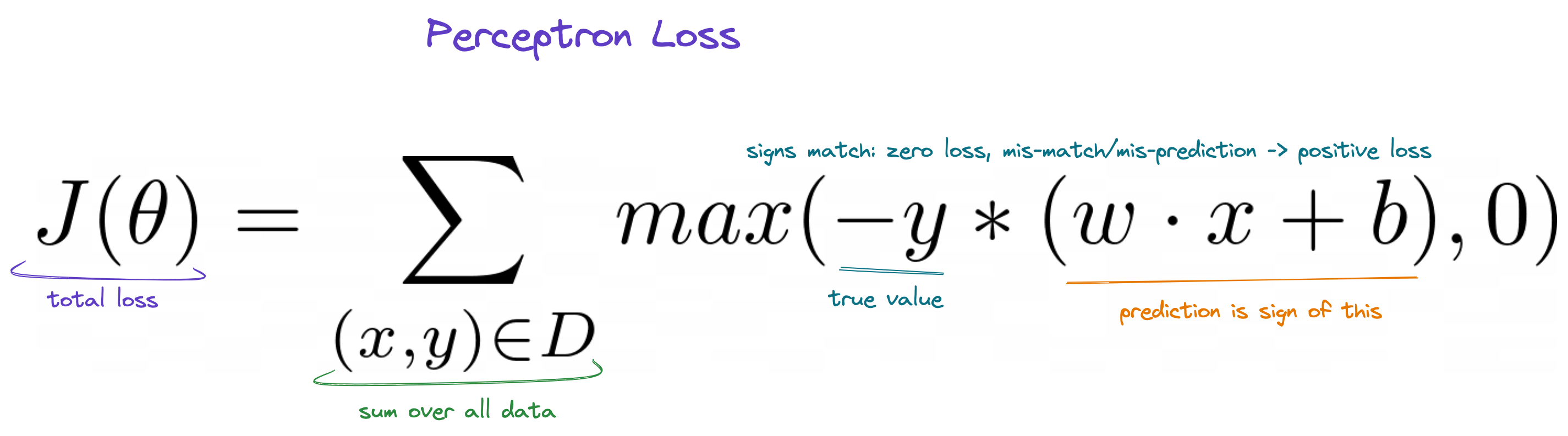

The perceptron learns

Gradient descent.

Same as perceptron:

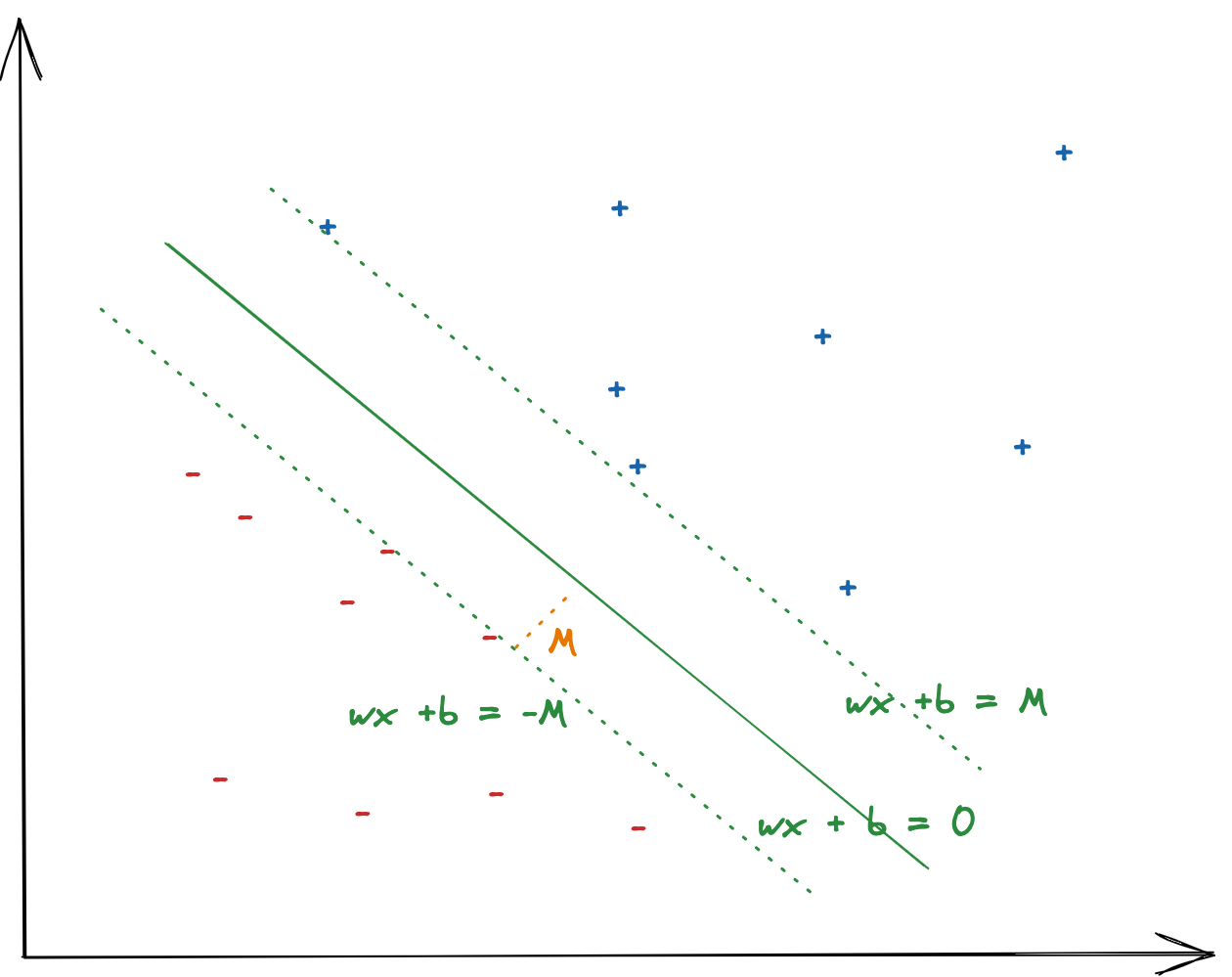

This is often given in discussions of SVMs, but it stems from the formula for the distance between two parallel lines. The margin of an SVM can be modelled as two lines parallel to the decision boundary, where the decision boundary is given by

Where here

The formula for the distance between two parallel lines is proven here. Skipping the steps of the linked proof, we arrive at

Thus to maximize this distance, we can minimize

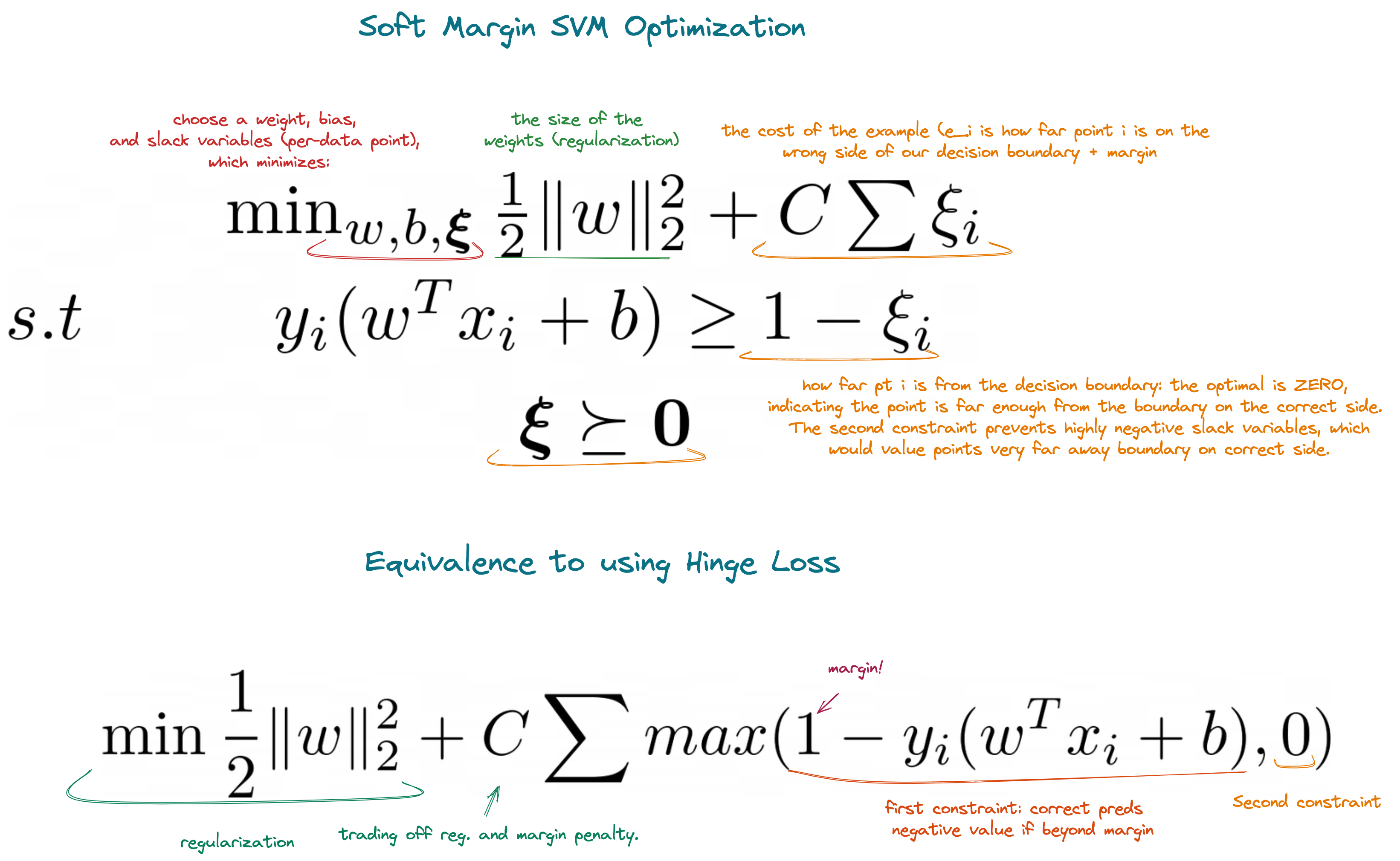
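The margin argument above can be checked numerically. The sketch below assumes the usual convention, which may differ from the notation used here: the decision boundary is w·x + b = 0 and the two margin lines are w·x + b = +1 and w·x + b = -1, so the distance between them should be 2 / ||w||. The values of `w` and `b` and the helper `point_on_line` are hypothetical, chosen only for illustration.

```python
import numpy as np

# Hypothetical weights and bias, chosen only for illustration.
w = np.array([3.0, 4.0])
b = -2.0


def point_on_line(w, b, c):
    """Return the point on the line w.x + b = c that is closest to the origin."""
    return (c - b) * w / np.dot(w, w)


# One point on each assumed margin line: w.x + b = +1 and w.x + b = -1.
p_plus = point_on_line(w, b, 1.0)
p_minus = point_on_line(w, b, -1.0)

# Both points lie along the normal direction w, so their separation
# is the perpendicular distance between the two parallel lines.
measured = np.linalg.norm(p_plus - p_minus)
closed_form = 2.0 / np.linalg.norm(w)

print(measured, closed_form)
```

Since the width is 2 / ||w||, making the margin wider is the same as making ||w|| smaller, which is why the objective is usually written in terms of ||w|| (or its square, for a smoother gradient).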

Finally, if our loss is:

Then our gradient w.r.t
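As a rough illustration of the loss and its gradient, the sketch below assumes the standard soft-margin objective, 0.5 * ||w||^2 + C * mean(max(0, 1 - y * (w·x + b))), with labels in {-1, +1}. This is an assumption and may not match the exact loss used in svm.py. The function names `hinge_loss` and `hinge_subgradient`, the parameter `C`, and the toy data are all hypothetical.

```python
import numpy as np


def hinge_loss(w, b, X, y, C=1.0):
    """Assumed objective: 0.5 * ||w||^2 + C * mean hinge loss."""
    margins = y * (X @ w + b)
    return 0.5 * np.dot(w, w) + C * np.mean(np.maximum(0.0, 1.0 - margins))


def hinge_subgradient(w, b, X, y, C=1.0):
    """Subgradient of the assumed objective with respect to w and b."""
    margins = y * (X @ w + b)
    # Only samples inside the margin (or misclassified) contribute
    # to the hinge term; the rest have zero hinge gradient.
    active = margins < 1.0
    grad_w = w - C * (X[active].T @ y[active]) / len(y)
    grad_b = -C * np.sum(y[active]) / len(y)
    return grad_w, grad_b


# Toy data, for illustration only; labels must be -1 or +1.
X = np.array([[2.0, 1.0], [1.0, 3.0], [-1.0, -2.0], [-3.0, -1.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w = np.array([0.1, -0.2])
b = 0.05

loss = hinge_loss(w, b, X, y)
grad_w, grad_b = hinge_subgradient(w, b, X, y)
print(loss, grad_w, grad_b)
```

The hinge term is not differentiable exactly at a margin of 1, so this is a subgradient rather than a true gradient; the sketch simply treats those points as inactive.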

Gradient descent with known convergence: pick a learning rate of 5e-4 and keep updating the weights until the maximum number of iterations has passed or we are past the tolerance threshold. See fit in svm.py
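The update loop described above might look roughly like the following. This is a minimal sketch, not the fit from svm.py: the name `fit_linear_svm`, the parameters `max_iter`, `tol` and `C`, and the stopping rule (stop when the loss changes by less than `tol` between iterations) are assumptions, as is the soft-margin hinge objective it minimizes. Only the learning rate of 5e-4 comes from the text.

```python
import numpy as np


def fit_linear_svm(X, y, lr=5e-4, max_iter=10_000, tol=1e-8, C=1.0):
    """Subgradient descent on 0.5 * ||w||^2 + C * mean hinge loss.

    Labels in y are assumed to be -1 or +1.
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    prev_loss = np.inf
    for _ in range(max_iter):
        margins = y * (X @ w + b)
        loss = 0.5 * np.dot(w, w) + C * np.mean(np.maximum(0.0, 1.0 - margins))
        # Assumed stopping rule: the loss has stopped changing.
        if abs(prev_loss - loss) < tol:
            break
        prev_loss = loss
        # Samples inside the margin drive the hinge part of the update.
        active = margins < 1.0
        grad_w = w - C * (X[active].T @ y[active]) / n
        grad_b = -C * np.sum(y[active]) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Linearly separable toy data, for illustration only.
X = np.array([[2.0, 2.0], [3.0, 2.0], [2.0, 3.0], [3.0, 3.0],
              [-2.0, -2.0], [-3.0, -2.0], [-2.0, -3.0], [-3.0, -3.0]])
y = np.array([1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0])

w, b = fit_linear_svm(X, y)
predictions = np.sign(X @ w + b)
print(w, b, predictions)
```

With a fixed step size, subgradient descent tends to hover near the optimum rather than settle on it exactly, so the final weights can depend on `max_iter` and `tol`.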

This mostly seems to work, but it doesn't consistently converge to exactly the same values as LinearSVC. I suspect I am doing something slightly wrong in the gradient update or the stopping criterion. Still, repeated runs make it clear that the learned margin is much larger than chance would give among decision functions that partition the data.