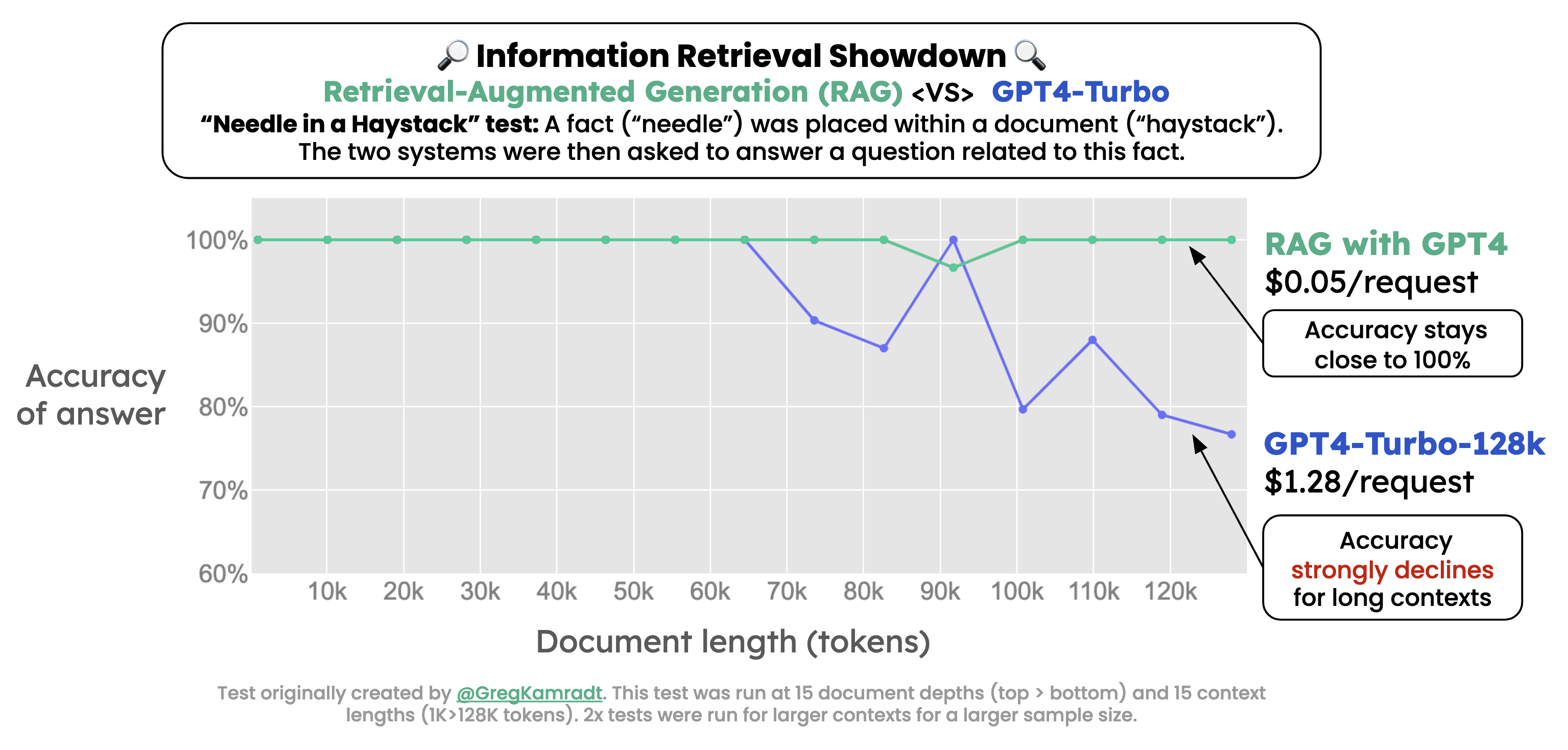

This is an extension of the "Needle in a Haystack" test created by Greg Kamradt. The goal is to compare GPT4-Turbo, with its 128k-token context length, against a RAG system based on GPT4 for context retrieval. The result is clear: 🏆 𝗥𝗔𝗚 𝘄𝗶𝗻𝘀 🏆 Its edge is most visible at the longest document sizes.

The Test

- Place a random fact or statement (the 'needle') in the middle of a long context window

- Ask the model to retrieve this statement

- Iterate over various document depths (where the needle is placed) and context lengths to measure performance
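The steps above can be sketched in Python. This is a minimal illustration, not the original test code: the function names `insert_needle` and `build_test_cases` are hypothetical, and whitespace-separated words stand in for model tokens (a real run would count tokens with the model's tokenizer). The model call and scoring are left out.

```python
from itertools import product


def insert_needle(haystack: str, needle: str,
                  context_length: int, depth_percent: float) -> str:
    """Trim the haystack to the budget and place the needle at the given depth.

    Assumption: length is measured in whitespace-separated words,
    used here as a rough stand-in for tokens.
    """
    needle_words = needle.split()
    # Leave room for the needle so the final context stays within the budget.
    budget = max(context_length - len(needle_words), 0)
    words = haystack.split()[:budget]
    # 0 places the needle at the start, 100 at the end.
    insert_at = int(len(words) * depth_percent / 100)
    return " ".join(words[:insert_at] + needle_words + words[insert_at:])


def build_test_cases(haystack: str, needle: str,
                     context_lengths: list[int],
                     document_depth_percents: list[int]) -> list[dict]:
    """Build one context per (context length, depth) combination."""
    cases = []
    for length, depth in product(context_lengths, document_depth_percents):
        cases.append({
            "context_length": length,
            "depth_percent": depth,
            "context": insert_needle(haystack, needle, length, depth),
        })
    return cases
```

Each resulting case would then be sent to the model together with the retrieval question, and the answer compared against the needle.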

The key pieces:

- needle: The random fact or statement you'll place in your context

- question_to_ask: The question you'll ask your model, which will prompt it to find your needle/statement

- results_version: Set to 1. If you'd like to run this test multiple times for more data points, change this value to your version number

- context_lengths (List[int]): The list of various context lengths you'll test. In the original test this was set to 15 evenly spaced iterations between 1K and 128K (the max)

- document_depth_percents (List[int]): The list of various depths at which to place your random fact

- model_to_test: The original test chose gpt-4-1106-preview. You can easily change this to any chat model from OpenAI, or any other model with a bit of code adjustment
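As a rough sketch, the parameters above could be grouped into a single configuration object. The class name `NeedleTestConfig` and the helper `evenly_spaced` are hypothetical, the needle and question are left as placeholders to fill in, and the depth values are illustrative only since the text does not specify them. The context lengths assume that "1K and 128K" means 1,000 and 128,000 tokens.

```python
from dataclasses import dataclass, field


def evenly_spaced(start: int, stop: int, num: int) -> list[int]:
    """Return `num` integers evenly spaced from start to stop, inclusive."""
    if num < 2:
        return [start]
    step = (stop - start) / (num - 1)
    return [round(start + i * step) for i in range(num)]


@dataclass
class NeedleTestConfig:
    # Placeholders: supply your own needle and the question that targets it.
    needle: str = "<your random fact or statement>"
    question_to_ask: str = "<question that prompts retrieval of the needle>"
    # Bump this to collect additional runs for more data points.
    results_version: int = 1
    # 15 evenly spaced lengths between 1K and 128K, as described in the text
    # (assumed here to mean 1,000 and 128,000 tokens).
    context_lengths: list[int] = field(
        default_factory=lambda: evenly_spaced(1_000, 128_000, 15)
    )
    # Illustrative depths only; the text does not state the values used.
    document_depth_percents: list[int] = field(
        default_factory=lambda: evenly_spaced(0, 100, 11)
    )
    model_to_test: str = "gpt-4-1106-preview"


config = NeedleTestConfig()
```

Keeping the lists as fields makes it easy to run a smaller grid while debugging, for example `NeedleTestConfig(context_lengths=[1_000, 8_000])`.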

(Made by pivoting the results, averaging the multiple runs, and adding labels in Google Slides)
