| Project Page | LLMLingua Paper | LongLLMLingua Paper | HF Space Demo |


- 🖥 You can find the slides from EMNLP '23 in Session 5 and BoF-6;

- 📚 We launched a blog post showcasing the benefits of prompt compression in RAG and long-context scenarios. The script for the example is available at this link;

- 🎈 We launched a project page showcasing real-world case studies, including RAG, Online Meetings, CoT, and Code;

- 👨🦯 We have launched a series of examples in the './examples' folder, which include RAG, Online Meeting, CoT, Code, and RAG using LlamaIndex;

- 👾 LongLLMLingua has been incorporated into the LlamaIndex pipeline, which is a widely used RAG framework.

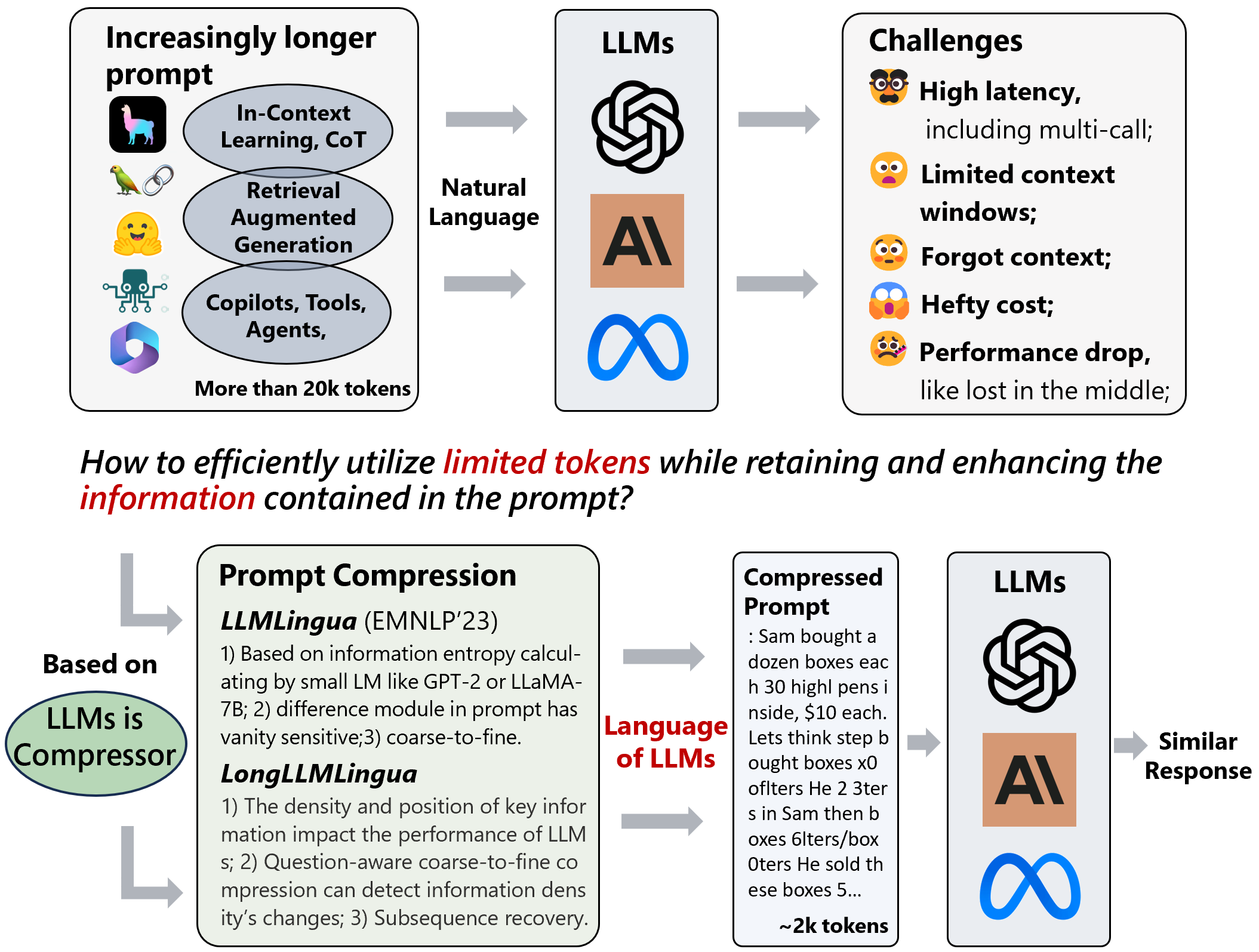

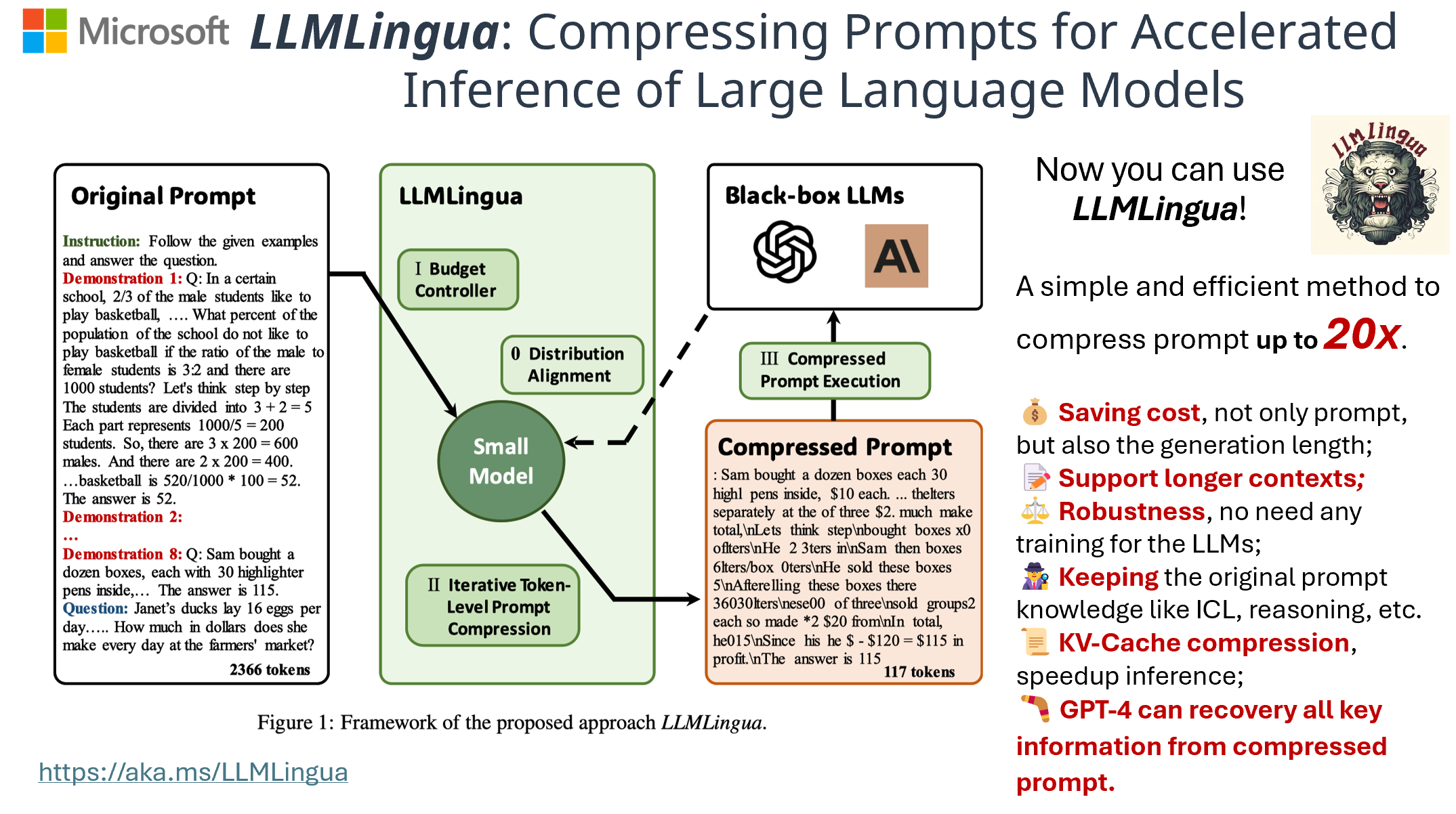

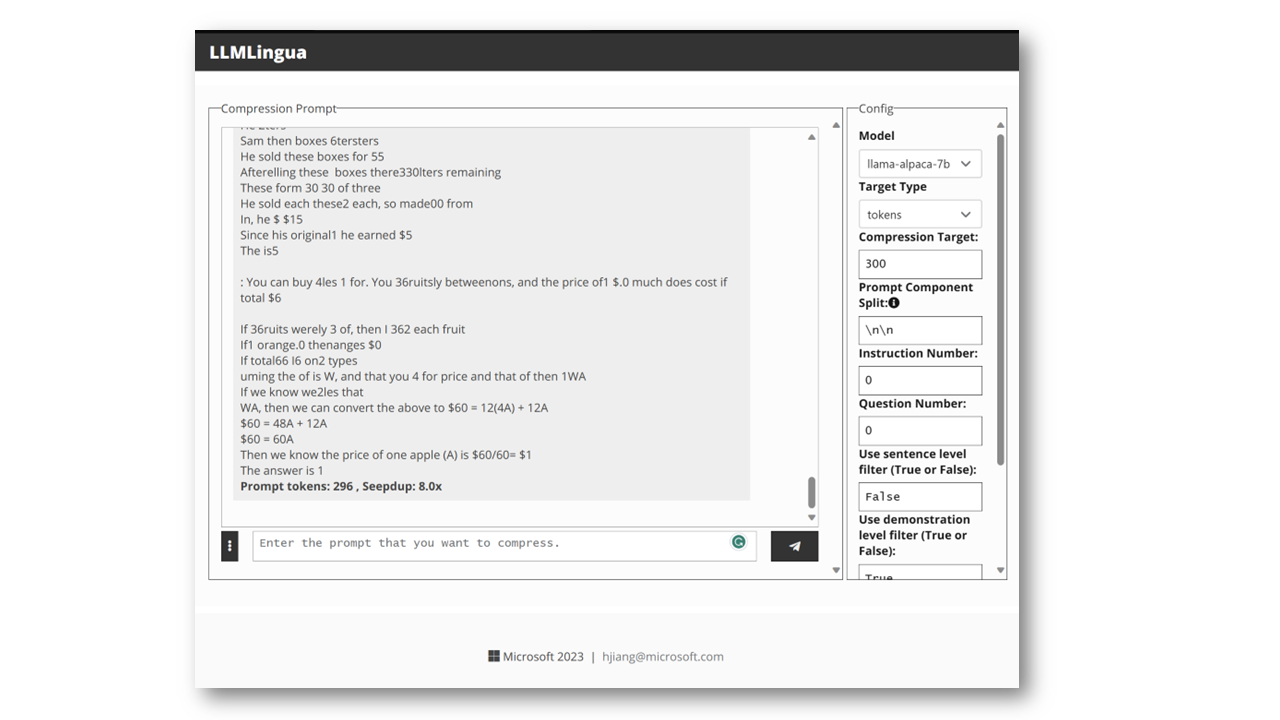

LLMLingua uses a well-trained small language model after alignment, such as GPT2-small or LLaMA-7B, to detect unimportant tokens in the prompt. The compressed prompt can then be used for inference with black-box LLMs, achieving up to 20x compression with minimal performance loss.
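To make the idea of "detecting unimportant tokens with a small language model" concrete, here is a toy sketch. It is **not** LLMLingua's implementation. A hypothetical add-one-smoothed unigram model stands in for the small LM, and each token is scored by its surprisal (-log p). The most surprising tokens are kept up to a `target_token` budget, in their original order. The function names `surprisal` and `compress_tokens` are illustrative only.

```python
import math
from collections import Counter


def surprisal(tokens: list[str], corpus: list[str]) -> list[float]:
    """Score each token by -log p(token) under a toy add-one-smoothed unigram model.

    The unigram model is a hypothetical stand-in for the small language model:
    a higher score means the token is less predictable and is treated as more informative.
    """
    counts = Counter(corpus)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # reserve one slot for unseen tokens
    return [-math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens]


def compress_tokens(tokens: list[str], corpus: list[str], target_token: int) -> list[str]:
    """Keep the `target_token` most surprising tokens, preserving their original order."""
    scores = surprisal(tokens, corpus)
    # Rank positions by score; sorted() is stable even with reverse=True,
    # so tied tokens keep their left-to-right order.
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:target_token])  # restore original token order
    return [tokens[i] for i in kept]


if __name__ == "__main__":
    corpus = "the the the the a a of of is is".split()
    prompt = "the cat sat on the mat".split()
    print(compress_tokens(prompt, corpus, target_token=4))
    # → ['cat', 'sat', 'on', 'mat']
```

Frequent, predictable tokens such as "the" receive low surprisal and are dropped first. A real small LM would also condition on the preceding context rather than scoring each token in isolation.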

LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models (EMNLP 2023)

Huiqiang Jiang, Qianhui Wu, Chin-Yew Lin, Yuqing Yang and Lili Qiu

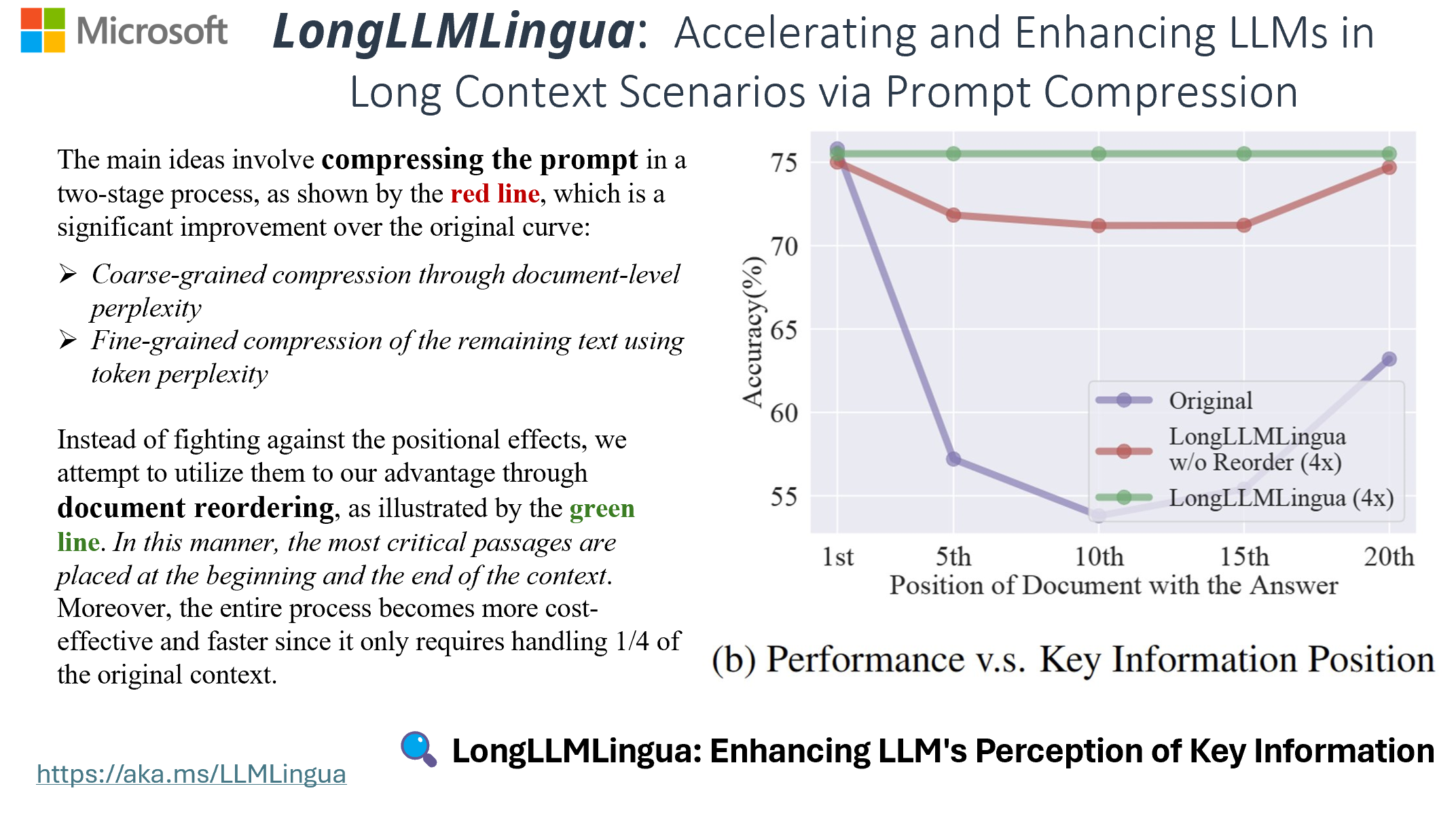

LongLLMLingua is a method that enhances LLMs' ability to perceive key information in long-context scenarios using prompt compression, achieving up to $28.5 in cost savings per 1,000 samples while also improving performance.

LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression (Under Review)

Huiqiang Jiang, Qianhui Wu, Xufang Luo, Dongsheng Li, Chin-Yew Lin, Yuqing Yang and Lili Qiu

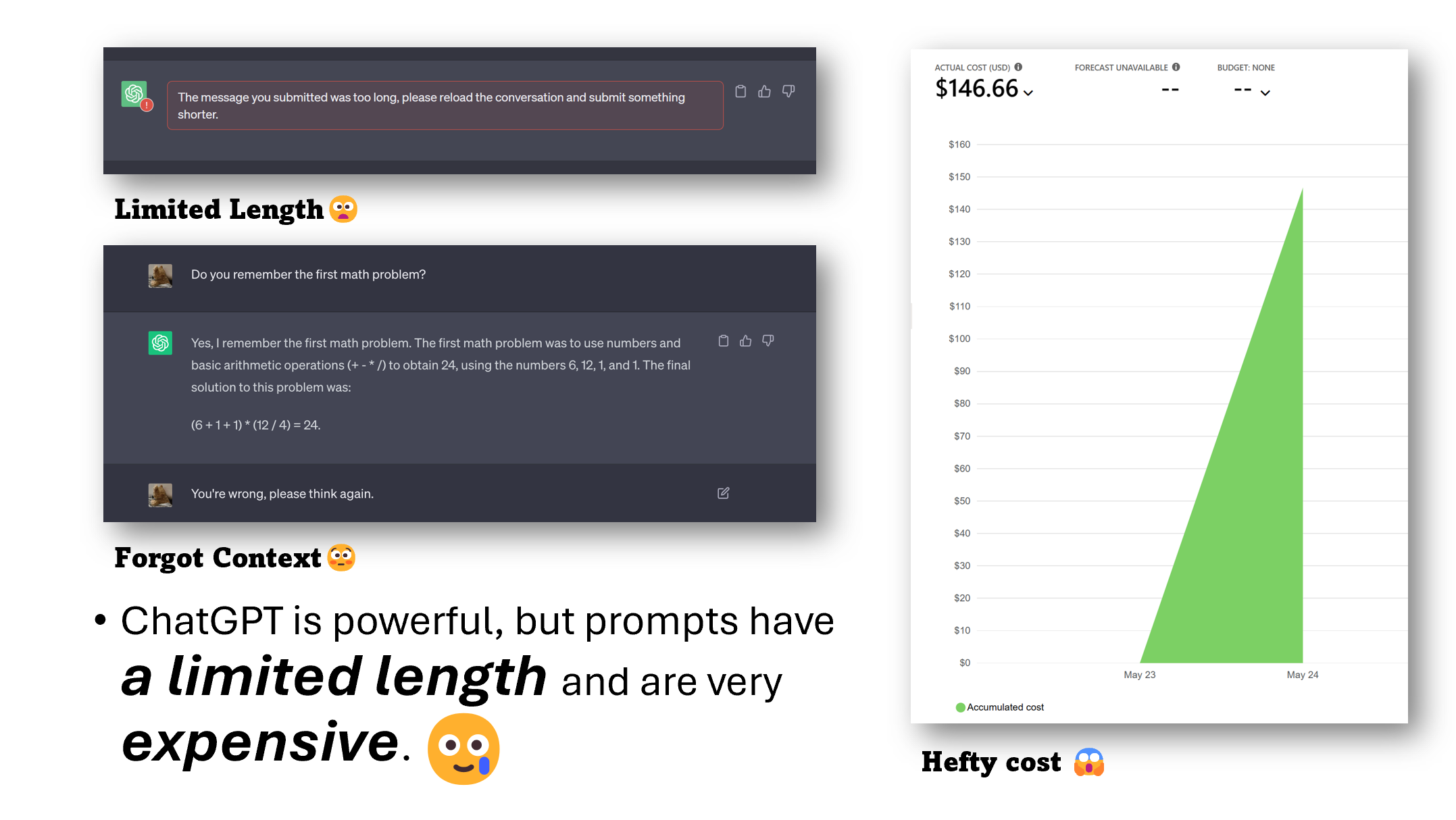

- Have you ever tried to input a long text and ask ChatGPT to summarize it, only to be told that it exceeds the token limit?

- Have you ever spent a lot of time fine-tuning the personality of ChatGPT, only to find that it forgets the previous instructions after a few rounds of dialogue?

- Have you ever used the GPT-3.5/4 API for experiments and gotten good results, only to receive a huge bill a few days later?

Large language models, such as ChatGPT and GPT-4, impress us with their amazing generalization and reasoning abilities, but they also come with some drawbacks, such as the prompt length limit and the prompt-based pricing scheme.

Now you can use LLMLingua & LongLLMLingua!

A simple and efficient method to compress prompts by up to 20x.

- 💰 Cost savings: reduces not only the prompt length but also the generation length;

- 📝 Longer-context support: increases the density of key information in the prompt and mitigates the "lost in the middle" problem, thereby improving overall performance;

- ⚖️ Robustness: requires no additional training of the LLMs;

- 🕵️ Knowledge retention: preserves the knowledge in the original prompt, such as in-context learning (ICL) and reasoning;

- 📜 KV-cache compression: speeds up inference;

- 🪃 GPT-4 can recover all key information from the compressed prompt.

If you find this repo helpful, please cite the following papers:

```bibtex
@inproceedings{jiang-etal-2023-llmlingua,
    title = "LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models",
    author = "Huiqiang Jiang and Qianhui Wu and Chin-Yew Lin and Yuqing Yang and Lili Qiu",
    booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2023",
    publisher = "Association for Computational Linguistics",
    url = "https://arxiv.org/abs/2310.05736",
}

@article{jiang-etal-2023-longllmlingua,
    title = "LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression",
    author = "Huiqiang Jiang and Qianhui Wu and Xufang Luo and Dongsheng Li and Chin-Yew Lin and Yuqing Yang and Lili Qiu",
    url = "https://arxiv.org/abs/2310.06839",
    journal = "ArXiv preprint",
    volume = "abs/2310.06839",
    year = "2023",
}
```

Install LLMLingua:

```bash
pip install llmlingua
```

Then you can use LLMLingua to compress your prompt:

```python
from llmlingua import PromptCompressor

llm_lingua = PromptCompressor()

# `prompt` is the long prompt string you want to compress.
compressed_prompt = llm_lingua.compress_prompt(prompt, instruction="", question="", target_token=200)

# > {'compressed_prompt': 'Question: Sam bought a dozen boxes, each with 30 highlighter pens inside, for $10 each box. He reanged five of boxes into packages of sixlters each and sold them $3 per. He sold the rest theters separately at the of three pens $2. How much did make in total, dollars?\nLets think step step\nSam bought 1 boxes x00 oflters.\nHe bought 12 * 300ters in total\nSam then took 5 boxes 6ters0ters.\nHe sold these boxes for 5 *5\nAfterelling these boxes there were 3030 highlighters remaining.\nThese form 330 / 3 = 110 groups of three pens.\nHe sold each of these groups for $2 each, so made 110 * 2 = $220 from them.\nIn total, then, he earned $220 + $15 = $235.\nSince his original cost was $120, he earned $235 - $120 = $115 in profit.\nThe answer is 115',
#  'origin_tokens': 2365,
#  'compressed_tokens': 211,
#  'ratio': '11.2x',
#  'saving': ', Saving $0.1 in GPT-4.'}
```
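The `ratio` and `saving` fields in the output above follow from simple arithmetic on the token counts. The sketch below reproduces them under stated assumptions. `compression_stats` is a hypothetical helper, not part of the llmlingua API. The default price of $0.06 per 1,000 prompt tokens is an assumed parameter, not a value read from the library. Any rate from roughly $0.024 to $0.069 per 1K tokens rounds to the same $0.1.

```python
def compression_stats(origin_tokens: int, compressed_tokens: int,
                      usd_per_1k_tokens: float = 0.06) -> dict:
    """Hypothetical helper: compression ratio and estimated prompt-cost saving.

    `usd_per_1k_tokens` is an ASSUMED price per 1,000 prompt tokens,
    not a value taken from the llmlingua library.
    """
    ratio = origin_tokens / compressed_tokens            # e.g. 2365 / 211 ≈ 11.21
    saved_tokens = origin_tokens - compressed_tokens     # tokens no longer billed
    saving_usd = saved_tokens * usd_per_1k_tokens / 1000
    return {
        "ratio": f"{ratio:.1f}x",
        "saved_tokens": saved_tokens,
        "saving_usd": saving_usd,
    }


stats = compression_stats(2365, 211)
print(stats["ratio"], stats["saved_tokens"], f"${stats['saving_usd']:.1f}")
# → 11.2x 2154 $0.1
```

The saving scales linearly with the number of removed tokens, so it grows with every call that reuses a long prompt.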

```python
# Or use a quantized model, such as TheBloke/Llama-2-7b-Chat-GPTQ, which needs less than 8 GB of GPU memory.
# Before that, you need to run `pip install optimum auto-gptq`.
from llmlingua import PromptCompressor

llm_lingua = PromptCompressor("TheBloke/Llama-2-7b-Chat-GPTQ", model_config={"revision": "main"})
```

See the examples to learn how to use LLMLingua and LongLLMLingua in practical scenarios, such as RAG, Online Meeting, CoT, Code, and RAG using LlamaIndex. Additionally, see the documentation for more recommendations on using LLMLingua effectively.

See Transparency_FAQ.md.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.