FSGAN

- This is the official PyTorch implementation for our paper "Deep Facial Synthesis: A New Challenge".

- This project achieves translation between face photos and artistic portrait drawings using a GAN-based model. You may find useful information in the Training/Test Tips section.

- 📕 Find our paper on arXiv.

- ✨ Try our online Colab demo to generate your own facial sketches.

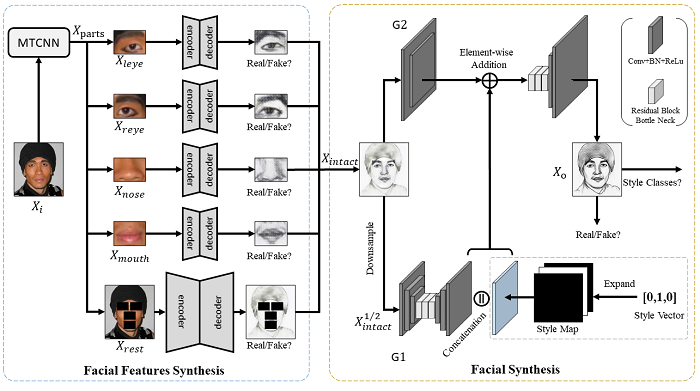

Our Proposed Framework

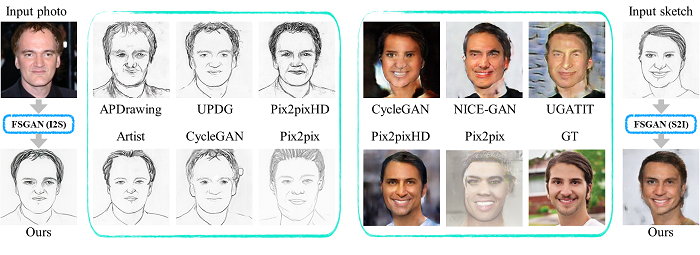

Sample Results

Prerequisites

- Ubuntu >= 18.04

- Python >= 3.6

- A GPU with at least 32 GB of memory (at present, the model can only be trained on such a GPU)
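The Python and GPU requirements above can be checked before training with a short script. This is a minimal sketch, not part of the repository: the function names `python_ok` and `gpu_memory_gib` are hypothetical, and the script only reports the GPU memory that PyTorch sees rather than enforcing a threshold, since the reported total is usually slightly below the nominal card size.

```python
import sys

# Minimum Python version taken from the Prerequisites list.
MIN_PYTHON = (3, 6)


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def gpu_memory_gib(device_index=0):
    """Return the total memory of a CUDA device in GiB.

    Returns None when PyTorch is not installed or no CUDA device is visible.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(device_index)
    return props.total_memory / 1024 ** 3


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    mem = gpu_memory_gib()
    if mem is None:
        print("No CUDA GPU visible to PyTorch.")
    else:
        print("GPU 0 total memory: {:.1f} GiB (README asks for >= 32 GB)".format(mem))
```

Run it from the environment you intend to train in, so that it reports what that environment's PyTorch build can actually see.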

Getting Started

Installation

- Install PyTorch==1.9.0, torchvision==0.10.0, and the other dependencies (e.g., visdom and dominate). You can install all of them with

pip install -r requirements.txt

Dataset

We conduct all experiments on FS2K, currently the largest Facial Sketch Synthesis (FSS) dataset. For more details about this dataset, please visit its repo.

In this project, we follow APDrawingGAN to preprocess the original images: photos are aligned by key points (MTCNN) and human portrait regions are segmented (U2-Net). You can download the preprocessed FS2K dataset here.

To preprocess other images, see the preprocessing section.

Train

- Run

python -m visdom.server

- Then start training with

python train.py --dataroot /home/pz1/datasets/fss/FS2K_data/train/photo/ --checkpoints_dir checkpoints --name ckpt_0 \
    --use_local --discriminator_local --niter 150 --niter_decay 0 --save_epoch_freq 1

- If you run on a DGX server, you can use sub_by_id.sh to set up many experiments at once.

- To see the training losses, refer to the log file slurm.out.
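When launching several runs, it can help to assemble the training command from Python instead of editing a long shell line by hand. The sketch below is an illustration only: `build_train_command` is a hypothetical helper, not something the repository provides, and it uses only the flags and values shown in the training command above.

```python
import shlex


def build_train_command(dataroot, name, checkpoints_dir="checkpoints",
                        niter=150, niter_decay=0, save_epoch_freq=1):
    """Build the train.py argument list using the flags from the README."""
    return [
        "python", "train.py",
        "--dataroot", dataroot,
        "--checkpoints_dir", checkpoints_dir,
        "--name", name,
        "--use_local",
        "--discriminator_local",
        "--niter", str(niter),
        "--niter_decay", str(niter_decay),
        "--save_epoch_freq", str(save_epoch_freq),
    ]


# Example values copied from the README command; adjust the path to your setup.
cmd = build_train_command(
    dataroot="/home/pz1/datasets/fss/FS2K_data/train/photo/",
    name="ckpt_0",
)

# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(arg) for arg in cmd))
```

To launch the run, pass the list to `subprocess.run(cmd, check=True)` from the repository root. Keeping the arguments as a list avoids shell-quoting problems with paths that contain spaces.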

Test

Download the weights of the pretrained models from the Google Drive folder for this FSS task, and specify the path to the weights in the train/test shell scripts.

- To test a single model, run single_model_test.sh.

- To test a series of models, run test_ours.sh.

- Remember to specify the exp_id and epoch_num in these shell scripts.

- You can also download our results and other related materials from this Google Drive folder.

Training/Test Tips

Best practices for training and testing your models.

Acknowledgments

Thanks to the great codebase of APDrawingGAN.

Citation

If you find our code and metric useful in your research, please cite our papers.

@article{Fan2021FS2K,

title={Deep Facial Synthesis: A New Challenge},

author={Fan, Deng-Ping and Huang, Ziling and Zheng, Peng and Liu, Hong and Qin, Xuebin and Van Gool, Luc},

journal={arXiv},

year={2021}

}

@article{Fan2019ScootAP,

title={Scoot: A Perceptual Metric for Facial Sketches},

author={Deng-Ping Fan and Shengchuan Zhang and Yu-Huan Wu and Yun Liu and Ming-Ming Cheng and Bo Ren and Paul L. Rosin and Rongrong Ji},

journal={2019 IEEE/CVF International Conference on Computer Vision (ICCV)},

year={2019},

pages={5611-5621}

}