Implementation of models for the automatic speech recognition (ASR) problem.

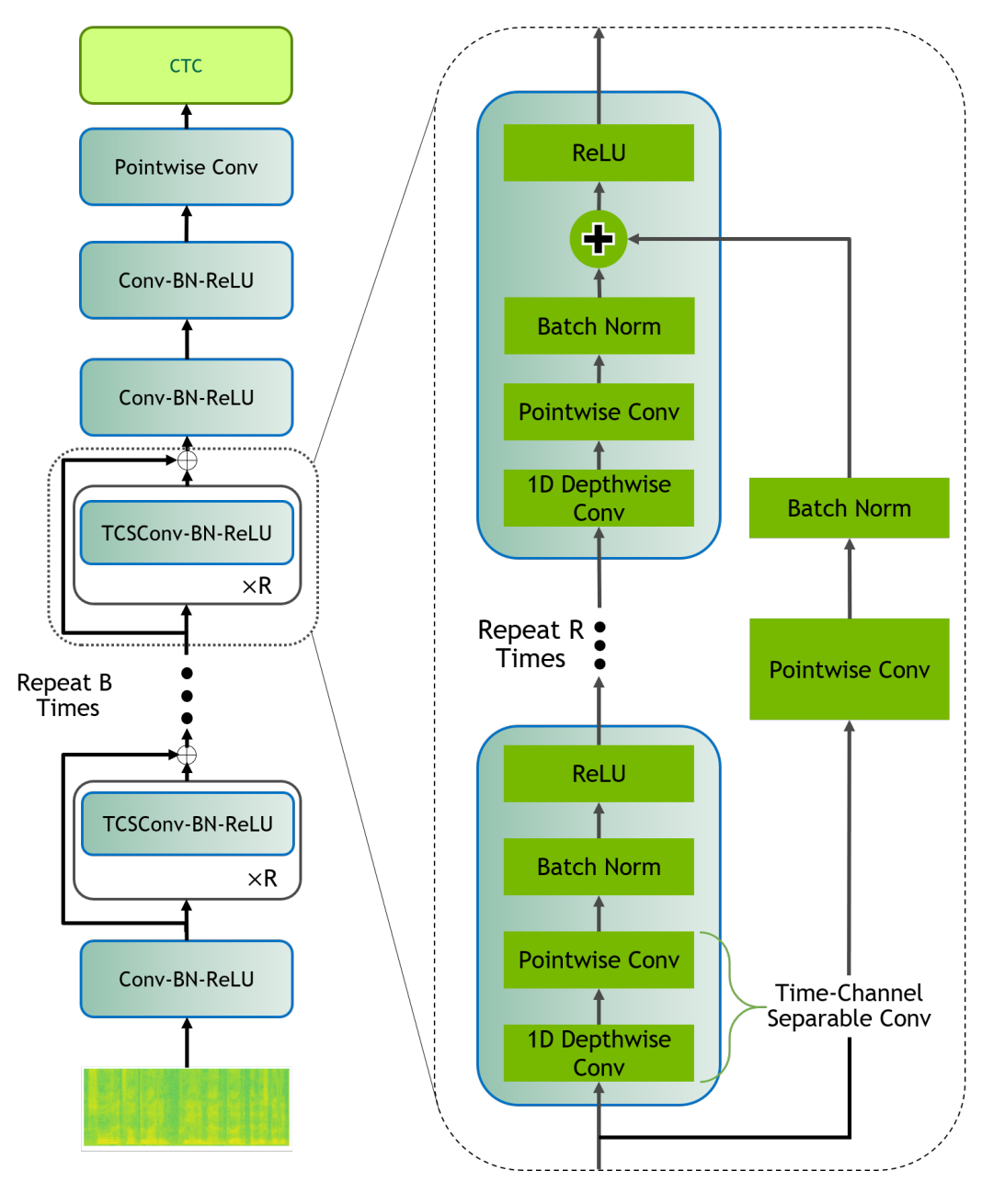

- QuartzNet with the (BxS)xR architecture.
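The following minimal sketch makes the (BxS)xR naming concrete. It does not build a network. It only enumerates the convolution layers of such an encoder, assuming B distinct blocks, each repeated S times, each containing R sub-blocks, plus the extra layers C1 to C4. The kernel sizes and channel counts for C1 to C3 and the default vocabulary size are assumed values based on the QuartzNet paper's configuration and may not match this repository's configs. `quartznet_layout` and `ConvSpec` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvSpec:
    """One 1D convolution layer in the sketched layout (hypothetical helper)."""
    name: str
    kernel_size: int
    out_channels: int


def quartznet_layout(B: int, S: int, R: int, kernels: List[int],
                     channels: List[int], num_classes: int = 29) -> List[ConvSpec]:
    """Enumerate conv layers of a QuartzNet-style (BxS)xR encoder.

    Assumption: B distinct blocks, each repeated S times, each made of R
    sub-blocks (in the paper, each sub-block is a time-channel separable
    convolution; here it is collapsed into one entry), plus C1..C4.
    num_classes defaults to 29 (26 letters, space, apostrophe, CTC blank),
    which is an assumed vocabulary size.
    """
    if len(kernels) != B or len(channels) != B:
        raise ValueError("need one kernel size and one channel count per block")
    layers = [ConvSpec("C1", 33, 256)]  # assumed prologue layer
    for b in range(B):              # distinct blocks
        for s in range(S):          # repetitions of each block
            for r in range(R):      # sub-blocks inside a block
                layers.append(ConvSpec(f"B{b + 1}.{s + 1}.R{r + 1}",
                                       kernels[b], channels[b]))
    layers.append(ConvSpec("C2", 87, 512))   # assumed epilogue layers
    layers.append(ConvSpec("C3", 1, 1024))
    layers.append(ConvSpec("C4", 1, num_classes))
    return layers


if __name__ == "__main__":
    layers = quartznet_layout(5, 3, 5,
                              kernels=[33, 39, 51, 63, 75],
                              channels=[256, 256, 512, 512, 512])
    print(len(layers))  # 79
```

With five distinct blocks repeated three times and five sub-blocks each, this gives the 15x5 layout: 75 block layers plus C1 to C4.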

Clone the repository and step into it:

```shell
git clone https://github.com/khaykingleb/ASR.git
cd ASR
```

Install the requirements and modules:

```shell
pip install -r requirements.txt
python setup.py install
```

Use the following command for training:

```shell
python train.py -c configs/config_name.json
```

Use the following command for testing:

```shell
python test.py \
    -c default_test_model/config.json \
    -r default_test_model/checkpoint.pth \
    -o result.json
```

Please note that to test the model, you need to specify the dataset in `test.py`, for instance `LibrispeechDataset`:

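```python
# In test.py: define which dataset(s) the model is evaluated on.
config.config["data"] = {
    "test": {
        "batch_size": args.batch_size,
        "num_workers": args.jobs,
        "datasets": [
            {
                "type": "LibrispeechDataset",  # dataset class to use
                "args": {
                    "part": "test-clean"  # LibriSpeech split to evaluate on
                }
            }
        ]
    }
}
```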
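Each entry in `"datasets"` names a class under `"type"` and its constructor keyword arguments under `"args"`. The following is a minimal sketch of how such entries might be turned into objects, assuming a simple name-to-class registry. `DATASET_REGISTRY`, `build_datasets`, and the stub `LibrispeechDataset` are hypothetical and are not the repository's actual code. The batch size and worker count in the example are arbitrary values.

```python
import json
from typing import Any, Dict, List


class LibrispeechDataset:
    """Hypothetical stand-in for the repository's dataset class."""

    def __init__(self, part: str) -> None:
        self.part = part  # e.g. "test-clean"


# Hypothetical registry mapping "type" strings to classes.
DATASET_REGISTRY = {"LibrispeechDataset": LibrispeechDataset}


def build_datasets(split_cfg: Dict[str, Any]) -> List[Any]:
    """Instantiate every {"type": ..., "args": {...}} entry of one split."""
    datasets = []
    for entry in split_cfg["datasets"]:
        cls = DATASET_REGISTRY[entry["type"]]  # KeyError for unknown types
        datasets.append(cls(**entry.get("args", {})))
    return datasets


if __name__ == "__main__":
    # Arbitrary example values for batch_size and num_workers.
    data_cfg = json.loads("""
    {"test": {"batch_size": 20, "num_workers": 5,
              "datasets": [{"type": "LibrispeechDataset",
                            "args": {"part": "test-clean"}}]}}
    """)
    test_sets = build_datasets(data_cfg["test"])
    print(type(test_sets[0]).__name__, test_sets[0].part)  # LibrispeechDataset test-clean
```

A registry keeps the mapping from config strings to classes explicit, so a typo in `"type"` fails loudly instead of silently loading the wrong dataset.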