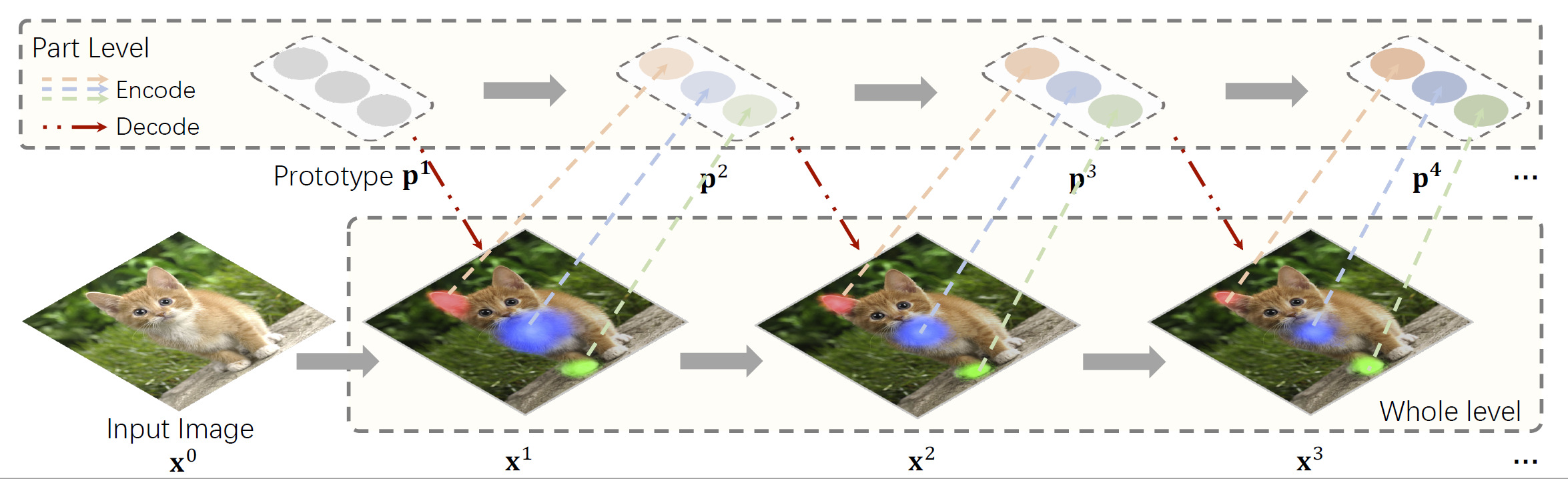

This is the official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers.

We also released the codebase for ViP object detection & instance segmentation.

- PyTorch implementation of the ViP network. Check it out at `models/vip.py`.
- A fast and neat implementation of the relative positional encoding proposed in HaloNet, BoTNet and AANet.
- A transformer-friendly FLOPS & Param counter that supports FLOPS calculation for `einsum` and `matmul` operations.
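To illustrate what counting `einsum` and `matmul` operations involves, here is a minimal sketch that derives the cost from tensor shapes alone. It is not the counter shipped in this repository. The helper names `einsum_macs` and `matmul_macs` are hypothetical, the sketch counts multiply-accumulates (MACs, which many tools report as FLOPs), and it assumes exactly two operands, an explicit `->` output, and no broadcasting or ellipsis. The shapes in the usage lines are illustrative and are not taken from any ViP configuration.

```python
from math import prod


def einsum_macs(equation, shape_a, shape_b):
    """Multiply-accumulates for a two-operand einsum such as 'bhid,bhjd->bhij'.

    Assumption: every distinct index is iterated once, so the cost is the
    product of the sizes of all distinct indices.
    """
    inputs, arrow, _ = equation.replace(" ", "").partition("->")
    if not arrow:
        raise ValueError("this sketch requires an explicit '->' output")
    terms = inputs.split(",")
    if len(terms) != 2:
        raise ValueError("this sketch supports exactly two operands")

    sizes = {}
    for term, shape in zip(terms, (shape_a, shape_b)):
        if len(term) != len(shape):
            raise ValueError(f"subscripts {term!r} do not match shape {shape}")
        for label, dim in zip(term, shape):
            # The same index letter must have the same size everywhere.
            if sizes.setdefault(label, dim) != dim:
                raise ValueError(f"inconsistent size for index {label!r}")
    return prod(sizes.values())


def matmul_macs(shape_a, shape_b):
    """Multiply-accumulates for (..., m, k) @ (..., k, n) with equal batch dims."""
    *batch_a, m, k = shape_a
    *batch_b, k_b, n = shape_b
    if k != k_b or batch_a != batch_b:
        raise ValueError("incompatible shapes for this sketch")
    return prod(batch_a) * m * k * n


# Attention scores for 1 image, 4 heads, 196 tokens, head dimension 32.
q = k = (1, 4, 196, 32)
print(einsum_macs("bhid,bhjd->bhij", q, k))        # 4917248
print(matmul_macs(q, (1, 4, 32, 196)))             # 4917248
```

Both calls describe the same contraction, so they return the same count. A counter that only hooks standard layers such as `Linear` or `Conv2d` would miss these operations entirely, which is why attention-heavy models need them handled explicitly.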

Please refer to get_started.md.

All models listed below are evaluated with input size 224x224.

| Model | Top-1 Acc. (%) | #Params | FLOPS | Download |
|---|---|---|---|---|
| ViP-Tiny | 79.0 | 12.8M | 1.7G | Google Drive |
| ViP-Small | 82.1 | 32.1M | 4.5G | Google Drive |
| ViP-Medium | 83.3 | 49.6M | 8.0G | Coming Soon |
| ViP-Base | 83.6 | 87.8M | 15.0G | Coming Soon |

To load a pretrained checkpoint, e.g. ViP-Tiny, run:

    # First download the checkpoint and save it as vip_t_dict.pth
    from models.vip import vip_tiny

    model = vip_tiny(pretrained="vip_t_dict.pth")

To evaluate a pre-trained ViP on the ImageNet validation set, run:

    python3 main.py <data-root> --model <model-name> -b <batch-size> --eval_checkpoint <path-to-checkpoint>

To train a ViP on ImageNet from scratch, run:

    bash ./distributed_train.sh <job-name> <config-path> <num-gpus>

For example, to train ViP with 8 GPUs on a single node, run:

ViP-Tiny:

    bash ./distributed_train.sh vip-t-001 configs/vip_t_bs1024.yaml 8

ViP-Small:

    bash ./distributed_train.sh vip-s-001 configs/vip_s_bs1024.yaml 8

ViP-Medium:

    bash ./distributed_train.sh vip-m-001 configs/vip_m_bs1024.yaml 8

ViP-Base:

    bash ./distributed_train.sh vip-b-001 configs/vip_b_bs1024.yaml 8

To measure the throughput, run:

    python3 test_throughput.py <model-name>

For example, to get the test speed of ViP-Tiny on your device, run:

    python3 test_throughput.py vip-tiny

To measure the FLOPS and number of parameters, run:

    python3 test_flops.py <model-name>

To cite the paper, use the following BibTeX entry:

    @article{vip,
      title={Visual Parser: Representing Part-whole Hierarchies with Transformers},
      author={Sun, Shuyang and Yue, Xiaoyu and Bai, Song and Torr, Philip},
      journal={arXiv preprint arXiv:2107.05790},
      year={2021}
    }

If you have any questions, don't hesitate to contact Shuyang (Kevin) Sun. You can easily reach him by sending an email to kevinsun@robots.ox.ac.uk.