Anomaly Detection on unstructured logs using ensemble machine learning models

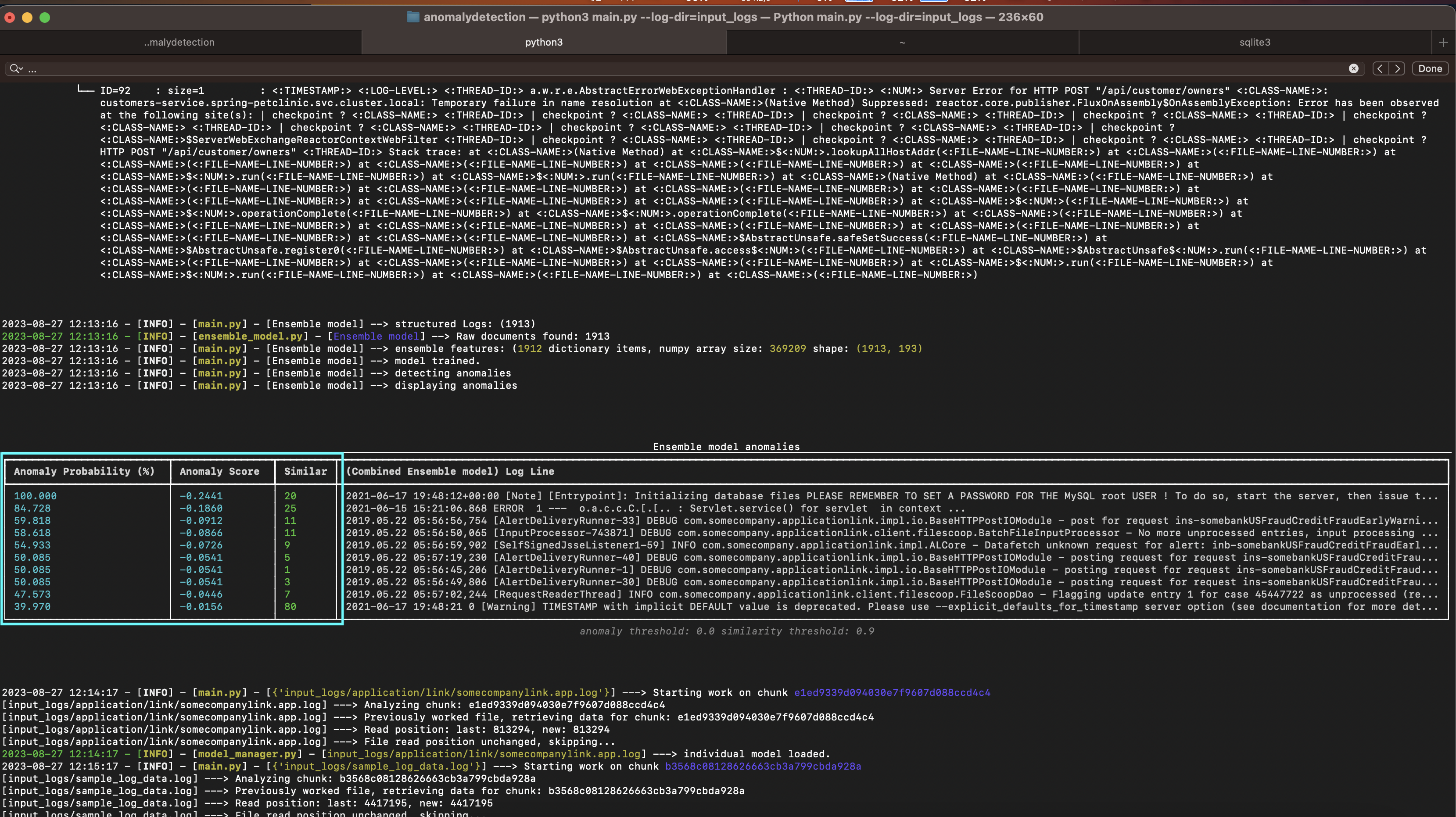

Screenshot

Overview

This project performs anomaly detection on raw logs using ensemble machine learning models. It uses IsolationForest and Levenshtein distance to identify outliers. The following steps outline the process:

- Input Data: Input data can be a root directory of static logs or live logs (logs being written to currently).

- Database Record Creation: The program will walk the directory and find logs to monitor, creating a database record for each one.

- Log Feeding: Logs are fed into the program by placing them in the root directory, which is polled for changes.

- Log Monitoring: Similar to tail, each log file's attributes (size, modified date, created date, etc.) are continually hashed and compared against the database to detect log changes. This approach makes it possible to monitor a large number of logs and process them in chunks, instead of opening a file watcher for every log and consuming resources.

- File Polling Interval: The file polling interval is configurable depending on how frequently you'd like to poll for changes to the logs. Each log is checked for changes per poll.

- Log Change Handling: When a log change is found, a chunk of the log is sent to the log parser.

- Log Cleanup and Parsing: Logs are cleaned up and parsed, removing any empty lines and duplicates which may be contained within that chunk.

- Individual Model Creation: One model is created per log, so that each model is trained on data specific to that application's logging profile.

- Master Model: Once the individual models are trained on the log data, you can enable the master model which polls the individual models for anomalies and performs anomaly detection at a bird's eye level, watching over the individual models. This gives a reasonably accurate view of the anomalies detected across the entire logging root directory or your application suite.

- Future Integration: This is a proof of concept (POC). Future versions will likely integrate with a time-series database to better visualize and tag anomalies as they arrive in the monitoring system (similar to Splunk).
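The monitoring and cleanup steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the names `fingerprint`, `poll_once`, and `clean_chunk` are hypothetical, a plain dict stands in for the database, and treating a "chunk" as everything appended since the last stored byte offset is an assumption.

```python
import hashlib
import os


def fingerprint(path):
    """Hash a file's size and timestamps; the file contents are not read."""
    st = os.stat(path)
    raw = f"{st.st_size}:{st.st_mtime_ns}:{st.st_ctime_ns}"
    return hashlib.sha256(raw.encode()).hexdigest()


def poll_once(root, records):
    """Walk root once and return (path, new_chunk) for every changed log.

    records maps path -> {"fingerprint": str, "offset": int}; it is a
    stand-in for the database records (an assumption for this sketch).
    """
    changes = []
    for dirpath, _dirs, files in os.walk(root):
        for name in sorted(files):
            path = os.path.join(dirpath, name)
            current = fingerprint(path)
            rec = records.setdefault(path, {"fingerprint": None, "offset": 0})
            if rec["fingerprint"] == current:
                continue  # attributes unchanged since the last poll
            if os.path.getsize(path) < rec["offset"]:
                rec["offset"] = 0  # file shrank: assume truncation or rotation
            with open(path, "rb") as fh:
                fh.seek(rec["offset"])
                chunk = fh.read().decode("utf-8", errors="replace")
                rec["offset"] = fh.tell()
            rec["fingerprint"] = current
            changes.append((path, chunk))
    return changes


def clean_chunk(chunk):
    """Drop empty lines and duplicates, keeping first-seen order."""
    seen = set()
    lines = []
    for line in chunk.splitlines():
        line = line.strip()
        if line and line not in seen:
            seen.add(line)
            lines.append(line)
    return lines
```

Calling `poll_once` in a loop with a `time.sleep` between iterations would give the configurable polling interval; each returned chunk would then go through `clean_chunk` before reaching the parser.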

Install Requirements

./run.sh

Running:

python3 main.py --log_dir sample_input_logs
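For orientation, the command line above could be parsed along these lines. This is only a sketch: `--log_dir` comes from the command shown, while `parse_args` and the `--poll_interval` flag (with its default) are hypothetical and may not match what main.py actually accepts.

```python
import argparse


def parse_args(argv=None):
    """Parse the command-line options for the log monitor."""
    parser = argparse.ArgumentParser(description="Anomaly detection on raw logs")
    parser.add_argument(
        "--log_dir",
        required=True,
        help="Root directory of static or live logs to monitor",
    )
    # Hypothetical flag: the polling interval is described as configurable,
    # but the real option name and default are not documented here.
    parser.add_argument(
        "--poll_interval",
        type=float,
        default=5.0,
        help="Seconds between polls of the log directory",
    )
    return parser.parse_args(argv)
```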

Core Classes and Functions

Let's start by defining the core classes, functions, and methods that will be necessary for this project:

- LogRetriever: This class will be responsible for retrieving logs from different sources. It will have methods like retrieve_from_cloudwatch and retrieve_from_filesystem.

- DatabaseManager: This class will handle all database operations. It will have methods like store_log_entry, get_log_entry, update_log_entry, and delete_log_entry.

- LogMonitor: This class will monitor the logs for changes. It will have methods like check_for_changes and handle_log_change.

- TaskScheduler: This class will handle the scheduling of tasks. It will have methods like schedule_task and execute_task.

- LogParser: This class will parse the log lines. It will have methods like parse_log_line.

- ModelManager: This class will handle all operations related to the model. It will have methods like feed_log_line, extract_features, train_model, detect_anomalies, and update_model.
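To make the feature-extraction step more concrete, here is a small sketch of how Levenshtein distance could turn a log line into numbers a model can use. It is an assumption, not the project's implementation: the standalone `extract_features` below only borrows the method name, and the `reference` line and the choice of features are hypothetical.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insert, delete, substitute)."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(
                min(
                    previous[j] + 1,         # delete a character from a
                    current[j - 1] + 1,      # insert a character into a
                    previous[j - 1] + cost,  # substitute (or match)
                )
            )
        previous = current
    return previous[-1]


def extract_features(line, reference):
    """Hypothetical features: line length and normalised distance to a reference line."""
    longest = max(len(line), len(reference), 1)
    return [len(line), levenshtein(line, reference) / longest]
```

Feature vectors like these, collected per log, could then be passed to scikit-learn's IsolationForest (`fit` on historical lines, `predict` on new ones, where `-1` marks an outlier).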