Status Jan 1: This challenge is open for submissions!

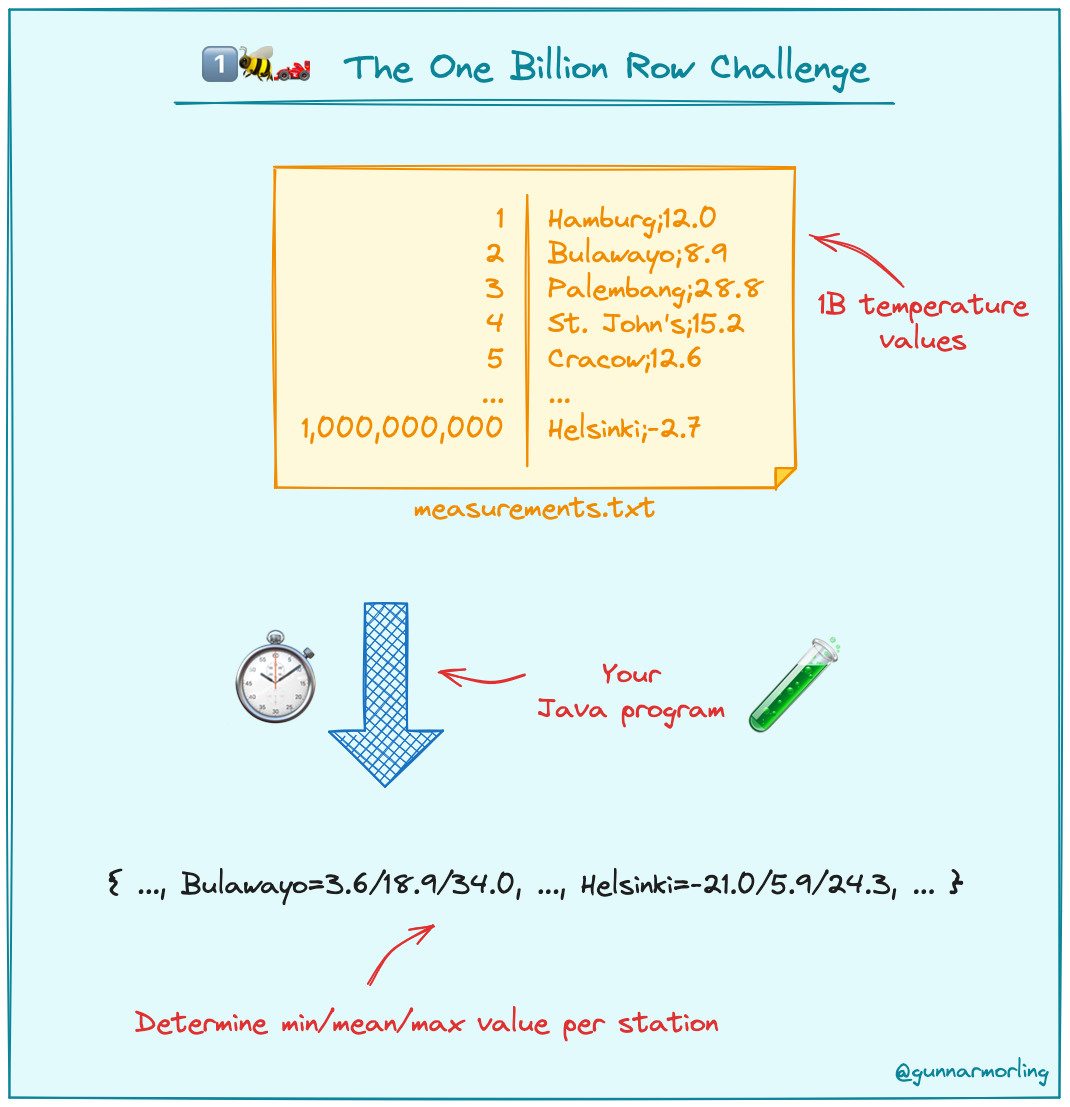

The One Billion Row Challenge (1BRC) is a fun exploration of how far modern Java can be pushed for aggregating one billion rows from a text file. Grab all your (virtual) threads, reach out to SIMD, optimize your GC, or pull any other trick, and create the fastest implementation for solving this task!

The text file contains temperature values for a range of weather stations.

Each row is one measurement in the format <string: station name>;<double: measurement>, with the measurement value having exactly one fractional digit.

The following shows ten rows as an example:

Hamburg;12.0

Bulawayo;8.9

Palembang;38.8

St. John's;15.2

Cracow;12.6

Bridgetown;26.9

Istanbul;6.2

Roseau;34.4

Conakry;31.2

Istanbul;23.0
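As a minimal sketch of how one such row could be split into its two fields: the class name `RowParser` and the record `Measurement` below are hypothetical and not part of this repository. The sketch relies on the guarantee that station names contain no `;` character.

```java
final class RowParser {
    // Hypothetical holder for one parsed row; not part of the challenge code.
    record Measurement(String station, double value) {}

    static Measurement parse(String row) {
        // Station names never contain ';', so the first ';' is the separator.
        int sep = row.indexOf(';');
        if (sep < 1) {
            throw new IllegalArgumentException("Malformed row: " + row);
        }
        String station = row.substring(0, sep);
        double value = Double.parseDouble(row.substring(sep + 1));
        return new Measurement(station, value);
    }

    public static void main(String[] args) {
        Measurement m = parse("St. John's;15.2");
        System.out.println(m.station() + " -> " + m.value()); // prints "St. John's -> 15.2"
    }
}
```

This is the straightforward approach; fast submissions typically avoid creating a String and a double per row.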

The task is to write a Java program which reads the file, calculates the min, mean, and max temperature value per weather station, and emits the results on stdout like this (i.e. sorted alphabetically by station name, and the result values per station in the format <min>/<mean>/<max>, rounded to one fractional digit):

{Abha=-23.0/18.0/59.2, Abidjan=-16.2/26.0/67.3, Abéché=-10.0/29.4/69.0, Accra=-10.1/26.4/66.4, Addis Ababa=-23.7/16.0/67.0, Adelaide=-27.8/17.3/58.5, ...}
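The aggregation and the output format can be sketched as follows. This is an illustration under stated assumptions, not the reference implementation: `ResultFormatter` and `Stats` are hypothetical names, sorting uses the natural `String` order of a `TreeMap`, and rounding uses `Math.round`, whose handling of ties is an assumption the text above does not spell out.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class ResultFormatter {
    // Hypothetical per-station accumulator.
    static final class Stats {
        double min = Double.POSITIVE_INFINITY;
        double max = Double.NEGATIVE_INFINITY;
        double sum;
        long count;

        void add(double value) {
            min = Math.min(min, value);
            max = Math.max(max, value);
            sum += value;
            count++;
        }

        @Override
        public String toString() {
            // <min>/<mean>/<max>, each rounded to one fractional digit
            return round(min) + "/" + round(sum / count) + "/" + round(max);
        }
    }

    static double round(double value) {
        return Math.round(value * 10.0) / 10.0;
    }

    static String aggregate(List<String> rows) {
        // TreeMap keeps station names sorted; its toString() yields {k=v, k=v}.
        Map<String, Stats> stats = new TreeMap<>();
        for (String row : rows) {
            int sep = row.indexOf(';');
            stats.computeIfAbsent(row.substring(0, sep), k -> new Stats())
                    .add(Double.parseDouble(row.substring(sep + 1)));
        }
        return stats.toString();
    }

    public static void main(String[] args) {
        System.out.println(aggregate(List.of("Istanbul;6.2", "Hamburg;12.0", "Istanbul;23.0")));
        // prints {Hamburg=12.0/12.0/12.0, Istanbul=6.2/14.6/23.0}
    }
}
```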

Submit your implementation by Jan 31 2024 and become part of the leaderboard!

| # | Result (m:s.ms) | Implementation | JDK | Submitter | Notes |
|---|---|---|---|---|---|
| 1. | 00:06.159 | link | 21.0.1-graal | royvanrijn | |
| 2. | 00:06.532 | link | 21.0.1-graal | Thomas Wuerthinger | GraalVM native binary |
| 3. | 00:07.620 | link | 21.0.1-open | Quan Anh Mai | |
| | 00:09.062 | link | 21.0.1-open | obourgain | |
| | 00:09.338 | link | 21.0.1-graal | Elliot Barlas | |
| | 00:10.589 | link | 21.0.1-graal | Artsiom Korzun | |
| | 00:10.613 | link | 21.0.1-graal | Sam Pullara | |
| | 00:11.038 | link | 21.0.1-open | Andrew Sun | |
| | 00:11.222 | link | 21.0.1-open | Jamie Stansfield | |
| | 00:13.277 | link | 21.0.1-graal | Yavuz Tas | |
| | 00:13.430 | link | 21.0.1-open | Johannes Schüth | |
| | 00:13.463 | link | 21.0.1-open | yemreinci | |
| | 00:13.615 | link | 21.0.1-graal | ags313 | |
| | 00:13.709 | link | 21.0.1-open | John Ziamos | |
| | 00:13.857 | link | 21.0.1-graal | Filip Hrisafov | |
| | 00:14.411 | link | 21.0.1-open | deemkeen | |
| | 00:15.956 | link | 21.0.1-open | Dimitar Dimitrov | |
| | 00:16.196 | link | 21.0.1-open | Parth Mudgal | |
| | 00:16.823 | link | 21.0.1-open | arjenvaneerde | |
| | 00:17.905 | link | 21.0.1-open | Peter Lawrey | |
| | 00:17.963 | link | 21.0.1-graal | Cool_Mineman | |
| | 00:18.380 | link | 21.0.1-open | Carlo | |
| | 00:18.866 | link | 21.0.1-graal | Rafael Merino García | |
| | 00:18.789 | link | 21.0.1-open | Nick Palmer | |
| | 00:19.561 | link | 21.0.1-open | Gabriel Reid | |
| | 00:22.210 | link | 21.0.1-open | Serghei Motpan | |
| | 00:22.634 | link | 21.0.1-open | Kevin McMurtrie | |
| | 00:22.709 | link | 21.0.1-graal | Markus Ebner | |
| | 00:23.078 | link | 21.0.1-open | Richard Startin | |
| | 00:24.879 | link | 21.0.1-open | David Kopec | |
| | 00:26.253 | link | 21.0.1-graal | Stefan Sprenger | |
| | 00:26.576 | link | 21.0.1-open | Roman Romanchuk | |
| | 00:27.787 | link | 21.0.1-open | Nils Semmelrock | |
| | 00:28.167 | link | 21.0.1-open | Roman Schweitzer | |
| | 00:28.386 | link | 21.0.1-open | Gergely Kiss | |
| | 00:32.764 | link | 21.0.1-open | Moysés Borges Furtado | |
| | 00:34.848 | link | 21.0.1-open | Arman Sharif | |
| | 00:36.518 | link | 21.0.1-open | Ramzi Ben Yahya | |
| | 00:38.510 | link | 21.0.1-open | Hampus Ram | |
| | 00:47.717 | link | 21.0.1-open | Kuduwa Keshavram | |
| | 00:50.547 | link | 21.0.1-open | Aurelian Tutuianu | |
| | 00:51.678 | link | 21.0.1-tem | Tobi | |
| | 00:53.679 | link | 21.0.1-open | Chris Riccomini | |
| | 00:59.377 | link | 21.0.1-open | Horia Chiorean | |
| | 01:24.721 | link | 21.0.1-open | Ujjwal Bharti | |
| | 01:27.912 | link | 21.0.1-open | Jairo Graterón | |
| | 01:39.360 | link | 21.0.1-open | Mudit Saxena | |
| | 02:00.087 | link | 21.0.1-open | Santanu Barua | |
| | 02:00.101 | link | 21.0.1-open | khmarbaise | |
| | 02:08.315 | link | 21.0.1-open | itaske | |
| | 02:16.635 | link | 21.0.1-open | twohardthings | |
| | 02:23.316 | link | 21.0.1-open | Abhilash | |
| | 03:16.334 | link | 21.0.1-open | 김예환 Ye-Hwan Kim (Sam) | |
| | 03:42.297 | link | 21.0.1-open | Samson | |
| | 04:13.449 | link (baseline) | 21.0.1-open | Gunnar Morling | |

See below for instructions on how to enter the challenge with your own implementation.

Java 21 must be installed on your system.

This repository contains two programs:

- dev.morling.onebrc.CreateMeasurements (invoked via create_measurements.sh): Creates the file measurements.txt in the root directory of this project with a configurable number of random measurement values

- dev.morling.onebrc.CalculateAverage (invoked via calculate_average_baseline.sh): Calculates the average values for the file measurements.txt

Execute the following steps to run the challenge:

- Build the project using Apache Maven:

  ./mvnw clean verify

- Create the measurements file with 1B rows (just once):

  ./create_measurements.sh 1000000000

  This will take a few minutes. Attention: the generated file has a size of approx. 12 GB, so make sure to have enough disk space.

- Calculate the average measurement values:

  ./calculate_average_baseline.sh

  The provided naive example implementation uses the Java streams API for processing the file and completes the task in ~2 min on the environment used for result evaluation. It serves as the baseline for comparing your own implementation.

- Optimize the heck out of it:

  Adjust the CalculateAverage program to speed it up, in any way you see fit (just sticking to a few rules described below). Options include parallelizing the computation, using the (incubating) Vector API, memory-mapping different sections of the file concurrently, using AppCDS, GraalVM, CRaC, etc. for speeding up the application start-up, choosing and tuning the garbage collector, and much more.

  A tip: if you have jbang installed, you can get a flamegraph of your program by running async-profiler via ap-loader:

  jbang --javaagent=ap-loader@jvm-profiling-tools/ap-loader=start,event=cpu,file=profile.html -m dev.morling.onebrc.CalculateAverage_yourname target/average-1.0.0-SNAPSHOT.jar

  or directly on the .java file:

  jbang --javaagent=ap-loader@jvm-profiling-tools/ap-loader=start,event=cpu,file=profile.html src/main/java/dev/morling/onebrc/CalculateAverage_yourname

  When you run this, it will generate a flamegraph in profile.html. You can then open this in a browser and see where your program is spending its time.
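Parallelizing the computation or memory-mapping sections of the file concurrently both require cutting the input into sections that end on a row boundary. The following is one possible sketch of that splitting step, not the approach of any particular submission; `ChunkSplitter` is a hypothetical name. It works on a `ByteBuffer`, which could be a region mapped via `FileChannel.map`. Since a single mapping is limited to `Integer.MAX_VALUE` bytes, the 12 GB file would need several mapped regions.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class ChunkSplitter {
    // Returns chunks + 1 offsets; chunk i covers bytes [offsets[i], offsets[i + 1]).
    static int[] split(ByteBuffer data, int chunks) {
        int size = data.limit();
        int[] offsets = new int[chunks + 1];
        offsets[chunks] = size;
        for (int i = 1; i < chunks; i++) {
            // Start from the even split point, but never before the previous boundary.
            int pos = Math.max(offsets[i - 1], (int) ((long) size * i / chunks));
            // Move forward to just past the next newline so that no row is cut in half.
            while (pos < size && data.get(pos++) != '\n') {
                // scanning only
            }
            offsets[i] = pos;
        }
        return offsets;
    }

    public static void main(String[] args) {
        byte[] bytes = "Hamburg;12.0\nBulawayo;8.9\nPalembang;38.8\n".getBytes(StandardCharsets.UTF_8);
        int[] offsets = split(ByteBuffer.wrap(bytes), 2);
        for (int i = 0; i + 1 < offsets.length; i++) {
            String chunk = new String(bytes, offsets[i], offsets[i + 1] - offsets[i], StandardCharsets.UTF_8);
            System.out.println("chunk " + i + ": " + chunk.strip().replace("\n", " | "));
        }
    }
}
```

Each resulting chunk can then be handed to its own thread, with the per-chunk results merged at the end.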

- Any of these Java distributions may be used:

  - Any builds provided by SDKMan

  - Early access builds available on openjdk.net may be used (including EA builds for OpenJDK projects like Valhalla)

  - Builds on builds.shipilev.net

  If you want to use a build not available via these channels, reach out to discuss whether it can be considered.

- No external library dependencies may be used

- Implementations must be provided as a single source file

- The computation must happen at application runtime, i.e. you cannot process the measurements file at build time (for instance, when using GraalVM) and just bake the result into the binary

- Input value ranges are as follows:

  - Station name: non null UTF-8 string of min length 1 character and max length 100 bytes (i.e. this could be 100 one-byte characters, or 50 two-byte characters, etc.)

  - Temperature value: non null double between -99.9 (inclusive) and 99.9 (inclusive), always with one fractional digit

  - There is a maximum of 10,000 unique station names

- Implementations must not rely on specifics of a given data set, e.g. any valid station name as per the constraints above and any data distribution (number of measurements per station) must be supported
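Because every temperature value has exactly one fractional digit and lies between -99.9 and 99.9, one possible optimization is to skip floating-point parsing and keep values as integer tenths of a degree. The following is a sketch under that assumption only; `TemperatureParser` is a hypothetical name, and the input is assumed to be a valid value as per the ranges above.

```java
import java.nio.charset.StandardCharsets;

final class TemperatureParser {
    // Parses text such as "-12.3" located at bytes[start, end) into tenths of a degree (-123).
    static int parseTenths(byte[] bytes, int start, int end) {
        int i = start;
        boolean negative = bytes[i] == '-';
        if (negative) {
            i++;
        }
        int value = 0;
        for (; i < end; i++) {
            byte b = bytes[i];
            if (b != '.') {
                // Digits are ASCII, so b - '0' yields the digit's numeric value.
                value = value * 10 + (b - '0');
            }
        }
        return negative ? -value : value;
    }

    public static void main(String[] args) {
        byte[] row = "Bulawayo;8.9".getBytes(StandardCharsets.UTF_8);
        // The value starts right after the ';' at index 8.
        System.out.println(parseTenths(row, 9, row.length)); // prints 89
    }
}
```

Min, max, and sum can then be tracked as integers and converted back to one fractional digit only when the result is printed.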

To submit your own implementation to 1BRC, follow these steps:

- Create a fork of the onebrc GitHub repository.

- Create a copy of CalculateAverage.java, named CalculateAverage_<your_GH_user>.java, e.g. CalculateAverage_doloreswilson.java.

- Make that implementation fast. Really fast.

- Create a copy of calculate_average_baseline.sh, named calculate_average_<your_GH_user>.sh, e.g. calculate_average_doloreswilson.sh.

- Adjust that script so that it references your implementation class name. If needed, provide any JVM arguments via the JAVA_OPTS variable in that script. Make sure that script does not write anything to standard output other than calculation results.

- OpenJDK 21 is the default. If a custom JDK build is required, include the SDKMAN command sdk use java [version] in the launch shell script prior to application start.

- (Optional) If you'd like to use native binaries (GraalVM), adjust the pom.xml file so that it builds that binary.

- Run the test suite by executing ./test.sh <your_GH_user>; if any differences are reported, fix them before submitting your implementation.

- Create a pull request against the upstream repository, clearly stating

  - The name of your implementation class.

  - The execution time of the program on your system and specs of the same (CPU, number of cores, RAM). This is for informational purposes only; the official runtime will be determined as described below.

- I will run the program and determine its performance as described in the next section, and add the result to the scoreboard.

Note: I reserve the right to not evaluate specific submissions if I feel doubtful about the implementation (i.e. I won't run your Bitcoin miner ;).

If you'd like to discuss any potential ideas for implementing 1BRC with the community, you can use the GitHub Discussions of this repository. Please keep it friendly and civil.

The challenge runs until Jan 31 2024. Any submissions (i.e. pull requests) created after Jan 31 2024 23:59 UTC will not be considered.

Results are determined by running the program on a Hetzner Cloud CCX33 instance (8 dedicated vCPU, 32 GB RAM).

The time program is used for measuring execution times, i.e. end-to-end times are measured.

Each contender will be run five times in a row.

The slowest and the fastest runs are discarded.

The mean value of the remaining three runs is the result for that contender and will be added to the results table above.

The exact same measurements.txt file is used for evaluating all contenders.
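The scoring rule described above (five runs, drop the fastest and the slowest, average the remaining three) can be sketched as follows. `ResultScore` is a hypothetical name and the run times are made-up values for illustration; this is not the evaluation script itself.

```java
import java.util.Arrays;

final class ResultScore {
    // Mean of the three middle values out of five run times (in seconds).
    static double score(double[] runSeconds) {
        if (runSeconds.length != 5) {
            throw new IllegalArgumentException("Expected exactly five runs");
        }
        double[] sorted = runSeconds.clone();
        Arrays.sort(sorted);
        // sorted[0] is the fastest run and sorted[4] the slowest; both are discarded.
        return (sorted[1] + sorted[2] + sorted[3]) / 3.0;
    }

    public static void main(String[] args) {
        // Hypothetical run times, not actual results.
        double[] runs = {6.3, 6.1, 6.2, 9.0, 5.0};
        System.out.printf("%.3f s%n", score(runs));
    }
}
```

Discarding the two extremes makes the result less sensitive to a single unusually slow or fast run.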

If you'd like to spin up your own box for testing on Hetzner Cloud, you may find these set-up scripts (based on Terraform and Ansible) useful. It has been reported that instances of the CCX33 machine class can vary significantly in performance, so results are only comparable when obtained from one and the same instance. Note that this will incur costs you are responsible for; I am not going to pay your cloud bill :)

If you enter this challenge, you may learn something new, get to inspire others, and take pride in seeing your name listed in the scoreboard above. Rumor has it that the winner may receive a unique 1️⃣🐝🏎️ t-shirt, too!

Q: Can I use Kotlin or other JVM languages other than Java?

A: No, this challenge is focussed on Java only. Feel free to unofficially share implementations significantly outperforming any listed results, though.

Q: Can I use non-JVM languages and/or tools?

A: No, this challenge is focussed on Java only. Feel free to unofficially share interesting implementations and results, though. For instance, it would be interesting to see how DuckDB fares with this task.

Q: I've got an implementation—but it's not in Java. Can I share it somewhere?

A: Whilst non-Java solutions cannot be formally submitted to the challenge, you are welcome to share them over in the Show and tell GitHub discussion area.

Q: Can I use JNI?

A: Submissions must be completely implemented in Java, i.e. you cannot write JNI glue code in C/C++. You could use AOT compilation of Java code via GraalVM though, either by AOT-compiling the entire application, or by creating a native library (see here).

Q: What is the encoding of the measurements.txt file?

A: The file is encoded with UTF-8.

Q: Can I make assumptions on the names of the weather stations showing up in the data set?

A: No, while only a fixed set of station names is used by the data set generator, any solution should work with arbitrary UTF-8 station names (for the sake of simplicity, names are guaranteed to contain no ; character).

Q: Can I copy code from other submissions?

A: Yes, you can. The primary focus of the challenge is about learning something new, rather than "winning". When you do so, please give credit to the relevant source submissions. Please don't re-submit other entries with no or only trivial improvements.

Q: Which operating system is used for evaluation?

A: Fedora 39.

Q: My solution runs in 2 sec on my machine. Am I the fastest 1BRC-er in the world?

A: Probably not :) 1BRC results are reported in wall-clock time, thus results of different implementations are only comparable when obtained on the same machine. If, for instance, an implementation is faster on a 32-core workstation than on the 8-core evaluation instance, this doesn't allow for any conclusions. When sharing 1BRC results, you should also always share the result of running the baseline implementation on the same hardware.

Q: Why 1️⃣🐝🏎️ ?

A: It's the abbreviation of the project name: One Billion Row Challenge.

This code base is available under the Apache License, version 2.

Be excellent to each other! More than winning, the purpose of this challenge is to have fun and learn something new.