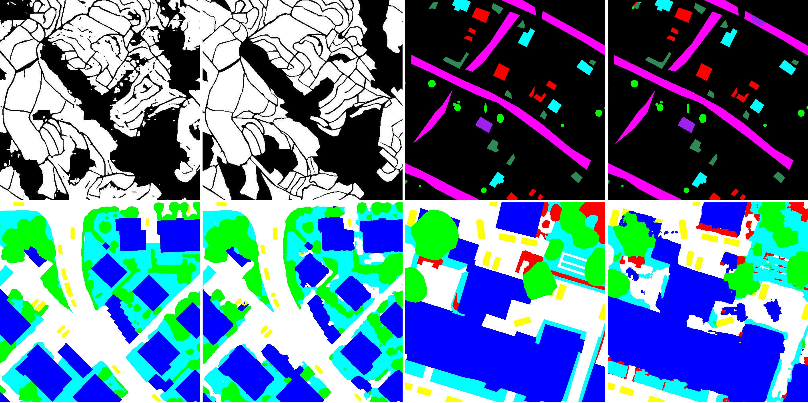

Figure 1. Examples of results obtained in this work.

Semantic segmentation requires methods capable of learning high-level features while dealing with a large volume of data. Toward this goal, Convolutional Networks can learn specific and adaptable features directly from the data. However, these networks cannot process a whole remote sensing image at once, given its huge size. To overcome this limitation, the image is processed in fixed-size patches. The input patch size is usually defined either empirically (by evaluating several sizes) or by imposition (due to network constraints). Both strategies have drawbacks and may not lead to the best patch size. To alleviate this problem, several works have exploited multi-context information by combining networks or layers. This, however, increases the number of parameters, resulting in a model that is more difficult to train. In this work, we propose a novel technique to perform semantic segmentation of remote sensing images that exploits a multi-context paradigm without increasing the number of parameters, while defining the best patch size at training time. The main idea is to train a dilated network with distinct patch sizes, allowing it to capture multi-context characteristics from heterogeneous contexts. While processing these varying patches, the network provides a score for each patch size, which helps define the best size for the current scenario. A systematic evaluation of the proposed algorithm is conducted using four high-resolution remote sensing datasets with very distinct properties. Our results show that the proposed algorithm improves pixelwise classification accuracy compared to state-of-the-art methods.
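
The claim that a dilated network captures wider context without extra parameters can be checked with a little arithmetic. The sketch below assumes stride-1 square kernels with odd sizes and "same" padding; the `DilatedConv` class, the helper functions, and the six-layer, 64-channel stacks with dilation rates 1 to 6 are hypothetical and do not reproduce the exact architectures selectable through `net_type`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DilatedConv:
    """One stride-1 square convolution layer (hypothetical configuration)."""
    kernel: int        # kernel side length, e.g. 3 for a 3x3 filter (assumed odd)
    dilation: int      # dilation rate; 1 is an ordinary convolution
    in_channels: int
    out_channels: int

    def num_params(self) -> int:
        # Weights plus one bias per output channel: the dilation rate does not appear.
        return self.kernel * self.kernel * self.in_channels * self.out_channels + self.out_channels


def receptive_field(layers: List[DilatedConv]) -> int:
    """Side length (in pixels) of the input region seen by one output pixel."""
    rf = 1
    for layer in layers:
        # Each stride-1 layer widens the field by (kernel - 1) * dilation pixels.
        rf += (layer.kernel - 1) * layer.dilation
    return rf


def output_size(patch: int, layers: List[DilatedConv]) -> int:
    """Output side length when each layer uses 'same' padding of (kernel - 1) // 2 * dilation."""
    size = patch
    for layer in layers:
        pad = (layer.kernel - 1) // 2 * layer.dilation
        span = (layer.kernel - 1) * layer.dilation + 1  # effective (dilated) kernel size
        size = size + 2 * pad - span + 1
    return size


if __name__ == "__main__":
    # Hypothetical stacks of six 3x3 layers with 64 channels each.
    plain = [DilatedConv(3, 1, 64, 64) for _ in range(6)]
    dilated = [DilatedConv(3, d, 64, 64) for d in range(1, 7)]
    print(receptive_field(plain), receptive_field(dilated))  # 13 43
    print(sum(l.num_params() for l in plain) == sum(l.num_params() for l in dilated))  # True
    print([output_size(p, dilated) for p in (25, 45, 65)])  # [25, 45, 65]
```

In this sketch the dilated stack sees a 43-pixel context instead of 13 with exactly the same parameter count, and since no layer fixes the input size, the same weights can be applied to patches of different sizes.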

Each Python script includes a usage message briefly explaining how to run it. In general, the required parameters have intuitive names. For instance, to run the script responsible for classifying the ISPRS datasets, call:

    python isprs_dilated_random.py input_path output_path trainingInstances testing_instances learningRate weight_decay batch_size niter reference_crop_size net_type distribution_type probValues update_type process

where,

- `input_path` is the path to the dataset images.
- `output_path` is the path where models, images, graphs, etc. are saved.
- `trainingInstances` are the instances used to train the model (e.g., 1,3,5,7).
- `testing_instances` are the instances used to validate/test the algorithm (same format as above).
- `learningRate` is the learning rate used by Stochastic Gradient Descent.
- `weight_decay` is the weight decay used to regularize learning.
- `batch_size` is the size of the batch.
- `niter` is the number of training iterations (related to the number of epochs).
- `reference_crop_size` is the crop size used as a reference (only for internal estimation).
- `net_type` is the flag that identifies which network should be trained. There are several options, including:
  - `dilated_grsl`, named Dilated6Pooling in the paper,
  - `dilated_icpr_original`, which corresponds to the Dilated6 network,
  - `dilated_icpr_rate6_densely`, the DenseDilated6 network in the paper, and
  - `dilated_grsl_rate8`, presented as Dilated8Pooling in the paper.
- `distribution_type` is the probability distribution used to select the patch sizes. There are four options:
  - `single_fixed`, which uses one patch size during the whole training,
  - `multi_fixed`, presented in the paper as Uniform Fixed,
  - `uniform`, the Uniform distribution, and
  - `multinomial`, the Multinomial distribution.
- `probValues` are the patch-size values that will be used (together with the distribution) during processing.
- `update_type` defines how the patch sizes are updated (options are `acc` and `loss`, for accuracy and loss, respectively).
- `process` is the operation to be performed.
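
The `distribution_type` and `update_type` options can be pictured with the following minimal sketch. It is not the repository's code: the function names `choose_patch_size`, `update_scores` and `best_patch_size`, the round-robin reading of `multi_fixed` (Uniform Fixed), and the rule that turns a loss into a score (its inverse) are all assumptions made for illustration.

```python
import random
from typing import Dict, List, Optional

# Hypothetical sketch: names and update rules are illustrative assumptions,
# not the implementation used in isprs_dilated_random.py.


def choose_patch_size(distribution_type: str, patch_sizes: List[int],
                      scores: Optional[Dict[int, float]] = None,
                      iteration: int = 0,
                      rng: Optional[random.Random] = None) -> int:
    """Pick the patch size for the current training iteration."""
    rng = rng or random.Random()
    if distribution_type == "single_fixed":
        # A single patch size for the whole training.
        return patch_sizes[0]
    if distribution_type == "multi_fixed":
        # Assumption: cycle through all sizes so each one is used equally often.
        return patch_sizes[iteration % len(patch_sizes)]
    if distribution_type == "uniform":
        # Every size has the same probability of being drawn.
        return rng.choice(patch_sizes)
    if distribution_type == "multinomial":
        if scores is None:
            raise ValueError("multinomial sampling needs a score per patch size")
        # Sizes with higher scores are drawn more often.
        weights = [scores[p] for p in patch_sizes]
        return rng.choices(patch_sizes, weights=weights, k=1)[0]
    raise ValueError(f"unknown distribution_type: {distribution_type}")


def update_scores(scores: Dict[int, float], patch_size: int,
                  metric: float, update_type: str) -> Dict[int, float]:
    """Store a new score for patch_size from an accuracy or a loss value."""
    if update_type == "acc":
        # Higher accuracy -> higher score.
        scores[patch_size] = metric
    elif update_type == "loss":
        # Assumption: lower loss -> higher score, via the inverse of the loss.
        scores[patch_size] = 1.0 / (metric + 1e-8)
    else:
        raise ValueError(f"unknown update_type: {update_type}")
    return scores


def best_patch_size(scores: Dict[int, float]) -> int:
    """Return the patch size with the highest current score."""
    return max(scores, key=scores.get)
```

With hypothetical `probValues` such as 25, 45 and 65, a training loop would call `choose_patch_size` once per iteration, call `update_scores` after each evaluation, and read `best_patch_size(scores)` at the end to obtain the size to use at inference time.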

Four datasets were used in this work:

- Coffee dataset, composed of multispectral high-resolution scenes of coffee crops and non-coffee areas. Unfortunately, this dataset has not yet been publicly released.

- GRSS Data Fusion dataset, consisting of very high-resolution visible-spectrum images. This dataset was publicly released for the 2014 GRSS Data Fusion Contest.

- Vaihingen dataset, composed of multispectral high-resolution images and a normalized Digital Surface Model (nDSM), and

- Potsdam dataset, also composed of multispectral high-resolution images and a normalized Digital Surface Model (nDSM). The last two datasets are publicly available here.

If you use this code in your research, please consider citing:

    @article{nogueiraTGRS2019dynamic,
      author = {Keiller Nogueira and Mauro Dalla Mura and Jocelyn Chanussot and William Robson Schwartz and Jefersson A. dos Santos},
      title = {Dynamic Multi-Context Segmentation of Remote Sensing Images based on Convolutional Networks},
      journal = {{IEEE} Transactions on Geoscience and Remote Sensing},
      year = {2019},
      publisher = {IEEE}
    }