Universal Perturbation Attack on Differentiable No-Reference Image- and Video-Quality Metrics (BMVC 2022)

We make the first attempt at attacking differentiable no-reference (NR) image- and video-quality metrics with universal adversarial perturbations (UAPs). The goal of an attack on a quality metric is to increase the metric's score for an output image even though the image's visual quality does not improve.
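
The README does not spell out how a UAP is trained. Below is a minimal sketch of the general idea under stated assumptions. A toy linear "metric" with a known gradient stands in for a real NR network, and `toy_metric`, `train_uap`, `eps` and `step` are hypothetical names and values, not this repository's code. The key property of a UAP is that one perturbation is shared by every image. Here it is updated by sign-gradient ascent to raise the score and clipped to a small L∞ budget. With a real metric the gradient would come from automatic differentiation of the model instead.

```python
import random


def toy_metric(image, weights):
    """Hypothetical stand-in for a differentiable NR metric: a linear score."""
    return sum(w * p for w, p in zip(weights, image))


def toy_metric_grad(image, weights):
    """Gradient of toy_metric w.r.t. the image (constant for a linear score)."""
    return list(weights)


def sign(v):
    return (v > 0) - (v < 0)


def train_uap(images, weights, eps=0.1, step=0.01, epochs=10):
    """Train ONE perturbation shared by all images with sign-gradient ascent,
    projecting it onto the L-infinity ball of radius eps after every step."""
    delta = [0.0] * len(images[0])
    for _ in range(epochs):
        for image in images:
            # Apply the current perturbation and keep pixels in [0, 1].
            attacked = [min(max(p + d, 0.0), 1.0) for p, d in zip(image, delta)]
            grad = toy_metric_grad(attacked, weights)
            # Ascent step: push the perturbation towards a higher metric score.
            delta = [d + step * sign(g) for d, g in zip(delta, grad)]
            # Projection: keep the perturbation small.
            delta = [min(max(d, -eps), eps) for d in delta]
    return delta


rng = random.Random(0)
images = [[rng.random() for _ in range(16)] for _ in range(50)]  # flattened 4x4 "images"
weights = [1.0, -1.0, 0.5, -0.5] * 4
uap = train_uap(images, weights)


def mean_score(perturbation):
    """Average toy score over the dataset after adding the perturbation."""
    return sum(
        toy_metric([min(max(p + d, 0.0), 1.0) for p, d in zip(img, perturbation)], weights)
        for img in images
    ) / len(images)


clean_mean = mean_score([0.0] * 16)
attacked_mean = mean_score(uap)
print(f"clean {clean_mean:.3f} -> attacked {attacked_mean:.3f}")
```

The same `uap` raises the score of every image it is added to, which is what makes the perturbation "universal" rather than per-image.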

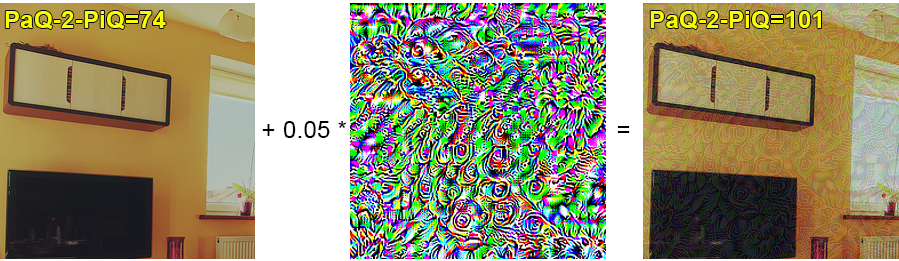

Example of an attack on the NR quality metric PaQ-2-PiQ

- We applied a universal perturbation attack to seven differentiable NR metrics: PaQ-2-PiQ, Linearity, VSFA, MDTVSFA, KonCept512, Nima and SPAQ

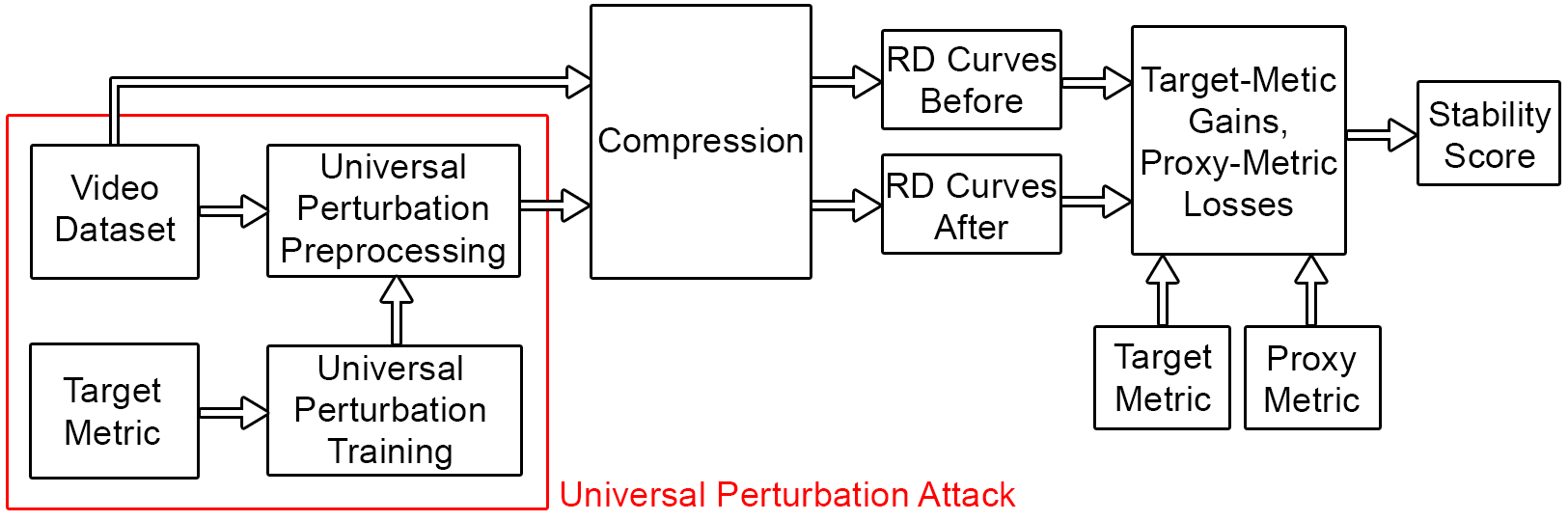

- We applied the trained UAPs to FullHD video frames before compression and proposed a method, based on rate-distortion (RD) curves, for comparing the stability of metrics and identifying those most resistant to UAP attacks

Scheme of the proposed method for assessing target-metric stability
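
The UAPs are trained on 256×256 images but applied to FullHD frames at several amplitude levels. The README does not say how a 256×256 perturbation covers a 1920×1080 frame. The sketch below assumes it is tiled across the frame, scaled by an amplitude factor and clipped to the valid pixel range. `apply_uap` and the amplitude values are hypothetical. Each attacked video would then be compressed at the same bitrates as its clean counterpart.

```python
def apply_uap(frame, uap, amplitude):
    """Add a tiled, scaled universal perturbation to a frame (assumed scheme).

    frame: H x W nested list of pixel values in [0, 1] (one channel for brevity)
    uap: h x w nested list, e.g. a 256x256 perturbation, repeated across the frame
    amplitude: scale factor for the perturbation
    """
    h, w = len(uap), len(uap[0])
    return [
        # Tile by wrapping coordinates, then clip the result back into [0, 1].
        [min(max(px + amplitude * uap[y % h][x % w], 0.0), 1.0) for x, px in enumerate(row)]
        for y, row in enumerate(frame)
    ]


frame = [[0.5] * 6 for _ in range(4)]    # tiny stand-in for a 1920x1080 frame
uap = [[0.1, -0.1], [-0.1, 0.1]]         # tiny stand-in for a 256x256 UAP
for amplitude in (0.5, 1.0, 2.0, 4.0):   # four hypothetical amplitude levels
    attacked = apply_uap(frame, uap, amplitude)
    print(amplitude, attacked[0][:4])
```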

- Universal perturbation training: the training dataset consists of 10,000 images of size 256x256 from the COCO dataset

- Video dataset: 20 FullHD raw videos from the Xiph.org dataset

- Compression: H.264 codec, medium preset, at four bitrates (200 kbps, 1 Mbps, 5 Mbps and 12 Mbps)

- Proxy metric: PSNR
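
PSNR, the proxy metric, follows the standard definition PSNR = 10·log10(MAX² / MSE). A minimal sketch for 8-bit frames stored as flat lists is shown below. Averaging per-frame PSNR over a video is one common convention and is only an assumption here. The README does not say how per-video PSNR is aggregated.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized flattened frames."""
    if len(reference) != len(distorted):
        raise ValueError("frames must have the same number of pixels")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)


def video_psnr(reference_frames, distorted_frames):
    """Mean per-frame PSNR (an assumed aggregation convention)."""
    values = [psnr(r, d) for r, d in zip(reference_frames, distorted_frames)]
    return sum(values) / len(values)


print(psnr([10, 20, 30, 40], [11, 19, 31, 39]))  # MSE = 1, about 48.13 dB
```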

The stability score is the area under the curve of target-metric gain versus proxy-metric loss, taken with the opposite sign and multiplied by 100. For every metric, the area is computed over the range of the proxy-metric axis on which the dependencies of all tested metrics are defined.
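
The score definition above can be sketched as follows. Each metric contributes (proxy loss, target gain) points, for example one per amplitude level. The shared interval is where every curve is defined, and the score is -100 times the area under the piecewise-linear curve on that interval. Linear interpolation and the trapezoid rule are assumptions, since the README does not state the integration method. The function names and the curves in the usage lines are hypothetical.

```python
def interpolate(x, xs, ys):
    """Linear interpolation of the piecewise-linear curve (xs, ys); xs ascending."""
    for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]):
        if x0 <= x <= x1:
            if x1 == x0:
                return y0
            return y0 + (x - x0) / (x1 - x0) * (y1 - y0)
    raise ValueError(f"{x} lies outside the range where the curve is defined")


def common_range(curves):
    """Proxy-loss interval on which every metric's curve is defined."""
    lo = max(min(losses) for losses, _ in curves)
    hi = min(max(losses) for losses, _ in curves)
    if lo >= hi:
        raise ValueError("curves have no overlapping proxy-loss range")
    return lo, hi


def stability_score(losses, gains, lo, hi):
    """-100 * area under gain(loss) on [lo, hi], using the trapezoid rule."""
    points = sorted(zip(losses, gains))
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    # Integrate between the interval ends and every data point inside them.
    knots = [lo] + [x for x in xs if lo < x < hi] + [hi]
    area = 0.0
    for a, b in zip(knots, knots[1:]):
        area += (b - a) * (interpolate(a, xs, ys) + interpolate(b, xs, ys)) / 2
    return -100.0 * area


curve_a = ([0.0, 1.0, 2.0], [0.0, 0.1, 0.2])      # hypothetical (PSNR loss, gain) points
curve_b = ([0.5, 1.5, 2.5], [0.0, -0.02, -0.04])
lo, hi = common_range([curve_a, curve_b])         # (0.5, 2.0)
print(stability_score(*curve_a, lo, hi), stability_score(*curve_b, lo, hi))
```

A metric whose gain rises with the attack (`curve_a`) gets a negative score. A metric whose gain drops below zero (`curve_b`) gets a positive one, matching the convention that a higher stability score is better.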

Calculation of target-metric gain and proxy-metric loss using normalized RD curves for a video at four amplitude levels. The rightmost chart shows the target-metric gain versus proxy-metric loss dependence for these amplitude levels
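
The README does not give the exact formulas behind the normalized RD curves. One plausible reading, in the spirit of BD-PSNR, is sketched below. The gain is the average vertical gap between the attacked and clean target-metric RD curves over log bitrate. The loss is the average PSNR drop computed the same way. Unlike BD-PSNR, which fits a cubic polynomial, this sketch uses the trapezoid rule on the measured points and assumes the target scores are already normalized. All names and numbers are hypothetical. Repeating the computation for each amplitude level gives one (loss, gain) point per level.

```python
import math

BITRATES_KBPS = [200, 1000, 5000, 12000]  # the four bitrates from the setup list


def mean_gap(bitrates, reference, compared):
    """Average of (compared - reference) over the log-bitrate range (trapezoid rule)."""
    xs = [math.log10(b) for b in bitrates]
    diffs = [c - r for r, c in zip(reference, compared)]
    area = sum(
        (x1 - x0) * (d0 + d1) / 2
        for x0, x1, d0, d1 in zip(xs, xs[1:], diffs, diffs[1:])
    )
    return area / (xs[-1] - xs[0])


def gain_and_loss(bitrates, target_clean, target_attacked, psnr_clean, psnr_attacked):
    """Target-metric gain and PSNR loss of one attacked video at one amplitude."""
    gain = mean_gap(bitrates, reference=target_clean, compared=target_attacked)
    loss = mean_gap(bitrates, reference=psnr_attacked, compared=psnr_clean)
    return gain, loss


# Hypothetical RD points (target scores assumed normalized to [0, 1]).
gain, loss = gain_and_loss(
    BITRATES_KBPS,
    target_clean=[0.40, 0.55, 0.70, 0.75],
    target_attacked=[0.50, 0.65, 0.80, 0.85],
    psnr_clean=[30.0, 34.0, 38.0, 40.0],
    psnr_attacked=[29.0, 33.0, 37.0, 39.0],
)
print(gain, loss)  # about 0.1 and 1.0
```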

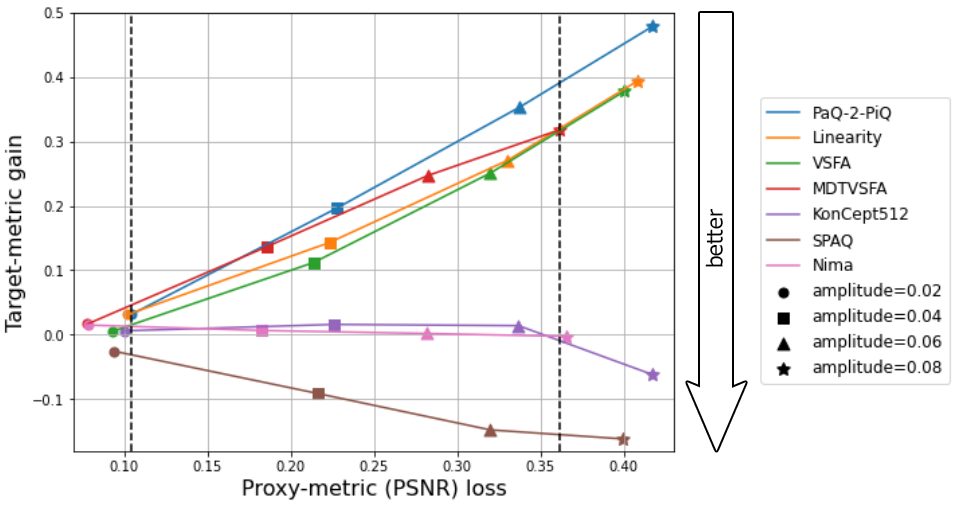

Target-metric gain versus proxy-metric loss dependencies for all tested NR metrics. Dotted lines highlight the region where the dependencies are defined for all metrics. The lower the target-metric gain and the higher the PSNR loss, the more stable the metric

| Target metric | Stability score ↑ | SRCC ↑ (MSU benchmark) | SRCC ↑ (BVQA benchmark) |
|---|---|---|---|
| PaQ-2-PiQ | -5.3 | 0.87 | 0.61 |
| Linearity | -4.2 | 0.91 | - |
| VSFA | -3.8 | 0.90 | 0.75 |
| MDTVSFA | -4.8 | 0.93 | 0.78 |
| KonCept512 | -0.3 | 0.84 | 0.73 |
| SPAQ | 2.6 | 0.85 | - |
| Nima | -0.1 | 0.88 | - |

Stability scores and Spearman rank-order correlation coefficients (SRCC) on two benchmarks for all tested metrics. Our rating shows which metrics are stable and which can easily be inflated by the attack

We recommend the proposed method as an additional verification of metric reliability to complement traditional subjective tests and benchmarks.

If you use this code for your research, please cite our paper.

    @inproceedings{Shumitskaya_2022_BMVC,
      author = {Ekaterina Shumitskaya and Anastasia Antsiferova and Dmitriy S Vatolin},
      title = {Universal Perturbation Attack on Differentiable No-Reference Image- and Video-Quality Metrics},
      booktitle = {33rd British Machine Vision Conference 2022, {BMVC} 2022, London, UK, November 21-24, 2022},
      publisher = {{BMVA} Press},
      year = {2022},
      url = {https://bmvc2022.mpi-inf.mpg.de/0790.pdf}
    }