PerfSpect is a system performance characterization tool, based on Linux perf, that targets Intel microarchitectures.

The tool has two parts:

- perf collection, which collects the underlying PMU (Performance Monitoring Unit) counters

- post processing, which generates CSV output of performance metrics

- Linux perf

- Python3+

- Install docker

- Ensure docker commands execute without sudo (for example, docker run hello-world runs successfully)

Execute build.sh

./build.sh

./non_container_build.sh

On a successful build, binaries will be created in the "dist" folder.

(sudo) ./perf-collect (options) -- Some options can be used only with root privileges

Options:

-h, --help (show this help message and exit)

-v, --version (display version info)

-e EVENTFILE, --eventfile EVENTFILE (Event file containing events to collect, default=events/<architecture specific file>)

-i INTERVAL, --interval INTERVAL (interval in seconds for time series dump, default=1)

-m MUXINTERVAL, --muxinterval MUXINTERVAL (event mux interval for events in ms, default=0 i.e., will use the system default. Requires root privileges)

-o OUTCSV, --outcsv OUTCSV (perf stat output in csv format, default=results/perfstat.csv)

-a APP, --app APP (Application to run with perf-collect, perf collection ends after workload completion)

-p PID, --pid PID (perf-collect on selected PID(s))

-t TIMEOUT, --timeout TIMEOUT (perf event collection time in seconds)

--percore (Enable per core event collection)

--nogroups (Disable perf event grouping, events are grouped by default as in the event file)

--dryrun (Test if Performance Monitoring Counters are in use, and collect stats for 10 seconds)

--metadata (collect system info only, does not run perf)

-csp CLOUD, --cloud CLOUD (Name of the Cloud Service Provider, e.g. AWS, if collecting on cloud instances)

-ct CLOUDTYPE, --cloudtype CLOUDTYPE (Instance type: VM or BM, depending on whether the instance is a virtual machine or bare metal)

- sudo ./perf-collect (collect PMU counters using predefined architecture specific event file until collection is terminated)

- sudo ./perf-collect -m 10 -t 30 (sets event multiplexing interval to 10ms and collects PMU counters for 30 seconds using default architecture specific event file)

- sudo ./perf-collect -a "myapp.sh myparameter" (collect perf for myapp.sh)

- sudo ./perf-collect --dryrun (checks PMU usage, and collects PMU counters for 10 seconds using default architecture specific event file)

- sudo ./perf-collect --metadata (collect system info and PMU event info without running perf, uses default outputfile if -o option is not used)

- Intel CPUs (until Cascadelake) have 3 fixed PMUs (cpu-cycles, ref-cycles, instructions) and 4 programmable PMUs. The events are grouped in event files with this assumption. However, some of the counters may not be available on some CPUs. You can check the correctness of the event file with --dryrun and inspect the output for anomalies. Typically, the output will have "not counted", "unsupported" or zero values for cpu-cycles if the number of available counters is less than the number of events in a group.

- Globally pinned events can limit the number of counters available for perf event groups. On x86 systems, the NMI watchdog pins a fixed counter by default. The NMI watchdog is disabled during perf collection if run with sudo. If the NMI watchdog can't be disabled, event grouping is forcefully disabled to let the perf driver handle event multiplexing.
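The two notes above can also be checked by hand. The sketch below is illustrative only and is not part of PerfSpect: `nmi_watchdog_enabled` reads the standard Linux sysctl file /proc/sys/kernel/nmi_watchdog, and `find_suspect_lines` scans collected output for the marker strings mentioned above. The function names and the plain substring matching are assumptions, and the exact text perf prints may differ between perf versions.

```python
from pathlib import Path

# Marker strings taken from the note above; "not supported" is added as an
# assumption because some perf versions may use that spelling.
SUSPECT_MARKERS = ("not counted", "unsupported", "not supported")


def nmi_watchdog_enabled(sysctl_path="/proc/sys/kernel/nmi_watchdog"):
    """Return True if the NMI watchdog is on, False if off, None if unknown."""
    try:
        value = Path(sysctl_path).read_text().strip()
    except OSError:
        return None  # file missing or unreadable (e.g. non-Linux system)
    return value == "1"


def find_suspect_lines(lines):
    """Return (line_number, text) pairs for lines containing a suspect marker."""
    hits = []
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        if any(marker in lowered for marker in SUSPECT_MARKERS):
            hits.append((number, line.rstrip("\n")))
    return hits


if __name__ == "__main__":
    print("NMI watchdog enabled:", nmi_watchdog_enabled())
    # Default perf-collect output location; adjust if -o was used.
    output = Path("results/perfstat.csv")
    if output.exists():
        with output.open() as handle:
            for number, text in find_suspect_lines(handle):
                print(f"line {number}: {text}")
```

Running it after a --dryrun collection gives a quick list of lines worth inspecting; an empty list does not prove the event file is correct, since zero values for cpu-cycles are not detected by this simple scan.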

./perf-postprocess (options)

Options:

-h, --help (show this help message and exit)

-v, --version (display version info)

-m METRICFILE, --metricfile METRICFILE (formula file, default=events/metric.json)

-o OUTFILE, --outcsv OUTFILE (perf stat output file, csv or xlsx format is supported, default=results/metric_out.csv)

--keepall (keep all intermediate csv files)

--persocket (generate persocket metrics)

--percore (generate percore metrics)

--epoch (time series in epoch format, default is sample count)

required arguments:

-r RAWFILE, --rawfile RAWFILE (Raw CSV output from perf-collect)

./perf-postprocess -r results/perfstat.csv (post processes perfstat.csv and creates metric_out.csv, metric_out.average.csv, metric_out.raw.csv)
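As a rough illustration of the example above, the following sketch builds the perf-postprocess command line and lists the files it is expected to produce. It is a helper written for this README, not part of PerfSpect: the function names are hypothetical, and the output naming for a custom -o value is inferred from the default names (metric_out.csv, metric_out.average.csv, metric_out.raw.csv) rather than confirmed.

```python
from pathlib import PurePosixPath


def postprocess_command(rawfile, outcsv=None):
    """Build the argument list for ./perf-postprocess using the -r and -o flags."""
    command = ["./perf-postprocess", "-r", rawfile]
    if outcsv is not None:
        command += ["-o", outcsv]
    return command


def expected_outputs(outcsv="results/metric_out.csv"):
    """List the CSV files expected after post-processing (inferred naming)."""
    path = PurePosixPath(outcsv)
    base = str(path.parent / path.stem)  # e.g. results/metric_out
    return [
        str(path),
        base + ".average.csv",
        base + ".raw.csv",
    ]
```

The list returned by `postprocess_command` can be passed to `subprocess.run(..., check=True)`, and `expected_outputs` can then be used to verify that the files exist.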

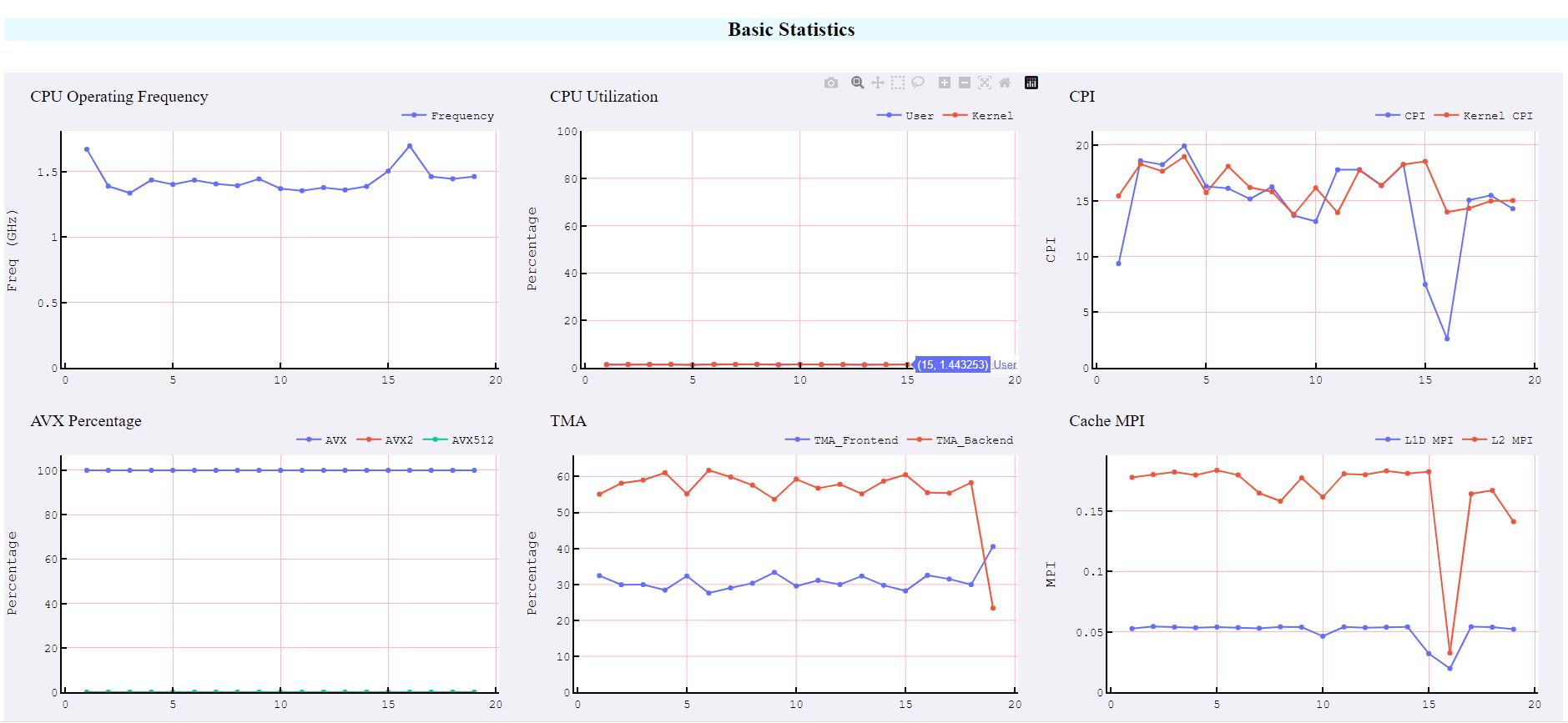

- metric_out.csv : Time series dump of the metrics. The metrics are defined in events/metric.json

- metric_out.average.csv: Average of metrics over the collection period

- metric_out.raw.csv: csv file with raw events normalized per second

- Socket/core level metrics: Additional csv files .socket.csv/.core.csv will be generated. Socket/core level data will be added as new sheets if excel output is chosen

- The tool can collect only the counters supported by the underlying Linux perf version.

- Current version supports Intel Icelake, Cascadelake, Skylake and Broadwell microarchitectures only.

- Perf collection overhead will increase with an increase in the number of counters and/or the dump interval. Use the right perf multiplexing interval (check perf-collection.py Notes for more details) to reduce overhead.

- If you run into locale issues such as

UnicodeDecodeError: 'ascii' codec can't decode byte 0xc2 in position 4519: ordinal not in range(128), the locale most likely needs to be set appropriately. You could also try running the post-process step with LC_ALL=C.UTF-8 LANG=C.UTF-8 ./perf-postprocess -r result.csv
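The same workaround can be applied when perf-postprocess is launched from a script. This is a minimal sketch, assuming the binary is in the current directory and that the C.UTF-8 locale exists on the system; `utf8_env` and `run_postprocess` are hypothetical helper names, not part of PerfSpect.

```python
import os
import subprocess


def utf8_env(base_env=None):
    """Return a copy of the environment with LC_ALL and LANG set to C.UTF-8."""
    env = dict(os.environ if base_env is None else base_env)
    env["LC_ALL"] = "C.UTF-8"
    env["LANG"] = "C.UTF-8"
    return env


def run_postprocess(rawfile):
    """Run ./perf-postprocess on rawfile with a UTF-8 locale; raise on failure."""
    return subprocess.run(
        ["./perf-postprocess", "-r", rawfile],
        env=utf8_env(),
        check=True,
    )
```

Copying the environment keeps PATH and other variables intact while overriding only the two locale settings.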

Special thanks to Vaishali Karanth for her fantastic contributions to the project.

Create a pull request on github.com/intel/PerfSpect with your patch. Please make sure your patch builds without errors. A maintainer will contact you if there are questions or concerns.