Kangxue Yin, Hui Huang, Daniel Cohen-Or, Hao Zhang.

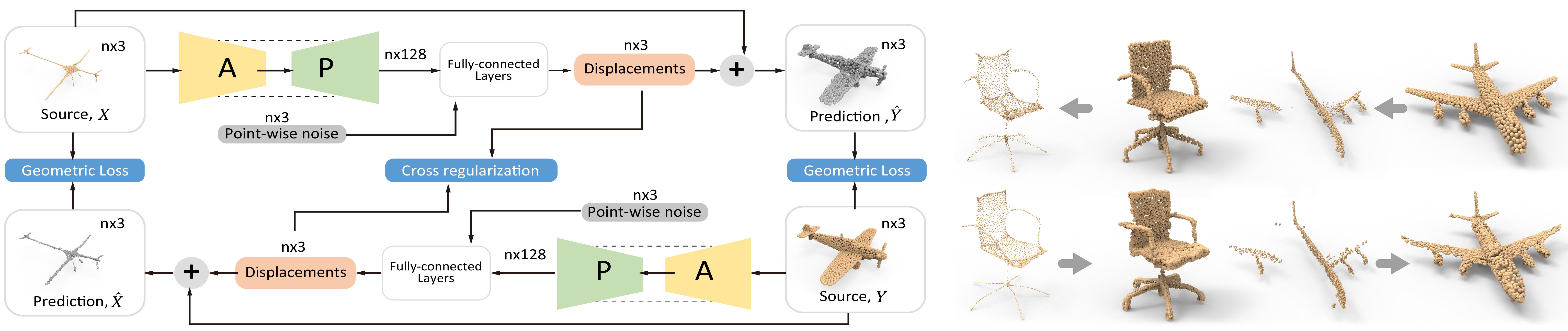

P2P-NET is a general-purpose deep neural network that learns geometric transformations between point-based shape representations from two domains, e.g., meso-skeletons and surfaces, or partial and complete scans. Its architecture is a bi-directional point displacement network. It transforms a source point set into a target point set with the same cardinality, and vice versa, by applying point-wise displacement vectors learned from data. P2P-NET is trained on paired shapes from the source and target domains, but it does not rely on point-to-point correspondences between the source and target point sets. More details are in the paper.
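To make the point-displacement idea concrete, here is a minimal NumPy sketch. It is not the repository's implementation. A predicted point set is the source points plus one displacement vector per point, so its cardinality cannot change. It is compared to the target with a symmetric Chamfer distance, which is one common correspondence-free loss and serves here only as a stand-in for the loss described in the paper. The names `apply_displacement` and `chamfer_distance` are illustrative.

```python
import numpy as np


def apply_displacement(points, displacements):
    """Move each point by its own displacement vector.

    points, displacements: arrays of shape (N, 3). The output also has N points,
    so the transformation preserves cardinality.
    """
    if points.shape != displacements.shape:
        raise ValueError("need exactly one displacement vector per point")
    return points + displacements


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3).

    Stand-in loss (ASSUMPTION, not the paper's exact loss): every point is
    matched to its nearest neighbour in the other set, so no point-to-point
    correspondences or consistent point ordering are required.
    """
    # Pairwise squared Euclidean distances, shape (N, M)
    d = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    # Average nearest-neighbour distance in both directions
    return d.min(axis=1).mean() + d.min(axis=0).mean()
```

Because each predicted point is tied to one source point, the model only has to learn where to move each point. The Chamfer term lets the target points come in any order. A bi-directional setup would use two such displacement fields, one for A to B and one for B to A.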

- Linux (tested under Ubuntu 16.04)
- Python (tested under 2.7)
- TensorFlow (tested under 1.3.0-GPU)
- numpy, h5py

The code is built on top of PointNet++. Before running the code, please compile the customized TensorFlow operators of PointNet++ in the folder `pointnet_plusplus/tf_ops`.

If you are in China, you can also download the datasets from Weiyun: HDF5, Raw.
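After downloading, you can quickly inspect an HDF5 file with h5py, which is already a listed dependency. The helper below is a hypothetical sketch and not part of the repository. It makes no assumption about the dataset names stored in the file. It walks the file and reports the shape and dtype of every dataset it finds.

```python
import h5py


def describe_hdf5(path):
    """Return {dataset_name: (shape, dtype_string)} for every dataset in an HDF5 file."""
    summary = {}

    def visit(name, obj):
        # visititems calls this for every group and dataset; keep only datasets.
        # Returning None tells h5py to continue the traversal.
        if isinstance(obj, h5py.Dataset):
            summary[name] = (obj.shape, str(obj.dtype))

    with h5py.File(path, "r") as f:
        f.visititems(visit)
    return summary
```

For example, `describe_hdf5('data_hdf5/airplane_train.hdf5')` lists whatever arrays the training file contains.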

An example of training P2P-NET (learning transformations between point-based skeletons and point-based surfaces on the airplane dataset):

```
python -u run.py --mode=train --train_hdf5='data_hdf5/airplane_train.hdf5' --test_hdf5='data_hdf5/airplane_test.hdf5' --domain_A=skeleton --domain_B=surface --gpu=0
```

Test the model:

```
python -u run.py --mode=test --train_hdf5='data_hdf5/airplane_train.hdf5' --test_hdf5='data_hdf5/airplane_test.hdf5' --domain_A=skeleton --domain_B=surface --gpu=0 --checkpoint='output_airplane_skeleton-surface/trained_models/epoch_200.ckpt'
```
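When scripting several runs, a small helper can build these command lines. The sketch below is hypothetical and not part of the repository. It reuses only the flags shown in the two commands. It also assumes that the checkpoint path follows the same `output_<category>_<domain_A>-<domain_B>/trained_models/epoch_<N>.ckpt` pattern as the example above. That pattern is inferred from a single example, so check it against your actual output folder. Values other than the airplane example only work if the matching HDF5 files exist.

```python
import shlex


def p2pnet_command(mode, category, domain_a, domain_b, gpu=0, epoch=200):
    """Build the argv list for run.py, mirroring the README's example flags."""
    if mode not in ("train", "test"):
        raise ValueError("mode must be 'train' or 'test'")
    args = [
        "python", "-u", "run.py",
        "--mode=" + mode,
        "--train_hdf5=data_hdf5/%s_train.hdf5" % category,
        "--test_hdf5=data_hdf5/%s_test.hdf5" % category,
        "--domain_A=" + domain_a,
        "--domain_B=" + domain_b,
        "--gpu=%d" % gpu,
    ]
    if mode == "test":
        # ASSUMPTION: checkpoint location inferred from the README's single example
        ckpt = "output_%s_%s-%s/trained_models/epoch_%d.ckpt" % (
            category, domain_a, domain_b, epoch)
        args.append("--checkpoint=" + ckpt)
    return args


def as_shell(args):
    """Render an argv list as a copy-pasteable shell command."""
    return " ".join(shlex.quote(a) for a in args)
```

The list form can be passed directly to `subprocess.run(p2pnet_command("train", "airplane", "skeleton", "surface"), check=True)`. The quotes around the paths in the shell commands above are removed by the shell, so the argv list does not need them.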

If you find our work useful in your research, please consider citing:

```
@article{yin2018p2pnet,
  author  = {Kangxue Yin and Hui Huang and Daniel Cohen-Or and Hao Zhang},
  title   = {P2P-NET: Bidirectional Point Displacement Net for Shape Transform},
  journal = {ACM Transactions on Graphics (Special Issue of SIGGRAPH)},
  volume  = {37},
  number  = {4},
  pages   = {152:1--152:13},
  year    = {2018}
}
```

The code is built on top of PointNet++. We thank its authors for their prior contribution.