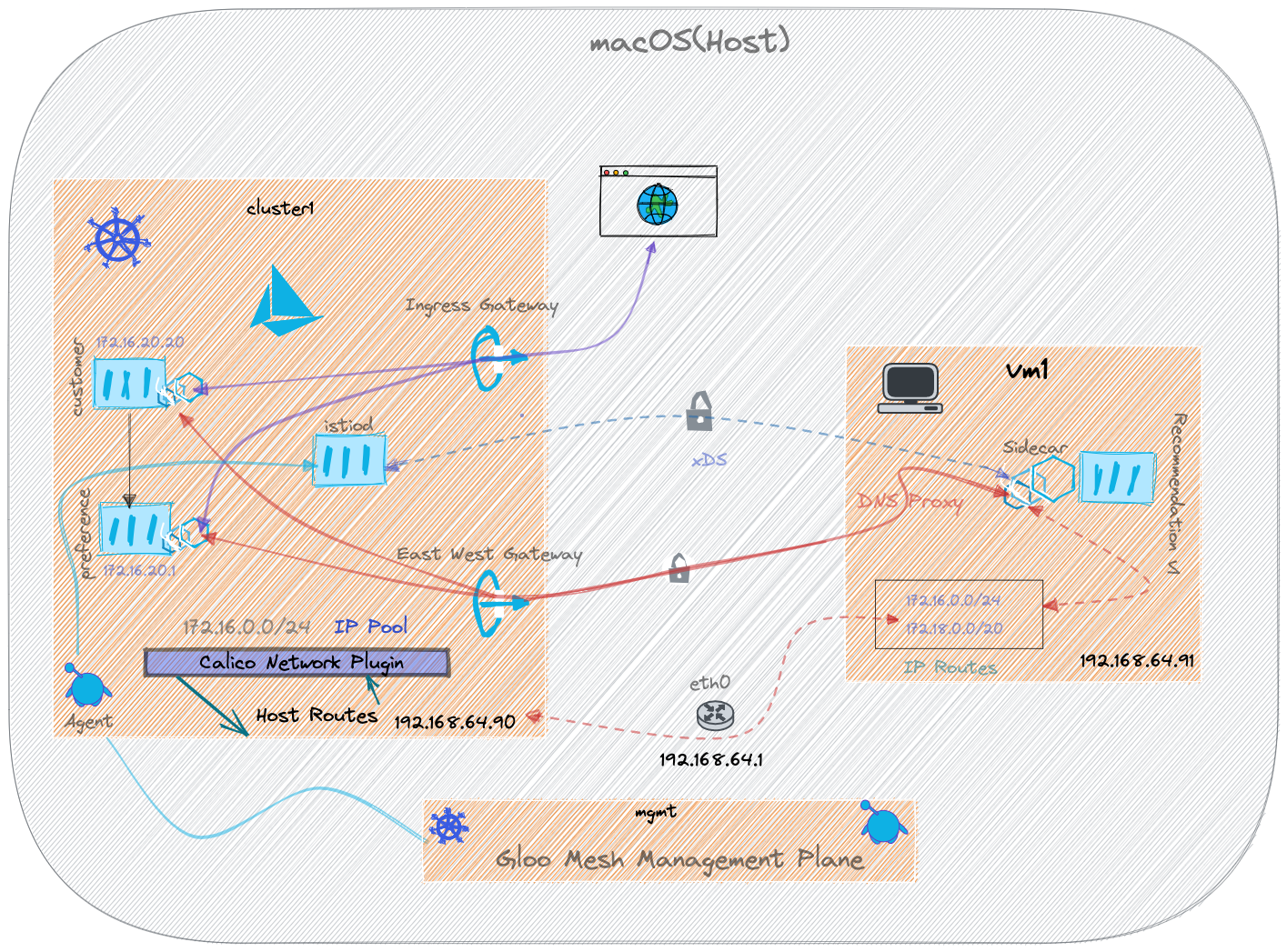

A simple microservices demo that shows how to onboard VM workloads with Istio and make them work with Kubernetes services. The demo also shows how to use Gloo Mesh to add features such as Traffic Policy and Access Policy that span Kubernetes and VM workloads.

Check out the HTML Documentation for a detailed DIY guide.

git clone https://github.com/kameshsampath/gloo-mesh-vm-demo

cd gloo-mesh-vm-demo

direnv allow .

The Makefile in the $PROJECT_HOME helps to perform the various setup tasks,

make help

help: print this help message

setup-ansible: setup ansible environment

clean-up: cleans the setup and environment

create-vms: create the multipass vms

create-kubernetes-clusters: Installs k3s on the multipass vms

deploy-base: Prepare the vm with required packages and tools

deploy-istio: Deploy Istio on to workload cluster

deploy-gloo: Deploy Gloo Mesh on to management and workload clusters

deploy-workload: Deploy workload recommendation service on vm

The demo uses Ansible to set up the environment. Run the following command to install the Ansible modules, extra collections and roles that will be used by the various tasks,

make setup-ansible

Create the multipass VMs,

make create-vms

The k3s cluster will be a single-node cluster running in a multipass VM. We will configure it with the following flags,

--cluster-cidr=172.16.0.0/24: allows us to create 65 – 110 Pods on this node

--service-cidr=172.18.0.0/20: allows us to create 4096 services

--disable=traefik: disables the traefik deployment

For more information on how to calculate the number of pods and services per CIDR range, check the GKE doc.
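As a rough sanity check of the numbers above, the size of a CIDR block follows directly from its prefix length. The sketch below uses a hypothetical helper, `cidr_size`, that is not part of the demo repository. The pod figure is lower than the raw address count because, as far as the GKE guidance goes, a node range is sized at roughly twice the maximum number of pods, so a /24 maps to at most 110 pods.

```shell
# Hypothetical helper (not part of the demo): number of IPv4 addresses
# in a CIDR block with the given prefix length.
cidr_size() {
  echo $(( 1 << (32 - $1) ))
}

cidr_size 24   # prefix of --cluster-cidr=172.16.0.0/24
cidr_size 20   # prefix of --service-cidr=172.18.0.0/20
```

The second call gives the 4096 service addresses mentioned for the service CIDR.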

The following command will create the Kubernetes (k3s) cluster and configure it with the Calico plugin,

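make create-kubernetes-clusters

Deploy Gloo Mesh on to the management and workload clusters,

make deploy-gloo

Deploy Istio on to the workload cluster,

make deploy-istio

Add the Helm repository that hosts the demo applications,

helm repo add istio-demo-apps https://github.com/kameshsampath/istio-demo-apps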

helm repo update

Label the default namespace with the Istio revision so that its workloads get sidecars injected,

kubectl --context="$CLUSTER1" label ns default istio.io/rev=1-11-5

Deploy Customer,

helm install --kube-context="$CLUSTER1" \
  customer istio-demo-apps/customer \
  --set enableIstioGateway="true"

Deploy Preference,

helm install --kube-context="$CLUSTER1" \
  preference istio-demo-apps/preference

Call the service to test,

export INGRESS_GATEWAY_IP=$(kubectl --context ${CLUSTER1} -n istio-gateways get svc ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].*}')

export SVC_URL="${INGRESS_GATEWAY_IP}/customer"

curl $SVC_URL

The command should show an output like,

customer => preference => Service host 'http://recommendation:8080' not known.

Install some essential packages on the VMs,

make deploy-base

Deploy the recommendation service workload on the VM,

make deploy-workload

Call the service again,

curl $SVC_URL

The command should show an output like,

customer => preference => recommendation v1 from 'vm1': 2
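If you want to script this check instead of reading the output by eye, the two responses shown in this guide can be told apart with a simple pattern match. This is a minimal sketch; `served_from_vm` is a hypothetical helper name, and the sample strings are copied from the outputs above.

```shell
# Hypothetical helper: succeeds only when the response shows the
# recommendation service answering from vm1.
served_from_vm() {
  case "$1" in
    *"recommendation v1 from 'vm1'"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Sample responses copied from the outputs shown in this guide.
before="customer => preference => Service host 'http://recommendation:8080' not known."
after="customer => preference => recommendation v1 from 'vm1': 2"

served_from_vm "$before" || echo "recommendation not reachable yet"
served_from_vm "$after" && echo "recommendation served from vm1"
```

Against the live setup, the same check could be run as `served_from_vm "$(curl -s "$SVC_URL")"`.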

Shell into the vm1,

multipass exec vm1 bash

Try calling the customer service from inside the VM,

curl --connect-timeout 3 customer.default.svc.cluster.local:8080

You might not get a response to the command, and when checking the sidecar logs on vm1 you should see something like:

[2022-02-09T13:34:21.473Z] "GET / HTTP/1.1" 503 UF,URX upstream_reset_before_response_started{connection_failure} - "-" 0 91 24255 - "-" "curl/7.68.0" "5ab981ed-c95f-4730-8732-2fe6fd7f6208" "customer.default.svc.cluster.local:8080" "172.16.0.15:8080" outbound|8080||customer.default.svc.cluster.local - 172.18.2.58:8080 192.168.205.6:34832 - default

Let's fix it by adding routes to our pods and services,

export CLUSTER1_IP=$(kubectl get nodes -owide --no-headers | awk 'NR==1{print $6}')

sudo ip route add 172.16.0.0/24 via $CLUSTER1_IP

sudo ip route add 172.18.0.0/20 via $CLUSTER1_IP

Now run the following command from vm1,

curl --connect-timeout 3 customer.default.svc.cluster.local:8080

The command should show an output like,

customer => preference => recommendation v1 from 'vm1': 2
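To see why routes for the pod and service ranges are what the VM was missing, it helps to check the addresses from the sidecar log line against the CIDRs the cluster was created with. In that log, 172.16.0.15 appears to be the customer pod and 172.18.2.58 the service address, while 192.168.205.6 looks like the VM itself. The sketch below uses two hypothetical helpers, `ip_to_int` and `ip_in_cidr`, written in plain shell arithmetic; they are not part of the demo repository.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeeds when address $1 falls inside CIDR block $2.
ip_in_cidr() {
  net=${2%/*}        # network part, e.g. 172.18.0.0
  bits=${2#*/}       # prefix length, e.g. 20
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Addresses taken from the sidecar log line, ranges from the k3s flags.
ip_in_cidr 172.16.0.15 172.16.0.0/24 && echo "pod address is in the cluster CIDR"
ip_in_cidr 172.18.2.58 172.18.0.0/20 && echo "service address is in the service CIDR"
ip_in_cidr 192.168.205.6 172.16.0.0/24 || echo "VM address is outside the cluster CIDR"
```

Since the VM sits outside both ranges, it has no way to reach them until routes via the cluster node are added.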

Clean up the setup and environment,

make clean-up