This project explores deep learning models for predicting the binding affinity between a small molecule (i.e. a drug) and a protein. The models are fitted on the Kiba dataset.

The Kiba dataset is made up of drug-protein pairs labelled with an experimentally measured binding affinity (numerical). The Kiba dataset has 2 068 unique drugs, 229 proteins and 98 545 drug-protein pairs. Thus, each drug (and protein) occurs in multiple different pairs. This suggests three different ways of partitioning the data.

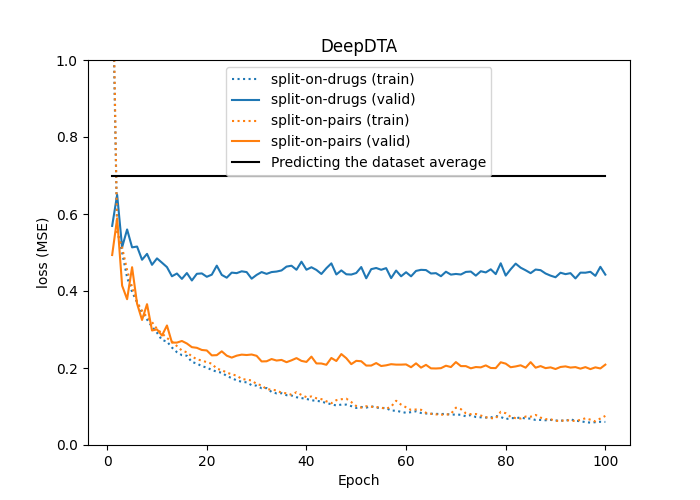

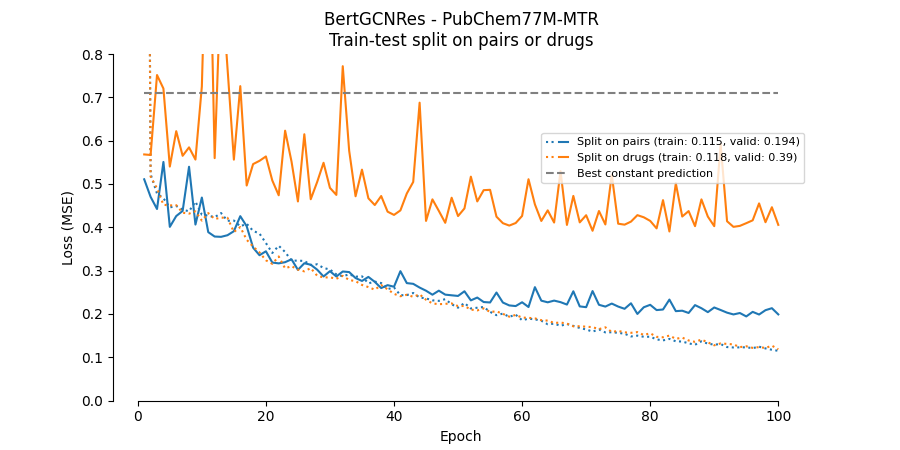

- Split on pairs, where each pair is considered unique, and the data is split by pairs (this is done in DeepDTA and BERT-GCN). This results in the same drugs (and proteins) occurring in both partitions.

- Split on drugs, where the data is split on the unique drugs so that only proteins occur in both partitions, not drugs.

- Split on proteins, where the data is split on the unique proteins so that only drugs occur in both partitions, not proteins.

Splitting on both unique drugs and unique proteins is not feasible since it requires excluding all pairs made up of a drug from one partition and a protein from the other (thus losing a lot of data).
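The three strategies above can be sketched in a few lines of plain Python. This is a minimal illustration only: the function name `split_pairs`, the `(drug, protein, affinity)` tuple layout and the toy data are assumptions made for the example and are not taken from the training scripts.

```python
import random


def split_pairs(pairs, strategy="pairs", test_fraction=0.2, seed=0):
    """Split (drug, protein, affinity) tuples into train and test lists.

    strategy: "pairs" (strategy 1), "drugs" (strategy 2) or "proteins" (strategy 3).
    """
    rng = random.Random(seed)
    if strategy == "pairs":
        # Every pair is treated as unique, so drugs and proteins leak across partitions.
        shuffled = pairs[:]
        rng.shuffle(shuffled)
        n_test = int(len(shuffled) * test_fraction)
        return shuffled[n_test:], shuffled[:n_test]

    # Position of the entity to hold out: 0 = drug, 1 = protein.
    key = {"drugs": 0, "proteins": 1}[strategy]
    entities = sorted({p[key] for p in pairs})
    rng.shuffle(entities)
    n_test = max(1, int(len(entities) * test_fraction))
    held_out = set(entities[:n_test])
    train = [p for p in pairs if p[key] not in held_out]
    test = [p for p in pairs if p[key] in held_out]
    return train, test


# Toy data: 5 drugs x 4 proteins with made-up affinities.
toy = [(f"d{i}", f"p{j}", float(i + j)) for i in range(5) for j in range(4)]
train, test = split_pairs(toy, strategy="drugs")
train_drugs = {d for d, _, _ in train}
test_drugs = {d for d, _, _ in test}
print("drugs shared between partitions:", train_drugs & test_drugs)
```

With `strategy="drugs"` no drug appears in both partitions, while every protein can still occur on both sides.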

Which strategy to use depends on how the model is intended to be used. With strategy 1), the measured performance is only relevant to drugs and proteins already present in the training set. This seems unrealistic for practical applications, for example when predicting the binding affinity of a new drug. With strategy 2) (or 3)), the performance is relevant to situations where the drug (or protein) was not present in the training set, but the protein (or drug) was. This seems more realistic.

The performance depends strongly on the chosen split strategy. See, for example, the difference between DeepDTA models fitted and evaluated on data split by strategies 1) and 2).

pip install -r requirements.txt

In the working directory, make the directory data, and then the subdirectories raw and processed in data.

mkdir -p data/raw

mkdir -p data/processed

The DeepDTA model is presented in DeepDTA: Deep Drug-Target Binding Affinity Prediction. The original code is available here.

python train_deepDTA.py

The Hybrid model combines a GCN branch for drug encoding and an embedding (DeepDTA-style) branch for protein encoding. In the drug branch, the drug SMILES strings are first represented as graphs (nodes and edges), followed by three layers of graph convolutions. The resulting drug encodings are combined with the protein encodings in a common regression head. Original code here.

python train_Hybrid.py

The BertDTA model combines BERT embeddings with 1D convolutional branches as in DeepDTA. Original code here.

python train_BertDTA.py

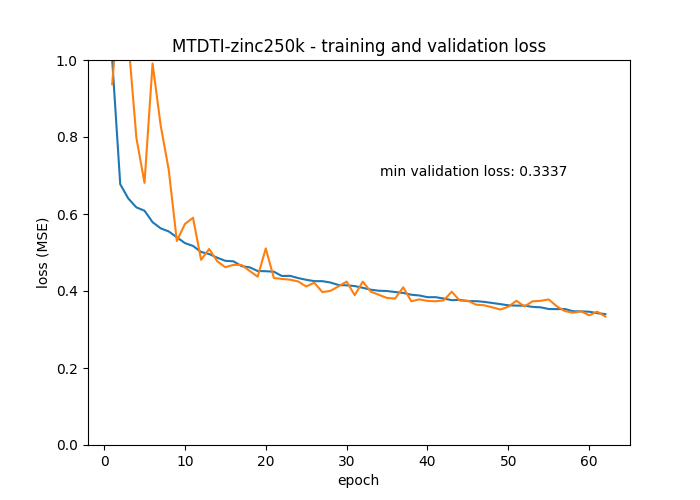

The model has roughly the same architecture as DeepDTA but differs in that a pre-trained BERT encoder encodes the drug SMILES.

The original TensorFlow implementation is available here. The implementation here differs in that it relies on the pre-trained drug encoder DeepChem/ChemBERTa-77M-MTR, which, after initial MLM pre-training, has been fine-tuned with multi-task regression. The output of ChemBERTa-77M-MTR is 386 elements per token and not 784 as in the original model. For the current DTI task, most of the drug encoder (ChemBERTa) is frozen, and only the final pooler layer is newly initialized and trained.

In the original MT-DTI paper the model was trained for 1000 epochs. However, the training takes a long time on a GPU due to the BERT drug encoder, so I only trained for 100 epochs.

python train_MTDTI.py

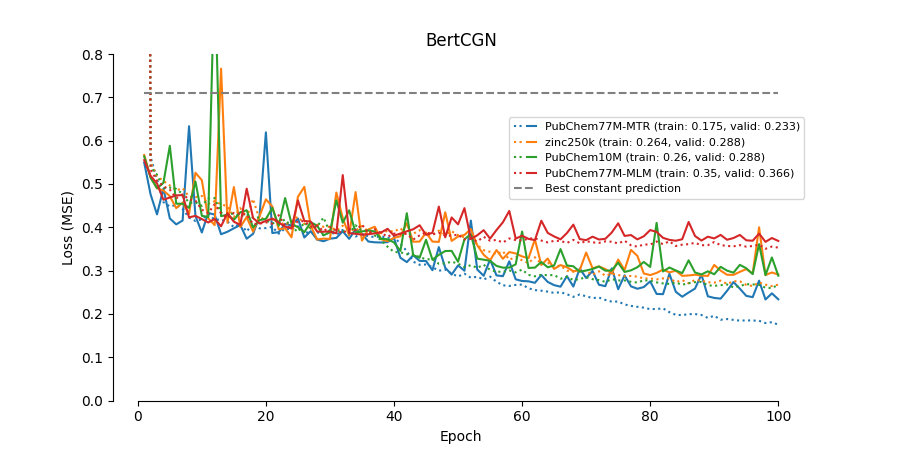

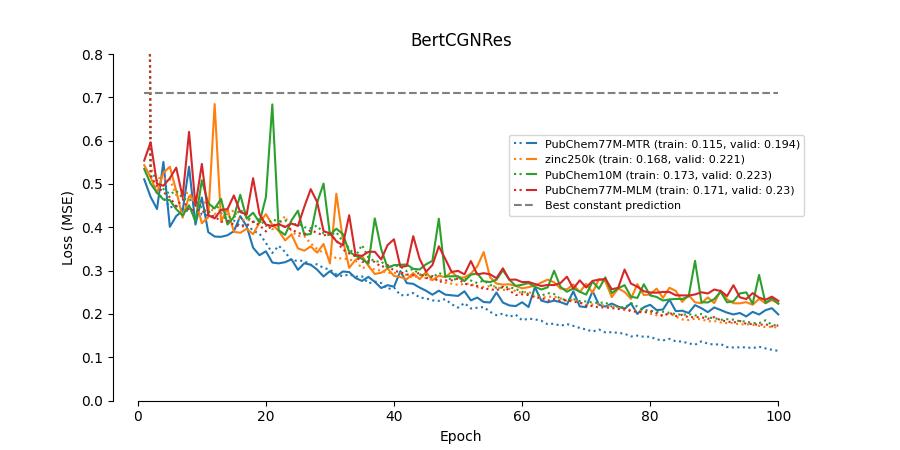

- Try BERT pre-trained on the 10M PubChem dataset ("seyonec/PubChem10M_SMILES_*" at https://huggingface.co/seyonec).

- Replaced ChemBERTa_zinc250k_v2_40k with the 77M PubChem MTR model DeepChem/ChemBERTa-77M-MTR.

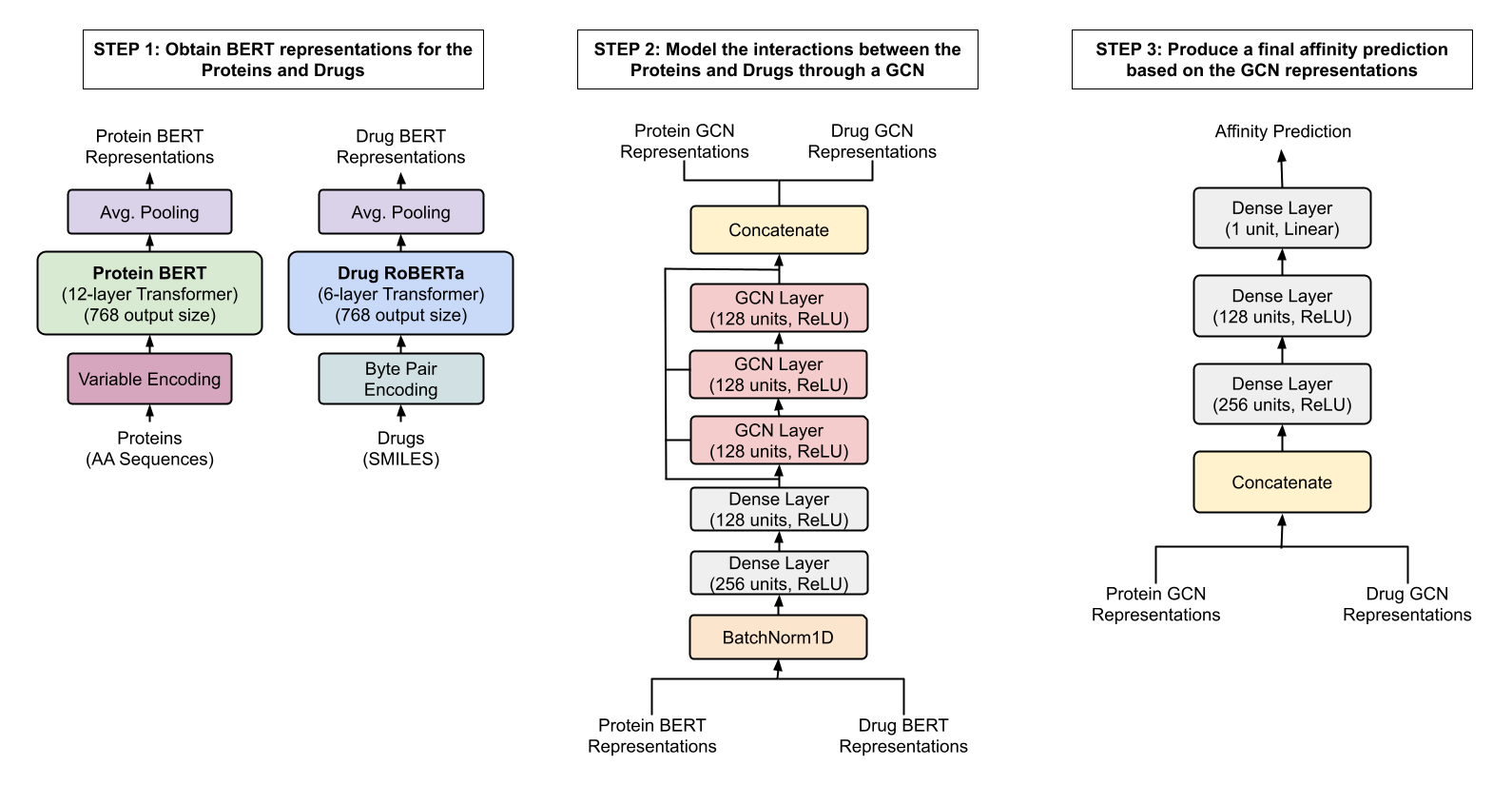

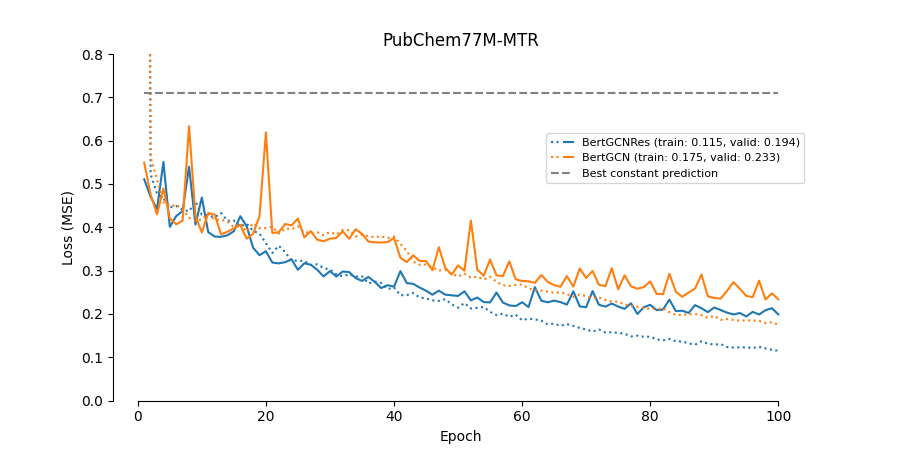

The paper Modelling Drug-Target Binding Affinity using a BERT based Graph Neural network by Lennox, Robertson and Devereux presents a graph convolutional neural network (GCN) trained to predict the binding affinity of drugs to proteins. Their model takes as input BERT-embedded protein sequences and drug molecules. This combination of BERT embeddings and a graph network is relatively novel, and the model achieves (at publication) state-of-the-art results. However, the paper leaves many technical details unspecified, and no code is provided. Thus, the goal is to implement the GCN and replicate the results from the paper.

A GCN takes nodes and edges as inputs. The paper describes embedding both proteins and drugs using pre-trained BERT models where each token (node) is embedded as a 784-long vector. This results in two issues that are not mentioned in the paper.

The primary protein sequence is tokenized into amino acids, and there are trivial edges between neighbouring tokens (nodes). However, a protein's 3D structure puts some amino acids very close to each other, and edges between those should also be included. Incorporating these edges into the dataset requires knowledge of the proteins' 3D structure (not provided in the Kiba or Davis datasets). The authors do not state whether or where they added 3D-dependent edges. Here, only edges to neighbours in the primary sequence are included, making the protein graph convolutions equivalent to 1-D convolutions with a kernel size of three.
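The link between a chain graph and a kernel of size three can be checked on a toy example. The sketch below is an illustration under simplifying assumptions, not the code used in the training scripts: the helper names are made up, features are scalars, and neighbours are summed without weights. A real GCN layer additionally normalizes by node degree and shares one weight matrix across neighbours, so the correspondence is about the receptive field rather than identical arithmetic. The `[sources, targets]` layout follows the PyTorch Geometric `edge_index` convention.

```python
def chain_edge_index(num_nodes):
    """Return [sources, targets] for a bidirectional chain 0-1-2-...-(n-1)."""
    sources, targets = [], []
    for i in range(num_nodes - 1):
        sources += [i, i + 1]
        targets += [i + 1, i]
    return [sources, targets]


def aggregate_neighbours(features, edge_index):
    """Sum each node's own feature and the features of its neighbours."""
    out = list(features)  # self-loop contribution
    for src, dst in zip(*edge_index):
        out[dst] += features[src]
    return out


def conv1d_sum_kernel3(features):
    """1-D convolution with an all-ones kernel of size three and zero padding."""
    padded = [0.0] + list(features) + [0.0]
    return [padded[i] + padded[i + 1] + padded[i + 2] for i in range(len(features))]


x = [1.0, 2.0, 4.0, 8.0]  # one scalar feature per amino acid (toy values)
edges = chain_edge_index(len(x))
print(aggregate_neighbours(x, edges) == conv1d_sum_kernel3(x))
```

Each node only ever sees itself and its two sequence neighbours, which is the same window a size-three kernel covers.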

The drugs are tokenized from their Simplified Molecular-Input Line-Entry System (SMILES) strings with a Byte-Pair Encoder (BPE). A BPE combines characters to produce a fixed-size vocabulary where frequently occurring subsequences of characters are merged into tokens. The particular BPE (probably) used in the paper separates bond tokens and atom tokens so that multi-character tokens are made of either only atoms (nodes) or only bonds (edges). An embedded SMILES string will therefore be made up of both node (atom) and bond vectors. Since the tokens often correspond to small groups of atoms, they do not match the edges computed directly from a SMILES string (using torch_geometric.utils.from_smiles()), which connect individual atoms and not multi-atom BPE tokens. The paper does not specify how this was resolved. Here, only edges between nodes were included, and embedding vectors corresponding to bonds were removed (i.e. only node vectors were included as inputs to the GCN).
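Removing the bond vectors amounts to filtering the token sequence before it reaches the GCN. The following is a hedged sketch of that idea only: the set of bond symbols, the helper names and the example tokens are assumptions made for illustration, and the actual tokenizer and filtering rule in the training scripts may differ.

```python
# SMILES bond symbols; assumed here to be the only characters in a bond token.
BOND_CHARS = set("-=#:/\\")


def is_bond_token(token):
    """True if the token consists only of bond symbols."""
    return len(token) > 0 and all(ch in BOND_CHARS for ch in token)


def keep_node_vectors(tokens, vectors):
    """Drop the embedding vectors whose token is a bond token."""
    if len(tokens) != len(vectors):
        raise ValueError("tokens and vectors must have the same length")
    kept = [(t, v) for t, v in zip(tokens, vectors) if not is_bond_token(t)]
    return [t for t, _ in kept], [v for _, v in kept]


# Hypothetical BPE output with 2-dimensional embeddings.
tokens = ["CC", "=", "O", "[O-]"]
vectors = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
node_tokens, node_vectors = keep_node_vectors(tokens, vectors)
print(node_tokens)
```

A token such as `[O-]` is kept because it contains an atom symbol, even though `-` also appears in it as a charge sign.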

The network architecture is described in Fig. 1 of the paper.

- Issue: In step 1, there is an average pooling layer after embedding (both protein and drug). This layer collapses the tensors over the nodes. The purpose of the average pooling layer before the GCN layers is unclear. GCN layers take as inputs nodes and edges; thus, an output averaged over nodes cannot be used as input to a GCN layer. (Collapsing over nodes is typically done as a readout layer right before the classification/regression head.) Solution: This average pooling layer was omitted.

- Issue: Step 2 has a concatenation layer directly after the GCN layers. It is unclear what is being concatenated. Solution: This concatenation layer was omitted.

- Issue: In step 3, there is no readout layer that collapses over nodes. Thus, the input to the final dense layers will have a variable number of nodes. Something else is needed. Solution: After the GCN layers, an average pooling layer was added.
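The readout added in step 3 can be illustrated without any deep learning library. This is a minimal sketch, assuming node features are stored as plain lists; the function name `mean_readout` is hypothetical. In PyTorch Geometric the same operation is available per graph in a batch as `global_mean_pool`, although whether the training scripts use that exact function is an assumption.

```python
def mean_readout(node_features):
    """Average node feature vectors into one graph-level vector.

    node_features: list of per-node vectors, all with the same length.
    """
    if not node_features:
        raise ValueError("graph has no nodes")
    num_nodes = len(node_features)
    dim = len(node_features[0])
    return [sum(node[d] for node in node_features) / num_nodes for d in range(dim)]


# Two graphs with different numbers of nodes but the same feature size.
small_graph = [[1.0, 2.0], [3.0, 4.0]]
large_graph = [[0.0, 3.0], [3.0, 0.0], [6.0, 3.0]]
print(len(mean_readout(small_graph)), len(mean_readout(large_graph)))
```

Both graphs collapse to a vector of the same length, which is what the dense layers in the regression head require.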

This will download and process the data the first time it runs.

python train_BertGCN.py

python train_BertGCNRes.py

This implementation does not reproduce the performance reported in the paper.

As for DeepDTA, the validation performance drops significantly when the dataset is partitioned on unique drugs instead of unique drug-protein pairs. Thus, these models will not generalize well to new drugs not present in the training data.

- See if the actual `edge_index` for the proteins can be downloaded from the UniProt protein database.

- Add residual connections to the GCN layers.