Kanchana Ranasinghe, Muzammal Naseer, Munawar Hayat, Salman Khan, & Fahad Shahbaz Khan

Paper Link | Project Page | Video

Abstract: Deep neural networks have achieved remarkable performance on a range of classification tasks, with softmax cross-entropy (CE) loss emerging as the de facto objective function. The CE loss encourages features of a class to have a higher projection score on the true class-vector compared to the negative classes. However, this is a relative constraint and does not explicitly force features of different classes to be well-separated. Motivated by the observation that ground-truth class representations in CE loss are orthogonal (one-hot encoded vectors), we develop a novel loss function termed “Orthogonal Projection Loss” (OPL) which imposes orthogonality in the feature space. OPL augments the properties of CE loss and directly enforces inter-class separation alongside intra-class clustering in the feature space through orthogonality constraints on the mini-batch level. Compared to other alternatives to CE, OPL offers unique advantages, e.g., it introduces no additional learnable parameters, does not require careful negative mining, and is not sensitive to the batch size. Given the plug-and-play nature of OPL, we evaluate it on a diverse range of tasks including image recognition (CIFAR-100), large-scale classification (ImageNet), domain generalization (PACS) and few-shot learning (miniImageNet, CIFAR-FS, tiered-ImageNet and Meta-dataset) and demonstrate its effectiveness across the board. Furthermore, OPL offers better robustness against practical nuisances such as adversarial attacks and label noise.
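To make the point about CE's relative constraint concrete, here is a toy NumPy illustration. The two class vectors are one-hot (hence orthogonal), and the feature values are hypothetical, chosen only for illustration: each feature projects more strongly onto its own class vector, so both are classified correctly, yet the two features are nearly parallel in feature space.

```python
import numpy as np

# Hypothetical one-hot class vectors: mutually orthogonal by construction.
class_vectors = np.eye(2)
orthogonality = float(class_vectors[0] @ class_vectors[1])  # 0.0

# Hypothetical features for one class-0 sample and one class-1 sample.
f_class0 = np.array([1.0, 0.9])
f_class1 = np.array([0.9, 1.0])

# Projection scores on each class vector; the largest score is the prediction.
pred0 = int(np.argmax(class_vectors @ f_class0))  # true class-vector wins
pred1 = int(np.argmax(class_vectors @ f_class1))

# Cosine similarity between the two features of *different* classes.
cos = float(f_class0 @ f_class1 / (np.linalg.norm(f_class0) * np.linalg.norm(f_class1)))
print(pred0, pred1, round(cos, 4))
```

This prints `0 1 0.9945`: both samples satisfy the relative constraint, but their features are far from well-separated, which is the gap OPL targets.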

```bibtex
@InProceedings{Ranasinghe_2021_ICCV,
    author    = {Ranasinghe, Kanchana and Naseer, Muzammal and Hayat, Munawar and Khan, Salman and Khan, Fahad Shahbaz},
    title     = {Orthogonal Projection Loss},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2021},
    pages     = {12333-12343}
}
```

- Contributions
- Usage
- Pretrained Models
- Training
- Evaluation
- What Can You Do?
- Quantitative Results
- Qualitative Results

- We propose a novel loss, OPL, that directly enforces inter-class separation and intra-class clustering via orthogonality constraints on the feature space with no additional learnable parameters.

- Our orthogonality constraints are efficiently formulated in comparison to existing methods, allowing mini-batch processing without the need for explicit calculation of singular values. This leads to a simple vectorized implementation of OPL that integrates directly with CE.

- We conduct extensive evaluations on a diverse range of image classification tasks highlighting the discriminative ability of OPL. Further, our results on few-shot learning (FSL) and domain generalization (DG) datasets establish the transferability and generalizability of features learned with OPL. Finally, we establish the improved robustness of learned features to adversarial attacks and label noise.
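The mini-batch, SVD-free formulation described above can be illustrated with a minimal NumPy sketch. It assumes the formulation as we understand it from the paper: features are L2-normalized, `s` is the mean cosine similarity over same-class pairs (excluding self-pairs), `d` is the mean cosine similarity over different-class pairs, and the loss is `(1 - s) + gamma * |d|`. The function `opl_sketch` is hypothetical and is not the repository's `OrthogonalProjectionLoss`; use the latter for actual training.

```python
import numpy as np


def opl_sketch(features: np.ndarray, labels: np.ndarray, gamma: float = 0.5) -> float:
    """Hypothetical sketch of an orthogonal projection loss for one mini-batch.

    Assumed form: (1 - s) + gamma * |d|, with s and d the mean cosine
    similarities of same-class and different-class pairs, respectively.
    """
    # L2-normalize each feature so dot products become cosine similarities.
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    feats = features / np.maximum(norms, 1e-12)

    # All pairwise cosine similarities in a single matrix product (no SVD).
    sim = feats @ feats.T

    # Pair masks built by broadcasting the label vector against itself.
    same = labels[:, None] == labels[None, :]
    not_self = ~np.eye(len(labels), dtype=bool)
    pos_mask = same & not_self  # same class, excluding i == j
    neg_mask = ~same            # different classes

    eps = 1e-6  # guards against batches with no pairs of one kind
    s = (sim * pos_mask).sum() / (pos_mask.sum() + eps)
    d = (sim * neg_mask).sum() / (neg_mask.sum() + eps)

    return float((1.0 - s) + gamma * abs(d))


# Orthogonal, tightly clustered classes give a loss close to 0.
x = np.array([[1.0, 0.0], [2.0, 0.0], [0.0, 3.0], [0.0, 1.0]])
y = np.array([0, 0, 1, 1])
print(opl_sketch(x, y))

# Collapsed features (all classes identical) give a loss close to gamma.
print(opl_sketch(np.ones((4, 2)), y))
```

Because every pair is handled through one `B x B` similarity matrix and two boolean masks, the cost is a single matrix product per batch, and no negative mining is needed: all different-class pairs contribute to `d`.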

Refer to requirements.txt for dependencies. Orthogonal Projection Loss (OPL) can be plugged in alongside any standard loss function, such as Softmax Cross-Entropy (CE) loss, as shown below. You may need to edit the forward function of your model to output features (we use the penultimate feature maps) alongside the final logits. As defaults, set gamma to 0.5 and lambda to 1.

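```python
import torch.nn.functional as F

from loss import OrthogonalProjectionLoss  # loss.py in this repository

ce_loss = F.cross_entropy
op_loss = OrthogonalProjectionLoss(gamma=0.5)
op_lambda = 1

# `model`, `optimizer`, and `dataloader` are assumed to be defined already;
# `model` must return (features, logits).
model.train()
for inputs, targets in dataloader:
    optimizer.zero_grad()

    features, logits = model(inputs)      # penultimate features and final logits

    loss_op = op_loss(features, targets)  # OPL on the features
    loss_ce = ce_loss(logits, targets)    # standard CE on the logits

    loss = loss_ce + op_lambda * loss_op
    loss.backward()
    optimizer.step()
```

If you find our OPL pretrained models useful, please consider citing our work.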

Refer to the sub-folders for CIFAR-100 (`cifar`), ImageNet (`imagenet`), few-shot learning (`rfs`), and label-noise training (`truncated_loss`). The README.md within each directory contains the training instructions for that task.

Refer to the relevant sub-folders (same as in Training above). You can find the pretrained models for these tasks on our releases page.

For future work, we hope to explore the following:

- Test how OPL can be adapted for unsupervised representation learning

- Test the performance of OPL on more architectures (e.g., vision transformers)

- Test how OPL performs on class-imbalanced datasets

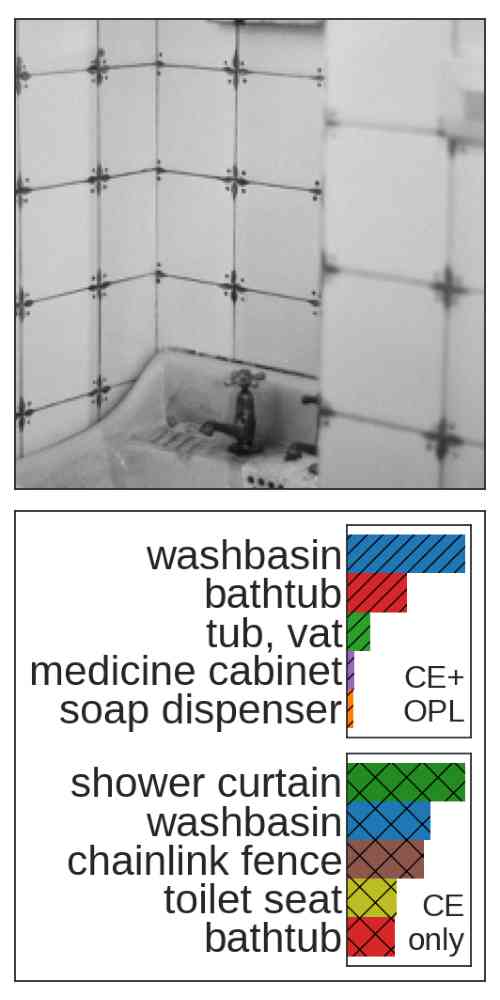

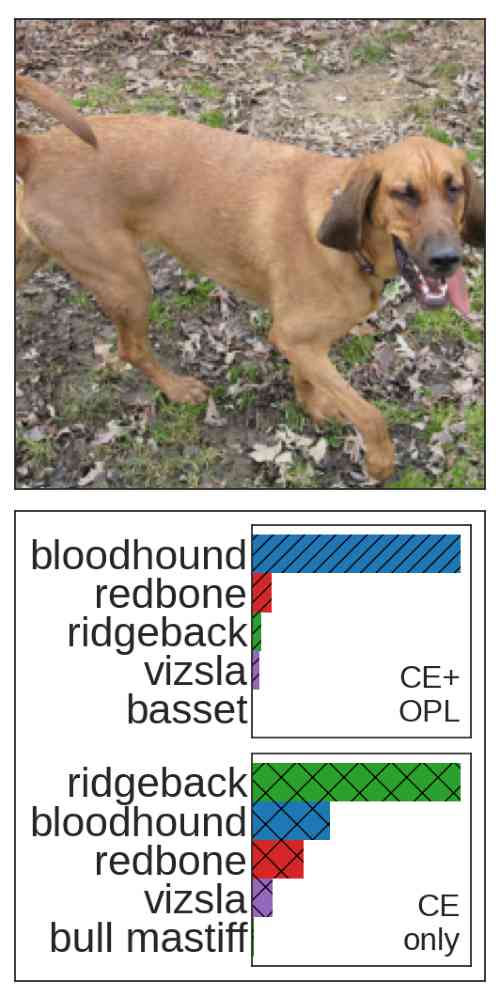

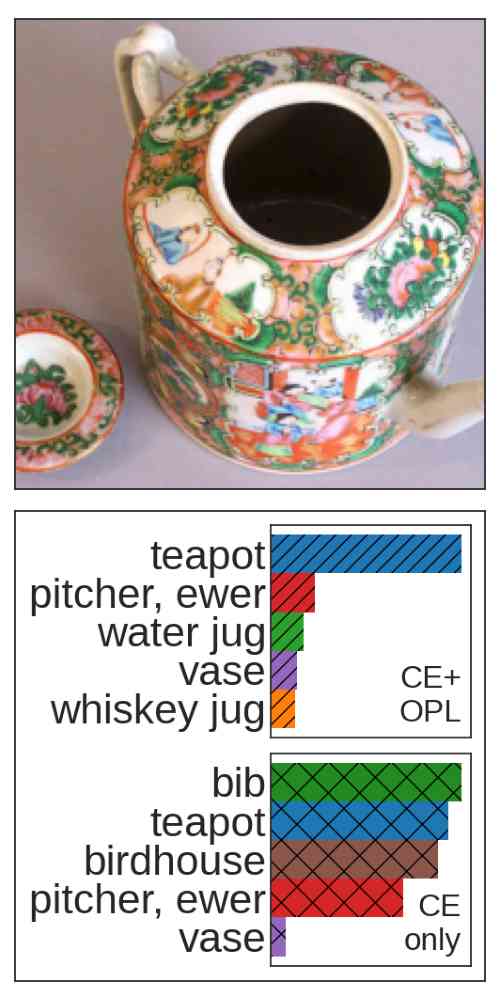

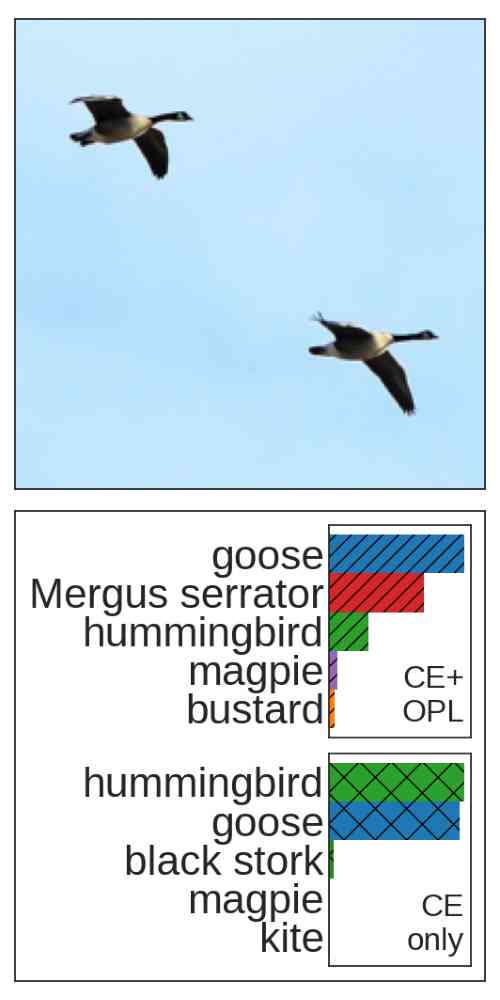

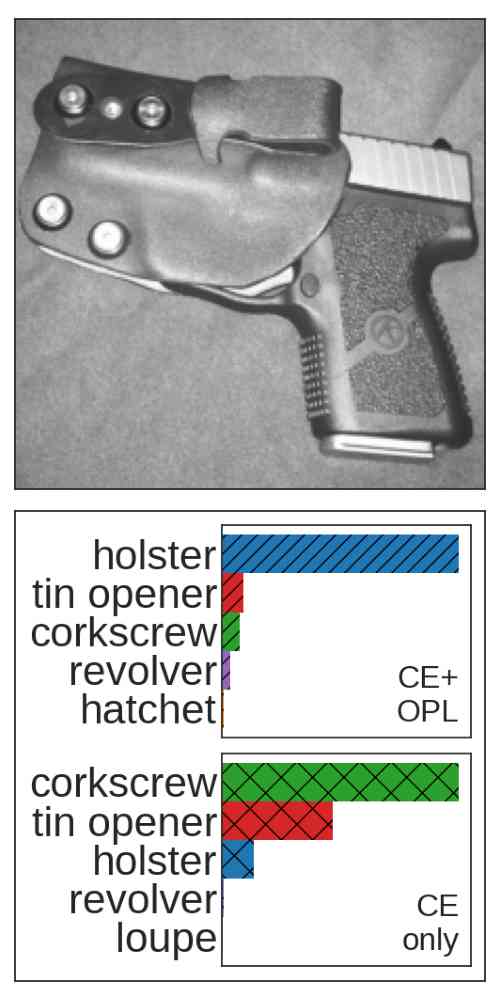

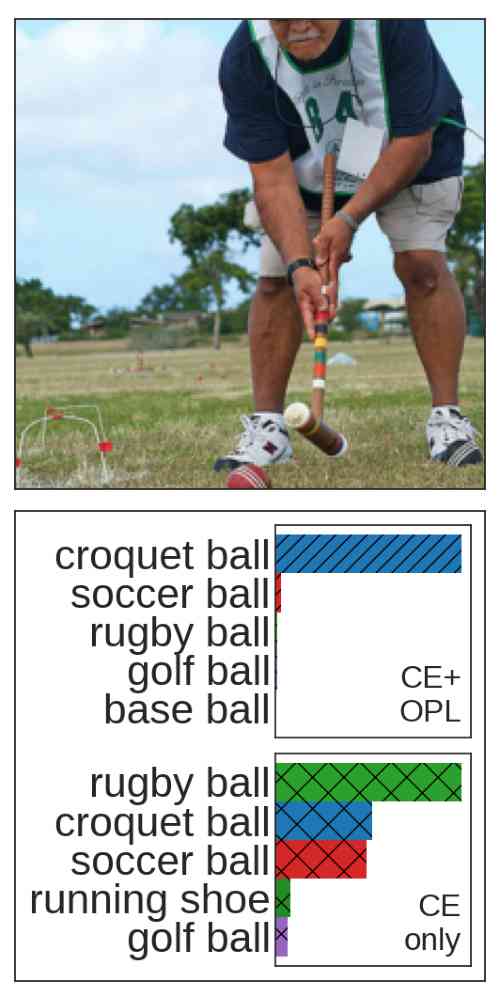

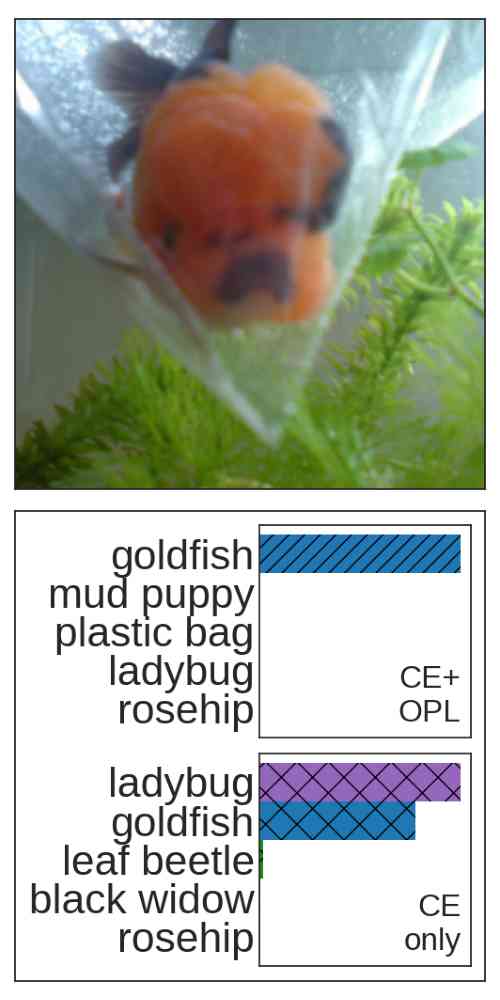

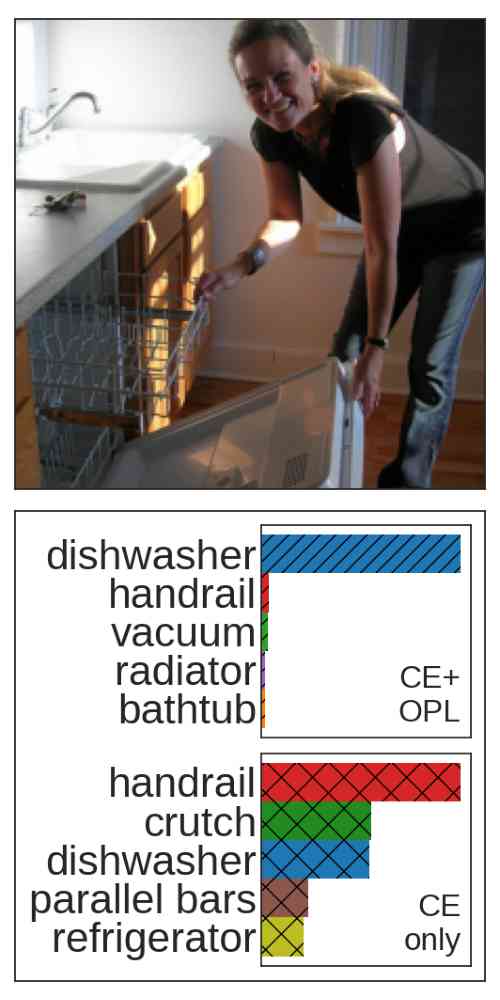

We present quantitative results for training with OPL (against a CE-only baseline) for various classification tasks.

| Method | ResNet-18 top-1 | ResNet-18 top-5 | ResNet-50 top-1 | ResNet-50 top-5 |
|---|---|---|---|---|
| CE (Baseline) | 69.91% | 89.08% | 76.15% | 92.87% |
| CE + OPL (ours) | 70.27% | 89.60% | 76.98% | 93.30% |
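As a quick reading aid for the table above, the absolute gains of CE + OPL over the CE baseline can be computed directly from the reported numbers (in percentage points); no new results are introduced here.

```python
# Accuracies (%) copied from the table above.
baseline = {"R18 top-1": 69.91, "R18 top-5": 89.08, "R50 top-1": 76.15, "R50 top-5": 92.87}
with_opl = {"R18 top-1": 70.27, "R18 top-5": 89.60, "R50 top-1": 76.98, "R50 top-5": 93.30}

# Absolute improvement in percentage points, rounded to 2 decimals.
gains = {k: round(with_opl[k] - baseline[k], 2) for k in baseline}
for name, gain in gains.items():
    print(f"{name}: +{gain:.2f}")
```

This prints gains of +0.36, +0.52, +0.83 and +0.43 points, with the largest top-1 gain on ResNet-50.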

| Method | New Loss | CIFAR-FS 1-shot | CIFAR-FS 5-shot | miniImageNet 1-shot | miniImageNet 5-shot | tiered-ImageNet 1-shot | tiered-ImageNet 5-shot |
|---|---|---|---|---|---|---|---|
| MAML | ✗ | 58.90±1.9 | 71.50±1.0 | 48.70±1.84 | 63.11±0.92 | 51.67±1.81 | 70.30±1.75 |
| Prototypical Networks | ✗ | 55.50±0.7 | 72.00±0.6 | 49.42±0.78 | 68.20±0.66 | 53.31±0.89 | 72.69±0.74 |
| Relation Networks | ✗ | 55.00±1.0 | 69.30±0.8 | 50.44±0.82 | 65.32±0.70 | 54.48±0.93 | 71.32±0.78 |
| Shot-Free | ✗ | 69.20±N/A | 84.70±N/A | 59.04±N/A | 77.64±N/A | 63.52±N/A | 82.59±N/A |
| MetaOptNet | ✗ | 72.60±0.7 | 84.30±0.5 | 62.64±0.61 | 78.63±0.46 | 65.99±0.72 | 81.56±0.53 |
| RFS | ✗ | 71.45±0.8 | 85.95±0.5 | 62.02±0.60 | 79.64±0.44 | 69.74±0.72 | 84.41±0.55 |
| RFS + OPL (Ours) | ✔ | 73.02±0.4 | 86.12±0.2 | 63.10±0.36 | 79.87±0.26 | 70.20±0.41 | 85.01±0.27 |
| NAML | ✔ | - | - | 65.42±0.25 | 75.48±0.34 | - | - |
| Neg-Cosine | ✔ | - | - | 63.85±0.81 | 81.57±0.56 | - | - |
| SKD | ✔ | 74.50±0.9 | 88.00±0.6 | 65.93±0.81 | 83.15±0.54 | 71.69±0.91 | 86.66±0.60 |
| SKD + OPL (Ours) | ✔ | 74.94±0.4 | 88.06±0.3 | 66.90±0.37 | 83.23±0.25 | 72.10±0.41 | 86.70±0.27 |

| Dataset | Method | Uniform noise | Class-dependent noise |
|---|---|---|---|
| CIFAR10 | TL | 87.62% | 82.28% |
| CIFAR10 | TL+OPL | 88.45% | 87.02% |
| CIFAR100 | TL | 62.64% | 47.66% |
| CIFAR100 | TL+OPL | 65.62% | 53.94% |
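To read the label-noise table above at a glance, the gains of TL+OPL over TL can be computed from the reported accuracies (in percentage points); the numbers are copied from the table, nothing new is measured.

```python
# Accuracies (%) copied from the table above: (uniform noise, class-dependent noise).
tl = {"CIFAR10": (87.62, 82.28), "CIFAR100": (62.64, 47.66)}
tl_opl = {"CIFAR10": (88.45, 87.02), "CIFAR100": (65.62, 53.94)}

# Absolute gain of TL+OPL over TL, in percentage points.
gains = {
    ds: tuple(round(b - a, 2) for a, b in zip(tl[ds], tl_opl[ds]))
    for ds in tl
}
print(gains)  # {'CIFAR10': (0.83, 4.74), 'CIFAR100': (2.98, 6.28)}
```

On both datasets the gain is larger under class-dependent noise than under uniform noise.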

We present some examples of qualitative improvements on ImageNet below.