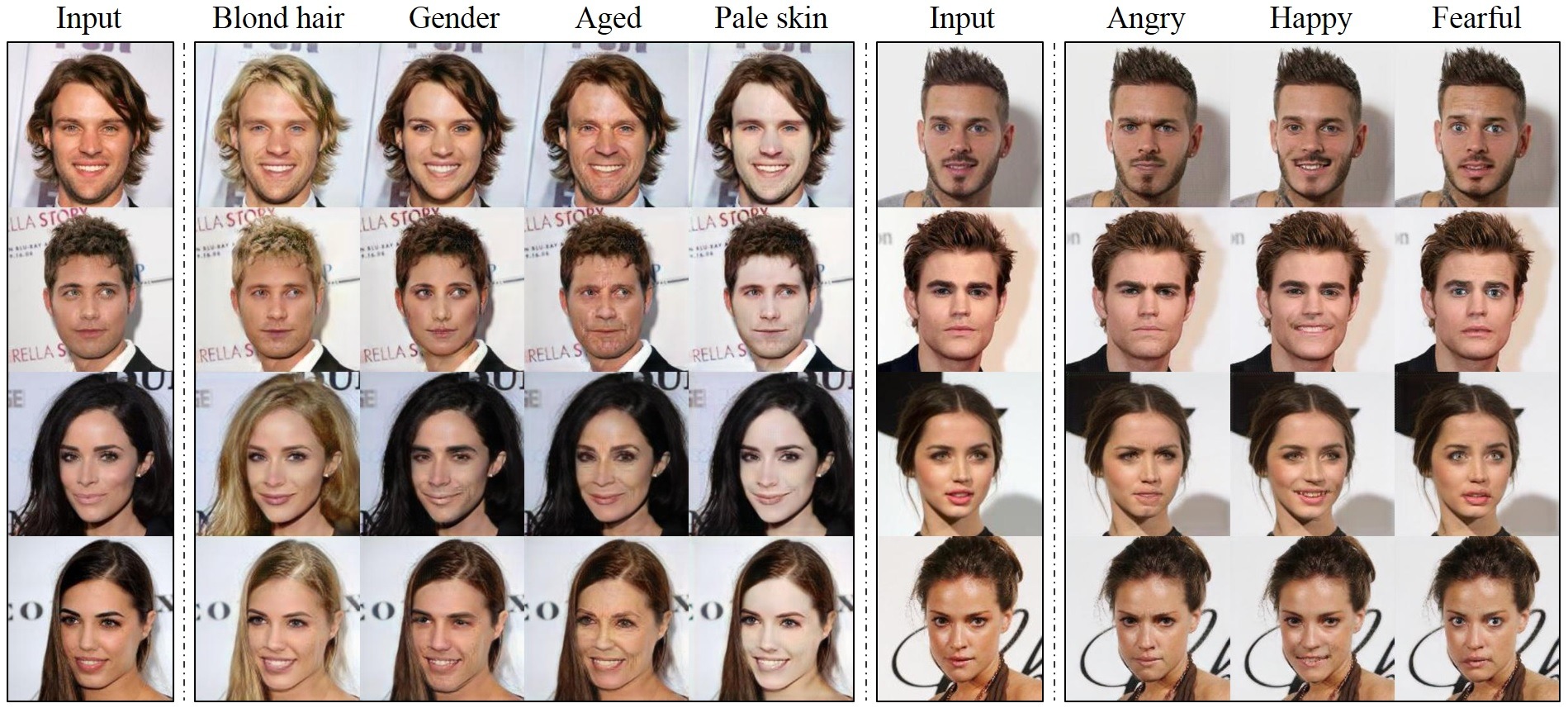

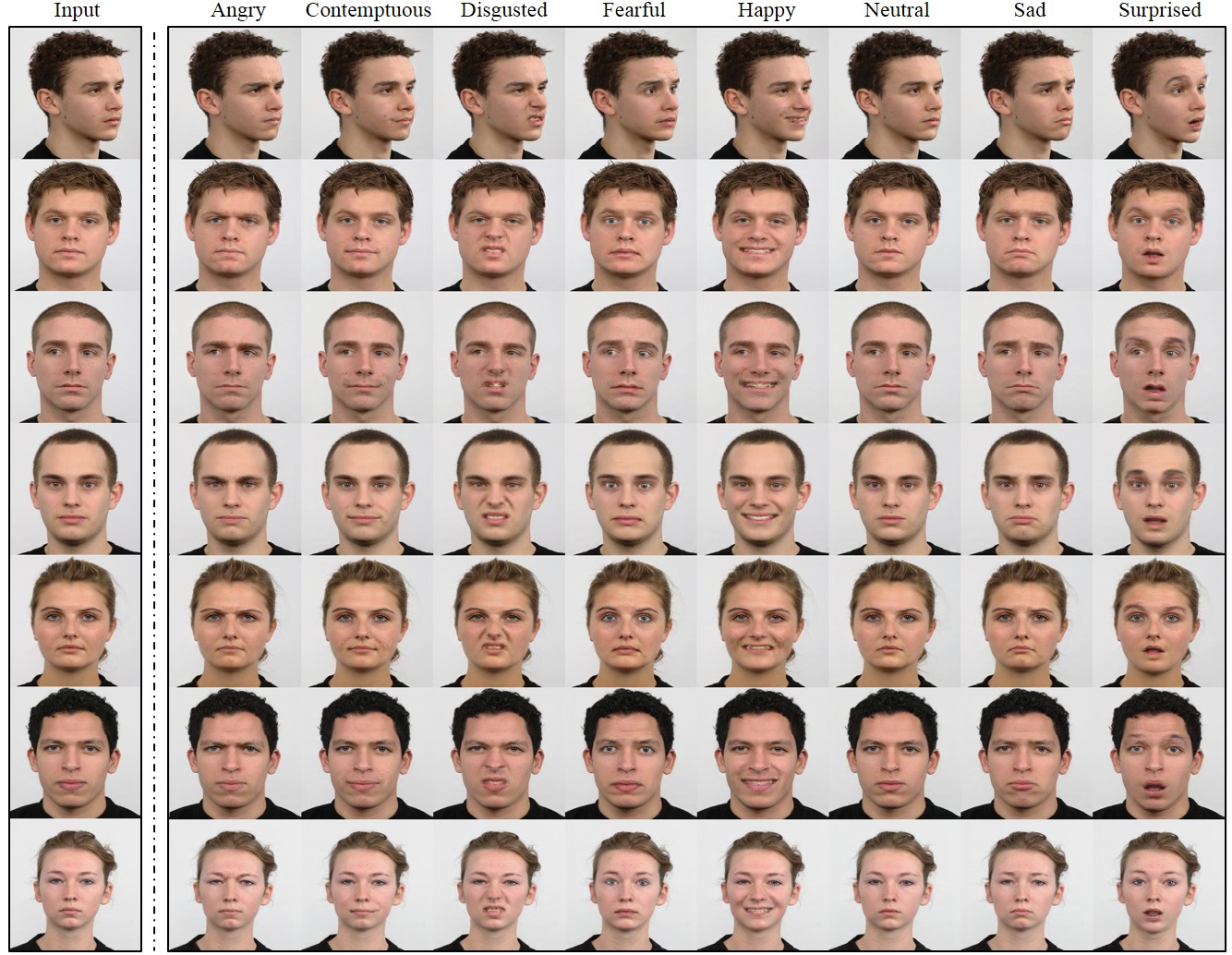

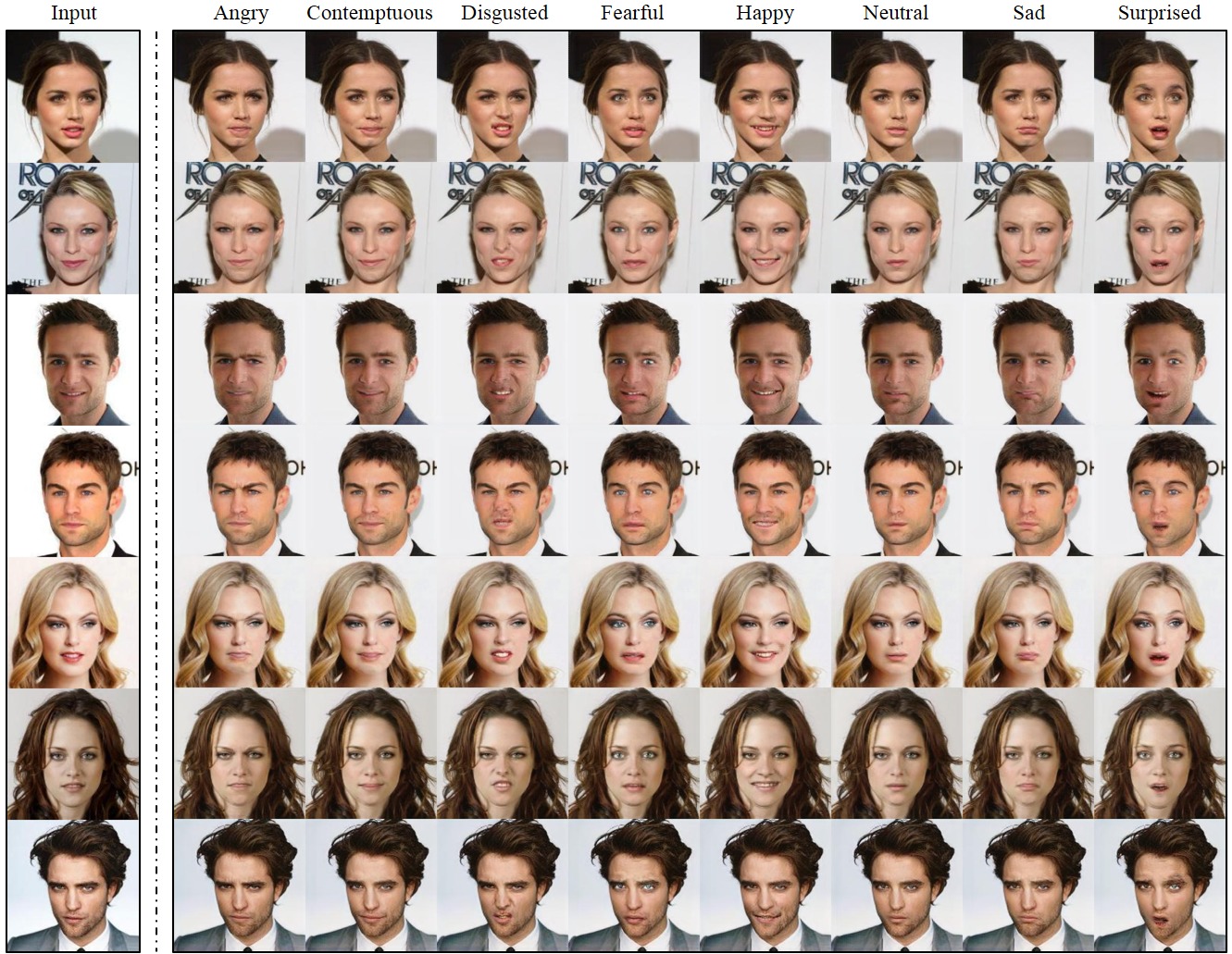

This repository provides a PyTorch implementation of StarGAN. StarGAN can flexibly translate an input image to any desired target domain using only a single generator and a discriminator. The demo video for StarGAN can be found here.

StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation

Yunjey Choi 1,2, Minje Choi 1,2, Munyoung Kim 2,3, Jung-Woo Ha 2, Sung Kim 2,4, and Jaegul Choo 1,2

1 Korea University, 2 Clova AI Research (NAVER Corp.), 3 The College of New Jersey, 4 HKUST

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018 (Oral)

- Python 3.5+

- PyTorch 0.4.0+

- TensorFlow 1.3+ (optional for tensorboard)

$ git clone https://github.com/yunjey/StarGAN.git

$ cd StarGAN/

To download the CelebA dataset:

$ bash download.sh celeba

To download the RaFD dataset, you must request access to the dataset from the Radboud Faces Database website. Then, you need to create a folder structure as described here.
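Before training on RaFD or a custom dataset, it can help to sanity-check the folder structure. The sketch below is not part of this repository: it assumes the layout follows the common one-subfolder-per-class convention (as used by torchvision's `ImageFolder`), and the helper name `summarize_class_folders` and the set of image extensions are hypothetical choices made for illustration.

```python
from pathlib import Path

# Assumed set of image suffixes; adjust to match your own data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def summarize_class_folders(image_dir):
    """Return {class_name: image_count} for each subfolder of image_dir.

    Assumes one subfolder per class, with the images stored directly inside it.
    """
    root = Path(image_dir)
    summary = {}
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # Count only files whose suffix looks like an image.
        count = sum(
            1
            for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
        summary[class_dir.name] = count
    return summary


# Example usage (the path is a placeholder):
# summary = summarize_class_folders("data/RaFD/train")
# print(len(summary), "classes:", summary)
```

Under that assumption, the number of subfolders found is the label dimension to pass as `--c_dim`, and a class with zero images usually points to a misplaced folder.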

To train StarGAN on CelebA, run the training script below. See here for a list of selectable attributes in the CelebA dataset. If you change the selected_attrs argument, you should also change the c_dim argument accordingly.
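The link between `selected_attrs` and `c_dim` can be sketched in a few lines of Python. This is an illustrative helper, not code from this repository: the function name `celeba_train_command` is hypothetical, and it assumes that `c_dim` should equal the number of selected attributes, as in the command below (five attributes, `--c_dim 5`).

```python
import shlex


def celeba_train_command(selected_attrs, image_size=128):
    """Build a CelebA training command whose --c_dim matches selected_attrs.

    Assumption: c_dim is the number of selected attributes.
    """
    if not selected_attrs:
        raise ValueError("selected_attrs must contain at least one attribute")
    if len(set(selected_attrs)) != len(selected_attrs):
        raise ValueError("selected_attrs must not contain duplicates")

    c_dim = len(selected_attrs)  # keep the label dimension in sync
    args = [
        "python", "main.py",
        "--mode", "train",
        "--dataset", "CelebA",
        "--image_size", str(image_size),
        "--c_dim", str(c_dim),
        "--selected_attrs", *selected_attrs,
    ]
    # Quote each argument so the result is safe to paste into a shell.
    return " ".join(shlex.quote(a) for a in args)


print(celeba_train_command(["Black_Hair", "Blond_Hair", "Brown_Hair", "Male", "Young"]))
```

Deriving `c_dim` from the attribute list avoids the two arguments drifting apart when attributes are added or removed. The directory flags (`--sample_dir`, `--log_dir`, and so on) are omitted here for brevity.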

$ python main.py --mode train --dataset CelebA --image_size 128 --c_dim 5 \
    --sample_dir stargan_celeba/samples --log_dir stargan_celeba/logs \
    --model_save_dir stargan_celeba/models --result_dir stargan_celeba/results \
    --selected_attrs Black_Hair Blond_Hair Brown_Hair Male Young

To train StarGAN on RaFD:

$ python main.py --mode train --dataset RaFD --image_size 128 --c_dim 8 \
    --sample_dir stargan_rafd/samples --log_dir stargan_rafd/logs \
    --model_save_dir stargan_rafd/models --result_dir stargan_rafd/results

To train StarGAN on both CelebA and RaFD:

$ python main.py --mode=train --dataset Both --image_size 256 --c_dim 5 --c2_dim 8 \
    --sample_dir stargan_both/samples --log_dir stargan_both/logs \
    --model_save_dir stargan_both/models --result_dir stargan_both/results

To train StarGAN on your own dataset, create a folder structure in the same format as RaFD and run the command:

$ python main.py --mode train --dataset RaFD --rafd_crop_size CROP_SIZE --image_size IMG_SIZE \
    --c_dim LABEL_DIM --rafd_image_dir TRAIN_IMG_DIR \
    --sample_dir stargan_custom/samples --log_dir stargan_custom/logs \
    --model_save_dir stargan_custom/models --result_dir stargan_custom/results

To test StarGAN on CelebA:

$ python main.py --mode test --dataset CelebA --image_size 128 --c_dim 5 \
    --sample_dir stargan_celeba/samples --log_dir stargan_celeba/logs \
    --model_save_dir stargan_celeba/models --result_dir stargan_celeba/results \
    --selected_attrs Black_Hair Blond_Hair Brown_Hair Male Young

To test StarGAN on RaFD:

$ python main.py --mode test --dataset RaFD --image_size 128 \
    --c_dim 8 --rafd_image_dir data/RaFD/test \
    --sample_dir stargan_rafd/samples --log_dir stargan_rafd/logs \
    --model_save_dir stargan_rafd/models --result_dir stargan_rafd/results

To test StarGAN on both CelebA and RaFD:

$ python main.py --mode test --dataset Both --image_size 256 --c_dim 5 --c2_dim 8 \
    --sample_dir stargan_both/samples --log_dir stargan_both/logs \
    --model_save_dir stargan_both/models --result_dir stargan_both/results

To test StarGAN on your own dataset:

$ python main.py --mode test --dataset RaFD --rafd_crop_size CROP_SIZE --image_size IMG_SIZE \
    --c_dim LABEL_DIM --rafd_image_dir TEST_IMG_DIR \
    --sample_dir stargan_custom/samples --log_dir stargan_custom/logs \
    --model_save_dir stargan_custom/models --result_dir stargan_custom/results

To download a pretrained model checkpoint, run the script below. The checkpoint will be downloaded and saved to the ./stargan_celeba_256/models directory.

$ bash download.sh pretrained-celeba-256x256

To translate images using the pretrained model, run the evaluation script below. The translated images will be saved to the ./stargan_celeba_256/results directory.

$ python main.py --mode test --dataset CelebA --image_size 256 --c_dim 5 \
    --selected_attrs Black_Hair Blond_Hair Brown_Hair Male Young \
    --model_save_dir='stargan_celeba_256/models' \
    --result_dir='stargan_celeba_256/results'

If this work is useful for your research, please cite our paper:

@InProceedings{StarGAN2018,
    author = {Choi, Yunjey and Choi, Minje and Kim, Munyoung and Ha, Jung-Woo and Kim, Sunghun and Choo, Jaegul},
    title = {StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation},
    booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
    month = {June},
    year = {2018}
}

This work was mainly done while the first author did a research internship at Clova AI Research, NAVER. We thank all the researchers at NAVER, especially Donghyun Kwak, for insightful discussions.