This is the official implementation of our ACL 2022 paper "Hyperlink-induced Pre-training for Passage Retrieval in OpenQA".

[Update-20230223] We have added evaluation on the widely used BEIR benchmark. See the BEIR results (NDCG@10) below.

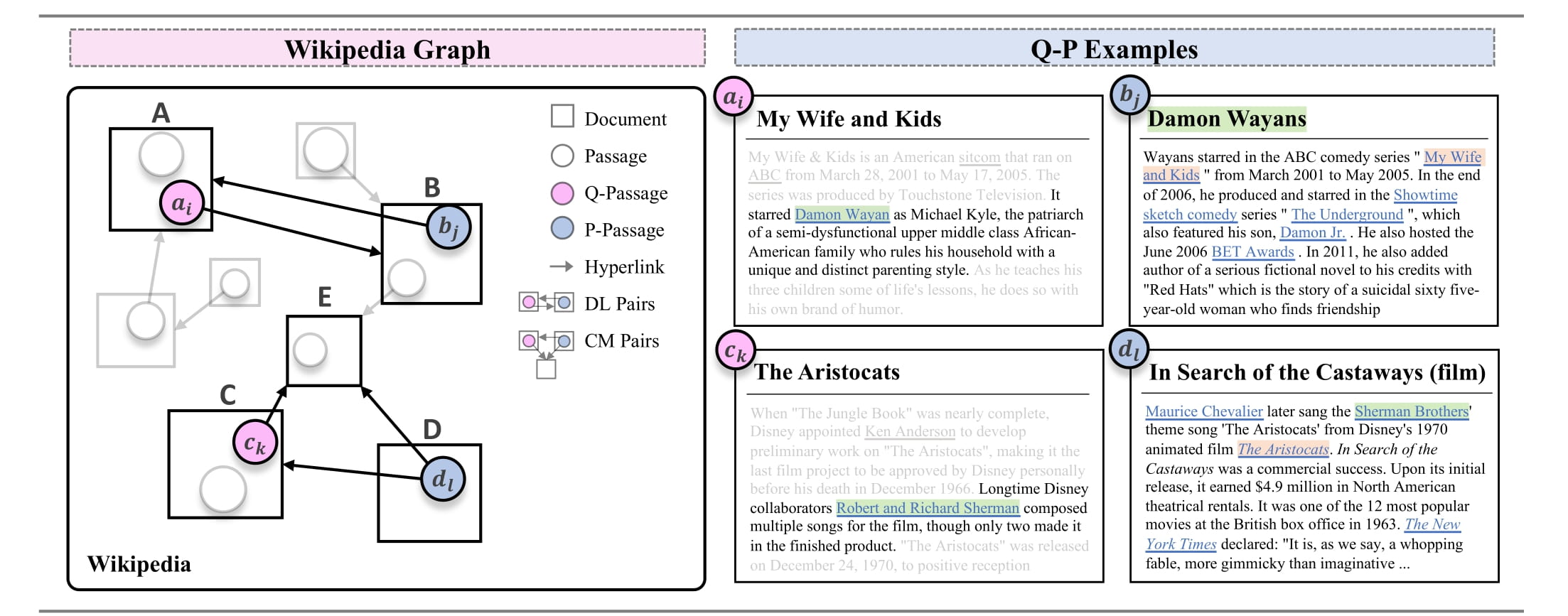

In this paper, we propose HyperLink-induced Pre-training (HLP), a pre-training method that learns effective query-passage (Q-P) relevance from the hyperlink topology of naturally occurring Web documents. Specifically, Q-P pairs are automatically extracted from online documents, and their relevance is defined through the hyperlink-based topology so as to facilitate downstream retrieval for question answering.

Note: the hyperlink-induced Q-P pairs are mostly semantically close but lexically diverse, so they could also be used as unsupervised paraphrases extracted from the Internet. Some examples are shown below.

- Install from source. A Python virtual environment or a Conda environment is recommended.

```bash
git clone git@github.com:jzhoubu/HLP.git
cd HLP
conda create -n hlp python=3.7
conda activate hlp
pip install -r requirements.txt
```

- Please change the `HLP_HOME` variable in `biencoder_train_cfg.yaml`, `gen_embs.yaml` and `dense_retriever.yaml`. `HLP_HOME` is the path to the HLP directory you downloaded.

- You may also need to build apex.

```bash
git clone https://github.com/NVIDIA/apex
cd apex
python -m pip install -v --disable-pip-version-check --no-cache-dir ./
```

[Option1] Download Data via Command

```bash
bash downloader.sh
```

This command will automatically download the necessary data (about 50GB) for the experiments.

[Option2] Download Data Manually

Please download the following data to the predefined locations in `conf/*/*.yaml`.

| Dataset | Train | Dev | Test | Corpus |
|---|---|---|---|---|
| HLP | dl_10m.jsonl, cm_10m.jsonl | / | / | / |
| NQ | nq-train.jsonl | nq-dev.jsonl | nq-test.qa.csv | psgs_w100.tsv |
| TriviaQA | trivia-train.jsonl | trivia-dev.jsonl | trivia-test.qa.csv | |
| WebQA | webq-train.jsonl | webq-dev.jsonl | webq-test.qa.csv | |
| MS MARCO | msmarco-train.jsonl | msmarco-dev.jsonl | / | |

Download Models

Zero-shot performance:

| Models | Trainset | TrainConfig | Size | NQ Top5 | NQ Top20 | NQ Top100 | TriviaQA Top5 | TriviaQA Top20 | TriviaQA Top100 | WebQ Top5 | WebQ Top20 | WebQ Top100 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BM25 | / | / | / | 43.6 | 62.9 | 78.1 | 66.4 | 76.4 | 83.2 | 42.6 | 62.8 | 76.8 |
| DL | dl_10m | pretrain_8xV100 | 418M | 49.0 | 67.8 | 79.7 | 62.0 | 73.8 | 82.1 | 48.4 | 67.1 | 79.5 |
| CM | cm_10m | pretrain_8xV100 | 418M | 42.5 | 62.2 | 77.9 | 63.2 | 75.8 | 83.7 | 45.4 | 64.5 | 78.9 |
| HLP | dl_10m, cm_10m | pretrain_8xV100 | 418M | 50.9 | 69.3 | 82.1 | 65.3 | 77.0 | 84.1 | 49.1 | 67.4 | 80.5 |

Fine-tuned performance:

| Models | TuneSet | TuneConfig | Size | NQ Top5 | NQ Top20 | NQ Top100 | TriviaQA Top5 | TriviaQA Top20 | TriviaQA Top100 | WebQ Top5 | WebQ Top20 | WebQ Top100 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HLP | nq-train | finetune_8xV100 | 840M | 70.6 | 81.3 | 88.0 | / | / | / | / | / | / |

More information about these checkpoints can be found in the model card.
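The Top5/Top20/Top100 columns report top-k retrieval accuracy: the percentage of questions for which at least one of the top-k retrieved passages contains a gold answer. The sketch below illustrates that computation. It assumes a simplified answer check (a lowercased substring match in the hypothetical helper `has_answer`); the actual evaluation code may normalize and tokenize text differently.

```python
from typing import Dict, List, Sequence


def has_answer(passage: str, answers: Sequence[str]) -> bool:
    """Hypothetical, simplified answer check: lowercased substring match."""
    text = passage.lower()
    return any(ans.lower() in text for ans in answers)


def top_k_accuracy(
    retrieved: List[List[str]],  # per question: passage texts ranked best-first
    answers: List[List[str]],    # per question: acceptable answer strings
    ks: Sequence[int] = (5, 20, 100),
) -> Dict[int, float]:
    """Percentage of questions with an answer-bearing passage among the top k."""
    results = {}
    for k in ks:
        hits = sum(
            any(has_answer(p, ans) for p in passages[:k])
            for passages, ans in zip(retrieved, answers)
        )
        results[k] = 100.0 * hits / len(retrieved)
    return results


if __name__ == "__main__":
    retrieved = [
        ["Paris is the capital of France.", "Lyon is a city."],
        ["Berlin is in Germany.", "Rome is the capital of Italy."],
    ]
    answers = [["Paris"], ["Rome"]]
    print(top_k_accuracy(retrieved, answers, ks=(1, 2)))  # {1: 50.0, 2: 100.0}
```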

Below is an example of pre-training HLP:

```bash
python -m torch.distributed.launch --nproc_per_node=8 train_dense_encoder.py \
  hydra.run.dir=./experiments/pretrain_hlp/train \
  val_av_rank_start_epoch=0 \
  train_datasets=[dl,cm] dev_datasets=[nq_dev] \
  train=pretrain_8xV100
```

- `hydra.run.dir`: working directory of Hydra (logs and checkpoints are saved here).
- `val_av_rank_start_epoch`: the epoch from which average ranking is used for validation.
- `train_datasets`: aliases of the training sets (see `conf/datasets/train.yaml`).
- `dev_datasets`: aliases of the dev sets (see `conf/datasets/train.yaml`).
- `train`: a YAML file with the training configuration (under `conf/train`).
- See more configuration settings in `biencoder_train_cfg.yaml` and `pretrain_8xV100.yaml`.
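`val_av_rank_start_epoch` controls when validation switches to an average-rank criterion. As a rough illustration, assuming a DPR-style setup in which each dev question is scored by dot product against a shared pool of candidate passages, the average (0-based) rank of each question's gold passage can be computed as below; lower is better. The function `average_rank` is hypothetical and is not the repository's implementation.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def average_rank(
    question_vecs: List[Sequence[float]],
    ctx_vecs: List[Sequence[float]],
    gold_ids: List[int],  # index into ctx_vecs of each question's positive passage
) -> float:
    """Mean 0-based rank of the gold passage among all candidates (lower is better)."""
    ranks = []
    for q, gold in zip(question_vecs, gold_ids):
        scores = [dot(q, c) for c in ctx_vecs]
        # Rank = number of candidates scoring strictly higher than the gold passage
        # (ties are resolved in the gold passage's favor in this sketch).
        ranks.append(sum(s > scores[gold] for s in scores))
    return sum(ranks) / len(ranks)


if __name__ == "__main__":
    questions = [[1.0, 0.0], [0.0, 1.0]]
    contexts = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(average_rank(questions, contexts, gold_ids=[0, 2]))  # 0.5
```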

Below is an example of fine-tuning on the NQ dataset from a pre-trained checkpoint:

```bash
python -m torch.distributed.launch --nproc_per_node=8 train_dense_encoder.py \
  hydra.run.dir=./experiments/finetune_nq/train \
  model_file=../../pretrain_hlp/train/dpr_biencoder.best \
  train_datasets=[nq_train] dev_datasets=[nq_dev] \
  train=finetune_8xV100
```

- `model_file`: a relative path to the model checkpoint.

Note: when fine-tuning on NQ, please also use `train=finetune_8xV100` in the embedding and retrieval phases.
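The `model_file` values in these examples are relative paths that only make sense when resolved against `hydra.run.dir`, which is consistent with Hydra running each job from its run directory (an assumption about this setup). The sketch below resolves such a path with `posixpath` to check that it points at the pre-training checkpoint; `resolve_checkpoint` is a hypothetical helper.

```python
import posixpath


def resolve_checkpoint(run_dir: str, model_file: str) -> str:
    """Resolve model_file relative to a Hydra run directory (assumed behavior)."""
    return posixpath.normpath(posixpath.join(run_dir, model_file))


if __name__ == "__main__":
    # Fine-tuning run (hydra.run.dir=./experiments/finetune_nq/train).
    print(resolve_checkpoint("./experiments/finetune_nq/train",
                             "../../pretrain_hlp/train/dpr_biencoder.best"))
    # Embedding run (hydra.run.dir=./experiments/pretrain_hlp/embed).
    print(resolve_checkpoint("./experiments/pretrain_hlp/embed",
                             "../train/dpr_biencoder.best"))
```

Both calls print `experiments/pretrain_hlp/train/dpr_biencoder.best`, i.e. the checkpoint written by the pre-training run.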

Generating representation vectors for the static document collection is highly parallelizable and can take up to a few days on a single GPU. You may want to use multiple GPU servers, running the script independently on each of them with its own shard.

Below is an example of generating embeddings for the Wikipedia corpus:

```bash
python ./generate_dense_embeddings.py \
  hydra.run.dir=./experiments/pretrain_hlp/embed \
  train=pretrain_8xV100 \
  model_file=../train/dpr_biencoder.best \
  ctx_src=dpr_wiki \
  shard_id=0 num_shards=1 \
  out_file=embedding_dpr_wiki \
  batch_size=10000
```

- `model_file`: a relative path to the model checkpoint.
- `ctx_src`: alias of the passage resource (see `conf/ctx_sources/corpus.yaml`).
- `out_file`: prefix of the output embedding file name.
- `shard_id`: 0-based index of the data shard to process.
- `num_shards`: total number of data shards.
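With `num_shards=N`, each server runs the command above with a different `shard_id` from 0 to N-1. The sketch below shows one plausible way the corpus is partitioned, assuming contiguous, equally sized shards with the last one possibly shorter; `shard_range` is hypothetical, and `generate_dense_embeddings.py` may split the data differently.

```python
import math
from typing import Tuple


def shard_range(num_passages: int, shard_id: int, num_shards: int) -> Tuple[int, int]:
    """Return the [start, end) passage indices handled by one shard (assumed contiguous split)."""
    if not 0 <= shard_id < num_shards:
        raise ValueError("shard_id must be in [0, num_shards)")
    shard_size = math.ceil(num_passages / num_shards)
    start = min(shard_id * shard_size, num_passages)
    end = min(start + shard_size, num_passages)
    return start, end


if __name__ == "__main__":
    # 10 passages split across 3 servers.
    for sid in range(3):
        print(sid, shard_range(10, sid, 3))  # (0, 4), (4, 8), (8, 10)
```

Presumably each shard writes its own file under the `out_file` prefix, which is why the evaluation step passes a glob (`embedding_dpr_wiki*`) to `encoded_ctx_files`.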

Below is an example of evaluating a model on the NQ test set:

```bash
python dense_retriever.py \
  hydra.run.dir=./experiments/pretrain_hlp/infer \
  train=pretrain_8xV100 \
  model_file=../train/dpr_biencoder.best \
  qa_dataset=nq_test \
  ctx_datatsets=[dpr_wiki] \
  encoded_ctx_files=["../embed/embedding_dpr_wiki*"] \
  out_file=nq_test.result
```

- `model_file`: a relative path to the model checkpoint.
- `qa_dataset`: alias of the test set (see `conf/datasets/eval.yaml`).
- `encoded_ctx_files`: list of glob expressions matching the corpus embedding files.
- `out_file`: path of the output file.
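In a dual-encoder setup like this one, retrieval returns the passages whose embeddings have the highest inner product with the encoded question; the actual script may delegate this search to an index library such as FAISS. The pure-Python sketch below performs an exhaustive maximum-inner-product search over a toy index to illustrate the idea; `retrieve_top_k` is a hypothetical helper, not the script's API.

```python
import heapq
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def retrieve_top_k(
    query_vec: Sequence[float],
    passage_vecs: List[Sequence[float]],
    k: int,
) -> List[Tuple[int, float]]:
    """Exhaustive inner-product search; returns (passage index, score), best first."""
    scored = ((dot(query_vec, p), idx) for idx, p in enumerate(passage_vecs))
    return [(idx, score) for score, idx in heapq.nlargest(k, scored)]


if __name__ == "__main__":
    index = [[0.1, 0.0], [0.9, 0.1], [0.5, 5.0]]
    print(retrieve_top_k([1.0, 0.0], index, k=2))  # [(1, 0.9), (2, 0.5)]
```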

The data format of our train and dev data (e.g. dl_10m.jsonl and nq-train.json) is shown below. Our implementation works with both json and jsonl files.

More details on the format can be found here.

```json
[
  {
    "question": "....",
    "positive_ctxs": [{"title": "...", "text": "...."}],
    "negative_ctxs": [{"title": "...", "text": "...."}],
    "hard_negative_ctxs": [{"title": "...", "text": "...."}]
  },
  ...
]
```
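Since both `.json` files (a single list of samples) and `.jsonl` files (one sample object per line) are accepted, a loader only needs to branch on the file extension. The sketch below assumes exactly that; `load_samples` and `question_positive_pairs` are hypothetical helpers, not the repository's data loader.

```python
import json
from pathlib import Path
from typing import Any, Dict, List, Tuple


def load_samples(path: str) -> List[Dict[str, Any]]:
    """Load samples from a .json list or a .jsonl file (one sample per line)."""
    p = Path(path)
    with p.open("r", encoding="utf-8") as fh:
        if p.suffix == ".jsonl":
            return [json.loads(line) for line in fh if line.strip()]
        return json.load(fh)


def question_positive_pairs(samples: List[Dict[str, Any]]) -> List[Tuple[str, str]]:
    """Pair each question with the text of its first positive passage."""
    return [(s["question"], s["positive_ctxs"][0]["text"])
            for s in samples if s.get("positive_ctxs")]
```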

We also release our processed Wikipedia graph, which treats passages as nodes and hyperlinks as edges. Further details can be found in Section 3 of our paper. Click here to download.
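In the `Anarchism_0` record printed by the loading code, hyperlink anchor texts are wrapped in `<SOE>` ... `<EOE>` markers, and the `mentions` and `linkouts` lists appear to be aligned position by position (e.g. `'state'` pairs with `'State (polity)'`). Assuming that alignment holds for every record, the sketch below recovers the anchors, the plain text, and mention-to-article pairs, i.e. a passage's outgoing hyperlinks. All helper names are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Hyperlink mentions appear as "<SOE> mention <EOE>" in the released text.
MENTION_RE = re.compile(r"<SOE> (.*?) <EOE>")


def extract_mentions(text: str) -> List[str]:
    """Return the anchor texts marked with <SOE>/<EOE>, in order of appearance."""
    return MENTION_RE.findall(text)


def strip_markers(text: str) -> str:
    """Remove the <SOE>/<EOE> markers while keeping the mention text itself."""
    return re.sub(r"<SOE> | <EOE>", "", text)


def mention_to_linkout(record: Dict) -> List[Tuple[str, str]]:
    """Pair each mention with its target article title (assumes aligned lists)."""
    return list(zip(record["mentions"], record["linkouts"]))


if __name__ == "__main__":
    text = "Anarchism is a <SOE> political philosophy <EOE> and <SOE> movement <EOE> that"
    print(extract_mentions(text))  # ['political philosophy', 'movement']
    print(strip_markers(text))     # Anarchism is a political philosophy and movement that
```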

```python
import glob
import json

from tqdm import tqdm

PATH = "/home/data/jzhoubu/wiki_20210301_processed/**/wiki_*.json"  # change this path accordingly
files = glob.glob(PATH, recursive=True)

# Merge all processed files into a single {passage_id: info} dictionary.
title2info = {}
for f in tqdm(files):
    with open(f, "r", encoding="utf-8") as fh:
        title2info.update(json.load(fh))

print(len(title2info))
# 22334994

print(title2info['Anarchism_0'])
# {'text':
# 'Anarchism is a <SOE> political philosophy <EOE> and <SOE> movement <EOE> that is sceptical of <SOE> authority <EOE> and rejects all involuntary, coercive forms of <SOE> hierarchy <EOE> . Anarchism calls for the abolition of the <SOE> state <EOE> , which it holds to be undesirable, unnecessary, and harmful. It is usually described alongside <SOE> libertarian Marxism <EOE> as the libertarian wing ( <SOE> libertarian socialism <EOE> ) of the socialist movement and as having a historical association with <SOE> anti-capitalism <EOE> and <SOE> socialism <EOE> . The <SOE> history of anarchism <EOE> goes back to <SOE> prehistory <EOE> ,',
# 'mentions':
# ['political philosophy', 'movement', 'authority', 'hierarchy', 'state', 'libertarian Marxism', 'libertarian socialism', 'anti-capitalism', 'socialism', 'history of anarchism', 'prehistory'],
# 'linkouts':
# ['Political philosophy', 'Political movement', 'Authority', 'Hierarchy', 'State (polity)', 'Libertarian Marxism', 'Libertarian socialism', 'Anti-capitalism', 'Socialism', 'History of anarchism', 'Prehistory']
# }
```

Examples of hyperlink-induced Q-P pairs:

| Query | Passage |
|---|---|
| Title: Abby Kelley Text: Liberty Farm in Worcester, Massachusetts , the home of Abby Kelley and Stephen Symonds Foster, was designated a National Historic Landmark because of its association with their lives of working for abolitionism. | Title: Worcester, Massachusetts Text: Two of the nation’s most radical abolitionists, Abby Kelley Foster and her husband Stephen S. Foster, adopted Worcester as their home, as did Thomas Wentworth Higginson, the editor of The Atlantic Monthly and Emily Dickinson’s avuncular correspondent, and Unitarian minister Rev. Edward Everett Hale. The area was already home to Lucy Stone, Eli Thayer, and Samuel May Jr. They were joined in their political activities by networks of related Quaker families such as the Earles and the Chases, whose organizing efforts were crucial to ... |
| Title: Daniel Gormally Text: In 2015 he tied for the second place with David Howell and Nicholas Pert in the 102nd British Championship and eventually finished fourth on tiebreak. | Title: Nicholas Pert Text: In 2015, Pert tied for 2nd–4th with David Howell and Daniel Gormally, finishing third on tiebreak, in the British Chess Championship and later that year, he finished runner-up in the inaugural British Knockout Championship, which was held alongside the London Chess Classic. In this latter event, Pert, who replaced Nigel Short after his late withdrawal, eliminated Jonathan Hawkins in the quarterfinals and Luke McShane in the semifinals, then he lost to David Howell 4–6 in the final. |

NDCG@10 performance on BEIR before and after fine-tuning on the MS MARCO dataset:

| Dataset | Before | After |
|---|---|---|
| ArguAna | 34.4 | 51.8 |
| climate-fever | 20.9 | 17.0 |
| DBPedia | 30.3 | 33.5 |
| FEVER | 64.1 | 68.9 |
| FiQA | 13.2 | 25.8 |
| HotpotQA | 55.0 | 55.0 |
| NFCorpus | 29.1 | 32.9 |
| NQ | 23.6 | 45.6 |
| SCIDOCS | 12.8 | 14.7 |
| SciFact | 60.7 | 53.7 |
| TREC-COVID | 36.3 | 63.1 |
| Touche-2020 | 8.9 | 21.8 |
| Avg | 32.4 | 40.3 |
| MSMARCO-dev (MRR@10) | 11.0 |
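The BEIR scores above are NDCG@10. To make the metric concrete, the sketch below computes it for a single query using the relevance grades as linear gains, a log2 rank discount, and normalization by the ideal ordering of the judged documents. This follows the common trec_eval-style definition, but the official BEIR evaluation code should be treated as authoritative; `ndcg_at_k` is a hypothetical helper.

```python
import math
from typing import Dict, List


def ndcg_at_k(ranking: List[str], qrels: Dict[str, int], k: int = 10) -> float:
    """NDCG@k for one query.

    ranking: retrieved doc ids, best first.
    qrels:   doc id -> graded relevance (missing ids count as 0).
    """
    def dcg(gains: List[int]) -> float:
        # 0-based position i is discounted by log2(i + 2), so rank 1 has discount 1.
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

    actual = dcg([qrels.get(doc, 0) for doc in ranking[:k]])
    ideal = dcg(sorted((r for r in qrels.values() if r > 0), reverse=True)[:k])
    return actual / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    print(ndcg_at_k(["a", "b", "c"], {"a": 1, "c": 1}))  # about 0.92
```

The Avg row is consistent with an unweighted mean over the twelve datasets: 389.3 / 12 ≈ 32.4 before and 483.8 / 12 ≈ 40.3 after fine-tuning.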

If you find this work useful, please cite the following paper:

```bibtex
@article{zhou2022hyperlink,
  title={Hyperlink-induced Pre-training for Passage Retrieval in Open-domain Question Answering},
  author={Zhou, Jiawei and Li, Xiaoguang and Shang, Lifeng and Luo, Lan and Zhan, Ke and Hu, Enrui and Zhang, Xinyu and Jiang, Hao and Cao, Zhao and Yu, Fan and others},
  journal={arXiv preprint arXiv:2203.06942},
  year={2022}
}
```