OUR-GAN: One-shot Ultra-high-Resolution Generative Adversarial Networks - Demo

Abstract

We propose OUR-GAN, the first one-shot ultra-high-resolution (UHR) image synthesis framework that generates non-repetitive images with 4K or higher resolution from a single training image. OUR-GAN generates a visually coherent image at low resolution and then gradually increases the resolution by super-resolution. Since OUR-GAN learns from a real UHR image, it can synthesize large-scale shapes with fine details while maintaining long-range coherence, which is difficult with conventional generative models that generate large images based on the patch distribution learned from relatively small images. OUR-GAN applies seamless subregion-wise super-resolution, which synthesizes 4K or higher UHR images with limited memory while preventing discontinuity at subregion boundaries. Additionally, OUR-GAN improves diversity and visual coherence by adding vertical positional embeddings to the feature maps. In experiments on the ST4K and RAISE datasets, OUR-GAN exhibited improved fidelity, visual coherence, and diversity compared with existing methods. The synthesized images are presented at https://anonymous-62348.github.io.
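The idea of subregion-wise super-resolution under limited memory can be illustrated with a generic overlap-tile scheme. The sketch below is not the authors' implementation: `toy_upscale` is a hypothetical stand-in for a super-resolution network (a 3x3 mean filter followed by nearest-neighbor upsampling), and `tiled_upscale`, `tile`, and `pad` are names chosen for illustration. It only shows why giving each subregion a margin of real neighboring pixels, and cropping that margin afterwards, can avoid seams at subregion boundaries.

```python
import numpy as np

def toy_upscale(x, scale=2):
    """Hypothetical stand-in for an SR network: 3x3 mean filter, then nearest-neighbor upsampling."""
    p = np.pad(x, 1, mode="edge")
    h, w = x.shape
    smooth = sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return np.repeat(np.repeat(smooth, scale, axis=0), scale, axis=1)

def tiled_upscale(x, tile=8, pad=1, scale=2):
    """Upscale one subregion at a time so only a small window is processed at once."""
    h, w = x.shape
    out = np.empty((h * scale, w * scale), dtype=float)
    for top in range(0, h, tile):
        for left in range(0, w, tile):
            bottom, right = min(top + tile, h), min(left + tile, w)
            # Extend the subregion by `pad` pixels of real context, clamped to the image.
            t0, l0 = max(top - pad, 0), max(left - pad, 0)
            b0, r0 = min(bottom + pad, h), min(right + pad, w)
            up = toy_upscale(x[t0:b0, l0:r0], scale)
            # Crop the upscaled context away and keep only the subregion itself.
            oy, ox = (top - t0) * scale, (left - l0) * scale
            out[top * scale:bottom * scale, left * scale:right * scale] = up[
                oy:oy + (bottom - top) * scale, ox:ox + (right - left) * scale
            ]
    return out
```

With `pad` at least as large as the filter radius (1 here), the tiled result matches the result of upscaling the whole image at once, so no boundary is visible. A real network has a much larger receptive field, so the required margin would be larger as well.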

Notice

Loading UHR images may take time because the files are large.

Therefore, we've posted downsampled versions of the images on this page for faster image loading.

Click on the images to access the full-size raw images.

The images may look distorted depending on the viewer since the image resolution is very high.

For this reason, please download the samples before evaluating their quality.

Download all samples (including all sections) - link

1. 16K (16,384 x 10,912) image synthesized by OUR-GAN trained with a single 4K training image.

OUR-GAN can synthesize UHR images with higher resolution than that of the training image.

The resolution of this image is 16K, whereas that of the training image is only 4K.

OUR-GAN synthesizes high-fidelity UHR images, preserving even fine details.
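As a quick check of the numbers quoted in this section, the following arithmetic compares the 4K training resolution with the 16K output resolution. The memory estimate assumes uncompressed 8-bit RGB (3 bytes per pixel), which is an assumption made here for illustration and not a statement about the file format of the samples.

```python
# Resolutions quoted in this section (width, height).
train_w, train_h = 4096, 2728      # 4K training image
out_w, out_h = 16384, 10912        # 16K synthesized image

scale_w = out_w / train_w          # 4.0
scale_h = out_h / train_h          # 4.0
pixel_ratio = (out_w * out_h) / (train_w * train_h)   # 16.0

# Assumption: uncompressed 8-bit RGB, i.e. 3 bytes per pixel.
raw_mib = out_w * out_h * 3 / 2**20                    # 511.5 MiB
```

The output is 4 times larger per side and has 16 times as many pixels as the training image, which is consistent with the notice above that full-size files are slow to load.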

Download Sec 1. samples - link

Figure: 16K (16,384 x 10,912) images synthesized by OUR-GAN

Figure: 4K (4,096 x 2,728) training image

Figure: 8K (8,192 x 5,456) images synthesized by OUR-GAN

2. Improving visual coherence

For one-shot image synthesis, achieving visual coherence while maintaining diversity is challenging.

HP-VAE-GAN[1] synthesizes diverse images but fails to capture global coherence, as shown below.

OUR-GAN, which applies vertical coordinate convolution to HP-VAE-GAN, significantly improves the global coherence of patterns while still generating diverse patterns.
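One common way to give a convolution access to vertical position is to append a channel holding the normalized row coordinate to the feature map before the convolution is applied. The sketch below shows that general idea in NumPy. The function name `add_vertical_coord`, the [-1, 1] normalization, and the (N, C, H, W) layout are assumptions for illustration; this page does not specify how OUR-GAN builds its vertical coordinate convolution.

```python
import numpy as np

def add_vertical_coord(features):
    """Append one channel containing the normalized row index to a (N, C, H, W) array."""
    n, c, h, w = features.shape
    # One value per row, from -1 (top) to 1 (bottom); constant along the width.
    ys = np.linspace(-1.0, 1.0, h, dtype=features.dtype)
    coord = np.broadcast_to(ys[None, None, :, None], (n, 1, h, w))
    return np.concatenate([features, coord], axis=1)

# Example: a batch of 2 feature maps with 3 channels gains a 4th coordinate channel.
x = np.zeros((2, 3, 4, 5), dtype=np.float32)
y = add_vertical_coord(x)
```

Because the extra channel varies only with height, a subsequent convolution can distinguish "near the top" from "near the bottom" (for example, sky versus ground) while remaining free to vary content horizontally.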

Download Sec 2. samples - link

Figure: images synthesized by HP-VAE-GAN

Figure: images synthesized by OUR-GAN (proposed) [HP-VAE-GAN + vertical coordinate convolution]

3. Large-scale shape generation

For UHR image synthesis, models that learn from small image patches, such as InfinityGAN[2], have difficulty synthesizing large-scale shapes.

In contrast, OUR-GAN can synthesize globally coherent large-scale objects such as buildings.

You can download full-size InfinityGAN samples from the InfinityGAN project page.

Download Sec 3. samples - link

Figure: images synthesized by OUR-GAN (proposed)

Figure: images synthesized by InfinityGAN

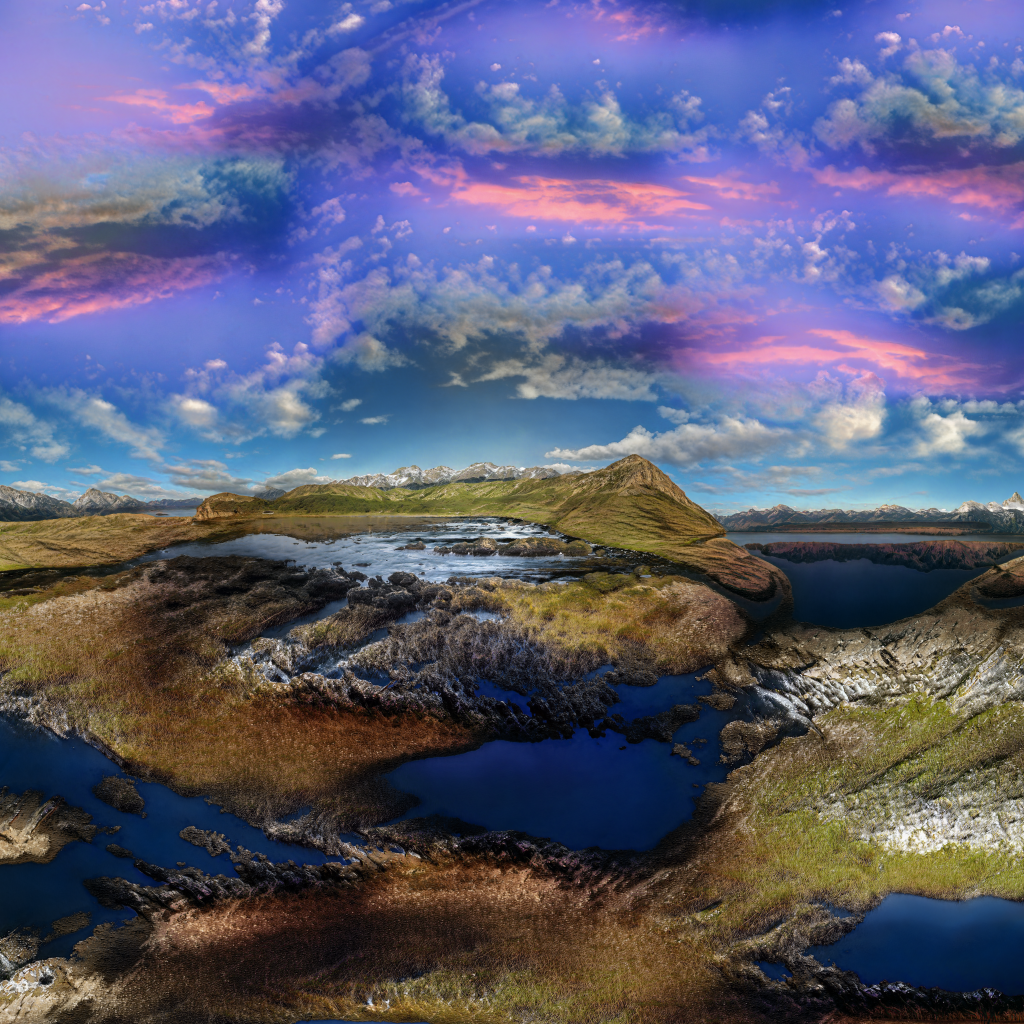

4. 16K images synthesized by OUR-GAN trained with a single 16K training image.

In this section, the training images are themselves 16K, and the images synthesized by OUR-GAN are also 16K.

OUR-GAN synthesizes high-fidelity UHR images, preserving even fine details.

Download Sec 4. samples - island, forest

Figure: 16K (16,384 x 9,152) island images synthesized by OUR-GAN

Figure: 16K (16,329 x 9,185) island training image

Figure: 16K (16,384 x 10,880) forest images synthesized by OUR-GAN

Figure: 16K (16,384 x 10,880) forest training image

References

[1] Shir Gur, Sagie Benaim, and Lior Wolf. Hierarchical patch VAE-GAN: Generating diverse videos from a single sample. In H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 16761–16772. Curran Associates, Inc., 2020.

[2] Chieh Hubert Lin, Yen-Chi Cheng, Hsin-Ying Lee, Sergey Tulyakov, and Ming-Hsuan Yang. InfinityGAN: Towards infinite-pixel image synthesis. In International Conference on Learning Representations, 2022.