ACL 2020

Selections from ACL 2020

This contains a selection** of papers from ACL 2020. For a more complete list of papers organized by topic, see here. The full list of papers (in both the main conference and in workshops) can be found here. The full proceedings, organized by track, are in this giant pdf.

I also enjoyed reading Vered Shwartz's highlights of ACL 2020, as well as Yoav Goldberg's thoughts on the format of ACL 2020 (in particular that the virtual format makes free-form conversations, socializing, meeting people, and generating ideas very difficult).

** NOTE: Some areas (Dialogue, Question Answering, Machine Translation, Language Grounding, Multilingual/cross-lingual Tasks, and Syntax) are not well-represented in this list.

- General Remarks

- Tutorials

- Birds of a feather

- Workshops

- Keynotes

- System Demonstrations

- Topics

- NLG papers

- NLU papers

- Applications

- Other

General Remarks

Four new tracks this year:

- Ethics and NLP (44)

- Interpretation and Analysis of Models for NLP (95)

- Theory and Formalism in NLP (Linguistic and Mathematical) (12)

- Theme: Taking Stock of Where We've Been and Where We're Going (65)

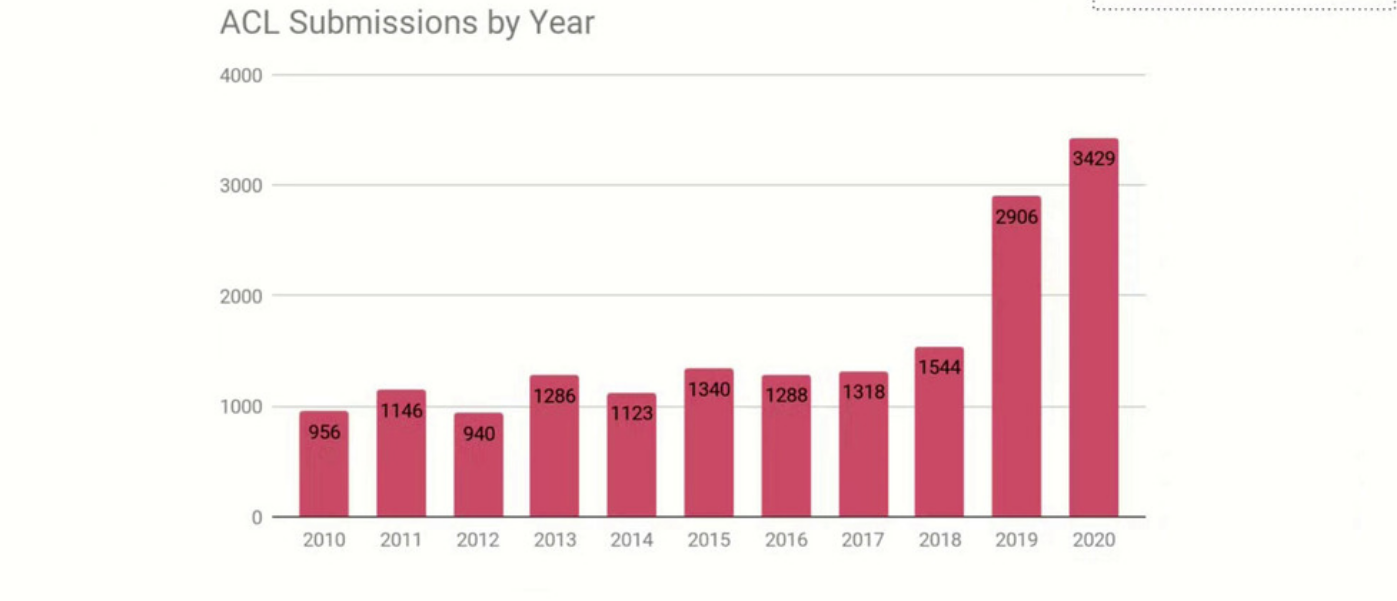

The number of submissions was over double those of ACL 2018:

Popular topics:

- ACL 2020 paper keyword statistics: https://github.com/roomylee/ACL-2020-Papers (top keywords: generation, translation, dialogue, graph, extraction).

- The six most popular tracks (by number of papers accepted) according to https://acl2020.org/blog/general-conference-statistics/:

- Machine Translation

- Machine Learning for NLP

- Dialogue and Interactive Technologies

- Information Extraction

- Generation

- NLP Applications

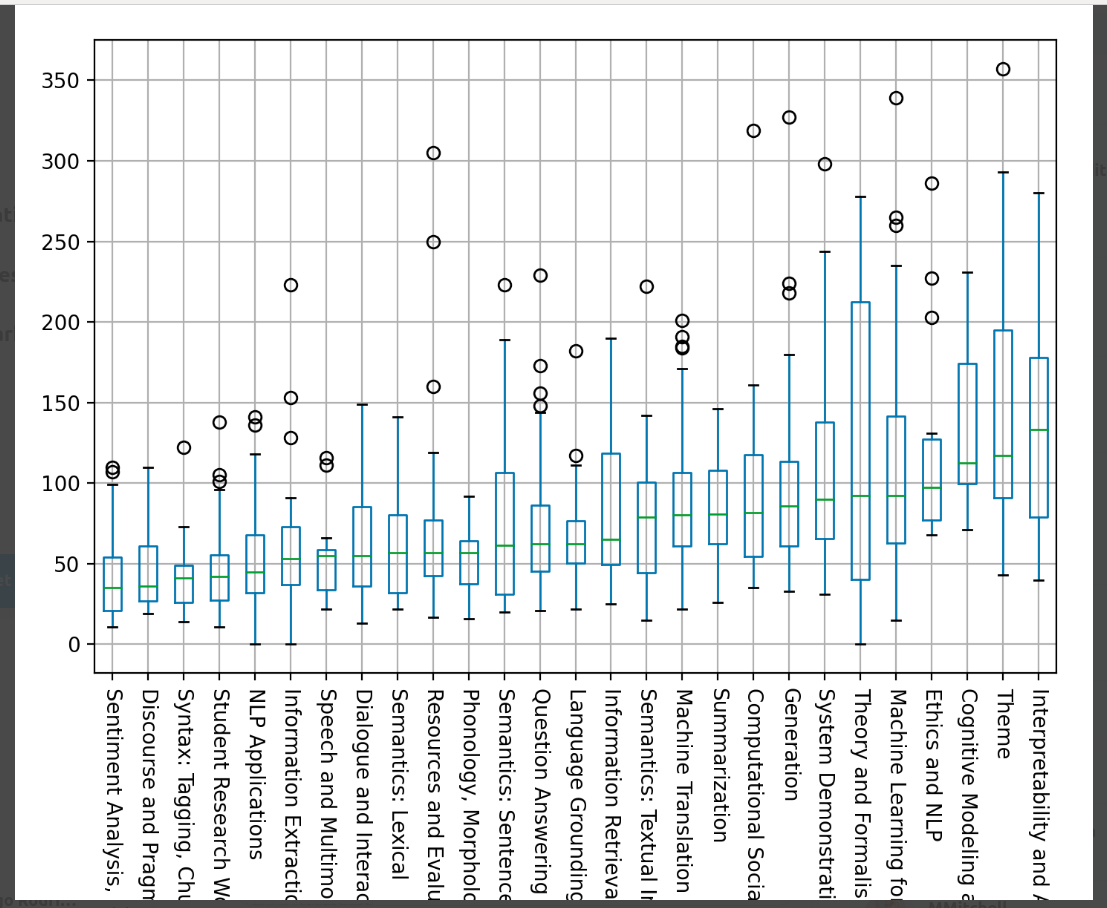

Interestingly, these don't correspond to the number of views per paper. Yoav Goldberg made a box-plot for the number of views each video received:

Box-plot for number of video views in each track. Image from https://twitter.com/yoavgo/status/1282459579339681792

Although there were far fewer Theme, Interpretability, Cognitive Modeling and Ethics papers, these had the highest number of views. On the other hand, Information Extraction, NLP Applications, and Dialogue and Interactive Systems papers had very few views.

Sadly, Discourse and Pragmatics had both few submissions and few views (despite Bonnie Webber winning the lifetime achievement award).

Tutorials

- T1: Interpretability and Analysis in Neural NLP (slides) (slides and video)

- T2: Multi-modal Information Extraction from Text, Semi-structured, and Tabular Data on the Web (slides)

- T3: Reviewing Natural Language Processing Research (slides) (slides and video)

- T4: Stylized Text Generation: Approaches and Applications (slides and video)

- T5: Achieving Common Ground in Multi-modal Dialogue (slides and video)

- T6: Commonsense Reasoning for Natural Language Processing (slides and video) (website)

- T7: Integrating Ethics into the NLP Curriculum (slides)

- T8: Open-Domain Question Answering (slides and video) (website)

Birds of a feather

Unfortunately many of these sessions were scheduled at the same time, so I missed many interesting sessions: Lexical Semantics, Summarization, Information Retrieval & Text Mining, Interpretability, Language Grounding, and NLP Applications.

There were some interesting discussions in Discourse and Pragmatics, Information Extraction, and Generation (with over a hundred people in the Generation session!). Unfortunately, the Zoom format with large numbers of people is not conducive to fluid conversations between participants; these were mostly Q&A with the host. It was good to hear some established researchers express a desire to move beyond the current "pre-training on huge datasets and fine-tuning" kind of NLP.

Workshops

Full list of workshops: https://acl2020.org/program/workshops/

- W5: FEVER 3 (papers)

- Maintaining Quality in FEVER Annotation (paper)

"Annotations in the FEVER fact-checking dataset aren't riddled with superficial shortcuts like those Niven&Kao found elsewhere.". TO FIND OUT: what about https://arxiv.org/pdf/1908.05267.pdf ?

- W7: The Second Workshop on Figurative Language Processing (papers)

- W8: NUSE (Workshop on Narrative Understanding, Storylines, and Events) (papers)

- W10: 5th Workshop on Representation Learning for NLP (papers)

- W20: NLPCovid

- WNGT (The 4th Workshop on Neural Generation and Translation) (papers)

And two multi-modal workshops:

- ALVR (Workshop on Advances in Language and Vision Research) (papers)

- The Second Grand-Challenge and Workshop on Human Multimodal Language (papers)

Keynotes

- Kathleen R. McKeown, Rewriting the Past: Assessing the Field through the Lens of Language Generation

- Josh Tenenbaum, Cognitive and computational building blocks for more human-like language in machines

System Demonstrations

Topics

🔝 Methodological

🔝 Theme Papers

See also: https://arxiv.org/abs/2003.11922 , https://arxiv.org/pdf/2006.02419.pdf

See also: Ronen Tamari mentioned Bayesian program learning (DreamCoder) as a related line of work. Also see Extraction of causal structure from procedural text for discourse representations, Neural Execution of Graph Algorithms, Object Files and Schemata: Factorizing Declarative and Procedural Knowledge in Dynamical Systems, Embodiment and language comprehension: reframing the discussion and Recent Advances in Neural Program Synthesis.

See also this discussion: To Dissect an Octopus: Making Sense of the Form/Meaning Debate

See also: Glenberg and Robertson, Symbol Grounding and Meaning: A Comparison of High-Dimensional and Embodied Theories of Meaning, 2000, Changing Notions of Linguistic Competence in the History of Formal Semantics and Distribution is not enough: going Firther

💥 How Can We Accelerate Progress Towards Human-like Linguistic Generalization? (honorable mention theme paper) (paper)

See also: McNamara and Magliano, Toward a Comprehensive Model of Comprehension, 2009

🔝 New Datasets

➖ S2ORC: The Semantic Scholar Open Research Corpus (paper) (data, model)

➖ Cross-modal Coherence Modeling for Caption Generation (paper) (data)

Clue: a new dataset of 10,000 image-caption pairs, annotated with coherence relations (e.g., visible, subjective, action, story).

➖ ASSET: A Dataset for Tuning and Evaluation of Sentence Simplification Models with Multiple Rewriting Transformations (paper) (code)

🔝 Integrating text with other kinds of data

See also: Reinald Kim Amplayo, "Rethinking Attribute Representation and Injection for Sentiment Classification", EMNLP 2019

➖ GCAN: Graph-aware Co-Attention Networks for Explainable Fake News Detection on Social Media (paper) (code)

➖ Integrating Semantic and Structural Information with Graph Convolutional Network for Controversy Detection (paper) (data)

➖ Learning to Tag OOV Tokens by Integrating Contextual Representation and Background Knowledge (paper)

🔝 Semantics

💥 What are the Goals of Distributional Semantics? (paper)

➖ A Frame-based Sentence Representation for Machine Reading Comprehension (paper)

🔝 Discourse

🔝 Explainability

I was glad to see that interpretability/explainability was a popular topic this year. Here is a summary of interpretability and model analysis methods at ACL 2020 by Carolin Lawrence.

See also: "Evaluating Explanation Methods for Neural Machine Translation" (listed under Machine Translation).

Faithfulness

💥 💥 Towards Faithfully Interpretable NLP Systems: How Should We Define and Evaluate Faithfulness? (paper)

A survey of the literature on interpretability, with a focus on faithfulness (whether an interpretation accurately represents the reasoning process behind a model's decision). Many papers conflate faithfulness and plausibility. Some guidelines are offered for future work on evaluating interpretability:

- Be explicit about which aspect of interpretability is being evaluated.

- Evaluation of faithfulness should not involve human judgment. We cannot judge whether an interpretation is faithful or not, because if we could, there would be no need for interpretability. Rather, human judgment measures plausibility. Similarly, end-task performance, while potentially valuable, is not an evaluation of faithfulness.

- Even so-called "inherently interpretable" systems should be tested for faithfulness. For example, some studies claimed attention mechanisms were inherently interpretable, while more recent work (e.g., "Attention is not explanation") has shown that one must be cautious about such claims.

- Evaluation of faithfulness should not focus only on correct model predictions.

Finally, the authors argue that researchers should move beyond "faithfulness" as a binary notion (since one can usually find counter-examples to disprove faithfulness) to a more nuanced, graded notion of faithfulness (a model should be sufficiently faithful).

💥 💥 Learning to Faithfully Rationalize by Construction (paper) (code)

A rationale is a snippet of text "explaining" a model's decision. We would like faithful rationales, but this can be difficult (e.g., contextualized representations mix signals up, and we don't know which tokens affect the output). Discrete Rationalization (Lei et al., EMNLP 2016) uses an extractor trained to select a rationale, followed by a separate classifier, which only uses the rationale to make a prediction. Unfortunately, to train the extractor one needs human rationales, which are hard to obtain.

Solution: FRESH. First train a black-box model (e.g., BERT). Then apply a saliency scorer (many options are available, and it does not need to be faithful), and use thresholding (with other heuristics) to select a text snippet from the saliency scores. This text snippet is then used to train a fresh model to make the final prediction. The rationale is thus faithful by construction. However, there is still no insight into why a given rationale was selected, or into how the rationale was used.
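The rationale-selection step can be sketched as follows. This is only a minimal illustration, assuming plain top-k thresholding over per-token saliency scores; the function name `select_rationale`, the `keep_fraction` parameter, and the example scores are all made up here, and the paper's own heuristics may differ.

```python
from typing import List, Sequence


def select_rationale(
    tokens: Sequence[str],
    saliency: Sequence[float],
    keep_fraction: float = 0.3,  # hypothetical knob, not taken from the paper
) -> List[str]:
    """Keep the highest-scoring tokens, preserving their original order."""
    if len(tokens) != len(saliency):
        raise ValueError("tokens and saliency must have the same length")
    k = max(1, round(keep_fraction * len(tokens)))
    # Indices of the k largest saliency scores (ties broken by position).
    top = sorted(range(len(tokens)), key=lambda i: (-saliency[i], i))[:k]
    return [tokens[i] for i in sorted(top)]


tokens = "the plot was dull but the acting was superb".split()
# Made-up saliency scores standing in for the output of any saliency method.
saliency = [0.01, 0.10, 0.02, 0.60, 0.05, 0.01, 0.20, 0.02, 0.90]
rationale = select_rationale(tokens, saliency, keep_fraction=0.3)
print(rationale)  # ['dull', 'acting', 'superb']
```

The second model would then be trained on `rationale` alone and never see the rest of the text, which is what makes the rationale faithful by construction.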

See also: Jacovi and Goldberg, Aligning Faithful Interpretations with their Social Attribution

A systematic evaluation of the intermediate outputs of neural module networks on the NLVR2 and DROP datasets, to see whether modules are faithful (i.e., that the modules do what we would expect). Results: no, they are not faithful ("the intermediate outputs differ from the expected output"). Several methods are explored which improve faithfulness.

Evaluation

Carries out human subject tests to evaluate the "simulatability" of explainability methods ("a model is simulatable when a person can predict its behavior on new inputs"), on both text and tabular data. Users try to predict model output, with or without a corresponding model explanation.
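A rough sketch of how such a test could be scored, assuming the quantity of interest is the change in how often users correctly predict the model's output once they are shown explanations. The function name and the toy data are hypothetical, and the paper's actual protocol is more involved.

```python
from typing import Sequence


def simulation_accuracy(
    user_guesses: Sequence[str], model_outputs: Sequence[str]
) -> float:
    """Fraction of inputs on which the user predicted the model's output."""
    if len(user_guesses) != len(model_outputs):
        raise ValueError("need one guess per model output")
    matches = sum(g == m for g, m in zip(user_guesses, model_outputs))
    return matches / len(model_outputs)


# Note: guesses are compared with what the model said, not with gold labels.
model_outputs = ["pos", "neg", "neg", "pos"]
without_explanation = ["pos", "pos", "neg", "neg"]
with_explanation = ["pos", "neg", "neg", "neg"]

effect = simulation_accuracy(with_explanation, model_outputs) - simulation_accuracy(
    without_explanation, model_outputs
)
print(effect)  # 0.25
```

A positive `effect` would suggest that the explanations helped users simulate the model.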

A dataset of rationales for various NLP tasks, together with metrics that capture faithfulness of the rationales (comprehensiveness: "were all features needed to make a prediction?", and sufficiency: "are the rationales sufficient to make a given prediction?")
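The two metrics can be sketched like this, under the assumption that both are measured as drops in the model's confidence in its predicted class: comprehensiveness when the rationale is removed, sufficiency when only the rationale is kept. The `toy_predict` function is a hypothetical stand-in for a trained model.

```python
from typing import Callable, List, Sequence, Set

# A predictor maps a token list to P(predicted class | tokens).
Predictor = Callable[[List[str]], float]


def comprehensiveness(
    predict: Predictor, tokens: Sequence[str], rationale_idx: Set[int]
) -> float:
    """Confidence drop when the rationale tokens are removed (higher is better)."""
    remainder = [t for i, t in enumerate(tokens) if i not in rationale_idx]
    return predict(list(tokens)) - predict(remainder)


def sufficiency(
    predict: Predictor, tokens: Sequence[str], rationale_idx: Set[int]
) -> float:
    """Confidence drop when only the rationale is kept (lower is better)."""
    only_rationale = [t for i, t in enumerate(tokens) if i in rationale_idx]
    return predict(list(tokens)) - predict(only_rationale)


def toy_predict(tokens: List[str]) -> float:
    # Hypothetical model: confident only when it sees the word "superb".
    return 0.9 if "superb" in tokens else 0.5


tokens = ["the", "acting", "was", "superb"]
comp = comprehensiveness(toy_predict, tokens, {3})
suff = sufficiency(toy_predict, tokens, {3})
```

Here removing "superb" costs the toy model a lot of confidence, while keeping only "superb" costs it nothing, so the rationale scores well on both metrics.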

Attention

See also these earlier papers on whether attention can be used for explainability: Jain and Wallace, "Attention is not Explanation" (2019), Serrano and Smith, "Is Attention Interpretable?" (2019), and Wiegreffe & Pinter, "Attention is not not Explanation" (2019).

This paper investigates why attention weights in LSTMs are often neither plausible nor faithful; one reason for this is the low diversity of hidden states. To improve interpretability, a diversity objective is added which increases the diversity of the hidden representations. Future work: try something similar for transformer-based models.
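To make "low diversity of hidden states" concrete, here is one possible measure, assumed purely for illustration: the mean cosine similarity between each hidden state and the average hidden state. Values near 1 mean the states all point the same way. The paper's exact objective may well differ, and the function names below are mine.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def mean_cosine_to_centroid(hidden_states: Sequence[Sequence[float]]) -> float:
    """Average cosine similarity between each state and the mean state."""
    n = len(hidden_states)
    dim = len(hidden_states[0])
    centroid: List[float] = [sum(h[d] for h in hidden_states) / n for d in range(dim)]
    return sum(cosine(h, centroid) for h in hidden_states) / n


similar = [[1.0, 0.0], [1.0, 0.1], [1.0, -0.1]]  # nearly identical directions
diverse = [[1.0, 0.0], [0.0, 1.0]]  # orthogonal directions

low_diversity = mean_cosine_to_centroid(similar)
high_diversity = mean_cosine_to_centroid(diverse)
```

A diversity objective in this spirit would add a term to the training loss that pushes a score like this down.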

Presents a simple method for training models with deceptive attention masks, with very little reduction in accuracy. The method reduces weights for certain tokens which are actually used to drive predictions. A human study shows that the manipulated attention-based explanations can trick people into thinking that biased models (against gender) are unbiased.
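A minimal sketch of how attention on selected tokens could be pushed down during training, assuming the task loss is combined with a penalty on the attention mass assigned to a flagged set of tokens. The specific form used here (negative log of the remaining mass), the `strength` parameter, and the names are assumptions for illustration.

```python
import math
from typing import Sequence


def attention_penalty(
    attention: Sequence[float],
    flagged: Sequence[bool],
    strength: float = 1.0,  # hypothetical weight on the penalty term
) -> float:
    """Penalty that grows with the attention mass placed on flagged tokens."""
    mass = sum(a for a, is_flagged in zip(attention, flagged) if is_flagged)
    # Clamp to avoid log(0) when all attention sits on flagged tokens.
    return -strength * math.log(max(1.0 - mass, 1e-12))


flagged = [False, True, False]  # e.g., the middle token is a gendered word
before = attention_penalty([0.10, 0.70, 0.20], flagged)
after = attention_penalty([0.45, 0.05, 0.50], flagged)
```

Minimizing this term alongside the task loss drives attention away from the flagged tokens, even if the model still relies on them through other pathways, which is what makes the resulting attention maps misleading.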

Creates a new dataset encoding what parts of a text humans focus on when doing text classification based on Yelp reviews. Various metrics are introduced to compare the human and computer attention weights. Results: (1) "human-likeness" of the attention is very sensitive to attention type, with biRNNs being most similar, (2) increased text length reduces the similarity, across models. I wonder if Hierarchical Attention Networks would do better here.

➖ Understanding Attention for Text Classification (paper) (code)

Looks at attention and polarity scores as training approaches a local minimum to understand under which conditions attention is more or less interpretable.

Influence Functions

If one has access to the training dataset, one can ask: which are the training examples that influenced the prediction the most?

An empirical investigation of influence functions in NLP tasks, answering the following: (1) do they reliably explain transformer-based models? (2) are they consistent with insights from gradient-based saliency scores? In addition, the authors discuss the use of influence functions to analyze artifacts in the training data.
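To illustrate the question "which training examples influenced this prediction the most?", here is a deliberately simplified sketch for a tiny logistic regression. Proper influence functions also involve the inverse Hessian of the training loss; the code below drops that term and ranks training examples by the dot product of loss gradients only, so it is a stand-in rather than the method studied in the paper. All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (features, label in {0, 1})


def loss_gradient(weights: Sequence[float], example: Example) -> List[float]:
    """Gradient of the logistic log loss with respect to the weights."""
    features, label = example
    logit = sum(w * x for w, x in zip(weights, features))
    prob = 1.0 / (1.0 + math.exp(-logit))
    return [(prob - label) * x for x in features]


def rank_by_gradient_similarity(
    weights: Sequence[float], train: Sequence[Example], test_example: Example
) -> List[int]:
    """Training indices, most helpful first, scored by gradient dot product."""
    g_test = loss_gradient(weights, test_example)
    scored = []
    for i, example in enumerate(train):
        g_train = loss_gradient(weights, example)
        scored.append((sum(a * b for a, b in zip(g_test, g_train)), i))
    return [i for _, i in sorted(scored, reverse=True)]


weights = [0.5, -0.5]
train = [([0.0, 1.0], 1), ([1.0, 0.0], 0), ([1.0, 0.0], 1)]
test_example = ([1.0, 0.0], 1)
ranking = rank_by_gradient_similarity(weights, train, test_example)
print(ranking)  # [2, 0, 1]
```

The training example identical to the test example ranks first, the unrelated one sits in the middle, and the one with the same features but the opposite label ranks last.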


➖ Considering Likelihood in NLP Classification Explanations with Occlusion and Language Modeling (SRW) (Student Research Workshop) (paper) (code)

Other

➖ Interpretable Operational Risk Classification with Semi-Supervised Variational Autoencoder (paper)

🔝 Learning with Less Data

Data Augmentation

💥 Good-Enough Compositional Data Augmentation (paper) (code)

Are the limitations of BERT on natural language inference (NLI) due to limitations of the model, or the lack of enough (appropriate) data? The training set is augmented with "syntactically informative examples" (e.g., subject/object inversion), which improves BERT's performance on controlled examples.
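A toy sketch of what a subject/object inversion example might look like, assuming the input has already been split into subject, verb, and object (the paper works from parsed sentences). The helper names and the "non-entailment" label string are assumptions here.

```python
from typing import Dict


def capitalize_first(text: str) -> str:
    """Upper-case the first character only, leaving the rest untouched."""
    return text[:1].upper() + text[1:]


def invert_subject_object(subject: str, verb: str, obj: str) -> Dict[str, str]:
    """Build an NLI pair whose hypothesis swaps the subject and the object."""
    premise = capitalize_first(f"{subject} {verb} {obj}.")
    hypothesis = capitalize_first(f"{obj} {verb} {subject}.")
    # Assumed label: the swapped sentence does not follow from the premise.
    return {"premise": premise, "hypothesis": hypothesis, "label": "non-entailment"}


example = invert_subject_object("the lawyer", "saw", "the doctor")
print(example["premise"])     # The lawyer saw the doctor.
print(example["hypothesis"])  # The doctor saw the lawyer.
```

The assumed label only holds for asymmetric verbs: with a symmetric verb such as "met", the swapped sentence would be entailed, so a real pipeline would need to filter those.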

➖ Incorporating External Knowledge through Pre-training for Natural Language to Code Generation (paper) (code)

Other

[less data]: "We show that self-training — a semi-supervised technique for incorporating unlabeled data — sets a new state-of-the-art for the self-attentive parser on disfluency detection, demonstrating that self-training provides benefits orthogonal to the pre-trained contextualized word representations"
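For readers unfamiliar with self-training, the generic loop looks roughly like this. The sketch uses a toy one-dimensional threshold classifier in place of the parser, and the `margin` confidence rule and all names are hypothetical.

```python
from typing import List, Sequence, Tuple

Labeled = Tuple[float, int]  # (feature value, label in {0, 1})


def fit_threshold(labeled: Sequence[Labeled]) -> float:
    """Toy classifier: the midpoint between the two class means."""
    neg = [x for x, y in labeled if y == 0]
    pos = [x for x, y in labeled if y == 1]
    return (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2


def self_train(
    labeled: Sequence[Labeled],
    unlabeled: Sequence[float],
    margin: float = 1.0,  # hypothetical confidence cut-off
    rounds: int = 3,
) -> float:
    """Repeatedly pseudo-label confident points and refit the classifier."""
    data: List[Labeled] = list(labeled)
    pool = list(unlabeled)
    for _ in range(rounds):
        threshold = fit_threshold(data)
        confident = [x for x in pool if abs(x - threshold) >= margin]
        if not confident:
            break
        # Add the model's own predictions as if they were gold labels.
        data += [(x, int(x > threshold)) for x in confident]
        pool = [x for x in pool if abs(x - threshold) < margin]
    return fit_threshold(data)


final_threshold = self_train([(0.0, 0), (4.0, 1)], [0.5, 1.5, 3.0, 5.0])
print(final_threshold)  # 2.125
```

The point close to the initial decision boundary (1.5) is never pseudo-labeled, which is the usual safeguard against reinforcing the model's own mistakes.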

🔝 Language Models and Transformers

➖ Effective Estimation of Deep Generative Language Models (paper) (code)

🔝 Embeddings

➖ Estimating Mutual Information Between Dense Word Embeddings (paper) (code)

🔝 Cognitive Models and Psycholinguistics

See also: (earlier paper by the same authors, which contains some additional analysis) Revisiting the poverty of the stimulus: hierarchical generalization without a hierarchical bias in recurrent neural networks

💥 A Systematic Assessment of Syntactic Generalization in Neural Language Models (paper) (code)

➖ Recollection versus Imagination: Exploring Human Memory and Cognition via Neural Language Models (paper) (data)

NLG papers

🔝 Dialogue

🔝 Text Generation

Evaluation:

💥 BLEURT: Learning Robust Metrics for Text Generation (paper) (code)

Generation with constraints:

Poetry generation, in French and English, conditioned on a given "topic".

➖ Rigid Formats Controlled Text Generation (paper) (code)

Generation of English sonnets and classical Chinese poetry.

Beyond plain autoregressive text generation:

See also the papers under "Infilling" below.

VAE

Generation via Retrieval and/or Editing:

See also: "Iterative Edit-Based Unsupervised Sentence Simplification" listed under Summarization and Simplification.

➖ Unsupervised Paraphrasing by Simulated Annealing (paper) (code)

Other

Detecting generated text:

➖ Automatic Detection of Generated Text is Easiest when Humans are Fooled (paper)

Infilling:

💥 Enabling Language Models to Fill in the Blanks (paper) (code) (demo)

NLU and NLG together:

See also:

- Pickering and Garrod, An integrated theory of language production and comprehension (2013)

- Dell, Gary S., and Franklin Chang. "The P-chain: Relating sentence production and its disorders to comprehension and acquisition." (2014)

🔝 Data-to-Text Generation

See also: http://nlpprogress.com/english/data_to_text_generation.html

➖ Few-Shot NLG with Pre-Trained Language Model (paper) (code)

Table-to-Text Generation:

AMR-to-Text Generation:

➖ GPT-too: A Language-Model-First Approach for AMR-to-Text Generation (paper) (code)

➖ Line Graph Enhanced AMR-to-Text Generation with Mix-Order Graph Attention Networks (paper) (code)

🔝 Summarization and Simplification

Evaluation:

➖ Fact-based Content Weighting for Evaluating Abstractive Summarisation (paper) (code)

Factuality, Truthfulness, Faithfulness:

Noisy data is one reason for "hallucination" of facts in neural summarization systems. One way to deal with this issue is to replace log loss with a more robust alternative.
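One robust alternative, assumed here for illustration, is to truncate the loss: compute the per-example log loss as usual, but drop the highest-loss fraction of each batch on the theory that those examples are the noisy ones. The function name and the `drop_fraction` parameter are hypothetical.

```python
from typing import Sequence


def truncated_mean_loss(losses: Sequence[float], drop_fraction: float = 0.1) -> float:
    """Mean loss after discarding the highest-loss fraction of examples."""
    if not losses:
        raise ValueError("losses must be non-empty")
    if not 0.0 <= drop_fraction < 1.0:
        raise ValueError("drop_fraction must be in [0, 1)")
    n_keep = len(losses) - int(drop_fraction * len(losses))
    kept = sorted(losses)[:n_keep]  # the lowest-loss examples survive
    return sum(kept) / len(kept)


# Made-up per-example log losses; the 9.0 plays the role of a noisy reference.
batch_losses = [0.2, 0.3, 0.1, 9.0, 0.4]
plain = sum(batch_losses) / len(batch_losses)
robust = truncated_mean_loss(batch_losses, drop_fraction=0.2)
```

With plain log loss the single outlier dominates the batch average, whereas the truncated version ignores it and the model is no longer pushed to reproduce it.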

Unsupervised:

➖ The Summary Loop: Learning to Write Abstractive Summaries Without Examples (paper) (code)

Simplification

➖ Neural CRF Model for Sentence Alignment in Text Simplification (paper) (code and data)

See also: "Iterative Edit-Based Unsupervised Sentence Simplification" and "ASSET: A Dataset for Tuning and Evaluation of Sentence Simplification Models with Multiple Rewriting Transformations", above.

🔝 Machine Translation

💥 Hard-Coded Gaussian Attention for Neural Machine Translation (paper) (code)

See also: Fixed Encoder Self-Attention Patterns in Transformer-Based Machine Translation

➖ “You Sound Just Like Your Father” Commercial Machine Translation Systems Include Stylistic Biases (paper)

🔝 Style Transfer

➖ Exploring Contextual Word-level Style Relevance for Unsupervised Style Transfer (paper)

NLU papers

🔝 Text Classification

➖ Text Classification with Negative Supervision (paper)

➖ Every Document Owns Its Structure: Inductive Text Classification via Graph Neural Networks (paper) (code)

🔝 Topic Models

➖ Neural Topic Modeling with Bidirectional Adversarial Training (paper)

🔝 Knowledge Graphs

➖ Can We Predict New Facts with Open Knowledge Graph Embeddings? A Benchmark for Open Link Prediction (paper) (code)

🔝 Hypernymy Detection

➖ Hypernymy Detection for Low-Resource Languages via Meta Learning (paper)

🔝 Natural Language Inference

See also these papers from ACL 2020 listed under other sections: "NILE: Natural Language Inference with Faithful Natural Language Explanations" and "Syntactic Data Augmentation Increases Robustness to Inference Heuristics".

See also these similar papers: Rohde et al., 2018 for discourse coherence relations; Handler et al., 2019 for sentence acceptability judgements.

➖ Towards Robustifying NLI Models Against Lexical Dataset Biases (paper) (code)

🔝 Emergent Language in Multi-Agent Communication

💥 Multi-agent Communication meets Natural Language: Synergies between Functional and Structural Language Learning (paper)

See also: Terry Regier, Noga Zaslavsky, Ted Gibson and https://cogsci.mindmodeling.org/2018/papers/0363/0363.pdf

🔝 Applications

🔝 Other

💥 PuzzLing Machines: A Challenge on Learning From Small Data (paper) (code)

See also: ARC Challenge. Compositional attention networks for machine reasoning (https://arxiv.org/pdf/1803.03067.pdf)

➖ It Takes Two to Lie: One to Lie, and One to Listen (paper) (data) (see also)

➖ Logical Inferences with Comparatives and Generalized Quantifiers (SRW) (paper) (code)

Stolen Probability: A Structural Weakness of Neural Language Models

Analysing Lexical Semantic Change with Contextualised Word Representations

Improving Disentangled Text Representation Learning with Information-Theoretic Guidance

Language (Technology) is Power: A Critical Survey of "Bias" in NLP

Toward Gender-Inclusive Coreference Resolution

MixText: Linguistically-Informed Interpolation of Hidden Space for Semi-Supervised Text Classification

Shape of Synth to Come

A Knowledge-Enhanced Pretraining Model for Commonsense Story Generation

** Generalizing Natural Language Analysis through Span-relation Representations