The bigger picture: paper

A nice looking picture: poster

A 30fps sequence of pictures: Rise @ MLIR ODM talk

Core lambda calculus:

- `rise.lambda`
- `rise.apply`
- `rise.return`

Patterns:

- `rise.zip`
- `rise.mapSeq`
- `rise.mapPar`
- `rise.reduceSeq`
- `rise.tuple`
- `rise.fst`
- `rise.snd`

Interoperability:

- `rise.embed`
- `rise.in`
- `rise.out`

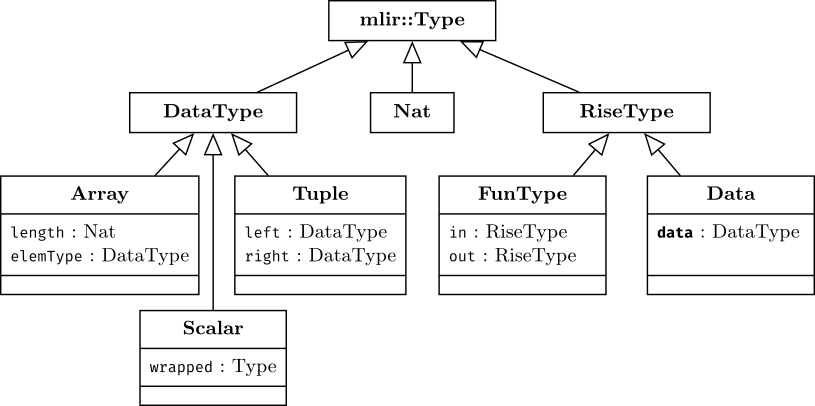

- We clearly separate functions from data, i.e. functions can never be stored in an array.

- Nats denote the dimensions of Arrays. They will support computations in the indices.

- Scalars wrap arbitrary scalar types from other MLIR dialects, e.g. `scalar<f32>`.

All our operations return a RiseType. rise.literal and rise.apply return a Data and all others return a FunType.

Next to the operations we have the following Attributes:

- NatAttr -> `#rise.nat<natural_number_here>`, e.g. `#rise.nat<1>`
- DataTypeAttr -> `#rise.some_datatype_here`, e.g. `#rise.scalar<f32>` or `#rise.array<4, scalar<f32>>`
- LiteralAttr -> `#rise.lit<some_datatype_and_its_value>`, e.g. `#rise.lit<2.0>` (the printed form is likely to change soon to separate the type from the value more clearly)

We follow the MLIR syntax.

Operations begin with: rise.

Types begin with: !rise. (although we omit !rise. when nesting types, e.g. !rise.array<4, scalar<f32>> instead of !rise.array<4, !rise.scalar<f32>>)

Attributes begin with: #rise.

See the following examples of types:

- `!rise.scalar<f32>` - Float type
- `!rise.array<4, scalar<f32>>` - ArrayType of size `4` with elementType `scalar<f32>`
- `!rise.array<2, array<2, scalar<f32>>>` - ArrayType of size `2` with elementType ArrayType of size `2` with elementType `scalar<f32>`

Note FunTypes always have a RiseType (either Data or FunType) both as input and output!

- `!rise.fun<tuple<scalar<f32>, scalar<f32>> -> scalar<f32>>` - FunType from a tuple of two `scalar<f32>` to a single `scalar<f32>`
- `!rise.fun<fun<scalar<f32> -> scalar<f32>> -> scalar<f32>>` - FunType with input FunType from (`scalar<f32>` to `scalar<f32>`) to `scalar<f32>`
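The nesting rule for type syntax (the `!rise.` prefix appears once at the top level and is omitted for nested types) can be illustrated with a small Python model. This is only a sketch for reading the notation: the class names `ScalarType`, `ArrayType`, `TupleType`, `FunType` and the `render` helper are hypothetical and are not part of the dialect's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalarType:
    wrapped: str  # a scalar type from another MLIR dialect, e.g. "f32"

    def body(self) -> str:
        return f"scalar<{self.wrapped}>"


@dataclass(frozen=True)
class ArrayType:
    size: int
    elem: object  # any data type from this model

    def body(self) -> str:
        return f"array<{self.size}, {self.elem.body()}>"


@dataclass(frozen=True)
class TupleType:
    first: object
    second: object

    def body(self) -> str:
        return f"tuple<{self.first.body()}, {self.second.body()}>"


@dataclass(frozen=True)
class FunType:
    arg: object     # either a data type or another FunType
    result: object  # either a data type or another FunType

    def body(self) -> str:
        return f"fun<{self.arg.body()} -> {self.result.body()}>"


def render(ty) -> str:
    """Print a type: the `!rise.` prefix is written only at the top level."""
    return "!rise." + ty.body()


f32 = ScalarType("f32")
print(render(ArrayType(2, ArrayType(2, f32))))
# !rise.array<2, array<2, scalar<f32>>>
print(render(FunType(FunType(f32, f32), f32)))
# !rise.fun<fun<scalar<f32> -> scalar<f32>> -> scalar<f32>>
```

The rendered strings match the type examples listed above, which makes it easy to check bracket nesting by hand.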

See the following examples of attributes:

- `#rise.lit<4.0>` - LiteralAttribute containing a `float` of value `4`
- `#rise.lit<array<4, scalar<f32>, [1,2,3,4]>>` - LiteralAttribute containing an Array of `4` floats with values 1, 2, 3 and 4

Consider the following example: map(fun(summand => summand + summand), [5, 5, 5, 5]) that will compute [10, 10, 10, 10].

We have the map function, which is called with two arguments: a lambda expression that doubles its input and an array literal.

We model each of these components individually:

- the function call via a `rise.apply` operation;
- the `map` function via the `rise.map` operation;
- the lambda expression via the `rise.lambda` operation; and finally
- the array literal via the `rise.literal` operation.

Overall the example in the Rise MLIR dialect looks like

```
%array = rise.literal #rise.lit<array<4, scalar<f32>, [5,5,5,5]>>
%doubleFun = rise.lambda (%summand : !rise.scalar<f32>) -> !rise.scalar<f32> {
    %doubled = rise.embed(%summand) {
        %added = addf %summand, %summand : f32
        rise.return %added : f32
    }
    rise.return %doubled : !rise.scalar<f32>
}
%map = rise.map #rise.nat<4> #rise.scalar<f32> #rise.scalar<f32>
%mapDoubleFun = rise.apply %map, %doubleFun, %array
```
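As a cross-check of what this example computes, the same structure can be mimicked in plain Python. This is a minimal sketch of the semantics only: `rise_map`, `rise_apply` and `double_fun` are hypothetical Python stand-ins named after the operations, not an API offered by the dialect.

```python
def rise_map(f):
    """Stand-in for the map pattern: a curried function awaiting its array."""
    return lambda xs: [f(x) for x in xs]


def rise_apply(fun, *args):
    """Stand-in for function application: feed the arguments one at a time."""
    result = fun
    for arg in args:
        result = result(arg)
    return result


def double_fun(summand):
    """Stand-in for the lambda: doubles its input."""
    return summand + summand


array = [5.0, 5.0, 5.0, 5.0]  # the array literal
map_double_fun = rise_apply(rise_map, double_fun, array)
print(map_double_fun)  # [10.0, 10.0, 10.0, 10.0]
```

As in the IR, `map` itself is just a function value, and the actual call happens only in the application step.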

Let us highlight some key principles regarding the map operation that are true for all Rise patterns (zip, fst, ...):

- `rise.map` is a function that is called using `rise.apply`.
- For `rise.map` a couple of attributes are specified, here: `rise.map #rise.nat<4> #rise.scalar<f32> #rise.scalar<f32>`. These are required to specify the type of the `map` function at this specific call site. You can think about the `rise.map` operation as being polymorphic and that the attributes specify the type parameters to make the resulting MLIR value `%map` monomorphic (i.e. it has a concrete type free of type parameters).
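To make the idea of type parameters concrete, the sketch below instantiates a type for `map` from the three attributes. It assumes the usual curried shape of map, (s -> t) -> array<n, s> -> array<n, t>; the helper `map_type` is hypothetical, and the exact string the dialect prints for `%map` may differ.

```python
def map_type(n: int, s: str, t: str) -> str:
    """Instantiate the (assumed) polymorphic type of map.

    n: array length, s: input element type, t: output element type.
    """
    return (
        f"!rise.fun<fun<{s} -> {t}> -> "
        f"fun<array<{n}, {s}> -> array<{n}, {t}>>>"
    )


# Corresponds to: rise.map #rise.nat<4> #rise.scalar<f32> #rise.scalar<f32>
print(map_type(4, "scalar<f32>", "scalar<f32>"))
```

Once `n`, `s` and `t` are fixed, no type parameters remain in the result, which is what monomorphic means here.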

Lowering Rise code to imperative code is accomplished with the riseToImperative pass of mlir-opt.

This brings us from the functional lambda calculus representation of Rise to an imperative representation, which for us right now means a mixture of the std, loop and linalg dialects.

Leveraging the existing passes in MLIR, we can emit the LLVM IR dialect by executing the passes: `mlir-opt -convert-rise-to-imperative -convert-linalg-to-loops -convert-loop-to-std -convert-std-to-llvm`

The operations shown above model lambda calculus together with common data-parallel patterns and some operations for interoperability with other dialects.

In addition, we have the following internal codegen operations, which drive the imperative code generation. These are intermediate operations in the sense that they are created and consumed within the riseToImperative pass. They should not be used manually, and they will not be emitted in the lowered code.

- `rise.codegen.assign`
- `rise.codegen.idx`
- `rise.codegen.zip`
- `rise.codegen.fst`
- `rise.codegen.snd`

These intermediate operations are constructed during the first lowering phase (rise -> intermediate) and are mostly used to model indexing for reading and writing multidimensional data. They have similarities with views on the data. For details on the translation of these codegen operations to the final indexings, refer to Figure 6 of this paper[1].
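The view-like behaviour of these operations can be illustrated with a toy model in Python. This is a sketch under assumptions, not the algorithm of the riseToImperative pass or of the paper: the classes `Buffer`, `Zip`, `Idx`, `Fst`, `Snd` and the function `resolve` are hypothetical names that only mirror the idx, zip, fst and snd operations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Buffer:
    name: str  # a concrete array in memory


@dataclass(frozen=True)
class Zip:
    lhs: object
    rhs: object


@dataclass(frozen=True)
class Idx:
    view: object
    index: str  # symbolic index, e.g. a loop variable name


@dataclass(frozen=True)
class Fst:
    view: object


@dataclass(frozen=True)
class Snd:
    view: object


def resolve(view, path=()):
    """Turn a nested view into a concrete access such as "a[i]".

    `path` holds the accesses still to be applied, innermost first.
    """
    if isinstance(view, Idx):
        return resolve(view.view, (("idx", view.index),) + path)
    if isinstance(view, Fst):
        return resolve(view.view, (("fst",),) + path)
    if isinstance(view, Snd):
        return resolve(view.view, (("snd",),) + path)
    if isinstance(view, Zip):
        # zip(a, b)[i].fst reads a[i]; zip(a, b)[i].snd reads b[i].
        if len(path) < 2 or path[0][0] != "idx" or path[1][0] not in ("fst", "snd"):
            raise ValueError("a zip view must be indexed and then projected")
        chosen = view.lhs if path[1][0] == "fst" else view.rhs
        return resolve(chosen, (path[0],) + path[2:])
    if isinstance(view, Buffer):
        if any(step[0] != "idx" for step in path):
            raise ValueError("only index accesses can reach a buffer")
        return view.name + "".join(f"[{step[1]}]" for step in path)
    raise TypeError(f"unknown view: {view!r}")


zipped = Zip(Buffer("a"), Buffer("b"))
print(resolve(Fst(Idx(zipped, "i"))))             # a[i]
print(resolve(Snd(Idx(zipped, "i"))))             # b[i]
print(resolve(Idx(Idx(Buffer("m"), "i"), "j")))   # m[i][j]
```

No zipped array is ever materialised in this model: the zip only redirects an index access to one of its inputs, which is the sense in which such operations resemble views on the data.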

Outdated but kept for future reference: Here are further descriptions of lowering specific examples:

- current state of lowering to imperative

- current (outdated) state of lowering to imperative

- lowering strategy and concepts

- lowering a simple reduction

- lowering a simple 2D map

- lowering a simple map

- lowering a simple zip

- outdated - lowering a simple reduction - example

- outdated - lowering a reduction - IR transformation

- concept for lowering a dot_product

- matrix-multiplication example