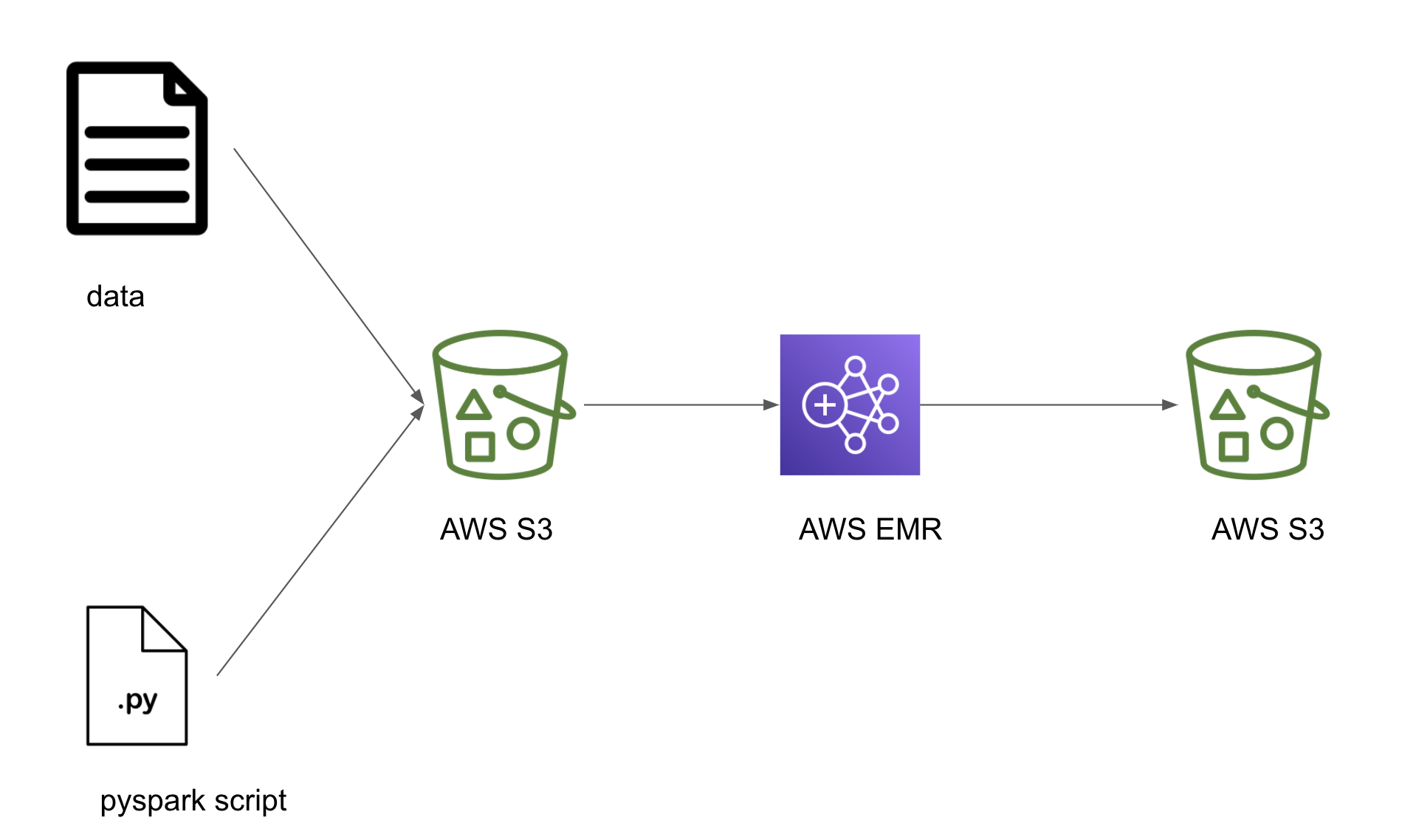

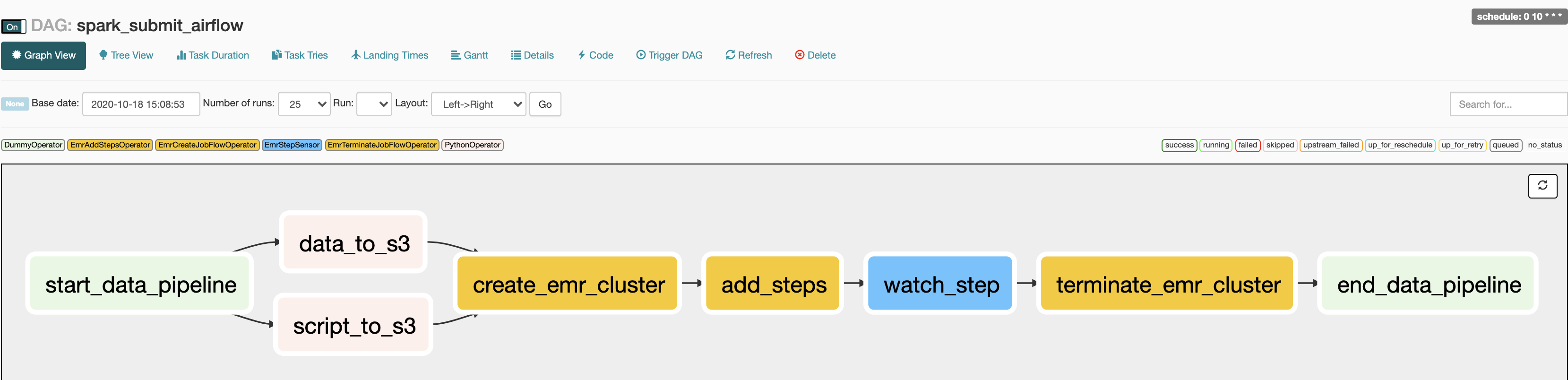

This is the repository for the blog post How to submit Spark jobs to EMR cluster from Airflow.

- Docker (make sure you have docker-compose as well).

- git to clone the starter repo.

- AWS account to set up required cloud services.

- Install and configure AWS CLI on your machine.
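The prerequisites above can be checked from a terminal before going further. The following is a minimal sketch, not part of the repository: `missing_tools` is a hypothetical helper name, and the check only confirms that each command is on your `PATH`, not that the AWS CLI has been configured with credentials.

```shell
# Print the name of every tool in the argument list that is not on PATH.
# Returns 0 when all tools are found, 1 otherwise.
missing_tools() {
    rc=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
            rc=1
        fi
    done
    return "$rc"
}
```

Calling `missing_tools docker docker-compose git aws` prints nothing when everything is installed, and otherwise prints one missing tool per line.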

From the project directory, run the following commands.

wget https://www.dropbox.com/sh/amdyc6z8744hrl5/AADS8aPTbA-dRAUjvVfjTo2qa/movie_review

mkdir ./dags/data

mv movie_review ./dags/data/movie_review.csv

If this is your first time using AWS, make sure to check for the presence of the EMR_EC2_DefaultRole and EMR_DefaultRole default roles as shown below.

aws iam list-roles | grep 'EMR_DefaultRole\|EMR_EC2_DefaultRole'

# "RoleName": "EMR_DefaultRole",

# "RoleName": "EMR_EC2_DefaultRole",

If the roles are not present, create them using the following command.

aws emr create-default-roles

Also create a bucket using the following command.

aws s3api create-bucket --acl public-read-write --bucket <your-bucket-name>

Replace `<your-bucket-name>` with your bucket name. For example, if your bucket name is my-bucket, the above command becomes `aws s3api create-bucket --acl public-read-write --bucket my-bucket`. Press q to exit the prompt.
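The role check described earlier can be scripted so that `aws emr create-default-roles` only runs when a role is actually missing. The following is a minimal sketch, not part of the repository: `missing_emr_roles` is a hypothetical helper that reads the output of `aws iam list-roles` on standard input and prints whichever of the two default roles it does not find.

```shell
# Print each required EMR role that is absent from the role listing on stdin.
# Prints nothing when both roles are present.
missing_emr_roles() {
    listing=$(cat)
    for role in EMR_DefaultRole EMR_EC2_DefaultRole; do
        # -w matches whole words only, so a longer role name that merely
        # contains one of these names does not count as a match.
        if ! printf '%s\n' "$listing" | grep -qw "$role"; then
            printf '%s\n' "$role"
        fi
    done
}
```

With configured AWS credentials, one way to use it is `missing=$(aws iam list-roles | missing_emr_roles)` followed by `[ -z "$missing" ] || aws emr create-default-roles`.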

After use, you can delete your S3 bucket as shown below

aws s3api delete-bucket --bucket <your-bucket-name>

and press q to exit the prompt
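Note that `aws s3api delete-bucket` only succeeds on an empty bucket, and after running the DAG the bucket will likely still hold files. The following is a minimal sketch of an alternative, assuming versioning is not enabled on the bucket: it uses `aws s3 rb` with `--force`, which deletes the objects first and then the bucket. `remove_bucket` and the `AWS_BIN` variable are hypothetical names introduced here for illustration; `AWS_BIN` is not an AWS CLI setting.

```shell
# Empty and delete a bucket in one step.
# AWS_BIN is a hypothetical override that lets you substitute another
# command for the AWS CLI, e.g. AWS_BIN=echo to preview the command.
remove_bucket() {
    if [ -z "${1:-}" ]; then
        echo "usage: remove_bucket <your-bucket-name>" >&2
        return 2
    fi
    "${AWS_BIN:-aws}" s3 rb "s3://$1" --force
}
```

Running `remove_bucket my-bucket` removes the bucket named my-bucket and everything in it, so double-check the name before running it.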

docker-compose -f docker-compose-LocalExecutor.yml up -d

Go to http://localhost:8080/admin/ and turn on the spark_submit_airflow DAG. You can check the status at http://localhost:8080/admin/airflow/graph?dag_id=spark_submit_airflow.

docker-compose -f docker-compose-LocalExecutor.yml down

aws s3api delete-bucket --bucket <your-bucket-name>

website: https://www.startdataengineering.com/

twitter: https://twitter.com/start_data_eng