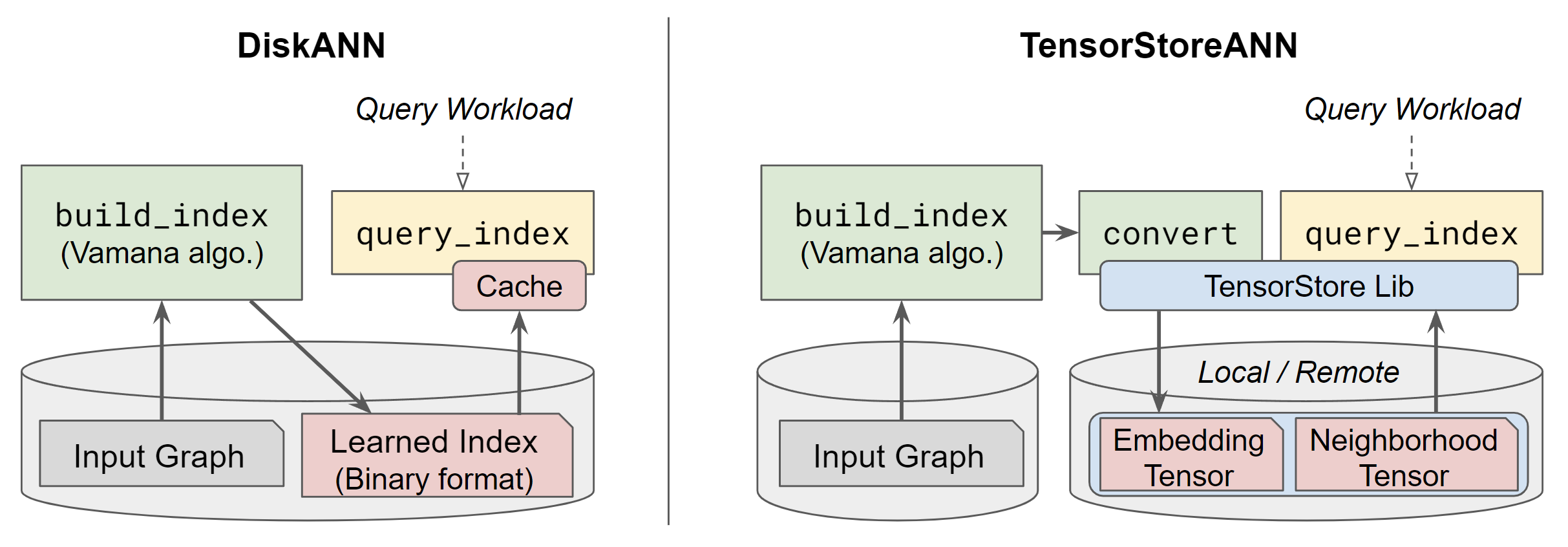

DiskANN with TensorStore Backend

UW-Madison CS744, Fall 2022

Benefits of using TensorStore as the index storage backend:

- Shareable index files across multiple array formats with a uniform API

- Asynchronous I/O for high-throughput access

- Automatic handling of data caching

- Controlled concurrent I/O with a remote storage backend
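TensorStore handles asynchronous, bounded-concurrency I/O internally. The pattern is easier to see in a generic form, so below is a minimal sketch using Python's `asyncio`. It is an illustration of the idea, not TensorStore's implementation. It assumes a caller-supplied async `read_one` function; `read_all`, `demo`, and the `chunk/N` keys are hypothetical names.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, List, Tuple


async def read_all(keys: Iterable[str],
                   read_one: Callable[[str], Awaitable[bytes]],
                   max_in_flight: int) -> List[bytes]:
    """Start every read concurrently but keep at most max_in_flight outstanding."""
    slots = asyncio.Semaphore(max_in_flight)

    async def guarded(key: str) -> bytes:
        async with slots:  # wait for a free slot before issuing the read
            return await read_one(key)

    # gather returns results in the same order as keys
    return list(await asyncio.gather(*(guarded(k) for k in keys)))


async def demo(n_reads: int = 20, max_in_flight: int = 4) -> Tuple[List[bytes], int]:
    """Drive read_all with a fake reader that records peak concurrency."""
    in_flight = 0
    peak = 0

    async def fake_read(key: str) -> bytes:
        nonlocal in_flight, peak
        in_flight += 1
        peak = max(peak, in_flight)
        await asyncio.sleep(0.01)  # stand-in for disk or network latency
        in_flight -= 1
        return key.encode()

    keys = [f"chunk/{i}" for i in range(n_reads)]
    return await read_all(keys, fake_read, max_in_flight), peak


if __name__ == "__main__":
    results, peak = asyncio.run(demo())
    print(len(results), "reads, peak concurrency", peak)
```

The semaphore caps how many requests hit the storage backend at once. This matters most for a remote server, where unbounded fan-out can overwhelm it. Meanwhile, the caller still overlaps the latency of many reads.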

Build

On a CloudLab Ubuntu 20.04 machine:

- Install necessary DiskANN dependencies (see original README below)

- Install the `gcc` suite, version >= 10.x, and set it as the default

- Install `cmake`, version >= 3.24

mkdir build && cd build

cmake -DCMAKE_BUILD_TYPE=Release ..

make -j$(nproc)

Note that an Internet connection is required for the build. The CMake configuration uses CMake's FetchContent module to download TensorStore (from our forked GitHub repository) and its dependencies over the network.
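You can check the gcc and cmake version requirements above before running `cmake`. Below is a small helper sketch; it is not part of the repository. It assumes `gcc` and `cmake` are on `PATH`, and that `gcc -dumpversion` and `cmake --version` print a dotted version number.

```python
import re
import shutil
import subprocess
from typing import Optional, Sequence, Tuple


def parse_version(text: str) -> Optional[Tuple[int, ...]]:
    """Return the first dotted number in text, e.g. 'cmake version 3.24.2' -> (3, 24, 2)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(text: str, minimum: Tuple[int, ...]) -> bool:
    """True if the version found in text is >= minimum (missing parts count as 0)."""
    version = parse_version(text)
    if version is None:
        return False
    width = max(len(version), len(minimum))
    padded = version + (0,) * (width - len(version))
    required = minimum + (0,) * (width - len(minimum))
    return padded >= required


def check_tool(command: Sequence[str], minimum: Tuple[int, ...]) -> bool:
    """Run a version command and compare its output against minimum."""
    if shutil.which(command[0]) is None:
        return False  # tool not installed or not on PATH
    result = subprocess.run(list(command), capture_output=True, text=True, check=False)
    return meets_minimum(result.stdout, minimum)


if __name__ == "__main__":
    print("gcc >= 10:", check_tool(["gcc", "-dumpversion"], (10,)))
    print("cmake >= 3.24:", check_tool(["cmake", "--version"], (3, 24)))
```

Before comparing, the helper pads both versions with zeros. As a result, a bare major version such as `10` is treated as `10.0`.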

Run

For help messages:

./scripts/run.py [subcommand] -h

Parse the SIFT dataset's fvecs files into fbin format:

./scripts/run.py to_fbin --sift_base /mnt/ssd/data/sift-small/siftsmall --dataset /mnt/ssd/data/sift-tiny/sifttiny [--max_npts 1000]

Build the on-disk index from the learning input (this may take a long time):

./scripts/run.py build --dataset /mnt/ssd/data/sift-tiny/sifttiny

Convert the on-disk index into zarr-format tensors:

./scripts/run.py convert --dataset /mnt/ssd/data/sift-tiny/sifttiny

Run queries with different parameters:

./scripts/run.py query --dataset /mnt/ssd/data/sift-tiny/sifttiny [--k_depth 10] [--npts_to_cache 100] [--use_ts] [--ts_async] [-L 10 50 100]

Automated wrapper for run.py:

./scripts/run_tests.sh /mnt/ssd/data/gist/gist /mnt/ssd/result/gist

# the first argument is a path prefix of `*_learn.fbin`

# the second argument is the log directory

Plot bar graphs from the data generated by the run.py wrapper:

./scripts/plot.py /mnt/ssd/result/gist /mnt/ssd/result/gist/plots

To run TensorStore against a remote HTTP server, create another node (assumed here to have IP address 10.10.1.2) and launch an HTTP server on it:

# run from the parent directory of gist/

python3 -m http.server # listens on port 8000 by default

Then, on the original node, run the script with the remote address specified:

./scripts/run.py query --dataset /mnt/ssd/data/gist/gist --k_depth 10 --list_sizes 10 --use_ts --use_remote http://10.10.1.2:8000/gist/gist

This loads the queries from local storage and reads the index through TensorStore from the server at http://10.10.1.2:8000.
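The `to_fbin` step in the walkthrough above converts SIFT fvecs files into fbin files. The sketch below shows what such a conversion involves. It assumes the common layouts:

- Each fvecs record is a little-endian int32 dimension followed by that many float32 values.
- An fbin file starts with an int32 point count and an int32 dimension, followed by all float32 values row by row.

This is not the code behind `run.py to_fbin`, and `fvecs_to_fbin` is a hypothetical name.

```python
import struct
import sys
from typing import BinaryIO, List, Optional


def fvecs_to_fbin(src: BinaryIO, dst: BinaryIO, max_npts: Optional[int] = None) -> int:
    """Copy up to max_npts .fvecs records into one .fbin stream; return the count."""
    payloads: List[bytes] = []
    dim: Optional[int] = None
    while max_npts is None or len(payloads) < max_npts:
        header = src.read(4)
        if not header:
            break  # clean end of file
        if len(header) != 4:
            raise ValueError("truncated record header")
        (d,) = struct.unpack("<i", header)  # per-record dimension
        if dim is None:
            dim = d
        elif d != dim:
            raise ValueError(f"inconsistent dimension {d}, expected {dim}")
        payload = src.read(4 * d)  # d little-endian float32 values
        if len(payload) != 4 * d:
            raise ValueError("truncated vector record")
        payloads.append(payload)
    # assumed .fbin header: point count, then dimension, both int32
    dst.write(struct.pack("<ii", len(payloads), dim if dim is not None else 0))
    for payload in payloads:
        dst.write(payload)  # float32 bytes are copied through unchanged
    return len(payloads)


if __name__ == "__main__":
    # usage: python fvecs_to_fbin.py input.fvecs output.fbin [max_npts]
    limit = int(sys.argv[3]) if len(sys.argv) > 3 else None
    with open(sys.argv[1], "rb") as src, open(sys.argv[2], "wb") as dst:
        print(fvecs_to_fbin(src, dst, limit), "points written")
```

The point count must be written before the data, so the sketch buffers all selected vectors in memory. That is fine for small subsets such as the `--max_npts 1000` example. A full-size dataset would call for a streaming variant that seeks back to patch the header.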

TODO List

- Converter from disk index to zarr tensors

- TensorStore reader integration in the search path

- Allow turning on/off async I/O patterns for comparison

- Allow turning the TensorStore cache pool on/off for comparison (currently shows no effect; needs further study)

- Using a remote storage backend
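The cache pool and remote backend items above correspond to fields of a TensorStore JSON spec. The sketch below builds such a spec with only the standard library. It assumes each zarr array lives either on local disk or behind the HTTP server from the Run section. The helper name `zarr_spec` and the `example.zarr/` path are hypothetical. The repository's C++ code may build its specs differently.

```python
import json
from typing import Any, Dict, Optional


def zarr_spec(path: str,
              remote_base_url: Optional[str] = None,
              cache_bytes: Optional[int] = None) -> Dict[str, Any]:
    """Build a TensorStore spec for one zarr array (hypothetical helper)."""
    if remote_base_url is None:
        kvstore = {"driver": "file", "path": path}  # local files
    else:
        # read-only access through a plain HTTP server
        kvstore = {"driver": "http", "base_url": remote_base_url, "path": path}
    spec: Dict[str, Any] = {"driver": "zarr", "kvstore": kvstore}
    if cache_bytes is not None:
        # explicit in-memory cache size; when omitted, TensorStore's default cache pool applies
        spec["context"] = {"cache_pool": {"total_bytes_limit": cache_bytes}}
    return spec


if __name__ == "__main__":
    # "example.zarr/" is a placeholder, not a file produced by this repository
    spec = zarr_spec("example.zarr/", remote_base_url="http://10.10.1.2:8000/gist/",
                     cache_bytes=100_000_000)
    print(json.dumps(spec, indent=2))
    # With the tensorstore package installed, the spec could be opened with:
    #   import tensorstore as ts
    #   store = ts.open(spec, read=True).result()
```

Switching between local and remote storage, or turning the cache pool on and off, then amounts to changing the spec. The reading code stays the same, which is the uniform-API benefit listed at the top.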

DiskANN - Original README

The goal of the project is to build scalable, performant, streaming and cost-effective approximate nearest neighbor search algorithms for trillion-scale vector search. This release has the code from the DiskANN paper published in NeurIPS 2019, the streaming DiskANN paper and improvements. This code reuses and builds upon some of the code for the NSG algorithm.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

See guidelines for contributing to this project.

Linux build:

Install the following packages through apt-get

sudo apt install make cmake g++ libaio-dev libgoogle-perftools-dev clang-format libboost-all-dev

Install Intel MKL

Ubuntu 20.04

sudo apt install libmkl-full-dev

Earlier versions of Ubuntu

Install Intel MKL either by downloading the oneAPI MKL installer or using apt (we tested with build 2019.4-070 and 2022.1.2.146).

# OneAPI MKL Installer

wget https://registrationcenter-download.intel.com/akdlm/irc_nas/18487/l_BaseKit_p_2022.1.2.146.sh

sudo sh l_BaseKit_p_2022.1.2.146.sh -a --components intel.oneapi.lin.mkl.devel --action install --eula accept -s

Build

mkdir build && cd build && cmake -DCMAKE_BUILD_TYPE=Release .. && make -j

Windows build:

The Windows version has been tested with Enterprise editions of Visual Studio 2022, 2019 and 2017. It should work with the Community and Professional editions as well without any changes.

Prerequisites:

- CMake 3.15+ (available in VisualStudio 2019+ or from https://cmake.org)

- NuGet.exe (install from https://www.nuget.org/downloads)

- The build script will use NuGet to get MKL, OpenMP and Boost packages.

- DiskANN git repository checked out together with submodules. To check out submodules after git clone:

git submodule init

git submodule update

- Environment variables:

- [optional] If you would like to override the Boost library listed in windows/packages.config.in, set BOOST_ROOT to your Boost folder.

Build steps:

- Open the "x64 Native Tools Command Prompt for VS 2019" (or corresponding version) and change to DiskANN folder

- Create a "build" directory inside it

- Change to the "build" directory and run

cmake ..

OR for Visual Studio 2017 and earlier:

<full-path-to-installed-cmake>\cmake ..

- This will create a diskann.sln solution. Open it from VisualStudio and build either Release or Debug configuration.

- Alternatively, use MSBuild:

msbuild.exe diskann.sln /m /nologo /t:Build /p:Configuration="Release" /property:Platform="x64"

- This will also build the gperftools submodule for the libtcmalloc_minimal dependency.

- Generated binaries are stored in the x64/Release or x64/Debug directories.

Usage:

Please see the following pages on using the compiled code:

- Command-line interface for building and searching SSD-based indices

- Command-line interface for building and searching in-memory indices

- Command-line examples for using in-memory streaming indices

- To be added: Python interfaces and docker files