This repository is the official implementation of Using Soft Actor-Critic for Low-Level UAV Control. This work will be presented in the IROS 2020 Workshop - "Perception, Learning, and Control for Autonomous Agile Vehicles".

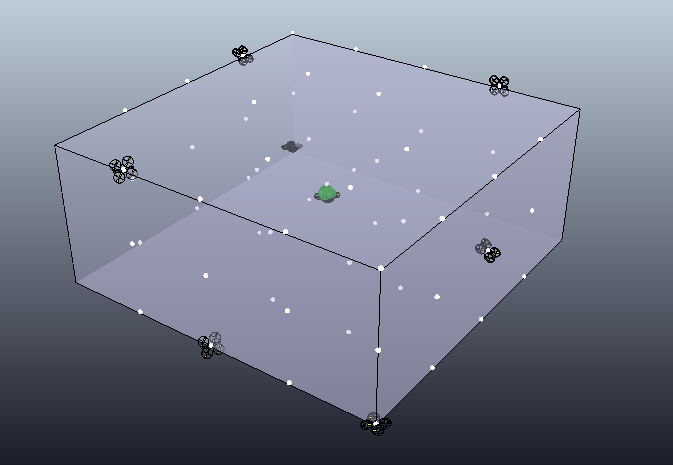

We train a policy using Soft Actor-Critic to control a UAV. The agent is dropped in mid-air at a sampled distance and inclination from the target (the green sphere at position [0,0,0]) and has to get as close to the target as possible. In our experiments the target always has position [0,0,0] and angular velocity [0,0,0].
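As a rough illustration of what "a sampled distance and inclination from the target" could look like, here is a minimal sketch that converts a sampled distance and inclination into a Cartesian start position around the origin. The function name, the uniform distributions, the `max_distance` default, and the extra azimuth angle are all assumptions made for this example, not the sampling scheme used in the repository.

```python
import math
import random


def sample_initial_position(max_distance=5.0, rng=random):
    """Sample a start position around a target at the origin.

    Hypothetical helper: ranges and distributions are illustrative only.
    """
    distance = rng.uniform(0.0, max_distance)    # distance to the target
    inclination = rng.uniform(0.0, math.pi)      # polar angle measured from +z
    azimuth = rng.uniform(0.0, 2.0 * math.pi)    # assumed: rotation about z

    # Standard spherical-to-Cartesian conversion.
    x = distance * math.sin(inclination) * math.cos(azimuth)
    y = distance * math.sin(inclination) * math.sin(azimuth)
    z = distance * math.cos(inclination)
    return (x, y, z)


if __name__ == "__main__":
    print(sample_initial_position(rng=random.Random(0)))
```

Passing a seeded `random.Random` instance makes the sampled start positions reproducible between runs.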

Watch the video

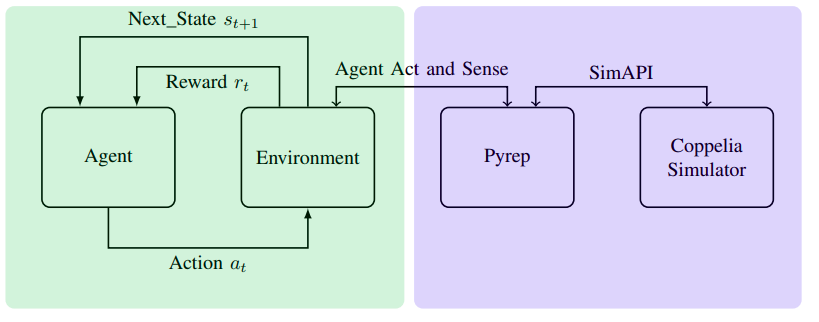

Framework: it is a traditional RL environment that accesses the Pyrep plugin, which in turn accesses the Coppelia Simulator API. This is a lot faster than using the Remote API of Coppelia Simulator, and it also gives you a simpler API for manipulating and creating objects inside your running simulation.

Initial positions for the UAV agent

One of the safest ways to reproduce our environment is to use a Docker container. This approach is better suited to training on a cluster and gives you a stable environment, although forwarding the display server with Docker is always tricky (we leave this one to the reader).

Change the container's variables, then use the Makefile targets listed here to work with our Docker container. The commands are self-explanatory.

create-image

make create-image

create-container

make create-container

training

make training

evaluate-container

make evaluate-container

- Install Coppelia Simulator

- Install Pyrep

- Install Drone_RL

- To install requirements:

pip install -r requirements.txt

- To install this repo:

python setup.py install

To train the model(s) in the paper, run this command:

./training.sh

It is somewhat tricky to reproduce an exact policy, because variability is inherent both to off-policy methods and to the reward shaping used to reach optimal control policies in robotics.

One hack that alleviates this problem is to save a moving window of, say, 5-10 policies and pick the best one (qualitatively) once the reward has stabilized. More research is needed to remove the need for qualitative assessment of the trained policies.
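The moving-window idea can be sketched as follows. This is a minimal illustration rather than the code used in this repository: the `PolicyWindow` class, its method names, and the plain-dict stand-in for policy weights are all hypothetical.

```python
import copy
from collections import deque


class PolicyWindow:
    """Keep only the most recent `size` policy snapshots (hypothetical helper)."""

    def __init__(self, size=5):
        # A deque with maxlen drops the oldest snapshot automatically.
        self._window = deque(maxlen=size)

    def add(self, step, mean_reward, policy_state):
        # Deep-copy so later training updates do not mutate the stored snapshot.
        self._window.append((step, mean_reward, copy.deepcopy(policy_state)))

    def candidates(self):
        # Snapshots to inspect by hand, oldest first.
        return list(self._window)


if __name__ == "__main__":
    window = PolicyWindow(size=3)
    for step in range(5):
        # A plain dict stands in for real policy weights here.
        window.add(step, mean_reward=float(step), policy_state={"w": step})
    for step, reward, _ in window.candidates():
        print(step, reward)
```

With a PyTorch policy, `policy_state` could for instance be the result of `policy.state_dict()`. The window only narrows down the candidates; choosing among them remains the qualitative step described above.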

To evaluate the model with the optimal policy, run:

./evaluate.sh

You can check the saved trained policies in:

Run the notebooks in notebooks/ to reproduce the images presented in the paper.

The code is heavily based on RL-Adventure-2.

The environment is a continuation of the work in:

G. Lopes, M. Ferreira, A. Simões, and E. Colombini, “Intelligent Control of a Quadrotor with Proximal Policy Optimization,” Latin American Robotic Symposium, pp. 503–508, 11 2018

Barros, Gabriel M.; Colombini, Esther L, "Using Soft Actor-Critic for Low-Level UAV Control", IROS - workshop Perception, Learning, and Control for Autonomous Agile Vehicles, 2020.

@misc{barros2020using,
  title={Using Soft Actor-Critic for Low-Level UAV Control},
  author={Gabriel Moraes Barros and Esther Luna Colombini},
  year={2020},
  eprint={2010.02293},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  journal={IROS - workshop "Perception, Learning, and Control for Autonomous Agile Vehicles"},
}