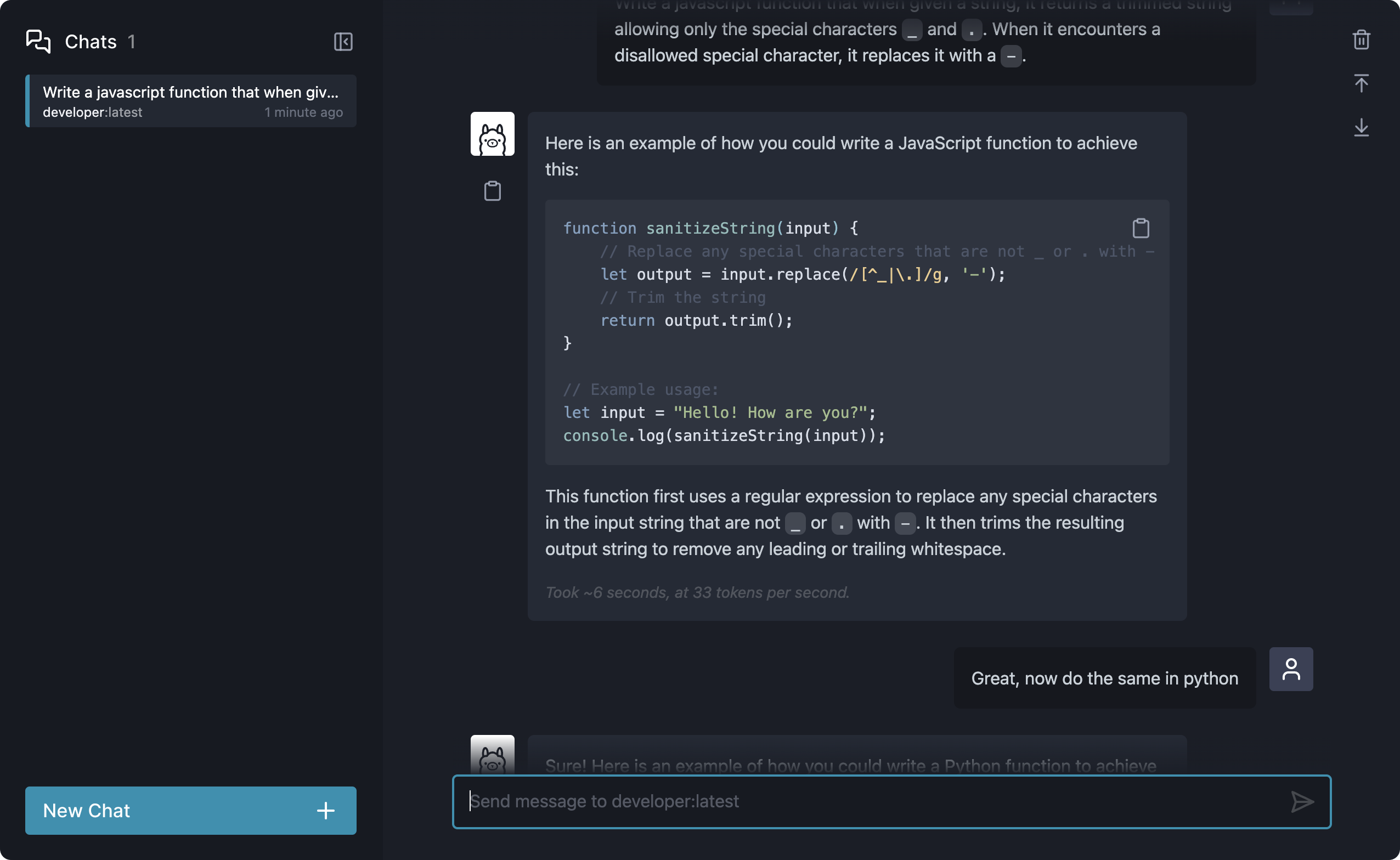

Chat with your Ollama models, locally

Chat-Ollama is a local chat app for your Ollama models that runs in a web browser. With support for multiple dialogs and message formatting, you can communicate with your Ollama models without using the CLI.

To use Chat-Ollama without building from source:

# First, clone and move into the repo.

$ git clone https://github.com/jonathandale/chat-ollama

$ cd chat-ollama

# Then, serve bundled app from /dist

$ yarn serve

# Visit http://localhost:1420

NB: If you don't want to install serve, consider an alternative.
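One possible alternative is Python's built-in HTTP server. This is a minimal sketch, assuming Python 3.7+ is installed and that the contents of dist form a self-contained static bundle (an assumption, not something this README states). The `serve_dist` helper is hypothetical and not part of the repo.

```shell
# Hypothetical helper: serve ./dist with Python's built-in HTTP server.
# Assumes python3 (3.7+, for --directory) is on PATH and that dist/ is static.
serve_dist() {
  port="${1:-1420}"   # default to the port used elsewhere in this README
  if [ ! -d dist ]; then
    echo "dist/ not found; run this from the repository root" >&2
    return 1
  fi
  python3 -m http.server "$port" --directory dist
}
```

Run serve_dist from the repository root, then visit http://localhost:1420. Pass a different port as the first argument if 1420 is already in use.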

Chat-Ollama is built with ClojureScript, using Shadow CLJS for building and Helix for rendering views. Global state management is handled by Refx (a re-frame for Helix). Tailwind is used for CSS.

To build from source, you need:

- node.js (v6.0.0+, most recent version preferred)

- npm (comes bundled with node.js) or yarn

- Java SDK (Version 11+, Latest LTS Version recommended)
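As a quick sanity check before building, a small shell sketch like the following reports which of these tools are on your PATH. The `check_tool` helper is hypothetical, not part of the repo, and it checks only for presence, not for the minimum versions listed above.

```shell
# Hypothetical helper: report whether a command is available on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found"
  else
    echo "$1: missing"
  fi
}

# Check the tools this README lists (yarn could be swapped for npm).
for tool in node yarn java; do
  check_tool "$tool"
done
```

Anything reported as missing needs to be installed before running the commands that follow.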

With yarn:

$ yarn install

Running in development watches source files (including CSS changes) and uses fast refresh, resulting in near-instant feedback.

$ yarn dev

# Visit http://localhost:1420

Running build compiles JavaScript and CSS to the dist folder.

$ yarn build

Serve the built app.

# Serve bundled app from /dist

$ yarn serve

# Visit http://localhost:1420

Distributed under the MIT License.

Copyright © 2023 Jonathan Dale