Semantic Segmentation with Pytorch-Lightning

Note: this example is now part of the Pytorch-Lightning repository and is maintained directly there.

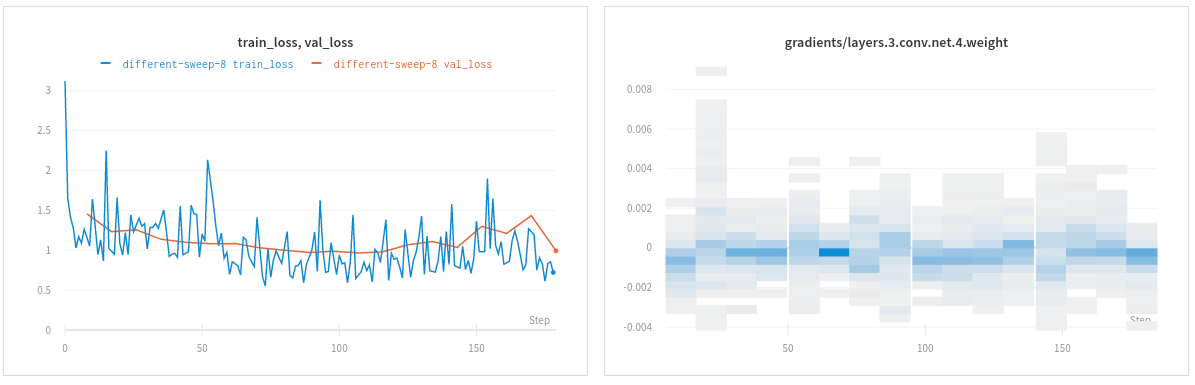

This is a simple demo of semantic segmentation on the Kitti dataset using Pytorch-Lightning, in which the neural network is optimized by monitoring and comparing runs with Weights & Biases.

Pytorch-Lightning includes a logger for W&B that can be set up simply with:

```python
from pytorch_lightning import Trainer
from pytorch_lightning.loggers import WandbLogger

# Every metric logged during training is sent to Weights & Biases
wandb_logger = WandbLogger()
trainer = Trainer(logger=wandb_logger)
```

Refer to the documentation for more details.

Hyper-parameters can be defined manually, and every run is automatically logged to Weights & Biases, which makes it easier to analyze and interpret the results and to decide how to optimize the architecture.
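One common way to define hyper-parameters manually is through command-line flags. The sketch below uses Python's standard `argparse` module; the flag names (`--lr`, `--batch_size`, `--num_layers`, `--features_start`, `--bilinear`) and their defaults are hypothetical and may not match the ones `train.py` actually exposes.

```python
import argparse


def build_parser():
    """Build a parser for a few illustrative hyper-parameters.

    All flag names and defaults here are hypothetical examples,
    not necessarily the ones used by train.py.
    """
    parser = argparse.ArgumentParser(description="Semantic segmentation on Kitti")
    parser.add_argument("--lr", type=float, default=1e-3, help="learning rate")
    parser.add_argument("--batch_size", type=int, default=4, help="images per batch")
    parser.add_argument("--num_layers", type=int, default=5, help="number of layers in the network")
    parser.add_argument("--features_start", type=int, default=64, help="channels in the first layer")
    parser.add_argument("--bilinear", action="store_true", help="use bilinear upsampling (hypothetical switch)")
    return parser
```

With such a parser, `hparams = build_parser().parse_args()` would pick up values passed as `python train.py --lr 3e-4 --batch_size 8`, and the resulting namespace could be recorded with the logger, for example via `wandb_logger.log_hyperparams(vars(hparams))`, so each run's settings appear next to its metrics in W&B.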

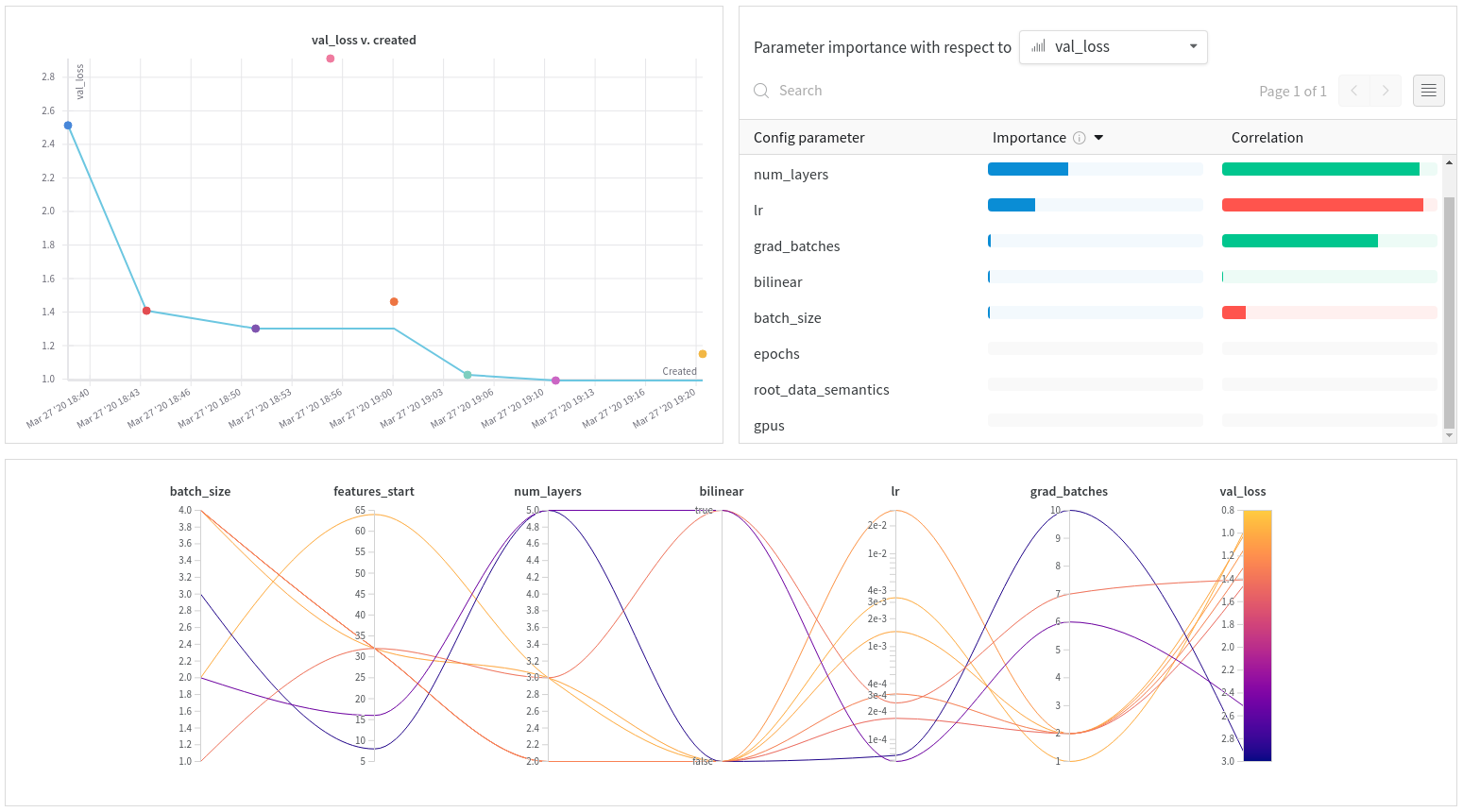

You can also run sweeps to automatically optimize hyper-parameters.
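Conceptually, a random-search sweep repeatedly samples a configuration from a search space, launches a run with it, and keeps track of the best result. The toy loop below illustrates only that idea in plain Python; it is not how W&B implements sweeps (there, a sweep controller proposes configurations and `wandb agent` executes the runs). The search space and the placeholder objective `fake_train` are hypothetical stand-ins for real training runs.

```python
import random

# Hypothetical search space; names and values are illustrative only.
SEARCH_SPACE = {
    "lr": [0.0001, 0.0003, 0.001],
    "batch_size": [2, 4, 8],
    "num_layers": [3, 4, 5],
}


def sample_config(space, rng):
    """Draw one value per hyper-parameter, uniformly at random."""
    return {name: rng.choice(values) for name, values in space.items()}


def random_search(train_fn, space, n_trials, seed=0):
    """Call train_fn on n_trials sampled configs and return the lowest-loss one."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(space, rng)
        loss = train_fn(config)  # in a real sweep this would be a full training run
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


if __name__ == "__main__":
    # Placeholder objective standing in for a run's validation loss.
    def fake_train(config):
        return abs(config["lr"] - 0.0003) + 0.01 * config["num_layers"]

    print(random_search(fake_train, SEARCH_SPACE, n_trials=10))
```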

Note: this example has been adapted from Pytorch-Lightning examples.

To train the model:

- Install dependencies through `requirements.txt`, `Pipfile` or manually (Pytorch, Pytorch-Lightning & Wandb)
- Log in or sign up for an account -> `wandb login`
- Run `python train.py`
- Visualize and compare your runs through the generated link

To run a sweep for hyper-parameter tuning:

- Run `wandb sweep sweep.yaml`
- Run `wandb agent <sweep_id>`, where `<sweep_id>` is given by the previous command
- Visualize and compare the sweep runs

After running the script a few times, you will be able to quickly compare a large number of hyperparameter combinations.
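The number of combinations grows multiplicatively with each hyper-parameter. The sketch below assumes a grid sweep and uses a Python dict shaped like a W&B sweep configuration (`method`, `metric`, `parameters` with `values` lists); the parameter names, values and the `val_loss` metric are hypothetical and may not match the repository's `sweep.yaml`. The helper `grid_runs` is likewise illustrative and simply enumerates every combination a grid search would cover.

```python
import itertools

# Hypothetical sweep configuration; only the overall shape mirrors a W&B
# sweep file, the parameter names and values are illustrative.
sweep_config = {
    "method": "grid",
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        "lr": {"values": [0.0001, 0.0003, 0.001]},
        "batch_size": {"values": [2, 4, 8]},
        "num_layers": {"values": [3, 4, 5]},
        "bilinear": {"values": [True, False]},
    },
}


def grid_runs(config):
    """Yield one dict per combination of the listed parameter values."""
    params = config["parameters"]
    names = list(params)
    for combo in itertools.product(*(params[name]["values"] for name in names)):
        yield dict(zip(names, combo))


runs = list(grid_runs(sweep_config))
print(len(runs))  # 3 * 3 * 3 * 2 = 54
```

Four small parameters already yield 54 runs, which is why the random and Bayesian search methods that W&B sweeps also support can be more practical when the grid gets large.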

Feel free to modify the script and define your own hyperparameters.